High Frame Rate Egomotion Estimation

advertisement

High Frame Rate Egomotion Estimation

Natesh Srinivasan, Richard Roberts, and Frank Dellaert

Georgia Institute of Technology

Abstract. In this paper, we present an algorithm for doing high frame

rate egomotion estimation. We achieve this by using a basis flow model,

along with a novel inference algorithm, that uses spatio-temporal gradients, foregoing the computation of the slow and noisy optical flow.

The inherent linearity in our model allows us to achieve fine grained

parallelism. We demonstrate this by running our algorithm on GPUs to

achieve egomotion estimation at 120Hz.

Image motion is tightly coupled with the camera egomotion and depth

of the scene. Hence, we validate our approach by using the egomotion

estimate to compute the depth of a static scene. Our applications are

aimed towards autonomous navigation scenarios where, it is required

to have a quick estimate of the state of the vehicle, while freeing up

computation time for higher level vision tasks.

#$%

$

!

"

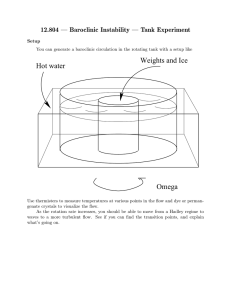

Fig. 1. (a) Input Stream from a high frame rate camera (b) Spatio-temporal gradients

as an RGB image, r- Dx , g-Dy , b-Dt . (c) Learned Dense basis flows corresponding

to pitch, yaw and forward motion (e) Platform showing typical motion during autonomous navigation (f) Estimated translational and rotational velocity (g) Recovered

translational flow (h) Depth map of the scene.

M. Chen, B. Leibe, and B. Neumann (Eds.): ICVS 2013, LNCS 7963, pp. 183–192, 2013.

c Springer-Verlag Berlin Heidelberg 2013

184

1

N. Srinivasan, R. Roberts, and F. Dellaert

Introduction

As high frame rate cameras are becoming commodity hardware, they may play

a very important role in the future of vision algorithms. This is because of the

several obvious benefits [6] that it offers over its lower frame rate counterparts

such as reduced motion blur, smaller inter-frame baseline, etc. However, these

cameras also pose different challenges that necessitate developing new models

while taking a close look at the underlying imaging process. This paper develops

one such model and demonstrates its application to robot egomotion estimation.

In a high frame rate setting, a vision-only solution to egomotion estimation is

advantageous as compared to the traditional way of doing egomotion estimation

by fusing IMUs (Inertial Measurement Units) and cameras. This is because, from

a system standpoint, it is simpler to deal with a single sensor due to fewer integration and synchronization issues. Approaches that fuse multiple sensors [3] works

well in a low frame rate setting, however this might be superfluous when using

high frame rate cameras. State estimates from high speed cameras are more accurate because they have more measurements for the same trajectory, reducing

the dependency on IMUs for high frequency state estimates.

The primary objective of this paper is to develop and evaluate a fast egomotion estimation method which run upwards of 120Hz. This is possible because

of the small inter-frame displacements and brightness variations across images.

As a result, continuous time models, such as the well known constant brightness

model [8] are a near exact representation of the underlying imaging process.

These models enable us to develop closed form analytic expression for the unknown egomotion that can be computed in a single step. In contrast, models

that rely on image warping or feature tracking require multiple iterations of

non-linear estimation to converge to the correct solution.

The first contribution of this paper is to exploit the idea of Basis Flows in a

continuous time model to compute egomotion directly from image gradients. We

take a two-tiered approach, where a slower learning algorithm learns a mapping

from egomotion to optic flow by using a dense version of the Basis Flow model

developed by Roberts et al. [14]. However, rather than using sparse flow as in

that paper, we develop a fully dense approach using spatio-temporal gradients.

A significant advantage of using learned basis flows is that they are independent of camera calibration. In fact, such an approach is not restricted to

using a projective camera model at all. The trade-off is that, while rotational

basis flows are scene independent, translational basis flows depend on the typical

scene structure [14].

The second contribution of this paper is to rapidly estimate the motion and

the depth map of a rigid scene, at better-than-framerate operation, using a

consumer-grade graphics processing unit (GPU). This is possible because the

bulk of the arithmetic to solve our linear model amounts to per-pixel operations.

In the depth estimation stage, we reuse the basis flow model to first obtain the

optical flow corresponding to translational motion. We then exploit the linearity that exists between the translational flow and inverse-depth to estimate the

High Frame Rate Egomotion Estimation

185

depth map in a single step. We further use a Kalman filter model to incrementally

refine our estimate of depth map over time.

This work was developed to serve as a harness to explore the idea of mental

rotations in robot navigation. The depth maps obtained from this paper serve as

a quasi-instantaneous snapshot of the environment that enable the robot to reach

a goal location (an x, y, z co-ordinate location) by iteratively aligning itself with

an abstract representation of the goal state. A similar approach to navigation is

believed to exist among primates [2]. However, the details of this work is beyond

the scope of this paper and we will address this in a subsequent paper.

2

Related Work

A brief survey on egomotion estimation methods starts with the works of Gibson. This was followed by a series of methods developed during the 80s and

90s. They are mostly classified based on the constraints and the underlying

optimization techniques used to solve them. The constrains can either be differential epipolar [11], which map the 3D velocity of a point in the real world

onto a 2D velocity vector in the image plane, or they can be discrete epipolar [10], which map a 3D rigid egomotion between views onto the corresponding

2D image points in each view. A variety of optimization techniques have been

developed using these two fundamental constraints. These techniques attempt

to solve for the egomotion quickly and robustly, a challenging task due to the

non-linear relationship between depth, camera motion, and optical flow. Heeger

et al. [7] solve this by sampling possible translations from a unit sphere and then

deriving an equation that is linear in the inverse depth and the rotational velocity. Bruss and Horn [4] directly use the bi-linear constraint that exists between

the depth and the translational velocity. Other approaches include the use of

motion parallax [13] and the epipolar geometry constraints [10,11].

However, in most of these methods, estimation of either optical flow or feature

correspondences is an intermediate step. This can be noisy and slow because

of wrong feature matches and high-dimensional search spaces. This led to the

advent of a class of methods called the direct methods, [9]. In these methods,

the optical flow is treated as a hidden variable and camera motion is directly

estimated using only image intensity gradients. The estimation is thus reduced

to at most 6 parameters with each pixel providing a single constraint, resulting

in a highly-overdetermined system. A more detailed analysis on the current state

of the art on egomotion estimation can be found in the works of Florian et al. [5].

3

Approach

In this section, we will briefly revisit the basis flow model described in [14]

(Sec 3.1), then derive a closed form expression for estimating egomotion (Sec 3.2).

We will then show how we can use this egomotion to compute scene depth

(Sec 3.3).

186

3.1

N. Srinivasan, R. Roberts, and F. Dellaert

Basis Flows

Basis flows are a powerful model that represent a linear relationship between

optical flow and camera motion. They are a learned mapping that is derived by

exploiting the following bi-linear relationship that exists between camera motion,

T

(v, ω), inverse depth, ρi and the optic flow, ui = uxi uyi

at the ith pixel

ui = ρi Ai v + Bi ω

(1)

T

is the translational component of egomotion and ω =

where v = vx vy vz

T

ωx ωy ωz

is the rotational component. Ai ∈ R2×3 and, Bi ∈ R2×3 are matrices that depend on the camera parameters and pixel co-ordinates. This idea

was first observed by Longuet et al. [11,10] who called it a differential epipolar

constraint to distinguish from the discrete epipolar case.

Roberts et al. [14] developed a calibration-free, probabilistic version of this

idea by making certain simplifying assumptions about depth, namely approximate constancy per-pixel over time. This simplifies Eq 1 to

v

(2)

ui = Wi

ω

where the columns of Wi are the learned basis flows. This method also works for

non-projective camera models as discussed in [14].

Basis flows have several interesting and intuitive properties and afford a finegrained parallelism in the computation of egomotion. During the inference phase,

Roberts et al. use a sparse optical flow as measurements from which to compute

egomotion. In this paper we take an alternate approach, which leverages high

frame-rate, using image gradients.

3.2

Gradient-Based Inference

In this section we develop a probabilistic model for estimating egomotion directly

from image gradients. This is the key contribution of this paper which enables

us to leverage the computational power of GPUs to estimate egomotion.

In a probabilistic sense, the computation of egomotion is the maximization of

the posterior probability of egomotion, yt = (vt , ωt ) given the image gradients,

Zt = {Dtx , Dtt }1 and the basis flow, W at time, t

yˆt = arg max p (yt |Zt , W ) .

yt

(3)

Rewriting this as a product of the measurement likelihood and prior and using

the independence between the basis flows, W , and the egomotion, yt , we get

(4)

yˆt = arg max L Dtt |Dtx , yt , W p (yt ) .

yt

1

Dx ∈ R1×2 and Dt ∈ R represent the spatial and temporal gradients.

High Frame Rate Egomotion Estimation

187

(a)

(b)

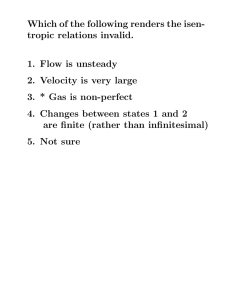

Fig. 2. (a) Bayes-Net Representation of our generative model for pixel i , showing the

relation between the basis flow, W , image gradients, Zi , the camera egomotion, yt and

the optical flow, ui (b) Memory distribution of the GPU implementation of our model

We use L (·) to represent the likelihood (unnormalized probability) to distinguish it from a probability, p (·). Eq. 4 states that the temporal gradient, Dtt can

be independently computed given the spatial gradient, Dtx , camera egomotion,

yt and the basis flows, W .

The basis flow model given in Eq. 2 provides us with a way to compute

the flow, ut , given the egomotion, yt However, the model does not provide an

explicit relationship between the image gradients, Zt and egomotion, yt . In order

to evaluate Eq. 4, we exploit the brightness constancy assumption [8] stated as

t

x

Dti

+ Dti

uti = 0

(5)

where the subscript i corresponds to the ith pixel. This provides us with a addit

x

tional constraint between the flow, uti and the image gradients Zti = {Dti

, Dti

}.

An important observation at this point is that the temporal gradient at each

t

is independent of every other pixel, iff we are given the spatial gradient,

pixel, Dti

x

Dti

at that pixel2 . This allows us to re-write Eq. 4 as a per-pixel product given

by

t

x

(6)

yˆt = arg max p (yt )

L Dti

|Dti

, yt , Wi

yt

i

where the subscript i refers to the ith pixel.

The Bayes’ net representation of our model is shown in Fig. 2a. It tells us

that the hidden flow term uti , provides a link between the egomotion, yt and

the image gradients, Zit . This suggests that we can factorize the measurement

likelihood (Eq. 4) as

t x

t x

|Dti , yt , Wi = L Dti

|Dti , uti L (uti |yti , Wi )

(7)

L Dti

2

This is however not completely true, since the spatio-temporal Sobel kernel used in

the computation of gradients induces some correlation between adjacent pixels

188

N. Srinivasan, R. Roberts, and F. Dellaert

Since our goal is to get rid of the intermediate flow term, we compute the

marginal corresponding to the joint distribution given in Eq. 7 by integrating

out the hidden flow term uti

ˆ t

x

arg max p (yt )

|Dti

, uti L (uti |yti , Wi ) duti

L Dti

yt

i u

ti

which provides us with the following expression for the marginal distribution:

ˆ

t x

t x

L Dti |Dti , uti L (uti |yti , Wi ) duti = L Dti

(8)

|Dti , yt , Wti

uti

Taking the negative log-likelihood of Eq. 8 and maximizing with respect to yt =

(v, ω) gives us the following maximum likelihood estimate:

−1 T

x t

T

2

x T

x

Dti Wi

(9)

yt =

1/Jti Wi (Dti ) Dti Wi

1/Jit2 Dti

i

T

i

x

x

where Ji = (Dti

) Σui Dti

+ σI where Σui is the covariance matrix of the flow

at pixel i.

x

t

, Dti

can be preEvaluation of Jti is a per-pixel independent operation. Dti

computed in parallel. Each pixel provides an completely independent constraint

as detailed in the MAP estimate given in Eq. 9 .

3.3

Depth Maps

In this section we develop a Kalman filter model for computing the scene depth.

The depth map is used as a qualitative measure of the accuracy of the estimated

egomotion. Eq. 1 provides a bi-linear constraint between the egomotion, depth

and flow at each pixel. However the problem is greatly simplified if the optical

flow corresponding to any of the translational component of egomotion is known.

This is because the following linear model exists between the forward flow, ufti

and the inverse depth ρi , given the forward velocity, vt of the camera

ufxti

x

f

(10)

=

ρ v +η

uti =

y i t

ufyti

where η = N (0, Pm ) is the noise on flow. The subscript t refers to time, and

the superscript f indicates that it is the forward component of flow. (x, y) are

co-ordinates of pixel i.

In order to get the forward flow, uf , we subtract the rotational component of

flow, uω , from the total flow, u. The rotational component of flow is obtained

by scaling the basis flows corresponding to rotation, W ω ,3 by our estimate of

rotational velocity, ω.

v ω

3

Note that W = W W , implying that there is a basis for each component of

egomotion. Ex: For 6 DOF motion, we have 6 basis flows.

High Frame Rate Egomotion Estimation

189

Matthies et al. [12] talk about the various techniques to refine the depth map

estimate using a Kalman filter. Assuming that the camera calibration is known,

we get the set of equations shown in Fig. 3, borrowed from [12].

Fig. 3. Kalman Filter Equations for estimating inverse depth from the measured disparity d. The set of equations are borrowed from [12] .

4

Experimental Evaluation

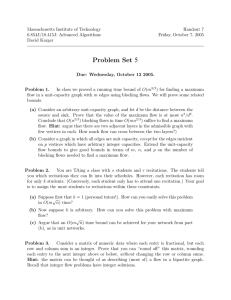

Fig. 5a shows the experimental setup. The test platform is a Pioneer3 P3-DX. We

use a Flea 3 - USB 3.0 high speed camera running at 512×512 resolution. The onboard computer has a Quad-Core CPU and a Nvidia Quadro K2000M GPU. The

platform has 3 degrees of freedom at any time t, namely forward velocity, vt , yaw,

ωy and pitch, ωx (due to rough terrain). We first do a quantitative evaluation of

the accuracy of the estimated egomotion and the we do a qualitative evaluation

by generating depth maps.

4.1

Accuracy of Egomotion Estimation

Fig. 4 shows the accuracy of the estimated egomotion on two test datasets - (1)

indoor scene and (2) outdoor scene. The learning stage is done offline and we

obtain the basis flows, W at a resolution of 32 × 32. They are then upscaled

to match the resolution of the image (512 × 512). The covariance term of the

flow, Σu was assumed to be 1 and the pixel intensity variance, σI was assumed

to be 0.1. The spatial gradients, Dx were computed with a 3 × 3 Sobel operator. The temporal gradients, Dt was computed by taking difference of adjacent

frames after blurring by a 3 × 3 Gaussian kernel. The accuracy of the estimated

egomotion was compared with the wheel odometry as shown in Fig. 4 (d) & (e).

N. Srinivasan, R. Roberts, and F. Dellaert

%& '

("

) +,"" !" #$"

) *

!" #$"

-" *

190

' #$"

%& '

) +,"" ' #$"

Fig. 4. (a) Learned translational basis flow [left] (color coding of flow can be found in

Fig. 5b) for indoor scene [right]. (c) Learned translational basis flow [left] for outdoor

scene [right]. (b) computed rotational basis flow, which is same for both sequence because it is independent of scene structure. (d) & (e) comparison of egomotion estimation

with wheel odometry.

The estimated motion is quite robust for rotational components but the translational estimates incur errors when robot is undergoing rotations. This is because, rotations drastically change the heading of the camera and as a result,

the scene structure. The learned translational basis do not deal well with this

kind of transformation. It is also to be noted that the method described here is

sensitive to passing objects, especially if they form a significant portion of the

camera’s field of view. However, we are currently working on a robust, outlier

rejection model using the method described in [14]. The core computation time

of the motion estimation for each frame was measured at 3.24 ms which is much

better than the required 8.33 ms for an input stream of 120 Hz.

4.2

Application: Dense Depth Maps

We demonstrate the application of our egomotion estimation by obtaining the

depth map of the scene. The pipeline for evaluating the depth map is shown in

Fig. 5b. The goal is to first compute the magnitudes of the egomotion components

and then estimate the depth from the translational component of flow. We use

the TV-L1 dense flow [1] (Fig. 5b (i)) to compute the total flow and then use the

method described in section 3.3 to subtract the rotational flow (Fig. 5b (e)). The

translational flow(Fig. 5b (h)), that is obtained, is used to get a rudimentary

depth map (Fig. 5b (f)) which is then refined using the Kalman filter equations

to get the final depth(Fig. 5b (j)).

High Frame Rate Egomotion Estimation

191

(a)

#

$

%

!

"

!

"

&

(b)

Fig. 5. (a) Experimental Setup. [Left]Diagrammatic representation of the top view,

platform is directly moving toward the object with a velocity vt and yaw, ωyt . It has

3 degrees of freedom at any instant of time. [Top Right] The side view showing the

pitching motion due to rough terrain. [Bottom Right] Picture of the setup.(b) Depth

Map Generation Pipeline [See section 4.2]. We first computes the egomotion , yt . Using

this estimate, we then get the flow corresponding to translation. We then use this to

get a rudimentary depth maps which is refined using the Kalman filter equation to get

the refined depth map.

192

5

N. Srinivasan, R. Roberts, and F. Dellaert

Conclusions and Acknowledgments

We have presented a novel, fully probabilistic model for doing egomotion estimation directly from image gradients. We have further demonstrated its applicability in the estimation of depth of a static, rigid scene. From a system level

perspective we have shown that we can exploit the GPUs to achieve better-than

frame rate performance. While the primary objective of the paper is to estimate

motion, the end result was to obtain quick estimates of the depth of the scene.

This research is supported by the Office of Naval Research under grant

#00014 − 11 − 1 − 0593. The authors would like to thank Prof. Ronald Arkin for

his significant contributions to this project. We would also like to acknowledge

the other student team members for their roles: Ivan Walker and Ryan Kerwin.

References

1. Wedel, A., Pock, T., Zach, C., Bischof, H., Cremers, D.: An Improved Algorithm for

TV-L1 Optical Flow. In: Cremers, D., Rosenhahn, B., Yuille, A.L., Schmidt, F.R.

(eds.) Statistical and Geometrical Approaches to Visual Motion Analysis. LNCS,

vol. 5604, pp. 23–45. Springer, Heidelberg (2009)

2. Arkin, R.C.: The role of mental rotations in primate-inspired robot navigation.

Cognitive Processing 13, 83–87 (2012)

3. Beall, C., Nguyen, T.H.D., Ok, C., Dellaert, F.: Attitude heading reference system

with rotation-aiding visual landmarks (2012)

4. Bruss, A.R., Horn, B.K.P.: Passive navigation. Computer Vision, Graphics, and

Image Processing 21, 3–20 (1983)

5. Florian, R., Heiko, N.: A review and evaluation of methods estimating ego-motion.

Comput. Vis. Image Underst. 116(5), 606–633 (2012)

6. Handa, A., Newcombe, R.A., Angeli, A., Davison, A.J.: Real-time camera tracking:

when is high frame-rate best? In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y.,

Schmid, C. (eds.) ECCV 2012, Part VII. LNCS, vol. 7578, pp. 222–235. Springer,

Heidelberg (2012)

7. Heeger, D., Jepson, A.: Subspace methods for recovering rigid motion I: Algorithm

and implementation. International Journal of Computer Vision 7(2), 95–117 (1992)

8. Horn, B.K.P., Schunck, B.G.: Determining Optical Flow. Artificial Intelligence 17(1-3), 185–203 (1981)

9. Irani, M., Anandan, P.: All about direct methods (1999)

10. Longuet-Higgins, H.C.: A computer algorithm for reconstructing a scene from two

projections. Nature 293, 133–135 (1981)

11. Longuet-Higgins, H.C., Prazdny, K.: The Interpretation of a Moving Retinal Image. Proceedings of the Royal Society of London. Series B, Biological Sciences

(1934-1990) 208(1173), 385–397 (1980)

12. Matthies, L., Szeliski, R., Kanade, T.: Kalman filter-based algorithms for estimating depth from image sequences. International Journal of Computer Vision 3,

209–236 (1989)

13. Rieger, J.H., Lawton, D.T.: Sensor motion and relative depth from difference fields

of optic flows. In: IJCAI, pp. 1027–1031 (1983)

14. Roberts, R., Potthast, C., Dellaert, F.: Learning general optical flow subspaces

for egomotion estimation and detection of motion anomalies. In: IEEE Conf. on

Computer Vision and Pattern Recognition (CVPR) (2009)