COMPUTER-BASED MALAY STUTTERING ASSESSMENT SYSTEM

OOI CHIA AI

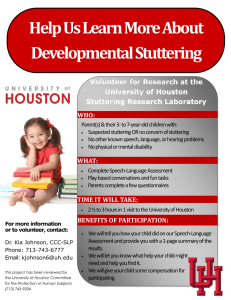
