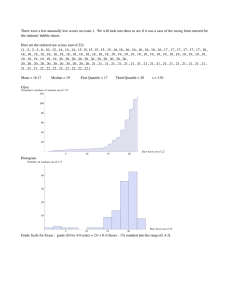

Measure for Measure
