Cisco Virtual Update – ACI/Nexus 9000 Design, Mikkel Brodersen


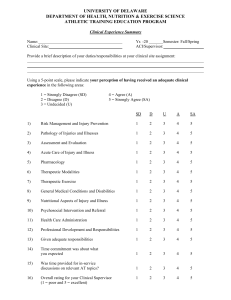

Cisco Virtual Update – ACI/Nexus 9000 Design
Mikkel Brodersen (mbroders@cisco.com)
Michael Petersen (michaep2@cisco.com)
March 2016

Session Objectives
At the end of the session, participants should be able to:
- Articulate the migration options from existing network designs to ACI
- Understand the design considerations associated with those migration options
Initial assumption:
- The audience already has a good knowledge of the main ACI concepts (Tenant, BD, EPG, Service Graph, L2Out, L3Out, etc.)

Migration Scenarios
- Scenario 1: Interconnecting an existing brownfield network to a new ACI greenfield fabric
- Scenario 2: Converting an existing brownfield network (built with N9Ks) to ACI

Scenario 1: Interconnecting Existing Networks to ACI

Starting Point – Brownfield Network (vPC Based)
[Diagram: vPC-based brownfield network connected to the WAN/core, with the L3/L2 boundary marked]
- Starting from a traditional network (named "brownfield")
- The brownfield network could be built with vPC, STP, FabricPath, VXLAN or even third-party devices; the migration considerations apply equally to all those cases
- The assumption is also that network services are connected in the traditional fashion (routed mode at the aggregation layer)

Starting Point – Brownfield Network (FP Based)
[Diagram: FabricPath-based brownfield network connected to the WAN/core]
- The same considerations apply when migrating from a FabricPath-based topology
- A Cisco Validated Design Guide is available for this option: http://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/migration_guides/fabricpath_to_aci_migration_cisco_validated_design_guide.html
- In this case, the default gateway could be at the spine or on a pair of FP border leaf nodes

Network Centric Migration: Procedural Steps
1. Deployment: design and deploy a new ACI fabric
2. Integration: connect ACI to the current infrastructure
3. Migration: migrate workloads to use the new ACI POD

Step 1 – Deployment: design and deploy a new ACI fabric

Deployment: Building an Initially Small ACI POD
The end goal is to migrate endpoints and network services to the ACI fabric.
[Diagram: brownfield network and greenfield ACI fabric, both connected to the WAN/core]

Step 2 – Integration: connect ACI to the current infrastructure

Integration: Connecting Brownfield and Greenfield Networks
- First step: create an L2 connectivity path (an L2 trunk) between the brownfield network and the ACI fabric
- Use a back-to-back vPC connection to avoid L2 loops

ACI Fabric Loopback Protection
Multiple protection mechanisms guard against external loops:
- LLDP detects direct loopback cables between any two switches in the same fabric
- Mis-Cabling Protocol (MCP) is a new link-level loopback packet that detects an external L2 forwarding loop (supported with the 11.1 release):
  - MCP frames are sent on all VLANs on all ports
  - If any switch detects an MCP packet arriving on a port that originated from the same fabric, the port is err-disabled
- External devices can leverage STP/BPDUs for loop detection
- MAC/IP move detection, learning throttling and err-disable

ACI Fabric – Integrated Overlay Data Path: Encapsulation Normalization
[Diagram: IP fabric using VXLAN tagging, with normalized encapsulation between any-to-any VTEPs and localized encapsulation at the edge (VXLAN VNID = 5789, 802.1Q VLAN 50, VXLAN VNID = 11348, NVGRE* VSID = 7456)]
- All traffic within the ACI fabric is encapsulated with an extended VXLAN header
- External VLAN, VXLAN and NVGRE* tags are mapped at ingress to an internal VXLAN tag
- Forwarding is neither limited to nor constrained by the encapsulation type or the encapsulation "overlay" network
- External identifiers are localized to the leaf or leaf port, allowing reuse and/or translation if required
*Not currently supported in software.

Endpoints Integration: Assign a Port to an EPG in ACI
[Diagram: BD Web with EPG1; VLAN 20 and VLAN 30 attachments; endpoints 10.10.10.10 and 10.10.10.11]
- With VMM integration, the APIC assigns the port to the EPG dynamically
- In all other cases, such as when connecting a switch, router or bare-metal server, the port needs to be assigned to the EPG manually or by using the APIs
- Use "Static Binding" under the EPG to assign a port to the EPG
- The example assigns traffic received on vPC 2 with VLAN tag 20 to EPG1
- VLANs are locally significant, but operationally it may be simpler to use the same VLAN tag for the same EPG

Endpoints Integration – Use Case 1: VLAN == BD == EPG
Map VLAN 10 to EPG1 and VLAN 20 to EPG2.
[Diagram: App1 Web endpoints 10.10.10.10 and 10.10.10.11 on VLAN 10 and App2 Web endpoints 10.20.20.10 and 10.20.20.11 on VLAN 20; VLANs 10 and 20 trunked from the brownfield network to ACI]
- Endpoints are connected to different VLANs in the brownfield network
- Each legacy VLAN is trunked to the ACI fabric and mapped to a dedicated EPG and BD
- The legacy environment is trusted; no security policies are applied with the ACI internal EPGs
[Diagram: VLAN 10 mapped to EPG1 in BD Web1, VLAN 20 mapped to EPG2 in BD Web2]

Endpoints Integration – Use Case 2: Single VLAN to Different EPGs
Map VLAN 10 to EPG-Outside (still a static mapping, NOT an L2Out*).
[Diagram: App1 Web endpoints 10.10.10.10 and 10.10.10.11 and App2 Web endpoints 10.10.10.20 and 10.10.10.21, all on VLAN 10; EPG1 and EPG2 in BD Web, connected by contracts]
- Endpoints are connected to the same VLAN in the brownfield network
- The VLAN is trunked to the ACI fabric and mapped to a dedicated EPG-Outside
- Endpoints migrated to the ACI fabric become part of dedicated internal EPGs; the goal is to be able to apply security policies between the legacy and new network environments, so that communication is secured by ACI policy
*Static VLAN-to-EPG mapping is the most common option in network-centric migration scenarios.

Endpoints Integration: Configure ACI Bridge Domain Settings
[Diagram: Tenant "Tenant-1" > Context "T1-VRF01" > Bridge Domain "Web" > Subnet 10 > EPG-Web]
Temporary BD-specific settings are needed for L2 communication between endpoints migrated to ACI and endpoints still connected to the brownfield network (including the default gateway):
- Set Forwarding to "Custom" and:
  - Enable flooding of L2 unknown unicast
  - Enable ARP flooding
- It is recommended to keep routing enabled in the BD (at this point without an IP address); this allows the fabric to learn IP address information for endpoints connected to the ACI fabric

Step 3 – Migration: migrate workloads to use the new ACI POD

Endpoints Migration
- The goal is to migrate endpoints to the ACI fabric with minimal disruption
- Live migration for virtual machines (the use of vMotion has been validated for VMware deployments*)
- Two main scenarios to consider:
  1. A single vCenter Server managing ESXi clusters in brownfield and greenfield
  2. Separate vCenter Servers for brownfield and greenfield
*Similar considerations apply to other hypervisor deployments (for example, MS SCVMM).

Endpoints Migration 1 – Single vCenter Server Scenario
[Diagram: one vCenter in the management cluster manages the existing compute clusters (brownfield) and the new compute clusters (ACI) through a vCenter-managed DVS; existing app VMs 100.1.1.3, 100.1.1.7 and 100.1.1.99 in one BD]
1.1 Connect the new ESXi hosts to the vCenter-managed DVS
1.2 Migrate VMs to the new ESXi cluster; the migrated VMs initially connect to ACI as "physical resources"
1.3 Create a VMM domain to integrate vCenter with the APIC (vCenter-APIC mapping, which adds a new APIC-managed DVS)
1.4 Migrate the new ESXi clusters to the APIC-managed DVS and connect the VMs' vNICs to the new port groups (associated with EPGs)

Endpoints Migration 2 – Multiple vCenter Servers Scenario
[Diagram: the existing vCenter manages the brownfield compute clusters; a second vCenter (vCenter2) in a new management cluster manages the new compute clusters attached to ACI]
2.1 Pair the new vCenter with the APIC controller cluster (vCenter-APIC mapping) and connect the ESXi servers to the new APIC-managed DVS
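The static binding, the temporary "Custom" BD forwarding and the vCenter-APIC pairing described above correspond to objects in the APIC REST object model. The Python sketch below only builds a JSON payload (it does not contact an APIC) and assumes the standard classes fvTenant, fvCtx, fvBD, fvAp, fvAEPg, fvRsBd, fvRsDomAtt and fvRsPathAtt. The tenant, VRF, BD, EPG, vPC and VLAN values come from the slides; the application profile "Migration", the physical domain "Brownfield-Phys", leaf node IDs 101-102, the vPC policy-group name "vpc-2" and any VMM domain name are hypothetical placeholders.

```python
from __future__ import annotations

import json

# From the slides: tenant "Tenant-1", VRF "T1-VRF01", BD "Web", EPG1 bound to
# vPC 2 with VLAN 20. Hypothetical: app profile "Migration", physical domain
# "Brownfield-Phys", leaf nodes 101-102, vPC policy group "vpc-2".
VPC2_PATH = "topology/pod-1/protpaths-101-102/pathep-[vpc-2]"


def migration_bd(name: str, vrf: str) -> dict:
    """BD with the temporary 'Custom' forwarding used while endpoints
    still live on both sides of the L2 path."""
    return {"fvBD": {
        "attributes": {
            "name": name,
            "unkMacUcastAct": "flood",  # flood L2 unknown unicast
            "arpFlood": "yes",          # flood ARP
            "unicastRoute": "yes",      # keep routing on (no subnet yet) to learn endpoint IPs
        },
        "children": [{"fvRsCtx": {"attributes": {"tnFvCtxName": vrf}}}],
    }}


def epg(name: str, bd: str, phys_dom: str,
        bindings: list[tuple[str, int]], vmm_dom: str | None = None) -> dict:
    """EPG in a BD with static (path, VLAN) bindings and, optionally,
    an association with a VMware VMM domain (vCenter-APIC pairing)."""
    children = [
        {"fvRsBd": {"attributes": {"tnFvBDName": bd}}},
        # Static bindings draw their VLANs from a domain, here a physical one.
        {"fvRsDomAtt": {"attributes": {"tDn": f"uni/phys-{phys_dom}"}}},
    ]
    for path, vlan in bindings:
        children.append({"fvRsPathAtt": {"attributes": {
            "tDn": path,
            "encap": f"vlan-{vlan}",
            "mode": "regular",  # 'regular' = tagged (trunk) traffic
        }}})
    if vmm_dom is not None:
        children.append({"fvRsDomAtt": {"attributes": {
            "tDn": f"uni/vmmp-VMware/dom-{vmm_dom}"}}})
    return {"fvAEPg": {"attributes": {"name": name}, "children": children}}


def tenant_payload() -> dict:
    """Tenant tree: VRF, migration BD and EPG1 statically bound to vPC 2, VLAN 20."""
    return {"fvTenant": {
        "attributes": {"name": "Tenant-1"},
        "children": [
            {"fvCtx": {"attributes": {"name": "T1-VRF01"}}},
            migration_bd("Web", "T1-VRF01"),
            {"fvAp": {"attributes": {"name": "Migration"}, "children": [
                epg("EPG1", "Web", "Brownfield-Phys", [(VPC2_PATH, 20)]),
            ]}},
        ],
    }}


if __name__ == "__main__":
    print(json.dumps(tenant_payload(), indent=2))
```

A payload like this would typically be POSTed to the APIC (for example to `/api/mo/uni.json`) after logging in. The VMM association is an optional argument because, in the single-vCenter scenario, it only becomes relevant from step 1.3 onward; for example, `epg("EPG1", "Web", "Brownfield-Phys", [(VPC2_PATH, 20)], vmm_dom="vCenter-DVS")` would add it, with "vCenter-DVS" being a made-up domain name.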
Endpoints Migration 2 – Multiple vCenter Servers Scenario (continued)
2.2 Migrate VMs to the new ESXi cluster*
*Cross-vCenter vMotion is supported with vSphere 6.0 and the ACI 11.2 release.

Default Gateway Considerations
[Diagram: existing design with the HSRP default gateway for Subnet 1 = VLAN 10, L2-bridged to the ACI fabric where Subnet 1 = EPG1]
- Up to this point, the default gateway is still deployed in the brownfield network
- ACI initially provides only L2 connectivity services
- Migrated endpoints use the L2 path between the two networks to reach the default gateway

Migrate Default Gateway to ACI
- Specify the MAC address for the gateway to match the HSRP vMAC previously used in the brownfield network (optional)
- Configure the anycast gateway IP address
[Diagram: anycast default gateway on the ACI fabric for VLAN 10 (EPG1: 10.10.10.10, 10.10.10.11) and VLAN 20 (EPG2: 10.20.20.10, 10.20.20.11); any IP, anywhere]

Migrate Default Gateway to ACI: ACL Management Function
- Deploying contracts between EPGs also secures communication between endpoints in the brownfield network that belong to separate IP subnets
- Dynamic ACLs are applied between EPGs, so traffic between VLAN 10 (EPG1) and VLAN 20 (EPG2) is controlled

Migration: Change BD Settings Back to Normal
- Once all the endpoints (virtual and/or physical) belonging to a BD have been migrated to ACI, it is recommended to revert to the default BD settings
- Select "Optimize" forwarding to stop flooding ARP and L2 unknown unicast

Migration: Routing between Brownfield and Greenfield
Routing between brownfield and greenfield may still be required for:
- Communication with IP subnets that remain only in the brownfield network (the default gateway remains on the aggregation devices)
- Communication with the WAN
[Diagram: L3 routing between the existing design (HSRP default gateway, IP Subnet 2 = VLAN 30) and the ACI fabric (IP Subnet 1 = EPG1)]
[Diagram: L3 links between the ACI fabric and the aggregation devices, which remain the default gateway for VLAN 30 (10.30.30.10); VLAN 30 is NOT carried on the vPC connection; EPG1 endpoint 10.10.10.11]

Migrating Network Services
There are three main ways of integrating network services (FWs, SLBs) with ACI:
1. Managed integration using a service graph with a "device package"
2. Unmanaged integration using a service graph only, with no device package required (from ACI release 11.2)
3. Without a service graph: the network service node is connected to the ACI fabric as part of the physical domain
- The third approach is recommended for the migration procedure
- Alternatively, deploy a separate set of network services in the greenfield network (to leverage the full extent of service graph capabilities)

Migrating Network Services: Example of FW Services Migration
1. Starting point: active/standby FW nodes (routed mode*) connected to the aggregation layer switches
2. Move the standby node to ACI (FW keepalives and state synchronization between the nodes)
3. Disconnect the active node from the brownfield network; the FW is activated on the ACI fabric
4. Both FW nodes are connected to the ACI fabric
*Similar considerations apply to services in transparent mode.

Scenario 2: NX-OS Mode to ACI Mode In-Place Migration

Starting Point
[Diagram: aggregation switches (default gateways) with WAN/core router attachment; vPC-configured access/leaf switches in Pod-1 (bare-metal server with active/standby teaming) and Pod-2 (ESXi host with LACP teaming)]

End-Goal Design
[Diagram: ACI spines; all leaf switches running as ACI VTEPs; WAN/core router attachment on the border leaf nodes]
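Returning briefly to Scenario 1, the default gateway migration, the contract-based "ACL management" and the revert to "Optimize" forwarding can also be expressed as APIC object fragments (the same operations recur in steps 2.3, 8.2 and 8.3 of Scenario 2). The sketch below assumes the APIC classes fvBD (attributes mac, unkMacUcastAct, arpFlood), fvSubnet, vzBrCP, vzSubj, vzRsSubjFiltAtt, fvRsProv and fvRsCons. The gateway address 10.10.10.1/24, HSRP group 10, the contract name "Web-Legacy" and the use of the permit-all "default" filter are hypothetical. The `hsrp_vmac` helper computes the well-known HSRP virtual MAC (0000.0c07.acXX for version 1, 0000.0c9f.fXXX for version 2), which is what the optional "match the HSRP vMAC" step needs.

```python
from __future__ import annotations

# From the slides: BD "Web", EPG1/EPG2, HSRP vMAC reuse, anycast gateway,
# contracts between EPGs, "Optimize" forwarding. Hypothetical: gateway
# 10.10.10.1/24, HSRP group 10, contract "Web-Legacy", filter "default".


def hsrp_vmac(group: int, version: int = 1) -> str:
    """Well-known HSRP virtual MAC: v1 0000.0c07.acXX, v2 0000.0c9f.fXXX."""
    if version == 1 and 0 <= group <= 0xFF:
        raw = 0x00000C07AC00 | group
    elif version == 2 and 0 <= group <= 0xFFF:
        raw = 0x00000C9FF000 | group
    else:
        raise ValueError(f"invalid HSRP version/group: v{version}, group {group}")
    # Six bytes, most significant first, in colon notation.
    return ":".join(f"{(raw >> shift) & 0xFF:02X}" for shift in range(40, -8, -8))


def gateway_on_bd(bd: str, gw_cidr: str, vmac: str | None = None) -> dict:
    """Add the anycast gateway subnet to the BD, optionally reusing the
    brownfield HSRP vMAC as the BD MAC address."""
    attrs = {"name": bd}
    if vmac is not None:
        attrs["mac"] = vmac
    return {"fvBD": {"attributes": attrs, "children": [
        {"fvSubnet": {"attributes": {"ip": gw_cidr}}},  # gateway IP/prefix length
    ]}}


def optimize_bd(bd: str) -> dict:
    """'Optimize' forwarding: hardware proxy for L2 unknown unicast, no ARP flooding."""
    return {"fvBD": {"attributes": {
        "name": bd, "unkMacUcastAct": "proxy", "arpFlood": "no"}}}


def contract_objects(name: str, provider: str, consumer: str,
                     filter_name: str = "default") -> list[dict]:
    """Contract plus provider/consumer relations; ACI renders these as the
    rules ('dynamic ACLs') enforced between the two EPGs."""
    contract = {"vzBrCP": {"attributes": {"name": name}, "children": [
        {"vzSubj": {"attributes": {"name": f"{name}-subj"}, "children": [
            {"vzRsSubjFiltAtt": {"attributes": {"tnVzFilterName": filter_name}}},
        ]}},
    ]}}
    prov = {"fvAEPg": {"attributes": {"name": provider}, "children": [
        {"fvRsProv": {"attributes": {"tnVzBrCPName": name}}}]}}
    cons = {"fvAEPg": {"attributes": {"name": consumer}, "children": [
        {"fvRsCons": {"attributes": {"tnVzBrCPName": name}}}]}}
    return [contract, prov, cons]


if __name__ == "__main__":
    print(hsrp_vmac(10))  # 00:00:0C:07:AC:0A
    print(gateway_on_bd("Web", "10.10.10.1/24", hsrp_vmac(10)))
```

In a real tenant, the contract would sit directly under the tenant and the two EPG fragments under their application profile; keeping the vMAC optional mirrors the slide, where matching the HSRP vMAC is only needed if brownfield hosts have cached the old gateway MAC.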
NX-OS Mode to ACI Mode In-Place Migration: Step-by-Step Procedure
1. Start creating the ACI fabric
2. Create ACI spines
3. Reload the first leaf in Pod-1
4. Reload the second leaf in Pod-1
5. Reload the leaf nodes in Pod-2
6. Complete rebooting the leaf nodes
7. Start re-homing the WAN/core routing peering
8. Migrate the default gateway to the ACI fabric
9. Reboot (or remove) the remaining spine nodes

Step 1: Start Creating the ACI Fabric
1.1 Install a new pair of ACI border leaf nodes
1.2 Create an L2 vPC connection between the ACI border leaf nodes and the aggregation devices (these must be ACI leaf edge interfaces)
1.3 Install and connect the APIC cluster

Functions Supported by ACI "Spines"
Three data-plane functions are required within the "core" of an ACI fabric:
1. Transit node: IP forwarding of traffic between VTEP nodes
2. Multicast root: root for one of the 16 multicast forwarding topologies (used to optimize multicast load balancing and forwarding)
3. Proxy lookup: a data-plane directory for forwarding traffic, based on a mapping database that binds endpoint information (MAC and IP addresses) to VTEPs

Step 2: Create ACI Spines
Option 1 – Upgrade the existing aggregation switches (not recommended)
- The proxy is located in the upgraded switch (requires 9700-series line cards only)
- Not recommended, to avoid running the network with a single point of failure (even temporarily)
Option 2 (preferred) – Install new spine switches
2.1 Install new spine switches with the proxy database (9336s, or 95xx with 9700-series line cards)
2.2 Connect the border leaf nodes to the new spines
2.3 Map VLANs to EPGs and properly configure the flooding behavior for the BDs

Step 3: Reload the First Leaf in Pod-1
Initial stage:
3.1 Reload the leaf in ACI mode
3.2 The spines start the auto-discovery process for the new ACI leaf
Servers with active/standby NIC teaming:
- Common VLANs are configured on both switch ports
- During the software upgrade, the active link goes down and NIC teaming fails over to the standby link
- After the upgrade, the common VLANs (bridge domains) are still configured on both switch ports, so NIC teaming can return to its original state
Final stage: server communication with the external L3 domain.

Step 4: Reload the Second Leaf in Pod-1
- Reload the second leaf in ACI mode

Step 5: Reload the Leaf Nodes in Pod-2
Initial stage: reload the leaf in ACI mode.
Servers with LACP active/active NIC teaming:
- The port channel is formed using a common LACP system ID
- During the software upgrade, port-channel failover occurs
- After the upgrade, different system IDs are advertised (for example, 'A' and 'B'), so only one link is active
- Move the ESXi uplink and the VMs to the APIC-managed DVS, then reload the second leaf into ACI mode
  - A 90-120 second outage is experienced when the second leaf node comes up in ACI mode; a possible workaround is still under investigation
- It is recommended to deploy ESXi uplinks in active/standby mode (when possible)
vPC peer-link considerations:
- Final stage: the peer link is no longer needed (its links may be reused), and L2/L3 communication is handled natively by ACI

Step 6: Complete Rebooting the Leaf Nodes
- The default gateway for all the BDs is still positioned on the NX-OS aggregation devices

Step 7: Start Re-homing the WAN/Core Routing Peering
7.1 Connect the WAN edge routers to the border leaf nodes
7.2 Configure an L3Out and establish the routing peering

Step 8: Migrate the Default Gateway to the ACI Fabric
Complete the migration of the default gateway and of the WAN/core router links:
8.2 Complete the default gateway migration for all the BDs
8.3 Revert to the default flooding behavior on all BDs
8.4 Complete the re-homing of the WAN/core routers
8.5 Disconnect the NX-OS devices from the ACI border leaf nodes

Step 9: Reboot (or Remove) the Remaining Spine Nodes
[Diagram: final ACI fabric with spines (proxy) and leaf VTEPs]

Where to Go for More Information
- Migrating Existing Networks to ACI – White Paper: http://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/migration_guides/migrating_existing_networks_to_aci.html
- Migrating FabricPath Networks to ACI – Validated Design Guide: http://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/migration_guides/fabricpath_to_aci_migration_cisco_validated_design_guide.html
- Nexus 9000 in NX-OS Mode to ACI Mode In-Place Migration: coming soon, stay tuned!

Thank you!
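The LACP behavior described for the Pod-2 leaf reloads follows from one rule: a host can only bundle links into one port channel when all its partners advertise the same LACP system ID. The sketch below is a deliberately simplified model of that rule, not the full IEEE 802.1AX selection logic; the uplink names and the 'A'/'B' system-ID labels are illustrative, and the tie-breaking rule (the first group seen stays active) is an assumption.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Uplink:
    """An ESXi uplink and the LACP system ID its switch port advertises."""
    name: str
    partner_system_id: str


def lacp_bundle(uplinks: list[Uplink]) -> list[str]:
    """Return the uplinks that can be active together in one port channel.

    Simplified model (assumption): only links whose partners advertise the
    same LACP system ID can share a port channel. The largest such group
    stays active; on a tie, the group seen first wins.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for link in uplinks:
        groups[link.partner_system_id].append(link.name)
    # max() returns the first maximal group, and dicts keep insertion order.
    return max(groups.values(), key=len, default=[])


if __name__ == "__main__":
    # Both leaves still in NX-OS vPC mode: one shared system ID.
    print(lacp_bundle([Uplink("vmnic0", "A"), Uplink("vmnic1", "A")]))  # ['vmnic0', 'vmnic1']
    # One leaf reloaded into ACI mode: the IDs now differ.
    print(lacp_bundle([Uplink("vmnic0", "A"), Uplink("vmnic1", "B")]))  # ['vmnic0']
```

This also illustrates why active/standby ESXi uplinks are recommended where possible: without LACP there is no port channel whose membership depends on both switches advertising a matching system ID.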