

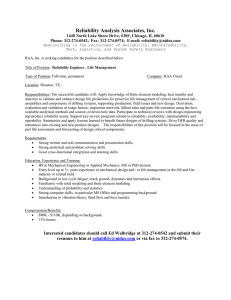

International Journal of Advancements in Research & Technology, Volume 2, Issue 5, May-2013 ISSN 2278-7763

The Comparative Study of Software Maintenance Forecasting Analysis Based on Backpropagation (NFTOOL and NTSTOOL) and Adaptive Neuro-Fuzzy Inference System (ANFIS)

Anuradha Chug¹ and Ankita Sawhney²
¹ University School of Information and Communication Technology, GGSIP University, Dwarka, New Delhi 110077
² Computer Science Department, University School of Information and Communication Technology, GGSIP University, Dwarka, New Delhi 110077
a_chug@yahoo.co.in, ankitasawhney1@gmail.com

Copyright © 2013 SciResPub.

Abstract- Software could be called the heartbeat of today's technology. For software to keep pace with modern-day expansion, it must be open to change. Enhancements and defects are the two major reasons for software change. Software maintainability refers to the degree to which software can be understood, corrected or enhanced. Maintenance accounts for more effort than any other software engineering activity. The aim of this paper is to present an experimental study showing that ANFIS predicts maintainability more accurately than two backpropagation-based neural network models (NFTOOL and NTSTOOL). Predicting maintainability accurately is valuable in today's risk-sensitive business and economic environment, where software is among the most valuable assets, whether in transaction processing (governmental or banking services), manufacturing automation (chemicals, automation) or any other industry.

Keywords- ANFIS (Adaptive Neuro-Fuzzy Inference System), ANN (Artificial Neural Network), Software Maintainability, OO metrics, change, S.D. (Standard Deviation), MSE (Mean Square Error)

1. INTRODUCTION
Software systems are subjected to continuous evolution and modification after their release. The software life cycle includes many phases; maintenance is the last of them and is initiated after the product has been released. The maintenance phase is the most expensive and time-consuming phase of the life cycle, and for this reason it remains a major challenge in quality engineering studies [1]. This phase is required to keep the software up to date with changes in the environment and in user requirements. Maintainability can be defined as the ease with which software can be modified in order to isolate a defect or its cause, correct a defect or its cause, cope with a changed environment, or meet new requirements.

Good-quality, maintainable software is always based on a robust design. We can define a good design as one which allows new functionality, in terms of new methods and new classes, to be plugged in easily without re-implementing the results of previous iterations. In order to calculate maintainability we first need to calculate the change effort, which is the average effort required to modify, add or delete a class. Maintenance could be regarded as the most important phase, as it consumes about 70% of the time in a software system's life, and about 40-70% of the entire cost of software is spent on maintenance [1].

The maintenance process can be categorized into the following four areas of interest [2]:
a) Corrective maintenance: Modification of software performed after delivery to correct discovered problems. Examples of corrective maintenance include correcting a failure to test for all possible conditions or a failure to process the last record in a file.
b) Adaptive maintenance: Modification of software performed after delivery so that the software product remains usable in a changing or changed environment.
Examples of adaptive maintenance include implementing a database management system for an existing application system and adjusting two programs so that they use the same record structures.
c) Perfective maintenance: Modification of software performed after delivery to improve its performance or maintainability. Examples of perfective maintenance include modifying the payroll program to incorporate a new union settlement, adding a new report to the sales analysis system, improving a terminal dialogue to make it more user-friendly, and adding an online help command.
d) Preventive maintenance: Modification of software performed after delivery so that latent faults are detected and corrected before they become effective faults. Examples of preventive maintenance include restructuring and optimizing code and updating documentation.

Many past studies have found a strong link between object-oriented metrics and software maintainability, and have shown that maintainability can be predicted using these metrics [3]. The objective of our research is to apply the ANFIS tool [4] along with two other tools, all based on neural networks, to compare them and to assess their performance in predicting software maintainability. For this we first studied the various metrics available and selected those which showed a strong relationship to maintainability [5]. The metrics chosen for the analysis are size, weighted methods per class, depth of inheritance tree, number of children, number of methods and coupling between objects. We then divided the available data into three sets in the ratio 3:1:1 and used them for training, testing and validation.

2. RELATED WORK
Several models have been proposed to predict software maintainability, ranging from simple regression analysis models to complex machine learning algorithms. Some of the existing works are listed below.
G. M. Berns used a maintainability analysis tool, a kind of lexical analyser, to predict maintainability in 1984. D. Kafura et al. used a static analyser as a counter to predict maintainability in 1987; they used seven different metrics and studied three different versions of the system. S. Wake et al. used a multiple linear regression model in 1988 to predict maintainability. They used multiple metrics as parameters for their model and also showed that the effect of metrics depends on the development environment, the programming language and the application. W. Li and S. Henry [3] used a Classic Ada Metric Analyzer in 1993 to predict maintainability. Also in 1993, Rajiv D. Banker et al. [6] predicted maintainability using statistical models which assigned weights to each metric used. In 2002, K. K. Aggarwal et al. [8] predicted software maintainability using fuzzy logic. They considered three aspects of software to measure maintainability: readability of source code, documentation quality and understandability of software. The advantages of their new fuzzy rule base were that it required less storage space and was efficient when used to obtain results in simulation. In 2003 two prediction models were built: one by Melis Dagpinar et al., based on correlation, multivariate analysis and a best-subset regression model, and the other by Stamelos et al., based on Bayesian belief networks. In 2005, Thwin et al. [9] predicted software development faults and software readiness using a general regression neural network and a Ward neural network. They performed two kinds of investigation: prediction of the number of defects per class and prediction of the number of lines changed per class. In 2008, N. Narayanan Prasanth et al. based their analysis on a fuzzy repertory table and regression analysis. They took a sample of 4 products and calculated both absolute and relative complexities over it; using the fuzzy repertory table technique they acquired the domain knowledge of testers and used it for software complexity analysis, and regression analysis was then used to predict maintainability from the code complexity of the product. In 2011, Marian Jureczko used stepwise logistic regression to predict maintainability.

3. METRICS STUDIED
A software metric is a measure of some property of a piece of software or its specifications [5]. Studying the various features of object-oriented languages, which include inheritance, encapsulation, data hiding, coupling, cohesion and memory management, requires a careful selection of metrics. Object-oriented metrics can be used throughout the software life cycle to quantify different aspects of an object-oriented application. We studied various metrics and selected those which show a strong relationship with software maintainability. The OO metrics described in Table 1 have been taken as independent variables in our study.

TABLE 1: INDEPENDENT METRICS DEFINITION

| Metric | Definition |
|---|---|
| SIZE1 (Lines of code) | The total number of lines of code, excluding comments. |
| WMC (Weighted Methods per Class) | The sum of McCabe's cyclomatic complexities of all local methods in a class. |
| DIT (Depth of Inheritance Tree) | The depth of a class in the inheritance tree, where the root class has depth zero. |
| NOC (Number of Children) | The number of child classes of a class, i.e. the number of its immediate subclasses in the hierarchy. |
| NOM (Number of Methods) | The number of methods in a class. |
| CBO (Coupling Between Objects) | The number of other classes to which the class is coupled. |

Forecasting the maintenance of software could help achieve better enhancements at a lower cost. In our study we investigate the relation between object-oriented metrics and change effort, which indirectly reflects the maintainability of the software being developed. The dependent variable used in our study is therefore change, described in Table 2.

TABLE 2: DEPENDENT METRIC DEFINITION

| Metric | Definition |
|---|---|
| CHANGE | The number of lines added and deleted in a class; a change to the content of a line is also counted as change. |

4. EMPIRICAL DATA
In this section we introduce our data sources and describe the data collection method. Two different versions of C++ source code, varying in size and application domain, were collected from different open source software projects. In our study we collected and analyzed 25 such classes. The CCCC tool was used to analyse the code and create a report on various metrics. CCCC is a free software tool by Tim Littlefair for measuring source-code metrics. Some of the advantages of using CCCC as a metric analyser are: its internal database is recoded using STL, which makes it faster; its persistent file format allows the outcome of an analysis to be saved across runs, reloaded, and read by other tools; all output files are generated into a single directory (by default .cccc under the current working directory); each class identified by CCCC has a detailed report, in which low-level data are accompanied by HTML links to the location in the source file which gave rise to the data; and a configuration directory is no longer required.

The six metrics calculated using CCCC for the 25 sample classes are size (SIZE1), weighted methods per class, number of children, coupling between objects, number of methods, and depth of inheritance tree. The dependent variable, change, is calculated according to the following rules:
a. A new comment line in the new version of the software is not counted as an added line.
b. If two consecutive lines of the previous version are merged into a single line in the new version, this is counted as one deleted-line change and one modified-line change.
c. New lines added to the source code, including curly braces that were absent in the previous version and have been added in the new version, are counted as added lines.
d. Deletion and addition of blank lines are not considered changes.
e. Reordering the sequence of lines is not considered a change.
f. Statements, curly braces and lines of code which were present in the previous version but are not present in the new version are counted as deleted lines.
g. Any change to comment lines is not considered a change.

The data set was then divided in the ratio 3:1:1 (training : testing : validation). That is, 60% of the sample data is used for training, in which the machine learns from the data patterns using the specified algorithm; the next 20% is used for testing, to analyse how close the predicted values are to the actual values; and the last 20% is used for validation. The samples were then trained according to the selected algorithm. Table 3 shows the descriptive statistics of the metrics used.

TABLE 3: STATISTICS OF DATA SET

| Metric | Minimum | Maximum | Mean | S.D. |
|---|---|---|---|---|
| SIZE1 | 5 | 1040 | 281.16 | 293.9 |
| WMC | 1 | 24 | 6.64 | 5.51 |
| DIT | 0 | 1 | 0.12 | 0.33 |
| NOC | 0 | 1 | 0.16 | 0.37 |
| CBO | 0 | 2 | 0.32 | 0.56 |
| NOM | 1 | 24 | 5.88 | 5.80 |

After collecting the data, we used the MATLAB Neural Network Toolbox to predict maintainability and validate the results. The following standard steps are followed when designing neural networks to solve problems in various application areas:
a. Collect data.
b. Create the network.
c. Configure the network.
d. Initialize the weights and biases.
e. Train the network.
f. Validate the network.
g. Use the network.

Finally, the results obtained from the three techniques are compared based on the mean square error (MSE). The MSE is the average squared difference between the outputs obtained and the targets. A lower MSE implies better results, and an MSE of zero means there is no error.

5. PREDICTION OF MAINTENANCE USING BACKPROPAGATION (NFTOOL)
First, maintenance effort prediction models are constructed and their predictions are compared with the actual values using NFTOOL, available in the MATLAB Neural Network Toolbox. Using NFTOOL [10] we train the network to fit the inputs and targets. Suppose, for instance, that we have data from a housing application and we want to design a network which can predict the value of a house given pieces of geographical and real estate information. We have a number of homes for which we have several items of data and their associated market values. We can solve this problem in two ways:
a. Use the graphical user interface, NFTOOL, as described in "Using the Neural Network Fitting Tool".
b. Use command-line functions, as described in the toolbox documentation.

In our study we work with the graphical user interface. To set up the fitting problem we first arrange a set of input vectors as columns in a matrix, then arrange another set of target vectors (the correct output vector for each input vector) into a second matrix. The number of hidden neurons selected for our sample data is 10. The structure of the neural network and the training algorithm selected in NFTOOL are shown in Figure 1.
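The fitting procedure described above can be sketched outside MATLAB. The following is a hypothetical NumPy sketch, not NFTOOL's own implementation: it assumes the six metrics are already scaled to a small range, uses a two-layer feed-forward network (a tanh hidden layer of 10 neurons and one linear output neuron), and trains it with a plain Levenberg-Marquardt loop. The function names, the damping schedule (start at 1e-3, divide by 10 after an accepted step, multiply by 10 after a rejected one) and the random stand-in data are assumptions for illustration only, and early stopping on the validation subset is omitted. The sketch also shows a 3:1:1 split and the per-subset MSE used in the result tables.

```python
import numpy as np


def split_3_1_1(n_samples, rng):
    """Shuffle sample indices and split them 60% / 20% / 20% (ratio 3:1:1)."""
    idx = rng.permutation(n_samples)
    n_train = round(0.6 * n_samples)
    n_test = round(0.2 * n_samples)
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]


def unpack(p, n_in, n_hid):
    """Slice the flat parameter vector into hidden weights/biases and output weights/bias."""
    k = n_in * n_hid
    W1 = p[:k].reshape(n_in, n_hid)
    b1 = p[k:k + n_hid]
    w2 = p[k + n_hid:k + 2 * n_hid]
    return W1, b1, w2, p[-1]


def predict(p, X, n_hid):
    """Two-layer feed-forward network: tanh hidden layer, single linear output neuron."""
    W1, b1, w2, b2 = unpack(p, X.shape[1], n_hid)
    return np.tanh(X @ W1 + b1) @ w2 + b2


def jacobian(p, X, n_hid):
    """Derivative of every network output with respect to every parameter (same order as p)."""
    W1, b1, w2, _ = unpack(p, X.shape[1], n_hid)
    H = np.tanh(X @ W1 + b1)
    D = (1.0 - H ** 2) * w2  # d(output) / d(hidden pre-activation)
    J_W1 = (X[:, :, None] * D[:, None, :]).reshape(len(X), -1)
    return np.hstack([J_W1, D, H, np.ones((len(X), 1))])


def mse(p, X, t, n_hid):
    """Mean square error: average squared difference between outputs and targets."""
    return float(np.mean((predict(p, X, n_hid) - t) ** 2))


def train_lm(X, t, n_hid=10, epochs=200, seed=0):
    """Levenberg-Marquardt: damped Gauss-Newton steps that are kept only if the error drops."""
    rng = np.random.default_rng(seed)
    n_par = X.shape[1] * n_hid + 2 * n_hid + 1
    p = rng.normal(scale=0.5, size=n_par)
    mu, err = 1e-3, mse(p, X, t, n_hid)
    for _ in range(epochs):
        r = predict(p, X, n_hid) - t
        J = jacobian(p, X, n_hid)
        A, g = J.T @ J, J.T @ r
        while mu < 1e10:
            step = np.linalg.solve(A + mu * np.eye(n_par), -g)
            new_err = mse(p + step, X, t, n_hid)
            if new_err < err:  # accepted: behave more like Gauss-Newton
                p, err = p + step, new_err
                mu = max(mu * 0.1, 1e-7)  # floor keeps A + mu*I well conditioned
                break
            mu *= 10.0  # rejected: behave more like gradient descent
        else:
            break  # damping limit reached: no further improvement found
    return p


# Hypothetical stand-in data: 25 classes x 6 scaled metrics, noisy linear "change".
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(25, 6))
t = X @ rng.normal(size=6) + 0.1 * rng.normal(size=25)
train, test, val = split_3_1_1(len(X), rng)
p = train_lm(X[train], t[train])
for name, idx in (("training", train), ("testing", test), ("validation", val)):
    print(f"{name:<10} samples={len(idx):2d}  MSE={mse(p, X[idx], t[idx], 10):.4f}")
```

With 25 samples, an exact 3:1:1 split yields 15, 5 and 5 samples. Levenberg-Marquardt moves between Gauss-Newton behaviour (small damping) and gradient-descent behaviour (large damping), which is why it suits small fitting networks like this one.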
NFTOOL solves the data fitting problem with a two-layer feed-forward network trained with the Levenberg-Marquardt algorithm. Neural networks are good at fitting functions; in fact, there is proof that a fairly simple neural network can fit any practical function.

Fig 1: Snapshot of the trained neural network using NFTOOL

The mean square error (MSE) values observed for this system are listed in Table 4.

TABLE 4: RESULTS OBTAINED FOR NFTOOL

| Data | No. of samples | MSE |
|---|---|---|
| Training | 17 | 8.98 |
| Testing | 4 | 28.26 |
| Validation | 4 | 33.53 |

A comparison between the training, testing and validation data is plotted in Figure 2.

Fig 2: Comparison between trained, tested and validated values

6. PREDICTION OF MAINTENANCE USING BACKPROPAGATION (NTSTOOL)
The next investigation constructs maintenance effort prediction models and compares their predictions with the actual values using NTSTOOL, available in the MATLAB Neural Network Toolbox. NTSTOOL stands for Neural Network Time Series Tool. A time series is a series of data in which past values may influence future values (the future value is a nonlinear function of its past m values). NTSTOOL opens the neural network time series wizard, which leads the user through solving a problem: the tool takes the past values and predicts the future values. NTSTOOL was introduced in Neural Network Toolbox version 7.0, which is associated with release R2010b.

To train the data using NTSTOOL, a set of input vectors is arranged as columns in a cell array, and the target vectors (the correct output vector for each input vector) are arranged into a second cell array. The number of hidden neurons selected for our sample data is 10. The structure of the neural network and the training algorithm selected in NTSTOOL are shown in Figure 3.

Fig 3: Snapshot of the trained neural network using NTSTOOL

The MSE values observed for this system are listed in Table 5.

TABLE 5: RESULTS OBTAINED FOR NTSTOOL

| Data | No. of samples | MSE |
|---|---|---|
| Training | 17 | 262.13 |
| Testing | 4 | 272.84 |
| Validation | 4 | 349.53 |

A comparison between the actual and predicted maintenance effort on the validation data is plotted in Figure 4.

Fig 4: Comparison between trained, tested and validated values

7. PREDICTION OF MAINTENANCE USING ADAPTIVE NEURO-FUZZY INFERENCE SYSTEM (ANFIS)
The next investigation calculates maintenance effort using the neuro-fuzzy technique. ANFIS is a class of adaptive networks that are functionally equivalent to fuzzy inference systems and use a hybrid training algorithm. The ANFIS approach learns the rules and membership functions from data. An adaptive network is a network of nodes and directional links with an associated learning rule, for example backpropagation. It is called adaptive because some or all of its nodes have parameters which affect the output of the node. Such networks learn a relationship between inputs and outputs. Using a given input/output data set, the toolbox function anfis constructs a fuzzy inference system (FIS) whose membership function parameters are tuned (adjusted) using either a backpropagation algorithm alone or in combination with a least-squares type of method. This allows fuzzy systems to learn from the data they are modeling.
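The hybrid learning idea can be illustrated with a small sketch. The following hypothetical NumPy code is not the Fuzzy Logic Toolbox anfis function; it assumes a first-order Sugeno FIS with Gaussian membership functions on a grid partition and product firing strengths, and it implements only the least-squares half of the hybrid algorithm. The premise (membership function) parameters are held fixed, so the backpropagation pass that would tune them is omitted. All names and the toy data are illustrative.

```python
import itertools

import numpy as np


def gauss_mf(x, c, sigma):
    """Gaussian membership function exp(-(x - c)^2 / (2 * sigma^2))."""
    return np.exp(-((x - c) ** 2) / (2.0 * sigma ** 2))


def normalized_firing(X, centers, sigmas):
    """ANFIS layers 1-3: membership grades, rule firing strengths (product), normalisation.

    centers and sigmas have shape (n_inputs, n_mfs); one rule per grid combination of MFs.
    """
    n_in, n_mf = centers.shape
    grades = gauss_mf(X[:, :, None], centers[None], sigmas[None])  # (N, n_in, n_mf)
    rules = itertools.product(range(n_mf), repeat=n_in)
    w = np.stack(
        [np.prod([grades[:, i, m] for i, m in enumerate(rule)], axis=0) for rule in rules],
        axis=1,
    )  # (N, n_rules)
    return w / w.sum(axis=1, keepdims=True)


def design_matrix(X, w_bar):
    """ANFIS layer 4: rule i contributes w_bar_i * (p_i . x + r_i), linear in (p_i, r_i)."""
    X1 = np.hstack([X, np.ones((len(X), 1))])  # append 1 for the constant term r_i
    return (w_bar[:, :, None] * X1[:, None, :]).reshape(len(X), -1)


def fit_consequents(X, t, centers, sigmas):
    """Least-squares half of hybrid learning: premise parameters fixed, consequents solved."""
    phi = design_matrix(X, normalized_firing(X, centers, sigmas))
    theta, *_ = np.linalg.lstsq(phi, t, rcond=None)
    return theta


def anfis_predict(X, theta, centers, sigmas):
    """ANFIS layer 5: the output is the sum of all weighted rule outputs."""
    return design_matrix(X, normalized_firing(X, centers, sigmas)) @ theta


# Hypothetical usage: 2 inputs x 2 MFs -> 4 rules, 4 * (2 + 1) = 12 consequent parameters.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(40, 2))
t = 2.0 * X[:, 0] + 3.0 * X[:, 1] + 1.0
centers = np.array([[0.0, 1.0], [0.0, 1.0]])
sigmas = np.full((2, 2), 0.5)
theta = fit_consequents(X, t, centers, sigmas)
print("training MSE:", np.mean((anfis_predict(X, theta, centers, sigmas) - t) ** 2))
```

Because the rule outputs enter the overall output linearly, the consequent parameters have a closed-form least-squares solution once the membership functions are fixed, which is the main motivation for the hybrid method. Note that a grid partition grows exponentially: six inputs with two membership functions each already give 2^6 = 64 rules and 64 x 7 = 448 consequent parameters.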
The MATLAB Fuzzy Logic Toolbox function that accomplishes this membership function parameter adjustment is called anfis. ANFIS can be started either from the command line or from the graphical user interface; in our study we worked with the graphical user interface. As an initial step we load the data (training, testing, validation) using the appropriate radio buttons, and the loaded data is plotted in the plot region. We then either generate or load an initial FIS model and select the FIS parameter optimization method: backpropagation, or a mixture of backpropagation and least squares (the hybrid method). After this, the number of training epochs and the training error tolerance are chosen, and the data is trained by clicking the Train Now button. We can then view the FIS output versus the training, testing and checking data.

A plot of actual and predicted maintenance effort on the training data is shown in Figure 5.

Fig 5: Comparison between training and actual values

A plot of actual and predicted maintenance effort on the testing data is shown in Figure 6.

Fig 6: Comparison between testing and actual values

A plot of actual and predicted maintenance effort on the validation data is shown in Figure 7.

Fig 7: Comparison between checking and actual values

The MSE values observed for this system are listed in Table 6.

TABLE 6: RESULTS OBTAINED FOR ANFIS

| Data | No. of samples | MSE |
|---|---|---|
| Training | 17 | 4.48 |
| Testing | 4 | 15.65 |
| Validation | 4 | 23.72 |

8. THREATS TO VALIDITY
This section describes some of the limitations of the study. First, object-oriented classes are easy to analyze only while their complexity is below a threshold level; the difficulty of understanding them increases as their complexity increases.
Second, the approach works well for small to medium-scale projects, but MATLAB may raise an out-of-memory error for very large projects; for these, we need to ensure that sufficient free memory is available on the drive where MATLAB has been installed.

9. CONCLUSIONS
In this work we have compared three techniques (the Fitting Tool, the Time Series Tool and the Adaptive Neuro-Fuzzy Inference System) for predicting the maintenance effort (change) of a software system. The figures (Figures 2, 4, 5, 6 and 7) showing the difference between actual and predicted values, and the tables (Tables 4, 5 and 6) showing the mean square error obtained for the three techniques, show that the validation MSE of the network trained with ANFIS (23.72) is lower than that of the networks trained using NFTOOL (33.53) and NTSTOOL (349.53). The values predicted using ANFIS are therefore closer to the actual values than those from the other two methods (NFTOOL and NTSTOOL), and we conclude that ANFIS gives better results for prediction purposes. However, this research has been done on a small system, so the results need to be generalized by conducting a similar analysis on data from other large real-time systems. In future we plan to apply other paradigms beyond those used in this study and to perform validation on them.

REFERENCES
[1] R. Malhotra and A. Chug, "Software Maintainability Prediction using Machine Learning Algorithms," Software Engineering: An International Journal (SEIJ), Vol. XX, No. XX, 2012.
[2] S. Sharawat, "Software Maintainability Prediction using Neural Networks," International Journal of Engineering Research and Applications (IJERA), ISSN 2248-9622, www.ijera.com, Vol. 2, Issue 2, Mar-Apr 2012, pp. 750-755.
[3] W. Li and S. Henry, "Object-Oriented Metrics that Predict Maintainability," Journal of Systems and Software, vol. 23, pp. 111-122, 1993.
[4] J.-S. R. Jang, "ANFIS: Adaptive-Network-Based Fuzzy Inference System," IEEE Transactions on Systems, Man, and Cybernetics, vol. 23, no. 3, May/June 1993.
[5] S. Chidamber and C. Kemerer, "A Metrics Suite for Object Oriented Design," IEEE Transactions on Software Engineering, vol. 20, no. 6, pp. 476-493, 1994.
[6] W. Li and S. Henry, "Object-Oriented Metrics that Predict Maintainability," Journal of Systems and Software, vol. 23, no. 2, pp. 111-122, 1993.
[7] S. R. Chidamber and C. F. Kemerer, "Towards a Metrics Suite for Object-Oriented Design," Proceedings of OOPSLA'91, July 1991, pp. 197-211.
[8] K. K. Aggarwal, Y. Singh and J. K. Chhabra, "An Integrated Measure of Software Maintainability," Proceedings of the Reliability and Maintainability Symposium, IEEE, 2002.
[9] M. Thwin and T. Quah, "Application of neural networks for software quality prediction using object oriented metrics," Journal of Systems and Software, vol. 76, no. 2, pp. 147-156, 2005.
[10] A. Kaur, K. Kaur and R. Malhotra, "Soft Computing Approaches for Prediction of Software Maintenance Effort," International Journal of Computer Applications (0975-8887), Volume 1, No. 1680, 2010.
[11] Y. Zhou and H. Leung, "Predicting object-oriented software maintainability using multivariate adaptive regression splines," Journal of Systems and Software, vol. 80, no. 8, pp. 1349-1361, 2007.
[12] M. O. Elish and K. O. Elish, "Application of TreeNet in Predicting Object-Oriented Software Maintainability: A Comparative Study," European Conference on Software Maintenance and Reengineering (CSMR), IEEE, 2009, DOI 10.1109/CSMR.2009.57.
[13] P. K. Suri and B. Bhushan, "Simulator for Software Maintainability," IJCSNS International Journal of Computer Science and Network Security, Vol. 7, No. 11, November 2007.

ABOUT THE AUTHORS
Anuradha Chug is an Assistant Professor at the University School of Information and Communication Technology (USICT), Guru Gobind Singh Indraprastha University, New Delhi. Her areas of research interest are Software Engineering, Neural Networks, Analysis of Algorithms, Data Structures and Computer Networks. She is currently pursuing her doctorate at Delhi Technological University in the field of Software Maintenance under the guidance of Ms Ruchika Malhotra. She achieved the top rank in her M.Tech (IT) degree and was conferred the University Gold Medal in 2006 by GGS IPU. She previously acquired her Master's degree in Computer Science from Banasthali Vidyapith, Rajasthan, in 1993, and also earned a PG Diploma in Marketing Management from IGNOU in 1995. She has almost 19 years of teaching experience as faculty and in administration at various educational institutions in India. She has also presented a number of research papers in national/international seminars, conferences and research journals. She can be contacted by e-mail at a_chug@yahoo.co.in

Ankita Sawhney is pursuing an M.Tech (Computer Science) at the University School of Information and Communication Technology (USICT), Guru Gobind Singh Indraprastha University, New Delhi. Her areas of research interest are Software Engineering, Neural Networks and Software Testing. She previously acquired her Bachelor's degree (B.Tech) in Information Technology from Bharati Vidyapeeth's College of Engineering, Guru Gobind Singh Indraprastha University, New Delhi, where she secured 1st position in the IT department. In addition, she was adjudged a scholar in both scholastic and non-scholastic subjects in her school days and has received proficiency awards.
She can be contacted by e-mail at ankitasawhney1@gmail.com