P006 NON-UNIFORM UPLAYERING OF HETEROGENEOUS RESERVOIR MODELS


Ahmed Sharif

Roxar, Gamle Forusvei 17, N-4065 Stavanger, Norway

Abstract

A method for non-uniform uplayering of heterogeneous geological models is proposed. The method generates a layering scheme by which coarse-scale simulation models preserve the impact of reservoir heterogeneity on fluid flow and production response. The uplayering process involves generating the streamline-based dynamic response of the geological models. The response data were then analysed and processed to establish a set of connectivity-based attribute logs. Multivariate analysis and pattern recognition techniques were finally applied to produce a layering scheme for coarse-scale simulation. The performance of the proposed method was investigated on a set of realistic field-scale reservoir models. The results indicate that flow-based non-uniform uplayering can improve the performance of coarse-scale simulation models compared with the simple uniform uplayering approach.

1 Introduction

In reservoir characterisation, geologic models are now routinely built on high-resolution grids of the order of $10^6$ to $10^7$ cells, whilst production performance and the underlying reservoir flow behaviour are, for practical reasons, modelled on lower-resolution grids of the order of $10^4$ to $10^5$ blocks. This disparity in scale calls for upscaling, the process by which large-scale properties for the coarse model are computed. A major challenge for practising reservoir engineers is to design a coarse-scale representation of the geologic model that accurately captures the geological features that may dictate reservoir fluid flow, whilst keeping the simulation model computationally efficient enough to permit multiple runs. Hence, the question of scale and the estimation of accurate large-scale reservoir properties have attracted much attention. Various methods, ranging from robust flow-based numerical algorithms to more exotic analytical approaches and differing in both complexity and accuracy, have been developed to deal with this issue.

The ability of an upscaling process to produce large-scale properties that capture the flow behaviour of fine-scale models depends upon: 1) proper design and construction of the coarse-scale grid, 2) correct sampling of the fine-scale properties, and 3) application of a relevant and accurate averaging method. This work presents a method that can be used to design coarse-scale simulation grids based on the dynamic behaviour of fine-scale models. The work focuses exclusively on uplayering, that is, the design of the simulation layering. This is not a particularly far-fetched strategy: from a depositional perspective, the properties of heterogeneous sedimentary rocks usually exhibit higher variability in the vertical direction than in the horizontal directions. Geologic models of such heterogeneous reservoirs are therefore bound to have a large number of high-resolution fine-scale layers. Moreover, with current computational resources, flow simulation on coarse-scale grids whose resolution in the x- and y-directions is similar to that of the geologic model is relatively feasible. In the vertical direction, however, some form of uplayering is often necessary. In practice, the representation of fine-scale layering in reservoir simulation can conveniently be tackled by one of three approaches: 1) uniform uplayering of the fine-scale model based on a preconceived number of simulation layers, 2) preliminary uniform uplayering followed by selective splitting of certain coarse-scale layers, and 3) direct use of the geologic-scale layering. The first approach, despite being fairly common, can be erroneous owing to the risk of indiscriminately lumping layers with highly distinctive hydraulic responses. The second is an attempt to preserve certain important features of the geologic model, whereas the last approach is desirable but computationally prohibitive unless the geologic model is relatively small.

9th European Conference on the Mathematics of Oil Recovery − Cannes, France, 30 August - 2 September 2004


A number of approaches have been proposed for designing an uplayering that, on the one hand, preserves much of the detail of the geologic model and, on the other, keeps the simulation model computationally efficient. An attempt to tackle this problem was probably first presented by Testerman[1], who proposed a contrast-seeking statistical algorithm that uses permeability as a guide. The basic idea of that approach was to develop a reservoir zonation such that the variance within individual zones is minimised while the variance between zones is maximised. Among the more recent approaches is an algorithm presented by Stern and Dowson[2] that was devised to produce an optimal grouping of fine-scale layers based on breakthrough time and flux. Li and Beckner[3] presented an optimisation algorithm that generates the simulation layering by minimising the discrepancy between selected properties of the geologic and simulation models. Durlofsky et al.[4] proposed a flow-based uplayering technique in which layer averages of the fine-scale flux are used to guide the grouping of fine-scale layers. Younis and Caers[5] proposed a heterogeneity-preserving procedure that first identifies the spatially connected geologic bodies that mainly control reservoir flow behaviour.

This paper presents an uplayering procedure that makes use of a single-phase flow solution on the 3D fine-scale model and streamline-based flow characterisation. Although the proper choice of an uplayering property (e.g. permeability or flow rate) remains essential, neither grouping (or segmentation) of layer averages nor explicit identification of hydraulic bodies within the flow domain is required. Instead, streamline solutions are used to generate a set of connectivity-based attribute logs that reveal regional profiles of the field-wide hydraulic characteristics. Streamline solutions offer an efficient approach to fluid flow modelling and thereby a powerful depiction of the dynamic connectivity of the reservoir at the fine geologic scale. With the fine-scale total flow rate serving as the uplayering property, we draw on developments in feature extraction and pattern recognition to generate the coarse-scale layering for simulation models.

Through its reliance on a streamline-based description of regional connectivity, the approach advocated here demonstrates the feasibility of a spatially correlatable regional layering, recognisable from log deflections and from the variation of response profiles brought about by the underlying reservoir heterogeneity. It is worth noting, however, that the pattern recognition approach identifies an appropriate layering solely from the similarity of the response profiles of the fine-scale model. In plain terms, the pattern recognition algorithm does not inherently grasp the underlying cause-and-effect relationship between reservoir response and rock properties. To deal with this weakness, the algorithm was trained with model-related background knowledge that supervises it throughout the uplayering process.

The uplayering obtained with the proposed method was tested by carrying out numerical two-phase flow simulations, and the performance of the resulting model was compared with that of its parent fine-scale model as well as with a competing, uniformly uplayered model. Although no claim is made here to have found a general solution to the uplayering problem, the approach highlighted here (one of several currently under investigation) produced a layering design that outperformed the uniformly layered coarse model.

The paper is organised as follows. Section 2 describes the reservoir model and the response measure, together with the relevant concepts in streamline simulation and the generation of connectivity-based response logs. Section 3 introduces the feature extraction method that was applied to pre-process the response data prior to pattern recognition. The feature extraction operation helps to 'de-noise' the response data by reducing intercorrelations between response logs, so that the pattern recognition algorithm focuses only on the intrinsic features present in the data. Section 4 presents the framework of the pattern recognition technique and the modelling of the uplayering. The performance of the proposed uplayering method is then demonstrated in Section 5 through numerical waterflood simulations. Finally, further discussion and conclusions are given in Section 6.

2 Reservoir Response Measure

The proposed uplayering approach requires flow modelling on the fine grid and the generation of streamlines. For this purpose, a streamline simulator in RMS was used to solve the single-phase flow equation on the full 3D fine-scale grid, with boundary conditions defined by injection and production wells. The reservoir model was populated with stochastically modelled permeability and porosity. Fig.[1] shows a snapshot of the permeability field with streamlines. The fine-scale data relevant to the matter at hand are the flow rate (obtained from pressure via Darcy's law), time-of-flight, streamlines, and the volumes drained or flooded by individual producers and injectors.

Figure 1: Permeability field and streamlines for the fine-layered reservoir model. Ten injectors and nine producers were used to generate fine-scale …

The time-of-flight, designated by $\tau(x)$, is a central parameter in the proposed uplayering procedure. By definition[6],

$$\tau(x) = \int_0^{s} \frac{\phi}{|u|}\, d\xi \qquad (1)$$

where $u$ is the total velocity, $\phi$ is porosity and $\xi$ is the distance along the streamline. The time-of-flight is thus the travel time of a neutral tracer moving along a streamline from the injection well (where $\tau = 0$) to a distance $s$ along its trajectory. In the context of this work, the flexibility of the time-of-flight in tracking fluid movement is essential to the proposed approach. For instance, by selecting a certain time-of-flight, $\tau^*$, the area or volume of the region enclosed by the envelope $\tau(x) \le \tau^*$ can be identified. For an injector-producer pair, the time-of-flight increases from zero at the injection well to some maximum (post-breakthrough) value. Hence, the swept volume can easily be calculated for various levels of $\tau^*$. To this end, let $D_k$ designate the pairwise connected region in layer $k$ for which $\tau(x)$ is less than or equal to some prescribed value $\tau^*$. The swept volume in physical space is then given by

$$V_k = \int_{D_k} \Theta(x - x')\, dx \qquad (2)$$

where $\Theta$ is a step function equal to unity within $D_k$. Following this solution, the average flow rate through each layer in $D_k$ was calculated and a response log was generated for each connected well pair. In the 3D fine-scale grid, the volume elements in $D_k$ were determined using an indicator parameter that first selects the relevant connected pair of wells and then filters out cells whose time-of-flight exceeds $\tau^*$. The choice of $\tau^*$ is largely arbitrary, and different values may lead to different response profiles. A good initial estimate can be obtained by analysing the cumulative production of the individual producers connected to a given injection well. Fig.[2] presents the flow rate logs for 6 connected pairs (out of 30 in the model) in this study. Despite the complex and seemingly erratic representation of local heterogeneity in the individual response logs, the presence of layering can nonetheless be detected. The following sections present techniques that systematically detect similarity in the response logs.
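The time-of-flight bookkeeping behind Eqs.[1] and [2] can be sketched in a few lines. The NumPy sketch below is illustrative only and is not the RMS implementation: it assumes a discretised streamline (segment lengths `dxi`, with porosity and speed per segment) and, for one already-selected connected well pair, per-cell arrays of fine-layer index, time-of-flight, total flow rate and bulk volume. The function names are hypothetical. Eq.[1] is approximated by a cumulative sum, and the indicator $\tau \le \tau^*$ selects the cells of $D_k$, whose volume and mean flow rate are then accumulated per layer.

```python
import numpy as np

def time_of_flight(dxi, phi, speed):
    """Discrete Eq. (1): tau at the end of each streamline segment,
    tau_k = sum_{j<=k} phi_j * dxi_j / |u_j|, with tau = 0 at the injector."""
    dxi, phi, speed = (np.asarray(a, dtype=float) for a in (dxi, phi, speed))
    return np.concatenate(([0.0], np.cumsum(phi * dxi / speed)))

def layer_response(layer, tau, rate, cell_volume, tau_star, n_layers):
    """For the cells of one connected well pair, return the per-layer swept
    volume V_k (discrete Eq. (2)) and the mean flow rate over cells with
    tau <= tau_star, i.e. the cells of the region D_k."""
    layer = np.asarray(layer, dtype=int)
    tau = np.asarray(tau, dtype=float)
    rate = np.asarray(rate, dtype=float)
    cell_volume = np.asarray(cell_volume, dtype=float)

    inside = tau <= tau_star                    # indicator: cell belongs to D_k
    k = layer[inside]
    volume = np.bincount(k, weights=cell_volume[inside], minlength=n_layers)
    rate_sum = np.bincount(k, weights=rate[inside], minlength=n_layers)
    count = np.bincount(k, minlength=n_layers)

    mean_rate = np.zeros(n_layers)              # layers with no swept cells stay at 0
    np.divide(rate_sum, count, out=mean_rate, where=count > 0)
    return volume, mean_rate
```

Under these assumptions, stacking the `mean_rate` vectors of all connected pairs column by column would give an $m \times n$ response matrix of the kind used in Section 3.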

3 Extraction of Key Response Features

The response data for the uplayering constitute a multivariate data space defined by $n$ logs and $m$ layers. Let $X \in \mathbb{R}^{m \times n}$ designate the response matrix, in which the elements of the $j$th column are the values of the total flow rate for layers $i = 1, \dots, m$. Furthermore, let $F = \{x_j \in \mathbb{R}^m \mid j = 1, \dots, n\}$ designate the data set in feature space. Through some functional mapping, we now apply a feature extraction technique that captures the essential information contained in $X$ by means of a $p$-dimensional subspace. The feature extraction operation is primarily intended to 'de-noise' the data by reducing intercorrelations between response logs, so that the subsequent pattern recognition algorithm focuses only on the intrinsic features present in the data. The functional mapping can be accomplished by classical subspace techniques, which map the original data onto the axes of a new frame in which a smaller number of orthogonal vectors systematically absorb most of the variance. An effective mathematical tool in the context of this work is the singular value decomposition (SVD). The SVD of the response matrix, with $m > n$, is the factorisation[7]

$$X = U \Sigma V^T = \sum_{j=1}^{n} \sigma_j u_j v_j^T \qquad (3)$$

where $U \in \mathbb{R}^{m \times n}$ has orthonormal columns, the left singular vectors $u_j$ (eigenvectors of $XX^T$), $V \in \mathbb{R}^{n \times n}$ is an orthogonal matrix whose columns are the right singular vectors $v_j$ (eigenvectors of $X^T X$), and $\Sigma = \mathrm{diag}(\sigma_1, \dots, \sigma_n)$, whose entries $\sigma_j$ (arranged in the order $\sigma_1 \ge \dots \ge \sigma_n \ge 0$) are the singular values of $X$. The magnitude of $\sigma_j^2$ (i.e., the eigenvalues of $X^T X$) reveals the proportion of variance described by the individual factors. Thus, the total variance is the sum $\mathrm{trace}(\Sigma^T \Sigma) = \sum_{j=1}^{n} \sigma_j^2$.

Figure 2: Profiles of total flow rate for a set of connected well pairs, plotted against layer number. The individual logs are scaled by dividing each log by its maximum value.

Of particular interest here is the column projection $XV = U\Sigma$, which can be exploited to provide a straightforward analysis of the pattern in the original data through successive partitioning of $\Sigma$ and $U$. Since the singular values are ordered, it follows from the summation on the right-hand side of Eq.[3] that the feature extraction process can be viewed as a partition along some $p$th column:

$$X = \begin{pmatrix} \hat{U} & \tilde{U} \end{pmatrix} \begin{pmatrix} \hat{\Sigma} & 0 \\ 0 & \tilde{\Sigma} \end{pmatrix} \begin{pmatrix} \hat{V} & \tilde{V} \end{pmatrix}^T \qquad (4)$$

where $\hat{\Sigma}$ contains the first $p$ singular values and $\tilde{\Sigma}$ contains the remaining $n - p$ singular values. Since the total variance is $\sum_{j=1}^{n} \sigma_j^2$, this partition relates the dimension $p$ of the desired subspace to the cost (in variance) of truncating the remaining $n - p$ components. In geological data analysis, a number of approaches[8] are employed to decide the dimension of the desired subspace. A common and simple approach is to specify the amount of variance loss that can be afforded in a given analysis. Denoting by $\alpha$ the percentage of total variance lost through the shrinkage of the data space, the dimension of the feature subspace was obtained from

$$\alpha = 100 \left( 1 - \frac{\sum_{j=1}^{p} \sigma_j^2}{\sum_{j=1}^{n} \sigma_j^2} \right) \qquad (5)$$

Fig.[3] presents the percentage of variance explained by retaining $p$ out of $n = 28$ factors. Using a variance loss of $\alpha = 5\%$, the feature space was condensed to 10 components. The resulting reduced model was then passed to the pattern recognition algorithm, which generates the desired uplayering.
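The truncation rule of Eqs.[3] to [5] can be illustrated with NumPy's SVD. This is a minimal sketch under stated assumptions, not the code used in the study: `feature_subspace` is a hypothetical name, and $X$ is used as given, without centring or scaling, because Eq.[5] is written directly in terms of the singular values of $X$. The function returns the smallest $p$ whose variance loss does not exceed $\alpha$, together with the column projection $\hat{U}\hat{\Sigma} = X\hat{V}$.

```python
import numpy as np

def feature_subspace(X, alpha=5.0):
    """Truncated SVD of the m x n response matrix X (Eqs. (3)-(5)).

    Returns the smallest p whose variance loss (Eq. (5), in percent) does not
    exceed alpha, and the m x p column projection U_hat @ Sigma_hat = X @ V_hat.
    Assumption: X is not centred or scaled before the decomposition."""
    U, s, Vt = np.linalg.svd(np.asarray(X, dtype=float), full_matrices=False)
    var = s ** 2                                       # sigma_j^2, descending
    loss = 100.0 * (1.0 - np.cumsum(var) / var.sum())  # alpha(p) for p = 1..n
    loss[-1] = 0.0                                     # keeping all n factors loses nothing
    p = int(np.argmax(loss <= alpha)) + 1              # first p within the budget
    return p, U[:, :p] * s[:p]                         # scale columns of U_hat by sigma_j
```

Because the singular values come back in descending order, the cumulative sum is exactly the numerator of Eq.[5] for $p = 1, \dots, n$, so scanning it once is enough to find $p$.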

4 Uplayering by Pattern Recognition

Using the results of the preceding feature extraction procedure, let $X \in \mathbb{R}^{m \times p}$ now designate the column projection onto the feature subspace. Furthermore, let $P = \{x_i \in \mathbb{R}^p \mid i = 1, \dots, m\}$ denote the pattern space of the data.

Figure 3: Percentage variance explained vs the number of factors, $p$, retained out of the total $n = 28$ (vertical axis: variance absorbed (%); horizontal axis: $p/n$; the $\alpha = 5\%$ level is marked). Discarding the last 20 components costs a 5% loss of total variance, whereas retaining the first two factors alone costs 50% of the variance.

Uplayering by pattern recognition involves the application of cluster analysis that groups the pattern data along the layering dimension, $i = 1, \dots, m$, such that pattern vectors that exhibit similarity tend to fall into the same class or cluster, whereas those that are relatively distinct tend to separate into different clusters. Responses were compared using a distance metric that measures the similarity (or rather dissimilarity) between patterns. In the earth sciences, cluster analysis techniques have been widely used in the segmentation of well logs[10,11,13] and the zonation of sedimentary sequences[9,11,12]. In this work, the k-means clustering method[14], an iterative partitional clustering technique, was used to generate a set of pairwise disjoint clusters or classes that ultimately define the desired coarse-scale layering. The k-means method operates as an analysis of variance in reverse: it starts with the selection of centres for a desired number of clusters, $L$, in $P$, and returns a set of clusters, $C = \{C_\ell \mid \ell = 1, \dots, L\}$, that partition the pattern data such that some criterion function (e.g., squared error) is optimised. In the Euclidean formulation, the regular (i.e., unsupervised) k-means algorithm minimises the least-squares functional

$$J = \sum_{\ell=1}^{L} \sum_{x_i \in C_\ell} \| x_i - m_\ell \|^2 \qquad (6)$$

where $\| x_i - m_\ell \|$ is a dissimilarity measure (distortion) between the pattern $x_i$ and the centroid $m_\ell$ of cluster $\ell$. The algorithm can be summarised as follows:

1. Initialise the clustering process by selecting $L$ cluster centroids.

2. Calculate the distance to the cluster centroids and assign each pattern $x_i \in P$ to its closest cluster centre.

3. Update the cluster centroids using the current cluster memberships.

4. If a prescribed convergence criterion is not met, go to step 2.

Typical convergence criteria are a minimal decrease in dispersion, or no further reassignment of patterns to new cluster centres (i.e., a stable partition).
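Steps 1 to 4 describe the batch (Lloyd-style) form of k-means. The NumPy sketch below is a minimal illustration, not the implementation used in this study: the initial centroids of step 1 are passed in explicitly (standing in for the marker-based initialisation described next), and convergence is declared when the partition stops changing. The function name `kmeans` is hypothetical.

```python
import numpy as np

def kmeans(P, centroids, max_iter=100):
    """Regular (unsupervised) k-means on the m x p pattern matrix P.

    centroids: L x p initial centres (step 1). Returns the labels, the
    final centroids and the distortion J of Eq. (6)."""
    P = np.asarray(P, dtype=float)
    m = np.array(centroids, dtype=float)          # copy, updated in place
    labels = None
    for _ in range(max_iter):
        # Step 2: squared Euclidean distance of every pattern to every centroid
        d2 = ((P[:, None, :] - m[None, :, :]) ** 2).sum(axis=2)
        new_labels = d2.argmin(axis=1)
        # Step 4: stop once the partition is stable
        if labels is not None and np.array_equal(new_labels, labels):
            break
        labels = new_labels
        # Step 3: move each centroid to the mean of its current members
        for ell in range(len(m)):
            members = labels == ell
            if members.any():                     # empty clusters keep their centre
                m[ell] = P[members].mean(axis=0)
    J = float(((P - m[labels]) ** 2).sum())       # Eq. (6)
    return labels, m, J
```

Note that plain k-means places no requirement on the layers assigned to one cluster being vertically adjacent; the stratigraphic (adjacency) constraints introduced below are one way of steering the clustering towards geologically admissible groupings.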

The regular k−means algorithm, as presented

above, is not directly useful in this context due to

the obvious lack of supervision. A supervised version of the algorithm was therefore applied by incorporating model-related background knowledge

into the clustering procedure. The background

knowledge was presented to the algorithm as constraints that aid initialisation (step 1) and assignment of patterns to their closest cluster centres

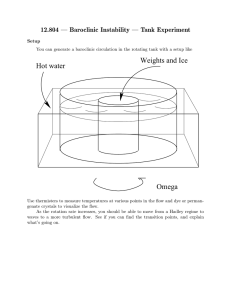

(step 2). First, a model-based initialisation was estimated by generating field response markers, illustrated in Fig.[4], that were obtained through lateral correlation of response shape (peaks, troughs,

levels, ramps etc.) attributes[15] .

The shape attributes were in turn defined by

through the first and second derivatives obtained

by means of smooth differentiation filters[16] of

each curve, and the shape attributes were thereby

identified based on interpretation of the slope, inflexion, concavity and curvature along each trace.
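As an illustration of how sign changes in the slope of a smoothed log can yield peak and trough markers, the sketch below substitutes Gaussian smoothing followed by finite differences for the smoothed differentiation filters of ref.[16]. The function names, the smoothing width `sigma` and the two-label scheme are assumptions made for illustration; the classification used in the paper (peaks, troughs, levels, ramps, spikes, as in Fig.[4]) is considerably richer.

```python
import numpy as np

def smoothed_derivatives(y, sigma=1.5):
    """First and second derivatives of a log after Gaussian smoothing
    (a stand-in for the smoothed differentiation filters of ref. [16])."""
    r = int(np.ceil(3 * sigma))                   # kernel half-width
    x = np.arange(-r, r + 1, dtype=float)
    g = np.exp(-0.5 * (x / sigma) ** 2)
    g /= g.sum()                                  # unit-gain smoothing kernel
    padded = np.pad(np.asarray(y, dtype=float), r, mode="edge")
    ys = np.convolve(padded, g, mode="valid")     # same length as y
    d1 = np.gradient(ys)                          # slope
    d2 = np.gradient(d1)                          # concavity / curvature
    return d1, d2

def shape_markers(y, sigma=1.5):
    """Mark layers where the smoothed slope changes sign:
    'peak' for + to -, 'trough' for - to +."""
    d1, _ = smoothed_derivatives(y, sigma)
    markers = []
    for i in range(1, len(d1)):
        if d1[i - 1] > 0 >= d1[i]:
            markers.append((i, "peak"))
        elif d1[i - 1] < 0 <= d1[i]:
            markers.append((i, "trough"))
    return markers
```

The second derivative is returned so that concavity and curvature could be used to refine the labels (for instance, separating ramps from levels), which this sketch does not attempt.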

The other set of constraints was designed to supervise step 2. The scheme involved generating a framework of pairwise 'must-link' and 'cannot-link' constraints (each with an associated cost of violation) between patterns in the data set[17]. The cost of having a linkage (or no linkage) was derived from facies (or permeability rock type) information, stratigraphic constraints and the optional preservation of high (or low) flow zones.

Penalties of graded severity (i.e., proportional to the 'seriousness' of the violation) were used to enforce the imposed constraints. The level of the penalty was selected to reflect how far apart a pair of pattern vectors are. The cost of violating a constraint for patterns that should (or should not) link was set higher than the cost of violating a constraint for patterns that are far apart. Moreover, grouping patterns that originate from the same rock type was given a lower cost than grouping patterns that differ in rock type but are fairly similar in response. Furthermore, stricter penalties were imposed on violations of the stratigraphic constraints (i.e., adjacency constraints) and on the preservation of distinctive high/low flow zones. For instance, the penalty for grouping a pair of patterns $(x_i, x_j)$ whose layers are separated by a specified surface was set high, whereas the presence of a hydraulically distinctive pattern vector $x_i$ in an otherwise predominantly homogeneous body entails the preservation (i.e., maximum penalty) of $x_i$ at the cost of rejecting the pairwise groupings $(x_{i-1}, x_i)$ and $(x_i, x_{i+1})$.

Figure 4: Illustration of curve shape analysis and marker identification for cluster initialisation (marked shapes include peaks and troughs of various sizes, levels, ramps and a positive spike).

Using such model-related background information, the linkage conditions implied by the constraints were used to generate the set $\mathcal{L}$ of must-link pairs $(x_i, x_j) \in \mathcal{L}$ and the set $\bar{\mathcal{L}}$ of cannot-link pairs $(x_i, x_j) \in \bar{\mathcal{L}}$, with associated weights and penalties. Following Basu et al.[18,19], a supervised version of the k-means algorithm was set up, which combines the objective function in Eq.[6] with the cost of violating the model constraints by means of the following optimisation function

$$J = \sum_{\ell=1}^{L} \sum_{x_i \in C_\ell} \| x_i - m_\ell \|^2 + \sum_{(x_i, x_j) \in \mathcal{L}} \pi(x_i, x_j) + \sum_{(x_i, x_j) \in \bar{\mathcal{L}}} \bar{\pi}(x_i, x_j) \qquad (7)$$

where $\pi$ and $\bar{\pi}$ are functionals that quantify the overall cost of violating the must-link and cannot-link constraints, respectively, between two patterns $x_i$ and $x_j$.
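Eq.[7] leaves the penalty functionals $\pi$ and $\bar{\pi}$ unspecified in closed form. The sketch below assumes the simplest choice, in the spirit of the pairwise-constrained formulations cited above: a fixed weight $w$ is charged whenever a must-link pair is split or a cannot-link pair is merged. It evaluates Eq.[7] and performs one greedy, constraint-aware pass of the assignment step (step 2). The constraint format `(i, j, w)` and both function names are hypothetical; the graded, distance-dependent penalties described above would replace the constant weights.

```python
import numpy as np

def constrained_objective(P, labels, m, must, cannot):
    """Eq. (7) with constant penalties: each constraint is (i, j, w); a
    must-link costs w if x_i and x_j are split, a cannot-link costs w if
    they are merged."""
    P, m = np.asarray(P, dtype=float), np.asarray(m, dtype=float)
    labels = np.asarray(labels, dtype=int)
    J = float(((P - m[labels]) ** 2).sum())       # distortion term, as in Eq. (6)
    J += sum(w for i, j, w in must if labels[i] != labels[j])
    J += sum(w for i, j, w in cannot if labels[i] == labels[j])
    return J

def constrained_assign(P, m, labels, must, cannot):
    """One greedy pass of step 2: each pattern, in turn, joins the cluster that
    minimises its distortion plus the penalties of the constraints it would
    violate, given the current labels of its constraint partners."""
    P, m = np.asarray(P, dtype=float), np.asarray(m, dtype=float)
    labels = np.array(labels, dtype=int)          # copy, updated in place
    clusters = np.arange(len(m))
    for i in range(len(P)):
        cost = ((P[i] - m) ** 2).sum(axis=1)
        for a, b, w in must:
            if i in (a, b):
                partner = b if a == i else a
                cost = cost + w * (clusters != labels[partner])
        for a, b, w in cannot:
            if i in (a, b):
                partner = b if a == i else a
                cost = cost + w * (clusters == labels[partner])
        labels[i] = int(cost.argmin())
    return labels
```

Alternating such an assignment pass with the centroid update of step 3 gives a constrained variant of the loop sketched earlier; with a large enough weight, a must-link pair is kept together even when one member is closer to another centroid.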

The above clustering algorithm was employed to design the uplayering of a 20-layer coarse-scale flow simulation model. The number of layers was chosen beforehand, based on a streamline breakthrough-time analysis of an arbitrary uniform coarse model (see Fig.[5]).

Figure 5: Change of relative breakthrough time with the number of fine-scale layers lumped in a coarse layer (vertical axis: rate of change in relative breakthrough; horizontal axis: number of fine layers in a coarse layer).

By increasing the number of fine-scale layers assigned to a coarse layer from 2 to 20, a uniform coarse model with a 1:5 layering contrast (i.e., 20 layers) was created. Finally, the corresponding non-uniform model was generated by means of the clustering algorithm described above. The levels of fine-scale layer lumping in this non-uniform model are {6, 7, 2, 4, 5, 3, 9, 10, 2, 3} for the upper 10 layers and {2, 6, 7, 9, 2, 2, 2, 8, 5, 6} for the lower 10 layers. Here 6, 7, … are the numbers of fine-scale layers that constitute layers 1, 2, … of the coarse-scale model; together the 20 coarse layers account for all 100 fine-scale layers.
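The lumping sequences above fully define the non-uniform grid. The short sketch below (function names hypothetical) turns them into the fine-layer range covered by each coarse layer and into a fine-to-coarse index map, assuming the 100 fine layers are numbered 1 to 100 from the top; the uniform 1:5 model corresponds to a lumping sequence of twenty 5s.

```python
import numpy as np

UPPER = [6, 7, 2, 4, 5, 3, 9, 10, 2, 3]   # coarse layers 1-10
LOWER = [2, 6, 7, 9, 2, 2, 2, 8, 5, 6]    # coarse layers 11-20
NON_UNIFORM = UPPER + LOWER
UNIFORM = [5] * 20                        # 1:5 uniform uplayering

def coarse_layer_ranges(lumping):
    """(first, last) fine layer, 1-based and inclusive, for each coarse layer."""
    ends = np.cumsum(lumping)
    starts = ends - np.asarray(lumping) + 1
    return list(zip(starts.tolist(), ends.tolist()))

def fine_to_coarse(lumping):
    """Coarse-layer number (1-based) of every fine layer, top to bottom."""
    return np.repeat(np.arange(1, len(lumping) + 1), lumping)

assert sum(NON_UNIFORM) == sum(UNIFORM) == 100
print(coarse_layer_ranges(NON_UNIFORM)[:3])   # [(1, 6), (7, 13), (14, 15)]
```

A map of this kind is what an upscaling step would need in order to decide which fine cells are averaged into each coarse cell.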


5 Numerical Simulation Results

The performance of the proposed uplayering method was evaluated using RMSflowsim. A set of waterflood simulations was carried out using the following three simulation grid models: 1) a reference fine-scale model with 100 layers, 2) a corresponding non-uniformly uplayered 20-layer coarse model, and 3) a uniformly uplayered 20-layer coarse simulation model. The coarse-scale permeability fields were obtained by a flow-based diagonal-tensor upscaling algorithm that employs sealing (no-flow) boundary conditions. The reservoir fluid is modelled as an undersaturated black oil with viscosity ratio $\mu_o/\mu_w = 3$, capillary pressure is neglected, and relative permeabilities are described by the Corey-type parameterisation $k_{rw} = S^{3.75}$ and $k_{ro} = (1 - S)^{2.75}$, with an initial water saturation of $S_{wi} = 0.2$ and a residual oil saturation of $S_{or} = 0.1$. Fig.[6] presents the fraction of the target oil rate produced versus pore volumes of water injected for the three cases. As can be seen, for $t_D \le 1.0$ the non-uniform case not only outperforms the uniform case but also adequately reproduces the early production response of the fine model. This is further illustrated by the comparison of average reservoir pressure in Fig.[7]. The improvement is mainly due to the ability of the non-uniform model to capture early fluid movement and the rapid advance of injected water along high-permeability streaks. At late post-breakthrough times, however, the injection-driven build-up has more or less stabilised and gravity has had sufficient time to stabilise the slowly moving water front, an effect that the non-uniform model apparently could not reproduce satisfactorily.

Figure 6: Fraction of target oil rate produced, $q_o/q_o^{\mathrm{target}}$, vs pore volume water injected, $t_D$, for the fine-scale reference model (dotted), the uniformly uplayered model (dashed) and the non-uniformly uplayered model.

Figure 7: Average reservoir pressure (as a fraction of initial pressure, $\bar{p}/p_i$) vs pore volume water injected, $t_D$, for the fine-scale reference model (dotted), the uniformly uplayered model (dashed) and the non-uniformly uplayered model.

6 Discussion and Conclusions

The uplayering method presented here was intended to examine the application of streamline-based connectivity information in simulation grid design. In spite of the high level of rock heterogeneity, the non-uniform uplayering approach performed well, reproducing the early-time ($t_D \le 1.0$) response of the fine-scale model more closely than the uniform approach. Further investigation of this issue is planned, and several enhancements of the analysis methodology are currently under consideration. Nevertheless, generating simulation model layering through a quantitative analysis of streamline-based regional connectivity response data was found to be promising.


Nomenclature

$k$ − Permeability
$\phi$ − Porosity
$t$ − Time
$t_D$ − Pore volume injected
$q_o$ − Oil production rate
$q_o^{\mathrm{target}}$ − Target oil production rate
$p_i$, $\bar{p}$ − Reservoir pressure (initial, average)
$\tau$ − Time of flight
$\Theta$ − Unit (step) function
$V$ − Volume
$u$ − Flow rate
$U$, $\Sigma$, $V$ − SVD matrices
$X$ − Response matrix
$x$ − Data vector
$\alpha$ − Proportion of variance
$\mathcal{L}$, $\bar{\mathcal{L}}$ − Pattern linkage sets
$\pi$, $\bar{\pi}$ − Cost function for linkage

References

1. Testerman, J.D.: "A Statistical Reservoir-Zonation Technique," JPT (August 1962) 14, 889-893.

2. Stern, D. & Dowson, A.G.: "A Technique for Generating Reservoir Simulation Grids to Preserve Geologic Heterogeneity," paper SPE 51942 presented at the 1999 SPE Reservoir Simulation Symposium, Houston, Texas, 14-17 Feb. 1999.

3. Li, D. & Beckner, B.: "Optimal Uplayering for Scaleup of Multimillion-Cell Geologic Models," paper SPE 62927 presented at the SPE Annual Technical Conference and Exhibition, Dallas, Texas, 1-4 Oct. 2000.

4. Durlofsky, L.J., Jones, R.C. & Milliken, W.J.: "A Non-Uniform Coarsening Approach for the Scale-Up of Displacement Processes in Heterogeneous Porous Media," Advances in Water Resources (1995) 20, 335-347.

5. Younis, R.M. & Caers, J.: "A Method for Static-Based Up-Gridding," ECMOR VIII, Freiburg, Germany, 3-6 Sept. 2002.

6. King, M.J. & Datta-Gupta, A.: "Streamline Simulation: A Current Perspective," In Situ (1998) 22(1), 91-140.

7. Golub, G.H. & Van Loan, C.F.: Matrix Computations, Johns Hopkins University Press, Baltimore, 1996.

8. Swan, A.R.H. & Sandilands, M.: Introduction to Geological Data Analysis, Blackwell Science, Victoria, Australia, 1995.

9. Webster, R.: "Optimally Partitioning Soil Transects," Journal of Soil Science (1978) 29, 388-402.

10. Mehta, C.H., Radhakrishnan, S. & Srikanth, G.: "Segmentation of Well Logs by Maximum-Likelihood Estimation," Mathematical Geology (1990) 22, 7, 853-869.

11. Gill, D., Shomrony, A. & Fligelman, H.: "Numerical Zonation of Log Suites by Adjacency-Constrained Multivariate Clustering," AAPG Bulletin (1990) 77, 10, 1781-1791.

12. Olea, R.A.: "Expert System for Automated Correlation and Interpretation of Wireline Logs," Mathematical Geology (1994) 26, 8, 879-897.

13. Doveton, J.D.: Geologic Log Analysis Using Computer Methods, Computer Applications in Geology, Vol. 2, AAPG, Tulsa, 1994.

14. MacQueen, J.: "Some Methods for Classification and Analysis of Multivariate Observations," in Proc. 5th Berkeley Symp. on Math. Stat. and Probability, Berkeley, CA (1967) Vol. 1, 281-297.

15. Vincent, P., Gartner, J. & Attali, G.: "An Approach to Detailed Dip Determination Using Correlation by Pattern Recognition," J. Petroleum Tech. (Feb. 1979) 232-240.

16. Meer, P. & Weiss, I.: "Smoothed Differentiation Filters for Images," J. Visual Comm. and Image Representation (1992) 3, 58-72.

17. Wagstaff, K., Cardie, C., Rogers, S. & Schroedl, S.: "Constrained K-means Clustering with Background Knowledge," in Proc. 18th Int. Conf. Machine Learning, MA (2001) 577-584.

18. Basu, S., Banerjee, A. & Mooney, R.J.: "Semi-Supervised Clustering by Seeding," in Proc. 19th Int. Conf. Machine Learning, Sydney, Australia (2002).

19. Basu, S., Bilenko, M. & Mooney, R.J.: "Comparing and Unifying Search-Based and Similarity-Based Approaches to Semi-Supervised Clustering," in Proc. ICML-2003 Workshop, Washington DC (Aug. 2003) 42-49.
