I S TANF

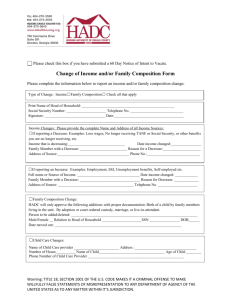
