SILOs - CS 8803 AIAD Project Proposal

advertisement

SILOs - Distributed Web Archiving & Analysis using Map Reduce

CS 8803 AIAD Project Proposal

Anushree Venkatesh

Sagar Mehta

Sushma Rao

Contents

Motivation............................................................................................................................................. 3

Related work ......................................................................................................................................... 4

An Introduction to Map Reduce ........................................................................................................... 5

Proposed work ...................................................................................................................................... 6

Plan of action ..................................................................................................................................... 11

Evaluation and Testing ........................................................................................................................ 12

Bibliography ........................................................................................................................................ 13

Motivation

The internet is currently the largest database in the world for any required information. However,

one of the most amazing facts about it is that the average life of a page on the internet is, according

to statistics, only 44 days [14]. This means that we are continuously losing an incredible amount of

information. In order to avoid this loss, the concept of web archiving was introduced in as early as

1994. This was just one of the reasons for building a web archive. Some of the other reasons are as

follows [7]:

•

•

•

•

Cultural: The pace of change of technology makes it difficult to maintain the data which is

mostly in digital format.

Technical: Technology needs a while to stabilize but spreading data over different technologies

may lead to loss of data.

Economic: This serves in the interest of the public and helps them access previously hosted

data as well.

Legal: This deals with intellectual property issues.

Considering the vast amount of data that is available on the World Wide Web, it is practically

impossible to even imagine running a crawler on a single machine. This brings into view the

requirement of a distributed architecture. Google's Map-Reduce is an efficient programming model

that allows for distributed processing. Our project tries to explore the usage of this model for

distributed web crawling and archiving and analyzing the results over various parameters

including efficiency through implementation using Hadoop [11], an open source framework

implementing map-reduce in Java.

Related work

This project aims to propose a new method for web archiving. There currently exist a number of

traditional approaches to web archiving and crawling [14]. Some of these are described below.

1. Automatic harvesting approach: It is the implementation of a crawler without any

restrictions per se. One of the most common examples following this approach is the Internet

Archive [16].

2. Deposit approach: Here the author of a page himself submits the page to be included in the

archive, eg. DDB[15] .

Beyond this, our project brings to the forefront the issue of the crawling mechanism itself.

There are two dimensions along which types of crawling can be defined – content based and

architecture based. The different types of existing content based methods of crawling are [4]:

1. Selective: This basically involves changing the policy of insertions and extractions in the

queue of discovered URLs. It can incorporate various factors like depth, link popularity etc.

2. Focused: This is a refined version of a selective crawler where only specific content is

crawled.

3. Distributed: This is done by distributing the task based on content and running crawlers

on multiple processes and implementing parallelization.

4. Web dynamics based: This method keeps the data updated by refreshing the content at

regular intervals based on decided functions and possibly by using intelligent agents.

The different architectural approaches to crawling are:

1. Centralized: Implements a central scheduler and downloader. This is not a very popular

architecture. Most of the crawlers that have been implemented use the distributed architecture.

2. Distributed: To our knowledge, most of the crawlers making use of the distributed architecture

basically implement a peer-to-peer network distribution protocol, eg. Apoidea[5].

We are implementing the distributed architecture provided by the map reduce framework.

An Introduction to Map Reduce

Figure 1 [1]

The MapReduce Library splits the input into M configurable segments, which are processed on a set

of distributed machines in parallel. The intermediate key, value pairs generated by the `map`

function are then partitioned into R regions and assigned to `reduce` workers . For every unique

intermediate key that is generated by map, the corresponding values are passed to the reduce

function. On completion of the reduce function, we will have R output files, one from each reduce

worker.

Proposed work

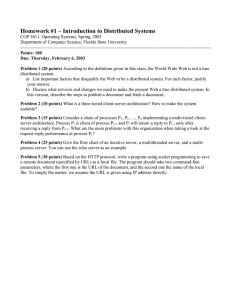

Graph

Builder

Seed List

URL Extractor

Distributed Crawler

M

M

R

URL,

value

URL, page

content

Parse for

URL

Back Links

Mapper

R

URL,

Parent

(Remove

Duplicates)

Adjacency

List

Table

Key Word Extractor

<URL, parent URL>

M

Parent,

URL

R

URL, 1

M

R

Parse for

key word

KeyWord,

URL

Diff

Compression

Page

Content

Table

URL

Table

Back Links

Table

Figure 2: Architecture

Inverted

Index

Table

Description of Tables

Following are the tables used and their respective schemas. Note that these tables are stored in a

Berkley DB database.

Page Content Table

<Hash(Page Content)>

<Page Content>

URL Table

<URL>

Back Links Table

<Hash(URL)>

<Hash(URL)>

<Hash(Page Content)>

<List of Hash(Parent URLs)>

Adjacency List Table

<Hash(URL)>

<List of Hash(URL adjacent to a given URL)>

Inverted Index Table

<Key Word>

<List of Hash(URLs where key word appears)>

Component Description

1. Seed List: This is a list of URLs to start with, which will bootstrap the crawling process.

2. Distributed Crawler:

1. Its uses the map-reduce functions to distribute the crawling process among multiple

worker nodes.

2. Once it gets an input URL it checks for duplicates and if it does not exist, then it inserts

the new URL into the url_table. The url_table has key as the hash of the URL. Note that

wherever hash is mentioned, it’s a standard SHA-1 hash. This URL is then fed to the page

fetcher which sends a HTTP GET request for the url.

3. One copy of the page is sent to the URL extractor and another is sent to the keyword

extractor component. The page is then stored in the page_content_table with key as

hash(page_content) and the value as compressed difference as compared to the

previous latest version of that page if it exists.

Map

Input <url, 1>

If( ! ( duplicate(url) )

{

Insert into url_table

<hash(url), url, hash(page_content) >

Page_content = http_get(url);

}

Output Intermediate pair < url, page_content>

Reduce

Input < url, page_content >

If( ! ( duplicate(page_content ) )

{

Insert into page_content_table

<hash(page_content), compress(page_content) >

}

Else

{

Insert into page_content_table

<hash(page_content), compress( diff_with_latest(page_content) )>

}

}

3. URL Extractor:

1. It parses the input web-page contents that it receives and extracts URLs out of it using

regular expressions, which are fed to the Distributed crawler.

2. Additionally, for all the URLs extracted out of the webpage, we generate and adjacency

list < node, list<adjacent nodes> > and this tuple is fed into the Adjacency list table.

3. The reduce step also emits a tuple <parsed_url, parent_url > which is fed to the back link

Mapper.

Map

Input < url, page_content>

List<urls> = parse(page_content);

Insert into adjacency_list_table

<hash(url), list<(hash(urls)> >

For each url emit

{

Ouput Intermediate pair < url, 1> which is fed to distributed crawler

Output Intermediate pair < url, parent_url> which is fed to backlink mapper

}

Reduce

No need to do anything in reduce component

4. Back link Mapper:

1. It uses map-reduce to generate a list of URLs that point to a particular URL, and the

tuple < url, list(parent_urls) >

Map

Input <parent_url, points_to_url>

Output

Emit Intermediate pair = < points_to_url, parent_url>

Reduce

Input <point_to_url,parent_url>

Combine all pairs <point_to_url,parent_url> which have the same point_to_url to emit

<point_to_url, list of parent_urls>

Insert into backward_links_table <point_to_url, list<parent_urls> >

5. Key word Extractor:

1. It parses the input web-page contents that it receives and extracts words which are not

stop words and these are used to build an inverted index using the map-reduce method.

The inverted index consists of a key-value pair where the key is the keyword and the

value is the list of URLs where that keyword is found.

Map

Input < url, page_content>

List<keywords> = parse(page_content);

For each keyword, emit

Output Intermediate pair < keyword, url>

Reduce

Combine all <keyword, url> pairs with the same keyword to emit

<keyword, List<urls> >

Insert into inverted index table

<keyword, List<urls> >

6. Graph Builder :

1. It takes as input the adjacency list representation of the crawled-web-graph, and builds

a visual representation of the graph.

Plan of action

Task

Duration

Setup of Hadoop Framework

Week 1 – 2

Distributed Crawling

Week 3 – 6

Analysis – Inverted Index

Week 7

Analysis – Back Link Mapper

Week 8

Analysis - Graph Builder

Week 9

Evaluation and Testing

The criteria for evaluation will be as follows:

1. The inverted index will be evaluated by submitting different queries and validating the

results obtained.

2. We will also draw a comparison of this distributed crawling technique using Map Reduce,

with other distributed crawlers like Apoidea [5].

3. We consider only the delta while storing updated versions of an html page by doing a

simple `diff` operation. At any point, a query for a certain version of the page must return

the right results. This will be tested.

4. The tradeoff between speed and compression ratio which arises from the compression

algorithm chosen, will be measured.

5. From the generated adjacency graphs, we will try to determine if a domain represents some

particular pattern.

6. Based on the frequency with which key words appear in a domain, we can predict which

key words are most popular for a given domain.

7. We will draw a comparison of average page size and number of pages between different

domains. For e.g. compare how page sizes on photo sharing sites like flickr would differ

from sites with textual content like Wikipedia.

Bibliography

1. Map Reduce: Simplified Data Processing on Large Clusters, Jeffrey Dean and Sanjay Ghemawat,

Google Inc

2. Brown, A. (2006). Archiving Websites: a practical guide for information management professionals.

Facet Publishing.

3. Brügger, N. (2005). Archiving Websites. General Considerations and Strategies. The Centre for Internet

Research.

4. Pierre Baldi, Paolo Frasconi, Padhraic Smyth. “Modeling the Internet and the Web, Probabilistic

Methods and Algorithms” Chapter 6 – Advanced Crawling Techniques

5. Aameek Singh, Mudhakar Srivatsa, Ling Liu, and Todd Miller. “Apoidea: A Decentralized Peer-to-Peer

Architecture for Crawling the World Wide Web”

6. Day, M. (2003). "Preserving the Fabric of Our Lives: A Survey of Web Preservation Initiatives".

Research and Advanced Technology for Digital Libraries: Proceedings of the 7th European Conference

(ECDL): 461-472.

7. Eysenbach, G. and Trudel, M. (2005). "Going, going, still there: using the WebCite service to

permanently archive cited web pages". Journal of Medical Internet Research 7 (5).

8. Fitch, Kent (2003). "Web site archiving - an approach to recording every materially different

response produced by a website". Ausweb 03.

9. Lyman, P. (2002). "Archiving the World Wide Web". Building a National Strategy for Preservation:

Issues in Digital Media Archiving.

10. Masanès, J. (ed.) (2006). Web Archiving. Springer-Verlag.

11. http://hadoop.apache.org/

12. http://en.wikipedia.org/wiki/Hadoop

13. http://code.google.com/edu/content/submissions/uwspr2007_clustercourse/listing.html

14. http://www.clir.org/pubs/reports/pub106/web.html

15. http://deposit.ddb.de/

16. http://www.archive.org/