SILOs - CS 8803 AIAD Project Report

advertisement

SILOs - Distributed Web Archiving & Analysis using Map Reduce

CS 8803 AIAD Project Report

Anushree Venkatesh

Sagar Mehta

Sushma Rao

Contents

Contents ................................................................................................................................................ 1

Motivation ............................................................................................................................................. 3

Related work ......................................................................................................................................... 4

An Introduction to Map Reduce ............................................................................................................ 5

Architecture........................................................................................................................................... 6

Component Description ……………………………………………………………………………………………………………….....7

Challenges ...........................................................................................................................................11

Evaluation ............................................................................................................................................12

Conclusion ...........................................................................................................................................18

Extensions............................................................................................................................................19

Bibliography.........................................................................................................................................20

Appendix –A ........................................................................................................................................20

Appendix – B........................................................................................................................................27

2|Page

Motivation

The internet is currently the largest database in the world for any required information. However,

one of the most amazing facts about it is that the average life of a page on the internet is, according

to statistics, only 44 days [14]. This means that we are continuously losing an incredible amount of

information. In order to avoid this loss, the concept of web archiving was introduced in as early as

1994. This was just one of the reasons for building a web archive. Some of the other reasons are as

follows [7]:

•

•

•

•

Cultural: The pace of change of technology makes it difficult to maintain the data which is

mostly in digital format.

Technical: Technology needs a while to stabilize but spreading data over different technologies

may lead to loss of data.

Economic: This serves in the interest of the public and helps them access previously hosted data

as well.

Legal: This deals with intellectual property issues.

Considering the vast amount of data that is available on the World Wide Web, it is practically

impossible to even imagine running a crawler on a single machine. This brings into view the

requirement of a distributed architecture. Google's Map-Reduce is an efficient programming model

that allows for distributed processing. Thus our project tries to explore the usage of this model for

distributed web crawling and archiving and analyzing the results over various parameters

including efficiency through implementation using Hadoop [11], an open source framework

implementing map-reduce in Java.

3|Page

Related work

This project aims to propose a new method for web archiving. There currently exist a number of

traditional approaches to web archiving and crawling [14]. Some of these are described below.

1. Automatic harvesting approach: It is the implementation of a crawler without any restrictions

per se. One of the most common examples following this approach is the Internet Archive [16].

2. Deposit approach: Here the author of a page himself submits the page to be included in the

archive, eg. DDB[15] .

Beyond this, our project brings to the forefront the issue of the crawling mechanism itself.

There are two dimensions along which types of crawling can be defined – content based and

architecture based. The different types of existing content based methods of crawling are [4]:

1. Selective: This basically involves changing the policy of insertions and extractions in the

queue of discovered URLs. It can incorporate various factors like depth, link popularity etc.

2. Focused: This is a refined version of a selective crawler where only specific content is

crawled.

3. Distributed: This is done by distributing the task based on content and running crawlers on

multiple processes and implementing parallelization.

4. Web dynamics based: This method keeps the data updated by refreshing the content at

regular intervals based on decided functions and possibly by using intelligent agents.

The different architectural approaches to crawling are:

1. Centralized: Implements a central scheduler and downloader. This is not a very popular

architecture. Most of the crawlers that have been implemented use the distributed architecture.

2. Distributed: To our knowledge, the crawlers making use of the distributed architecture

basically implement a peer-to-peer network distribution protocol, eg. Apoidea[5].

We are implementing the distributed architecture provided by the map reduce framework.

4|Page

An Introduction to Map Reduce

Figure 1 [1]

The MapReduce Library splits the input into M configurable segments, which are processed on a set

of distributed machines in parallel. The intermediate key, value pairs generated by the `map`

function are then partitioned into R regions and assigned to `reduce` workers . For every unique

intermediate key that is generated by map, the corresponding values are passed to the reduce

function. On completion of the reduce function, we will have R output files, one from each reduce

worker.

5|Page

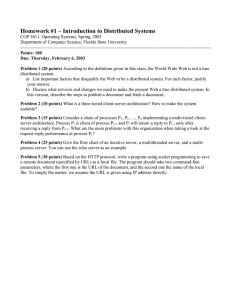

Architecture

Graph

Builder

Seed List

URL Extractor

Distributed Crawler

M

M

R

URL,

value

URL, page

content

Parse for

URL

R

URL,

Parent

M

R

Parse for

key word

KeyWord,

URL

Diff

Compression

Page

Content

Table

URL

Table

Back Links

Table

Figure 2: Architecture

6|Page

(Remove

Duplicates)

Adjacency

List

Table

Key Word Extractor

<URL, parent URL>

Back Links

Mapper

M

Parent,

URL

R

URL, 1

Inverted

Index

Table

Component Description

1. Seed List: This is a list of urls to start with, which will bootstrap the crawling process.

2. Distributed Crawler:

1. Its uses the map-reduce functions to distribute the crawling process among multiple

worker nodes.

2. Once it gets an input a url it checks for duplicates and if not then it inserts the new url

into the url_table. The url_table has key as the hash of the url. Note that wherever hash

is mentioned, it’s a standard SHA-1 hash. This url is then fed to page fetcher which sends

a http get request for the url.

3. One copy of the page is sent to the Url extractor and another is sent to the keyword

extractor component.The page is then stored in the page_content_table with key as

hash(page_content) and the value as compressed difference as compared to the

previous latest version of that page if it exists.

Map

Input <url, 1>

if(!duplicate(URL))

{

Insert into url_table

Page_content = http_get(url);

<hash(url), url, hash(page_content),time_stamp >

Output Intermediate pair < url, page_content>

}

Else If( ( duplicate(url) && (Current Time – Time Stamp(URL) > Threshold))

{

Page_content = http_get(url);

Update url table(hash(url),current_time);

Output Intermediate pair < url, page_content>

}

Else

{

Update url table(hash(url),current_time);

7|Page

}

Reduce

Input < url, page_content >

If(! Exits hash(URL) in page content table)

{

Insert into page_content_table

<hash(page_content), compress(page_content) >

}

Else if(hash(page_content_table(hash(url)) != hash(current_page_content) {

Insert into page_content_table

<hash(page_content), compress( diff_with_latest(page_content) )>

}

}

3. URL Extractor:

1. It parses the input web-page contents that it receives and extracts urls out of it using

regular expressions, which are fed to the Distributed crawler.

2. Additionally, for all the urls extracted out of the webpage, we generate and adjacency

list < node, list<adjacent nodes> > and this tuple is fed into the Adjacency list table.

3. The reduce step also emits a tuple <parsed_url, parent_url > which is fed to the back link

Mapper.

Map

Input < url, page_content>

List<urls> = parse(page_content);

Insert into adjacency_list_table

<hash(url), list<(hash(urls)> >

For each url emit

{

Ouput Intermediate pair < url, 1> which is fed to distributed crawler

8|Page

Output Intermediate pair < url, parent_url> which is fed to backlink mapper

}

Reduce

No need to do anything in reduce component

4. Back link Mapper:

1. It uses map-reduce to generate a list of urls that point to a particular url, and the tuple <

url, list(parent_urls) >

Map

Input <parent_url, points_to_url>

Output

Emit Intermediate pair = < points_to_url, parent_url>

Reduce

Input <point_to_url,parent_url>

Combine all pairs <point_to_url,parent_url> which have the same point_to_url to emit

<point_to_url, list of parent_urls>

Insert into backward_links_table <point_to_url, list<parent_urls> >

5. Key word Extractor:

1. It parses the input web-page contents that it receives and extracts words which are not

stop words and these are used to build an inverted index using the map-reduce method.

The inverted index consists of a key-value pair where the key is the keyword and the

value is the list of urls where that keyword is found.

Map

Input < url, page_content>

List<keywords> = parse(page_content);

For each keyword, emit

Output Intermediate pair < keyword, url>

9|Page

Reduce

Combine all <keyword, url> pairs with the same keyword to emit

<keyword, List<urls> >

Insert into inverted index table

<keyword, List<urls> >

6. Graph Builder :

1. It takes as input the adjacency list representation of the crawled-web-graph, and builds

a visual representation of the graph.

10 | P a g e

Challenges

The following were the main challenges in the project:

•

Understanding the map reduce framework and its usage

•

Installing and managing the Hadoop open source map-reduce framework which is a non-trivial

task

•

Identification of components in the architecture that could be ported to a map reduce structure

•

Identification of experiments that made use of the map reduce framework

•

Implementing the identified components and experiments in accordance with the framework

•

Coming up with a fair performance comparison between equivalent programs written within

and outside map-reduce implicitly answering the question “When is it better to have a mapreduce version of a program instead of just multi-threading the same program? “

11 | P a g e

Evaluation

A zoomed out snapshot of the crawled data starting with the www.gatech.edu domain [ Note that here

the crawled links are not restricted only to Gatech.edu meaning if a link points to an outside domain it is

crawled too ]

The above visualization is created by the graph-builder component which uses the graphviz library to

visualize a graph given the adjacency list structure. To understand how this works consider a small

example where the adjacency list is as follows –

12 | P a g e

digraph G {

gatech -> stanford

gatech -> cmu

gatech -> cornell

stanford->MIT

stanford-> cornell

cornell-> MIT

}

The corresponding graph using graphviz is as follows

So basically we automated the construction of the digraph structure while parsing out the links on a

particular page during the distributed crawling.

Distributed Crawler:

We were successfully able to distribute the process of crawling using map reduce. We provided an input

file of URLs to the map task. These URLs were crawled and the fetched pages were stored as the output

of the reduce function.

13 | P a g e

We noticed that the execution time for the distributed crawler using map-reduce was much lesser than

the time taken by a single threaded crawler for crawling the same number of URLs. However as noted

previously, having a fair comparison of similar functionalities within and outside map-reduce is a nontrivial task and another project in itself.

Appendix A has a sample code of the distributed fetch functionality that we implemented using mapreduce.

Analysis on the fetched data using map-reduce:

We also used map-reduce to analyze the data that was obtained from the fetched pages.

In these experiments however, we noticed that the execution time of map reduce was significantly

higher than that of a single threaded java application performing the same task. For example for the

keyword frequency experiment that we describe below even a single threaded standalone java program

was on average 10 times faster than the equivalent map-reduce version. This could be attributed to the

fact that the communication overhead among the master-slave nodes in map-reduce is noticeable when

the size of the data set is not large enough.

Key word frequency:

In this task we wanted to find out the top frequently occurring words on different comparable websites.

So using the distributed crawler we fetched equivalent subsets from the sites www.gatech.edu,

www.cmu.edu and www.cornell.edu

On the fetched data we ran the Frequency count program using map-reduce. The program in map-phase

basically parses out the page contents using html-parser and for each keyword emits the pair

<keyword,one> to signify that the particular keyword occurred once. So if a keyword occurs N times, N

such pairs will be emitted across different map-functions.

In the reduce phase, we sum up the counts for all such keywords thus giving us the frequency count for

each keyword. Also the task is distributed since we have a large number of map and reduce tasks.

14 | P a g e

Appendix B has a java implementation of the above frequency count program in map-reduce

framework.

Verifying the Zipf law on the natural language word corpus

Another interesting experiment related to the above frequency count task, was to verify the Zipf law

which basically states that the frequency of a word is inversely proportional to its rank in the frequency

table. The Zipf distribution is a power-law distribution and to verify this, we plot the log of frequency of

word count against the log of word count. If we get a linear plot on such a scale, it is a power law.

To get the frequency of word-count, i.e the frequencies of each frequency of a word, we ran another

pass of the Frequency count map-reduce program where each distinct frequency was now the keyword.

When plotted on the cmu dataset, the zipf distribution looked as follows -

15 | P a g e

URL Depth:

We observed the average URL depth for a given domain.

For e.g – www.gatech.edu/sports would mean a URL depth of 1 & www.gatech.edu/library/books would

mean a URL depth of 2. Hence the average depth would be (1+2)/2 = 1.5

Again this was done using map-reduce where the map phase would emit the url and its depth, while the

reduce phase would sum it up and produce the average for each such url.

Our results are presented in the following tables:

Average URL Depth

CMU

cmu.edu

Cornell

2.73

cornell.edu

alumni.cmu.edu 2.18

www.library.cmu.edu

2.23

www.alumniconnections.com 4.81

Gatech

1.34

gatech.edu

1

www.gradschool.cornell.edu 1

gtalumni.org

3

www.news.cornell.edu 2.57

ramblinwreck.cstv.com 2.57

www.sce.cornell.edu 1

cyberbuzz.gatech.edu

2

URL Count:

URL Count refers to the number of URLs of a particular domain that are present in the pages of a domain

being crawled.

16 | P a g e

The table below shows for e.g. that www.cmu.edu has links to alumni.cmu.edu, hr.web.cmu.edu,

www.library.cmu.edu etc.

Note that here we extracted only the domain portion of the urls, to get the count and again used a

similar map-reduce program as in the above experiments.

Top 6 URL domains that get traversed

CMU

Cornell

Gatech

www.cornell.edu 43

gtalumni.org

alumni.cmu.edu 92

www.cuinfo.cornell.edu 2

centennial.gtalumni.org 4

hr.web.cmu.edu 13

www.gradschool.cornell.edu 2

cyberbuzz.gatech.edu

www.alumniconnections.com 16

www.news.cornell.edu 7

georgiatech.searchease.com 9

www.carnegiemellontoday.com 10

www.sce.cornell.edu

8

ramblinwreck.cstv.com

www.library.cmu.edu 69

www.vet.cornell.edu

1

www.gatech.edu

www.cmu.edu

17 | P a g e

170

236

7

56

14

Conclusion

The basic goal of our project was to understand the map-reduce framework and use the knowledge to

work on some interesting experiments on large scale web dataset. To get the web data set, we started

out with an open source crawler.

As we got more acquainted with Hadoop framework we thought why not exploit the distributed

processing functionality of the map-reduce framework to write a distributed crawler. We then came up

with a map-reduce algorithm for it and were able to successfully write a distributed crawler using mapreduce.

Given the time constraints of the project and that it was our first time working with a crawler too; we

were able to contribute a substantial part.

To conclude with, the following might be considered the three most important contributions of our

system:

-

It brings to light the advantage that map reduce brings to the table when processing large

amounts of data and how the distributed processing is abstracted out by the framework which

allows a person to concentrate on the problem at hand

-

With different experiments, it shows how most problems can be modeled as a map reduce

problem.

-

Distributed crawler using map-reduce which according to our knowledge is the first ever such

attempt

-

We have laid out the framework to build a distributed archiving system

-

The inverted index that our system generates can be used to build a layer of search engine on

the top of it.

As a course project, it was extremely beneficial, as it allowed us to see the details of two major existing

systems – Peer Crawler and the Hadoop Framework and allowed us to use our knowledge to try and

integrate the two concepts of crawling and map-reduce and perform experiments.

As an extension, it might be interesting to see the results, if we stored the data in a better manner and

also implemented a search over this data that would do improved information retrieval from the

archived data set.

18 | P a g e

Extensions

•

An obvious question especially if you are from a systems background is when map-reduce is

better than an equivalent multi-threaded program outside map-reduce. This is a non-trivial

question in itself given that most of the optimizations are domain-specific and would require at

minimum –

1. An in-depth study of the Hadoop source code along with the various optimizations used

2. Coming up with a fair comparison of the equivalent programs

•

Another extension as we previously noted is to have distributed archiving using the framework

we have laid out. Currently the pages are written directly to the file system; however a better

approach would be to store them in a relational database with different versions stored

incrementally. Again this is a non-trivial task since

1. The pages are in html and therefore would require html diffs before storing the pages.

2. When queried for a particular version of page, it needs to be generated at runtime

based on the incremental html diffs

•

Another interesting experiment would be to build a search engine layer on the top of the

inverted index that our system generates

19 | P a g e

Bibliography

1. Map Reduce: Simplified Data Processing on Large Clusters, Jeffrey Dean and Sanjay Ghemawat,

Google Inc

2. Brown, A. (2006). Archiving Websites: a practical guide for information management professionals.

Facet Publishing.

3. Brügger, N. (2005). Archiving Websites. General Considerations and Strategies. The Centre for Internet

Research.

4. Pierre Baldi, Paolo Frasconi, Padhraic Smyth. “Modeling the Internet and the Web, Probabilistic

Methods and Algorithms” Chapter 6 – Advanced Crawling Techniques

5. Aameek Singh, Mudhakar Srivatsa, Ling Liu, and Todd Miller. “Apoidea: A Decentralized Peer-to-Peer

Architecture for Crawling the World Wide Web”

6. Day, M. (2003). "Preserving the Fabric of Our Lives: A Survey of Web Preservation Initiatives".

Research and Advanced Technology for Digital Libraries: Proceedings of the 7th European Conference

(ECDL): 461-472.

7. Eysenbach, G. and Trudel, M. (2005). "Going, going, still there: using the WebCite service to

permanently archive cited web pages". Journal of Medical Internet Research 7 (5).

8. Fitch, Kent (2003). "Web site archiving - an approach to recording every materially different

response produced by a website". Ausweb 03.

9. Lyman, P. (2002). "Archiving the World Wide Web". Building a National Strategy for Preservation:

Issues in Digital Media Archiving.

10. Masanès, J. (ed.) (2006). Web Archiving. Springer-Verlag.

11. http://hadoop.apache.org/

12. http://en.wikipedia.org/wiki/Hadoop

13. http://code.google.com/edu/content/submissions/uwspr2007_clustercourse/listing.html

14. http://www.clir.org/pubs/reports/pub106/web.html

15. http://deposit.ddb.de/

16. http://www.archive.org/

17. http://en.wikipedia.org/wiki/Zipf's_law

18. HtmlParser: http://htmlparser.sourceforge.net/

20 | P a g e

Appendix –A

Sample Distributed fetch using map-reduce

import java.io.File;

import java.io.FileWriter;

import java.io.IOException;

import java.net.MalformedURLException;

import java.net.URL;

import java.net.URLConnection;

import java.util.*;

import java.io.*;

import java.net.*;

import java.util.Scanner;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.conf.*;

import org.apache.hadoop.io.*;

import org.apache.hadoop.mapred.*;

import org.apache.hadoop.util.*;

public class MyWebPageFetcher {

public static class Map extends MapReduceBase implements Mapper {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

String downloadedStuff = "initial";

public void map(WritableComparable key, Writable value, OutputCollector output, Reporter reporter) throws IOException

{

String line = ((Text)value).toString();

StringTokenizer tokenizer = new StringTokenizer(line);

21 | P a g e

while (tokenizer.hasMoreTokens()) {

word.set(tokenizer.nextToken());

String url = word.toString();

String option = "header";

MyWebPageFetcher fetcher = new MyWebPageFetcher(url);

/*if ( HEADER.equalsIgnoreCase(option) ) {

log( fetcher.getPageHeader() );

}

else if ( CONTENT.equalsIgnoreCase(option) ) {

log( fetcher.getPageContent() );

}

else {

log("Unknown option.");

}*/

downloadedStuff = fetcher.getPageContent();

//System.out.println(downloadedStuff);

//System.out.println("\n\nIn Map " + downloadedStuff + "\n\nEnd Map");

output.collect(word,new Text(downloadedStuff));

}

}

}

public static class Reduce extends MapReduceBase implements Reducer {

public void reduce(WritableComparable key, Iterator values, OutputCollector output, Reporter reporter) throws IOException {

String sum = "";

while (values.hasNext()) {

sum += ((Text)values.next()).toString();

output.collect(key, new Text(sum));

}

22 | P a g e

//System.out.println("\n\nIn reduce " + sum + "\n\nEnd reduce");

}}

public MyWebPageFetcher( URL aURL ){

if ( ! HTTP.equals(aURL.getProtocol()) ) {

throw new IllegalArgumentException("URL is not for HTTP Protocol: " + aURL);

}

fURL = aURL;

}

public MyWebPageFetcher( String aUrlName ) throws MalformedURLException {

this ( new URL(aUrlName) );

}

/** Fetch the HTML content of the page as simple text. */

public String getPageContent() {

String result = null;

URLConnection connection = null;

try {

connection = fURL.openConnection();

Scanner scanner = new Scanner(connection.getInputStream());

scanner.useDelimiter(END_OF_INPUT);

result = scanner.next();

}

catch ( IOException ex ) {

log("Cannot open connection to " + fURL.toString());

}

return result;

}

/** Fetch HTML headers as simple text. */

23 | P a g e

public String getPageHeader(){

StringBuilder result = new StringBuilder();

URLConnection connection = null;

try {

connection = fURL.openConnection();

}

catch (IOException ex) {

log("Cannot open connection to URL: " + fURL);

}

//not all headers come in key-value pairs - sometimes the key is

//null or an empty String

int headerIdx = 0;

String headerKey = null;

String headerValue = null;

while ( (headerValue = connection.getHeaderField(headerIdx)) != null ) {

headerKey = connection.getHeaderFieldKey(headerIdx);

if ( headerKey != null && headerKey.length()>0 ) {

result.append( headerKey );

result.append(" : ");

}

result.append( headerValue );

result.append(NEWLINE);

headerIdx++;

}

return result.toString();

}

// PRIVATE //

private URL fURL;

24 | P a g e

private static final String HTTP = "http";

private static final String HEADER = "header";

private static final String CONTENT = "content";

private static final String END_OF_INPUT = "\\Z";

private static final String NEWLINE = System.getProperty("line.separator");

private static void log(Object aObject){

//System.out.println(aObject);

/*File outFile1 = new File("bodycontent.txt");

FileWriter fileWriter1 = new FileWriter(outFile1,true);

fileWriter1.write((String)aObject);

fileWriter1.close();*/

//System.out.println((String) aObject);

}

public static void main(String[] args) throws Exception {

try {

JobConf conf = new JobConf(MyWebPageFetcher.class);

conf.setJobName("MyWebPageFetcher");

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(Text.class);

conf.setMapperClass(Map.class);

conf.setCombinerClass(Reduce.class);

conf.setReducerClass(Reduce.class);

conf.setInputFormat(TextInputFormat.class);

conf.setOutputFormat(TextOutputFormat.class);

25 | P a g e

conf.setInputPath(new Path(args[0]));

conf.setOutputPath(new Path(args[1]));

conf.setNumMapTasks(8);

conf.setNumReduceTasks(8);

JobClient.runJob(conf);

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

}

26 | P a g e

Appendix – B

Word count using map-reduce

import java.io.IOException;

import java.util.*;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.conf.*;

import org.apache.hadoop.io.*;

import org.apache.hadoop.mapred.*;

import org.apache.hadoop.util.*;

public class WordCount {

public static class Map extends MapReduceBase implements Mapper {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(WritableComparable key, Writable value, OutputCollector output, Reporter reporter) throws IOException {

String line = ((Text)value).toString();

StringTokenizer tokenizer = new StringTokenizer(line);

while (tokenizer.hasMoreTokens()) {

word.set(tokenizer.nextToken());

output.collect(word, one);

}

}

}

public static class Reduce extends MapReduceBase implements Reducer {

public void reduce(WritableComparable key, Iterator values, OutputCollector output, Reporter reporter) throws IOException {

int sum = 0;

while (values.hasNext()) {

sum += ((IntWritable)values.next()).get();

27 | P a g e

}

output.collect(key, new IntWritable(sum));

}

}

public static void main(String[] args) throws Exception {

JobConf conf = new JobConf(WordCount.class);

conf.setJobName("wordcount");

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(IntWritable.class);

conf.setMapperClass(Map.class);

conf.setCombinerClass(Reduce.class);

conf.setReducerClass(Reduce.class);

conf.setInputFormat(TextInputFormat.class);

conf.setOutputFormat(TextOutputFormat.class);

conf.setInputPath(new Path(args[0]));

conf.setOutputPath(new Path(args[1]));

JobClient.runJob(conf);

}

}

28 | P a g e