PARSING

Supporting material: T0264P23_2


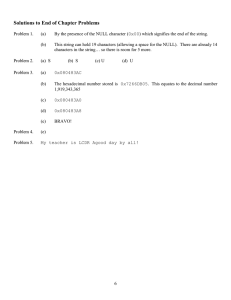

Patrick Blackburn and Kristina Striegnitz
Version 1.2.4 (20020829)

8.1 Introduction

Last week we discussed bottom-up parsing/recognition, and implemented a simple bottom-up recognizer called bureg/1. Unfortunately, bureg/1 was very inefficient, and its inefficiency had two sources. First, its implementation relied heavily on append/3 to split lists into all possible sequences of three sublists, which is highly inefficient. Second, the algorithm itself was naive: it did not use a chart to record what had already been discovered about the syntactic structure.

This week we discuss top-down parsing, and we will take care to remove the implementational inefficiency: instead of append/3 we will work with difference lists. As we shall see, this makes a huge difference to performance. Whereas bureg/1 is unusable for anything except very small sentences and grammars, today's parser and recognizer will easily handle examples that bureg/1 finds difficult. However, we won't remove the deeper algorithmic inefficiency. Today's top-down algorithms are still naive: they don't make use of a chart.

8.2 Top Down Parsing

As we have seen, in bottom-up parsing/recognition we start at the most concrete level (the level of words) and try to show that the input string has the abstract structure we are interested in (this usually means showing that it is a sentence). So we use our CFG rules right-to-left. In top-down parsing/recognition we do the reverse. We start at the most abstract level (the level of sentences) and work down to the most concrete level (the level of words). So, given an input string, we start out by assuming that it is a sentence, and then try to prove that it really is one by using the rules left-to-right.
This works as follows. If we want to prove that the input is of category s and we have the rule s ---> [np,vp], then we next try to prove that the input string consists of a noun phrase followed by a verb phrase. If we also have the rule np ---> [det,n], we try to prove that the input string consists of a determiner followed by a noun and a verb phrase. That is, we use the rules left-to-right to expand the categories we want to recognize until we reach categories that match the preterminal symbols corresponding to the words of the input sentence.

Of course, there are still lots of choices to be made. Do we scan the input string from right to left, from left to right, or zig-zagging out from the middle? In what order should we scan the rules? More interestingly, do we use depth-first or breadth-first search? In what follows we'll assume that we scan the input left-to-right (that is, the way we read) and the rules from top to bottom (that is, the way Prolog reads). But we'll look at both depth-first and breadth-first search.

8.2.1 With Depth First Search

Depth-first search means that whenever there is more than one rule that could be applied at some point, we explore one possibility and only look at the others when this one fails. Let's look at an example. Here's part of the grammar ourEng.pl, which we introduced last week:

```prolog
s  ---> [np,vp].
np ---> [pn].
vp ---> [iv].
vp ---> [tv,np].

lex(vincent,pn).
lex(mia,pn).
lex(died,iv).
lex(loved,tv).
lex(shot,tv).
```

The sentence "Mia loved Vincent" is admitted by this grammar. Let's see how a top-down parser using depth-first search would go about showing this. The following table shows the steps a top-down depth-first parser would make. The second row gives the categories the parser tries to recognize in each step and the third row the string that has to be covered by these categories.
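The depth-first steps can also be printed by a program. The following is a minimal sketch, not the authors' recognizer: it keeps the categories still to be recognized in a list and prints each state as that list followed by the string those categories must cover. The predicate names trace_topdown/1, df_state/2 and df_expand/2 are hypothetical. Unlike the recognizer of Section 8.3, it uses append/3, but only to push a rule's (already known) right-hand side onto the list of pending categories, never to split the input string.

```prolog
:- op(700, xfx, --->).

% Grammar fragment of ourEng.pl, as quoted in 8.2.1.
s  ---> [np,vp].
np ---> [pn].
vp ---> [iv].
vp ---> [tv,np].

lex(vincent, pn).
lex(mia, pn).
lex(died, iv).
lex(loved, tv).
lex(shot, tv).

% trace_topdown(+String): hypothetical helper; succeeds if String is an s,
% printing every state visited by the depth-first search.
trace_topdown(String) :-
    df_state([s], String).

% df_state(+Categories, +String): print the state, then try to expand it.
df_state(Categories, String) :-
    format("~w  ~w~n", [Categories, String]),
    df_expand(Categories, String).

% No categories left and no words left: success.
df_expand([], []).
% The first category is the category of the next word: consume that word.
df_expand([Category|Categories], [Word|Words]) :-
    lex(Word, Category),
    df_state(Categories, Words).
% Otherwise rewrite the first category with a rule, used left-to-right.
% On failure Prolog backtracks here and tries the next rule.
df_expand([Category|Categories], String) :-
    Category ---> RHS,
    append(RHS, Categories, NewCategories),
    df_state(NewCategories, String).
```

The query `?- trace_topdown([mia,loved,vincent]).` prints nine states:

```
[s]  [mia,loved,vincent]
[np,vp]  [mia,loved,vincent]
[pn,vp]  [mia,loved,vincent]
[vp]  [loved,vincent]
[iv]  [loved,vincent]
[tv,np]  [loved,vincent]
[np]  [vincent]
[pn]  [vincent]
[]  []
```

The `[iv]` line is the dead end where the intransitive analysis of the VP fails; the `[tv,np]` line that follows it is the transitive alternative that Prolog tries after backtracking.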
It should be clear why this approach is called top-down: we work from the abstract to the concrete, and we use the CFG rules left-to-right. And why was this an example of depth-first search? Because when we were faced with a choice, we selected one alternative and worked out its consequences. If the choice turned out to be wrong, we backtracked. For example, above we were faced with a choice of which way to try to build a VP: using an intransitive verb or a transitive verb. We first tried to do so using an intransitive verb (at state 4), but this didn't work out (state 5), so we backtracked and tried a transitive analysis (state 4'). This eventually worked out.

8.2.2 With Breadth First Search

Let's look at the same example with breadth-first search. The big difference between breadth-first and depth-first search is that in breadth-first search we carry out all possible choices at once, instead of just picking one. It is useful to imagine that we are working with a big bag containing all the possibilities we should look at, so in what follows I have used set-theoretic braces to indicate this bag. When we start parsing, the bag contains just one item.

The crucial difference occurs at state 5. There we try both ways of building VPs at once. At the next step, the intransitive analysis is discarded, but the transitive analysis remains in the bag, and eventually succeeds.

The advantage of breadth-first search is that it prevents us from zeroing in on one choice that may turn out to be completely wrong; this often happens with depth-first search and causes a lot of backtracking. Its disadvantage is that we need to keep track of all the choices, and if the bag gets big (and it may get very big) we pay a computational price.

So which is better? There is no general answer. With some grammars breadth-first search works better, with others depth-first search does.
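The bag of possibilities can be made concrete in a few lines of Prolog. This is a minimal sketch under stated assumptions, not code from these notes: it uses the ourEng.pl fragment quoted in 8.2.1, represents each possibility as a pair Categories-String (categories still to be recognized, words still to be covered), and uses a plain Prolog list in place of the set-theoretic bag, so duplicate states are not removed. The names recognize_bf/1, bf_step/1 and bf_successor/2 are hypothetical.

```prolog
:- op(700, xfx, --->).

% Grammar fragment of ourEng.pl, as quoted in 8.2.1.
s  ---> [np,vp].
np ---> [pn].
vp ---> [iv].
vp ---> [tv,np].

lex(vincent, pn).
lex(mia, pn).
lex(died, iv).
lex(loved, tv).
lex(shot, tv).

% recognize_bf(+String): top-down, breadth-first recognition.
% Initially the bag holds one state: recognize an s covering String.
recognize_bf(String) :-
    bf_step([[s]-String]).

% bf_step(+Bag): print the bag; succeed if some state has no categories
% and no words left; otherwise advance every state in the bag at once.
bf_step(Bag) :-
    format("~w~n", [Bag]),
    (   memberchk([]-[], Bag)
    ->  true
    ;   findall(Next, (member(State, Bag), bf_successor(State, Next)), NewBag),
        NewBag \== [],          % an empty bag means every choice failed
        bf_step(NewBag)
    ).

% Match the first category against the next word ...
bf_successor([Category|Categories]-[Word|Words], Categories-Words) :-
    lex(Word, Category).
% ... or expand the first category with a rule, used left-to-right.
bf_successor([Category|Categories]-String, NewCategories-String) :-
    Category ---> RHS,
    append(RHS, Categories, NewCategories).
```

For `?- recognize_bf([mia,loved,vincent]).` eight bags are printed, and the fifth one is

```
[[iv]-[loved,vincent],[tv,np]-[loved,vincent]]
```

that is, both ways of building the VP sit in the bag together; at the next step only the transitive analysis survives. Because findall/3 rebuilds the whole bag at every step, the memory cost grows with the number of live alternatives, which is exactly the price of breadth-first search described above.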
8.3 Top Down Recognition in Prolog

It is easy to implement a top-down depth-first recognizer in Prolog, because this is the strategy Prolog itself uses in its search. Actually, it's not hard to implement a top-down breadth-first recognizer in Prolog either, though I'm not going to discuss how to do that.

The implementation will be far better than the one used in the naive bottom-up recognizer that we discussed last week. This is not because top-down algorithms are better than bottom-up ones, but simply because we are not going to use append/3. Instead we'll use difference lists.

Here's the main predicate, recognize_topdown/3. Note the operator declaration (we want to use the ---> notation we introduced last week).

```prolog
:- op(700,xfx,--->).

recognize_topdown(Category,[Word|Reststring],Reststring) :-
    lex(Word,Category).
recognize_topdown(Category,String,Reststring) :-
    Category ---> RHS,
    matches(RHS,String,Reststring).
```

Here Category is the category we want to recognize (s, np, vp, and so on). The second and third arguments are a difference-list representation of the string we are working with: read them as "the string in the second argument starts with a substring of category Category, leaving behind Reststring, the third argument". The first clause deals with the case where Category is a preterminal that matches the category of the next word in the input string. That is: we've got a match and can remove that word from the string that is to be recognized. The second clause deals with phrase structure rules. Note that we are using the CFG rules left-to-right: Category will be instantiated with something, so we look for rules with Category as their left-hand side, and then we try to match the right-hand side of these rules (that is, RHS) with the string.

Now for matches/3, the predicate which does all the work:

```prolog
matches([],String,String).
matches([Category|Categories],String,RestString) :-
    recognize_topdown(Category,String,String1),
    matches(Categories,String1,RestString).
```
The first clause handles an empty list of symbols to be recognized: the string is returned unchanged. The second clause lets us match a non-empty list against the difference list. This works as follows. We want to see if String begins with substrings belonging to the categories [Category|Categories], leaving behind RestString. So we see if String starts with a substring of category Category (the first item on the list). Then we recursively call matches/3 to see whether what's left over (String1) starts with substrings belonging to the categories Categories, leaving behind RestString. This is classic difference-list code.

Finally, we can wrap this up in a driver predicate:

```prolog
recognize_topdown(String) :-
    recognize_topdown(s,String,[]).
```

Now we're ready to play. We shall use the ourEng.pl grammar that we worked with last week. We used this same grammar with our bottom-up recognizer bottomup_recognizer.pl, and we saw that it was very easy to grind bottomup_recognizer.pl into the dust. For example, the following are both sentences admitted by the ourEng.pl grammar:

jules believed the robber who shot the robber fell

jules believed the robber who shot the robber who shot marsellus fell

The bottom-up recognizer takes a long time on these examples, but the top-down program handles them without problems.

The following sentence is not admitted by the grammar, because the last word is misspelled (felll instead of fell):

jules believed the robber who shot marsellus felll

Unfortunately it takes bottomup_recognizer.pl a long time to find that out, and hence to reject the sentence. The top-down program is far better.

8.4 Top Down Parsing in Prolog

It is easy to turn this recognizer into a parser, and (unlike with bottomup_recognizer.pl) it's actually worth doing this, because it is efficient on small grammars. As is so often the case in Prolog, moving from a recognizer to a parser is simply a matter of adding additional arguments to record the structure we find along the way.
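To try the recognizer on the example sentences without loading the full ourEng.pl, the pieces can be assembled into one file. This is a sketch under an explicit assumption: the grammar and lexicon below are not ourEng.pl itself, only the rules and words these examples need, inferred from the sentences above and from the parse trees shown in 8.4 (rules such as nbar ---> [n,rel] and vp ---> [sv,s]). The real grammar may contain more.

```prolog
:- op(700, xfx, --->).

% ASSUMED grammar fragment, inferred from this section's examples.
s    ---> [np,vp].
np   ---> [pn].
np   ---> [det,nbar].
nbar ---> [n].
nbar ---> [n,rel].
rel  ---> [wh,vp].
vp   ---> [iv].
vp   ---> [tv,np].
vp   ---> [sv,s].

lex(vincent,pn).  lex(mia,pn).     lex(jules,pn).  lex(marsellus,pn).
lex(the,det).     lex(robber,n).   lex(who,wh).
lex(died,iv).     lex(fell,iv).
lex(loved,tv).    lex(shot,tv).    lex(believed,sv).

% The top-down recognizer of 8.3: difference lists, no append/3.
recognize_topdown(Category,[Word|Reststring],Reststring) :-
    lex(Word,Category).
recognize_topdown(Category,String,Reststring) :-
    Category ---> RHS,
    matches(RHS,String,Reststring).

matches([],String,String).
matches([Category|Categories],String,RestString) :-
    recognize_topdown(Category,String,String1),
    matches(Categories,String1,RestString).

% Driver: the whole string must be an s, with nothing left over.
recognize_topdown(String) :-
    recognize_topdown(s,String,[]).
```

Because the three-argument version works on difference lists, it can also be asked about prefixes of a string. For example, `?- recognize_topdown(np,[mia,loved,vincent],Rest).` binds Rest to [loved,vincent], and `?- recognize_topdown(s,[mia,died,vincent],Rest).` binds Rest to [vincent], since mia died on its own is already a sentence.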
Here's the code. The ideas involved should be familiar by now. Read what is going on in the fourth argument position declaratively:

```prolog
:- op(700,xfx,--->).

parse_topdown(Category,[Word|Reststring],Reststring,[Category,Word]) :-
    lex(Word,Category).
parse_topdown(Category,String,Reststring,[Category|Subtrees]) :-
    Category ---> RHS,
    matches(RHS,String,Reststring,Subtrees).

matches([],String,String,[]).
matches([Category|Categories],String,RestString,[Subtree|Subtrees]) :-
    parse_topdown(Category,String,String1,Subtree),
    matches(Categories,String1,RestString,Subtrees).
```

And here's the new driver that we need:

```prolog
parse_topdown(String,Parse) :-
    parse_topdown(s,String,[],Parse).
```

Time to play. Here's a simple example:

```
?- parse_topdown([vincent,fell],Parse).
Parse = [s,[np,[pn,vincent]],[vp,[iv,fell]]]
yes
```

And another one:

```
?- parse_topdown([vincent,shot,marsellus],Parse).
Parse = [s,[np,[pn,vincent]],[vp,[tv,shot],[np,[pn,marsellus]]]]
yes
```

And here's a much harder one:

```
?- parse_topdown([jules,believed,the,robber,who,shot,the,robber,who,shot,the,
                  robber,who,shot,marsellus,fell],Parse).
Parse = [s,[np,[pn,jules]],[vp,[sv,believed],[s,[np,[det,the],
        [nbar,[n,robber],[rel,[wh,who],[vp,[tv,shot],[np,[det,the],
        [nbar,[n,robber],[rel,[wh,who],[vp,[tv,shot],[np,[det,the],
        [nbar,[n,robber],[rel,[wh,who],[vp,[tv,shot],
        [np,[pn,marsellus]]]]]]]]]]]]]],[vp,[iv,fell]]]]]
yes
```

As this last example shows, we really need a pretty-print output!
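One simple way to get readable output is a printer that walks the list-based trees and indents each subtree. The predicate pp_tree/1 below is a hypothetical sketch, not part of the original code; it assumes trees have exactly the shape built by parse_topdown/4, namely [Category|Subtrees] for phrases and [Category,Word] for preterminals.

```prolog
% pp_tree(+Tree): print a parse tree one node per line,
% indenting two spaces per level of embedding.
pp_tree(Tree) :-
    pp_tree(Tree, 0).

% A preterminal leaf: print its category and word on one line.
pp_tree([Category, Word], Indent) :-
    atomic(Word), !,
    tab(Indent),
    format("~w ~w~n", [Category, Word]).
% A phrase: print its category, then each subtree one level deeper.
pp_tree([Category|Subtrees], Indent) :-
    tab(Indent),
    format("~w~n", [Category]),
    Indent1 is Indent + 2,
    forall(member(Subtree, Subtrees), pp_tree(Subtree, Indent1)).
```

For example, `?- parse_topdown([vincent,shot,marsellus],Parse), pp_tree(Parse).` prints:

```
s
  np
    pn vincent
  vp
    tv shot
    np
      pn marsellus
```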