Search Methods Materi Pendukung : T0264P03_1 Table of Contents

advertisement

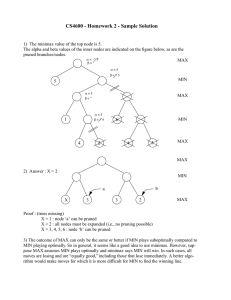

Materi Pendukung : T0264P03_1 Search Methods Patrick Doyle pdoyle@cs.stanford.edu Table of Contents 1. 2. 3. 4. 5. 6. 7. Definitions General Paradigms a. Generate and Test b. Means-End Analysis c. Bidirectional Search d. Iterative Deepening e. Important Issues in Search Brute-Force / Blind Search Methods a. Breadth-First Search b. Depth-First Search c. DFS Iterative Deepening (DFID) d. Iterative Broadening e. British Museum Heuristic Search a. Hill Climbing b. Steepest Ascent c. Branch and Bound d. Best-First Search e. Beam Search f. A* g. Iterative-Deepening A* h. B' i. Simulated Annealing j. Agendas Performance of Search Algorithms AND/OR Graphs Game Trees / Adversarial Search a. Minimax b. The Horizon Effect i. Secondary Search ii. The Killer Heuristic c. Alpha-Beta Pruning d. B* e. SSS* SCOUT Performance of Game Searching Algorithms Real-Time Algorithms a. Minmin b. Alpha Pruning c. Real-time A* Constraint-Satisfaction Problems a. Backtracking and Thrashing b. Dependency-Directed Backtracking i. Lookahead Schemes ii. Look-back Schemes c. Preprocessing d. Cycle Cutset e. Backtrack-Free Search Other Search Methods a. Island-Driven Search Notable Search Programs a. Logic Theorist b. General Problem Solver (GPS) c. ABSTRIPS Sources f. 8. 9. 10. 11. 12. 13. 1. Definitions heuristic Heuristics (the term was coined by Polya) are rules of thumb that may solve a given problem, but do not guarantee a solution. Put another way, heuristics are knowledge about the domain that helps guide search and reasoning in the domain. admissible search algorithm A search algorithm that always terminates in an optimal path from the initial node to a goal node if one exists. A* is admissible when the heuristic function never overestimates. problem space The environment in which the search takes place; it consists of a set of states of the problem and a set of operators that change the states. problem instance A problem space, together with an initial state and a goal state. The problem is to find a sequence of operators that transform the initial state into the goal state; such a sequence is called a solution to the problem. branching factor The average number of children of a given node, or the average number of new operators applicable to a given state. weak methods General search methods that use no domain knowledge. heuristic evaluation function A function that estimates the likelihood of a given state leading to a goal state. Heuristic functions can be used to guide search with efficient guesses about the "goodness" of a state. more informed function A function h1 is more informed than a function h2 if for all non-goal nodes n, h2(n) > h1(n). monotonic cost function A cost function that never decreases along a path away from the initial state. Any nonmonotonic cost function can be converted to a monotonic one by setting the value of a node equal to the maximum value of a path to the node. A heuristic function is monotonic if it is locally consistent -- that is, it obeys the triangle inqeuality of cost. dynamic programming principle The best way through a place is the best way to it followed by the best way from it. effective branching factor A measure of the average number of branches explored by an algorithm from a typical node of the search space. If I(d) is the average number of nodes generated during a search of depth d, then limd->inf(I(d))^(1/d) is the effective branching factor. constraint satisfaction problem A problem in which there is a set of variables each of which has a range of possible values, and for which there are constraints on the values. The goal is to find values for all the variables that satisfy the constraints. More specifically, a set of n variables X1, ... Xn, each having a value in a corresponding domain R1, ..., Rn. A constraint Ci(Xi, ..., Xj) is a subset of the Cartesian product Ri1 x ... x Rij that specifies which variable values are compatible with one another. constraint graph CSPs can be represented as constraint graphs in which nodes represent variables and arcs connect pairs of variables that are constrained. ordered constraint graph A constraint graph where the nodes are linearly ordered to reflect the sequence of variable assignments set by the search algorithm. arc consistency A directed arc between two nodes is arc consistent exactly when, for any value of the variable corresponding to the first node, there is a value for the second that satisfies the problem constraints. Arc consistency is a directional concept. path consistency A path through a sequence of nodes is consistent exactly when there is a set of variable assignments such that there is simultaneous arc consistency between each pair of nodes in order in the sequence. d-arc-consistent Given an order d on a constraint graph R, R is d-arc-consistent if all edges directed along d are arc consistent. k-consistency If, when some k - 1 variables are given values satisfying their constraints, there exists a value for any remaining variable that satisfies all constraints among the k variables. futility If the estimated cost of a solution becomes greater than FUTILITY, abandon the search. credit assignment problem [Minsky, 1963] The problem of deciding which of a series of actions is actually responsible for a particular outcome. backjumping [Gaschnig, 1979] A backtracking scheme in CSPs. When a dead-end is hit, backjumping marks each value of the dead-end variable with the age of the oldest ancestor forbidding that value. It then jumps back to the youngest among these ancestors. Picking the youngest ancestor guarantees completeness. OPEN and CLOSED lists In most search techniques that explore graphs, it is necessary to record two kinds of nodes: the nodes we have seen but not explored, and the nodes we have seen and explored. The OPEN list is the former, and the CLOSED list is the latter. Generally, search proceeds by examining each node on the OPEN list, performing some expansion operation that adds its children to the OPEN list, and moving the node to the CLOSED list. Manhattan distance The sum of the vertical and horizontal offsets between two places in a grid. (So called because, in Manhattan, you can't fly directly from one place to another; you have to travel in an L-shape instead.) A standard heuristic in the Eight Puzzle. classes of search [from "Tweak" article] There are eight: no backtracking, explicitly represented alternatives, dependency-directed modification, chronological backtracking, dependency-directed backtracking, heuristic search, metaplanning, and protection (once a goal is achieved it stays achieved). 2. General Paradigms Generate and Test A generator makes hypotheses and a tester tests them. Good generators have three properties: completeness -- They eventually produce all possible solutions. non-redundant -- They never propose a solution more than once. informed -- They use information to limit the possibilities and hence the number of solutions proposed. DENDRAL uses the generate-and-test approach. Means-End Analysis This approach tries to minimize differences between the current state and the goal. It chooses a difference and then applies an operator to remove it. Sometimes the operator cannot be applied directly; in this case a subgoal is established to reach a state where it can. This kind of backward chaining is called operator subgoaling. Differences can be prioritized. In GPS [Newell, Shaw, and Simon, 1957], each rule had preconditions and a list of effects. A data structure called a difference table indexed the rules by the differences they could reduce. Bidirectional Search Simultaneously search from the initial state toward the goal and from the goal state back toward the initial state, until a common state is found along the frontier. Requires an explicit goal state and invertible operators (or backward chaining). Note that if a heuristic function is inaccurate, the two searches might miss one another. Iterative Deepening [Slate and Atkin, 1977] Invented for CHESS 4.5. Search the entire tree to a depth of one, evaluate the results, then repeat to depth of two, and so on. Previous iterations can be used to guide search (use the best result to date as the starting point of the next level's search in e.g., minimax). Important Issues in Search direction in which to search -- search forward through the state space, or backward from the goal matching -- production systems need efficient ways to match preconditions in their rules knowledge representation tree vs. graph 3. Brute-Force / Blind Search Methods Also called weak search methods, most general methods are brute-force because they do not need domain knowledge; however, they are less efficient as a result. All brute-force search algorithms must take O(bd) time, and use O(d) space. Breadth-First Search Generate nodes in the tree in order of their distance from the root. That is, all nodes at distance one, followed by all nodes at distance two, etc. The first path to a goal will be of shortest length. The corresponding data structure for storing nodes during search is a queue. Depth-First Search Follow one path deep into the tree until a goal is found or backtracking is required. It is reasonable when unproductive paths aren't too long. The corresponding data structure for storing nodes during search is a stack. DFS Iterative Deepening (DFID) Perform depth-first search to a bounded depth d, starting at d = 1 and increasing it by 1 each iteration. Depth-first iterative deepening is asymptotically optimal in terms of time and space among all brute-force search algorithms that find optimal solutions on a tree. Iterative deepening is an anytime algorithm in the sense that it can be stopped at any time and will produce the best move found so far. Iterative Broadening [Ginsberg] Rather than increasing the depth of the search, iterative broadening increases its breadth at every iteration. Thus on the first iteration only the first child of every node is expanded; on the next iteration both the first and second, and so forth. The intuition is that goal nodes are not usually randomly distributed in a tree, and so it is wasteful to explore an entire portion of a tree at once. British Museum Look everywhere until you find the solution. That is, BFS or DFS throughout the entire graph. Necessary on a finite graph where cost is an arbitrary value associated with arc lengths. 4. Heuristic Search Heuristic searches use some function that estimates the cost from the current state to the goal, presuming that such a function is efficient. (Generally speaking, heuristic search incorporates domain knowledge to improve efficiency over blind search.) Hill Climbing Looking at all of our operators, we see which one, when applied, leads to a state closest to the goal. We then apply that operator. The process repeats until no operator can improve our current situation (which may be a relative maximum, such as in the TSP). Problems with hill climbing: local maxima (we've climbed to the top of the hill, and missed the mountain), plateau (everything around is about as good as where we are), ridges (we're on a ridge leading up, but we can't directly apply an operator to improve our situation, so we have to apply more than one operator to get there). Solutions include: backtracking, making big jumps (to handle plateaus or poor local maxima), applying multiple rules before testing (helps with ridges). Hill climbing is best suited to problems where the heuristic gradually improves the closer it gets to the solution; it works poorly where there are sharp drop-offs. It assumes that local improvement will lead to global improvement. Steepest Ascent Hill climbing in which you generate all successors of the current state and choose the best one. These are identical as far as many texts are concerned. Branch and Bound Generally, in search we want to find the move that results in the lowest cost (or highest, depending). Branch and bound techniques rely on the idea that we can partition our choices into sets using some domain knowledge, and ignore a set when we can determine that the optimal element cannot be in it. In this way we can avoid examining most elements of most sets. This can be done if we know that a higher bound on set X is lower than a lower bound on set Y (in which case Y can be pruned). Example: Travelling Salesman Problem. We decompose our set of choices into a set of sets, in each one of which we've taken a different route out of the current city. We continue to decompose until we have complete paths in the graph. If while we're decomposing the sets, we find two paths that lead to the same node, we can eliminate the more expensive one. Best-first B&B is a variant in which we can give a lower bound on a set of possible solutions. In every cycle, we branch on the class with the least lower bound. When a singleton is selected we can stop. Depth-first B&B selects the most recently generated set; it produces DFS behavior but saves memory. Some types of branch-and-bound algorithms: A*, AO*, alpha-beta, SSS*, B*. Best-First Search Expand the node that has the best evaluation according to the heuristic function. An OPEN list contains states that haven't been visited; a CLOSED list contains those that have, to prevent loops. This approach doesn't necessarily find the shortest path. (When the heuristic is just the cost function g, this is blind search. When it's just h', the estimated cost to the goal, this is steepest ascent (I think -- POD). When it's g + h', this is A*. Beam Search Best-first search where the list of nodes under consideration is limited to the best n. "Beam" is meant to imply the beam of a flashlight wandering around the search space. A* [Nilsson] A kind of best-first search where the cost function f(n) = g(n) + h'(n), the actual cost of the path so far (g(n)), plus the estimated cost of the path from the current node to the goal (h'(n)). A* will always find an optimal path to a goal if the heuristic function is admissible; that is, if it never overestimates the distance to the goal. A* is optimal among heuristic searches in the sense that it will expand the fewest number of nodes, up to tie-breaking. If the heuristic function has constant absolute error (just when will this ever happen? -POD) the number of nodes expanded is linear in the solution depth. Graceful decay of admissibility: if h' rarely overestimates h by more than d, then the algorithm will rarely find a solution whose cost is more than d than the cost of the optimal solution. Iterative-Deepening A* A version of iterative deepening that keeps track of the A* heuristic evaluation function. As soon as the cost of exploring a path exceeds some threshold, that branch is cut off and search backtracks to the most recently generated node. The cost threshold starts with the heuristic estimate of the initial state and in each successive iteration increases to the minimum value that exceeded the previous threshold. IDA* is optimal if the heuristic is admissible. It is also faster and easier to implement than A*, because it is a DFS that does not need to maintain an OPEN list. B' A variant of A* that changes the heuristic evaluation function when it can be improved. This can happen when the heursitic value at a node is less than all descendents minus the length to each of them (so we can increase the length). It can also occur when all descendents have a better cost evaluation f than their parent, in which case the h' value is increased so that their f value equals the parent. B' can prove that it will not expand the same node more than O(n2) times, while A* may expand it an exponential number of times, though this is only relevant if the heuristic function is non-monotonic. Simulated Annealing The goal is to find a minimal energy state. The search descends except occasionally when, with low probability, it moves uphill instead. The probability of moving uphill decreases as the temperature of the system decreases, so such moves are much more likely earlier than later. Problems include: choosing an initial temperature, choosing the annealing schedule (the rate at which the system cools). Agendas An agenda is a list of tasks a system could perform. Each task has a list of justifications why it is proposed and a rating representing the overall weight of evidence suggesting it would be a useful task. The idea is that we should pay attention when several paths recommend the same action. Agendas are good for implementing monotonic production systems, and bad for nonmonotonic ones. Brute-Force Search Methods Space Optimal? Complexity Name Time Complexity BFS O(bd) O(bd) maybe optimal only when the optimal path is the shortest DFS O(bd) O(d) no - DFID O(bd) O(d) yes asymptotically optimal in both time and space among brute-force tree-searching algorithms Bidirectional O(bd/2) O(bd/2) yes assumes comparisons for common states can be done in constant time British Museum O(bd) O(bd) yes - Name Heuristic Search Methods Time Space Optimal? Complexity Complexity Comments Comments Hill Climbing N/A O(1) no needs mechanism for escaping from local minima Steepest Ascent N/A O(1) no needs mechanism for escaping from local minima Beam Search N/A O(1) no amount of space depends on size of OPEN list (size of beam) yes optimal if h'(n) never overestimates; a time bound of O(bd) is possible only for monotonic estimation functions (N the number of nodes) A* O(2N) O(bd) IDA* O(2N) O(d) yes as above; asymptotically optimal in terms of time and space over all heuristic search algorithms with monotonic estimation functions over tree search B' O(N2) O(bd) yes - yes will find optimal solution if cooling is slow enough and as time goes to infinity Simulated Annealing unbounded O(1) 5. Performance of Search Algorithms One or two comments on complexity. First, it's important to note in the above tables that in the case of BFS, the actual space complexity is O(bd+1), because in the worst case all of the nodes at the level below the goal node will be added to the queue before the goal node is found. A helpful general formula for doing more detailed analysis than asymptotic is this: 1 + b + b2 + ... + bd = (bd - 1) / (b - 1) In particular, this is the actual number of nodes explored in BFS through level d. In the case of iterative deepening this would a summation of this formula for i = 1 to i = d. 6. AND/OR Graphs In AND/OR graphs, nodes typically represent complete subproblems; the root node is the original problem to be solved, and the leaves are solved problems. Edges represent problem reduction operators, which decompose a problem into subproblems. If only one of the subproblems needs to be solved, the node is an OR node. If all of its subproblems must be solved in order to solve it, it is an AND node. A solution to an AND/OR graph is a subgraph that contains the root node, an edge from every OR node, and all the branches from every AND node, with only goal states as terminal nodes. Such graphs are most suitable for problems for which tree structures are the natural representation for the solution, as opposed to a simple path. Example: symbolic integration. The OR links represent the integrand (find some way to integrate the expression), while the AND links represent individual summands within the integrand, all of which must eventually be integrated. The solution required is a partiallyordered sequence of actions. The search algorithm AO* estimates the cost of the solution graphs rooted at various nodes and is guaranteed to find a cheapest solution if the estimates are optimistic. Rather than using OPEN and CLOSED lists, AO* uses a graph structure containing the nodes expanded so far. Each node points up to its immediate predecessors and down to its immediate successors. The nodes contain partial solutions, the heuristic estimate h' of the cost of a path to a set of solution nodes. (It is not possible to store g since there may be many paths to the node.) AO* is a two-step process. The first step is a top-down algorithm that marks the current, best partial solution. A nonterminal leaf node of this solution is chosen and expanded. The second step is a bottom-up, cost-revising, connector-marking step. The connectors that give the best estimate for a node are marked "best." Selecting the next node to expand isn't easy; one possibility is to select the node most likely to change the estimate of the best partial path solution graph. 7. Game Trees / Adversarial Search Games are a useful domain in which to study machine intelligence because they have clearly defined rules and goals, and highly-structured environments in which success or failure is clearly defined. Although Samuel argued that games were a good subject of study because they require limited knowledge, many standard testbeds (such as chess) are highly stylized in places (such as the opening and the endgame) and can beneficially use databases of stored patterns. Two-player turn-taking games can be represented as AND/OR trees. The root node is the initial situation, and the edges represent legal moves, alternating at each level between the player and the opponent. Leaf nodes are final positions that are wins, losses, or draws. From the perspective of the player, his moves emanate from OR nodes, since only one must be successful to achieve a win; meanwhile, his opponent's nodes are AND nodes, since the player must find a winning move for each response. The task in a two-player game is to choose a sequence of moves that forces a path that leads to a winning state. Solving a game tree means labeling each leaf node as a win, loss, or possibly draw. Labeling can be done recursively beginning with the leaf nodes (since their values can be determined externally) and backing up toward the root. This is usually done by a depthfirst algorithm that traverses the tree from left to right, but skips all nodes that cannot provide useful information (e.g., as soon as one successor of a node is a win, the node can be labeled a win). In most game trees, the tree is too deep, so moves are determined heuristically based upon a limited lookahead. Minimax Minimax is a depth-first, depth-limited search procedure, and is the prevaling strategy for searching game trees. Minimax searches down to a certain depth, and treats the nodes at that depth as if they were terminal nodes, invoking a heuristic function (called a static evaluation function) to determine their values. Assigning values recursively back up the tree, a player's node are assigned the largest (maximum) value among its successors, and an opponent's node is assigned the smallest (minimum) value among its successors. The problems involved in implementing minimax are when to cut off the recursive search and invoke the static evaulation function, and what evaluation function to use. Considerations include the number of plys explored, how promising the path is, how much time the computer has left to think, and how "stable" the configuration is. The Horizon Effect A potential problem in game tree search to a fixed depth is the horizon effect, which occurs when there is a drastic change in value immediately beyond the place where the algorithm stops searching. There are two proposed solutions to this problem, neither very satisfactory. Secondary Search One proposed solution is to examine the search space beyond the apparently best move to see if something is looming just over the horizon. In that case we can revert to the second-best move. Obviously then the second-best move has the same problem, and there isn't time to search beyond all possible acceptable moves. The Killer Heuristic This heuristic recommends that, if a good move for the opponent is found, the area of the tree beneath it should be examined early when considering the opponent's options. Alpha-Beta Pruning Intuitively, alpha-beta is an improvement over minimax that avoids searching portions of the tree that cannot provide more information about the next move. Specifically, alphabeta is a depth-first, branch-and-bound algorithm that traverses the tree in a fixed order (such as left to right) and uses the information it gains in the traversals to "prune" branches that can no longer change the minimax value at the root. Such cut off branches consist of options at game positions that the player will always avoid, because better choices are known to be available. Example. Suppose the left subtree of an OR node (a player's move) has value 3. This means the player can get at least a value of 3 by choosing the move leading to that tree. Now we begin to explore the right subtree, which consists of moves of the opponent. The first child we examine has value 2. This means that, if the player goes to that subtree, the opponent can make a move that results in a game value of 2. Knowing this, we can prune the rest of the right subtree, since we know it will have a value of 2 or worse (since the opponent's goal is to minimize the board value), and we already know the player can get a 3 by choosing the left subtree. Two variables are kept during the search. Alpha is the lower bound encountered so far, and beta is the upper bound. At maximizing levels (player's moves), only beta is used to cut off the search, and at minimizing levels only alpha is used. A number of refinements are possible to alpha-beta. One is waiting for quiescence (don't stop if the estimate changes drastically from one move to another; this avoids the horizon effect). Another is running a secondary search deeper along the chosen path to make sure there is no hidden disaster a few moves further along (this is called singular extension). Book moves can be used for openings and end games in many games where exhaustive search can be done in advance. Alpha-beta can also be extended to cut off search on paths that are only slight improvements; this is called futility cutoff. Alpha-beta is the most popular algorithm for searching game trees. B* [Berlinger, 1979] B* proves that an arc from the root of the search tree is better than any other. For each node expanded, it computes an upper and a lower bound. These two bounds converge as the search progresses, producing a natural termination of the search. The main strength of B* is that it can search branches that are not known to be best; no other known algorithms can do so, i.e., lower their optimistic bounds. (I'm unclear on what this means -- POD) During search, when the maximizing player can show that the pessimistic value of an arc is greater than or equal to the optimistic values of any other arcs, it can either raise the lower bound on the most optimistic node, or lower the upper bound on all other nodes. The important thing to note about B* is that it transfers control decisions (how deep to search, etc.) to the evaluation functions which now determine the effort limit by their crispness and ability to narrow the range between optimistic and pessimistic evaluations. B* can be used both for 1-person and 2-person (adversary) search. SSS* [Stockman, 1979] SSS* is a best-first search procedure that keeps upper bounds on the values of partially developed candidate strategies, choosing the best for further exploration. A strategy specifies one response of the player to each of the opponent's possible moves. The process continues until one strategy is fully developed, at which point it is the optimal strategy. SSS* is optimal in the average number of nodes examined in a two-player game, just as A* is for single-person search. It examines a (sometimes proper) subset of the nodes examined by alpha-beta. However, its superior pruning power is offset by the substantial storage space and bookkeeping required. SCOUT SCOUT evaluates a position J by computing the minimax value v of its first successor. It then "scouts" the remaining successors to see whether any produces a value higher than v, since testing this limited supposition is faster than evaluating the minimax value of each successor. If a successor passes this test, its minimax value is computed and used as v in subsequent tests. The best successor is then returned. 8. Performance of Game Searching Algorithms Every search strategy that evaluates a game tree must examine at least twice the square root of the number of nodes in the tree. This can be established by the following argument. Evaluating a game with value V amounts to showing that, no matter how the opponent reacts, the player can guarantee a payoff of at least V and, simultaneously, no matter what the player does the opponent can limit the payoff to V. Each of these two verification tasks requires the display of an adversary strategy producing value V. Since each strategy branches on every other move (since the opposing side will always pick the best move for it), the number of nodes in such a tree is roughly the square root of the number of nodes in the entire game tree. Thus twice that number must be explored to establish the value V for the game. This lower bound rarely occurs in practice, since we don't know in advance which strategies are "compatible." If no information is available regarding the benefits of impending moves, roughly the 3/4th-root of the number of nodes will be explored, on average. As move-rating information becomes more accurate, the number of nodes explored will approach the twice-square-root bound. Assuming a uniform b-ary tree searched to depth d, with values associated randomly with nodes at the frontier, it can be shown that the effective branching factor of alpha-beta pruning (and SCOUT and SSS*) is B = Xb / (1 - Xb) ~= b3/4 where Xb is the unique positive root of the equation xb + x - 1 = 0. Moreover, this is the best branching factor achievable by any game searching algorithm. Roughly, alpha-beta will search only b3/4 of the b moves available from each game position. Alternatively, this means that the search depth can be increased by a factor of log b / log B ~= 4/3 over an exhaustive minimax search. If the successors are perfectly ordered (i.e., to minimize search using alpha-beta), alphabeta examines 2bd/2 - 1 game positions. Thus, when B is the effective branching factor, we have 1. B = b for exhaustive search, 2. B ~= b3/4 for alpha-beta with random ordering, and 3. B = b1/2 for alpha-beta with perfect ordering. 9. Real-Time Algorithms In systems that have limited time to act, or in which new information is arriving during the search process (such as robots or vehicle navigation), it's necessary to interleave search and action. Some algorithms for real-time search are described below. Minmin [Korf] Minmin searches to a fixed depth determined by resources. The A* evaluation function (f(n) = g(n) + h'(n)) is used at the horizon, and search is moved to best frontier node. The value at the root is the minimum of the frontier values. Alpha Pruning A branch-and-bound extension of minmin. It improves efficiency by evaluating both interior nodes and frontier nodes. If the heuristic function is monotonic, then f (the evaulation function) is nondecreasing, so we can prune any path when its cost equals or exceeds the cost seen so far. This results in a dramatic performance improvement over minmin. Real-time A* [Korf] This algorithm produces an action every k seconds, where k depends upon the depth of the search horizon. Rather than simply repeating minmin search at each action (which could result in infinite loops) it records each action taken and its second-best successor; if the node is encountered again it looks up the heuristic estimate on the node rather than re-doing minmin. Backtracking occurs when the cost to go forward is greater than the estimated cost of going back to a previous state and proceeding from there to the goal state. RTA* will find a path to a solution if one exists and the search graph is completely accessible. The path will not necessarily be optimal, though RTA* will make locally optimal decisions. 10. Constraint-Satisfaction Problems In some search problems, there is no explicit goal state; rather, there is a set of constraints on a possible solution that must be satisfied. The task is not to find a sequence of steps leading to a solution, but instead to find a particular state that simultaneously satisfies all constraints. The approach is to assign values to the constrained variables, each such assignment limiting the range of subsequent choices. Even though the sequence is not of interest, the problem can still be regarded as a search through state space. Example: the eight-queens problem. The Eight-Queens Problem is to place eight queens on a standard chessboard in such a way that no two queens are attacking one another. The topology of a constraint graph can sometimes be used to identify solutions easily. In particular, binary CSPs whose constraint graph is a tree can be solved optimally in time O(nk2) where n is the number of variables and k is the number of values for each variable. Going from the leaves toward the root, we delete from each node the values that do not have at least one match for each of its successors. If any node ends up empty, there is no solution; otherwise, we trace any remaining value from the root down, and this produces a consistent solution. The most common algorithm for solving CSPs is a type of depth-first search called backtracking. The most primitive version assigns variables to values in a predetermined order, at each step attempting to assign a variable to the next value that is consistent with previous assignments and the constraints. If no consistent assignment can be found for the next variable, a dead-end is reached. In this case the algorithm goes back to one of the earlier variables and tries a different value. Backtracking and Thrashing The obvious approach is to assign variables in some order, then go back and change assignments when a conflict is detected. However, the run-time complexity of this approach is still exponential, and it suffers from thrashing; that is, search in different parts of the space keeps failing for the same reasons. The simplest cause of thrashing is node inconsistency, in which there is some possible value of a variable that will cause it to fail in and of itself; when it is instantiated it always fails immediately. This can be resolved by removing such values before search begins. Dependency-Directed Backtracking Since backtracking is used in many AI applications (solving CSPs, TMSs, PROLOG, etc.) there are a number of schemes to improve its efficiency. Such schemes, called dependency-directed backtracking, or sometimes intelligent backtracking [Stallman and Sussman, 1977], can be classified as follows: Lookahead Schemes These schemes control which variable to instantiate next or what value to choose among the consistent options. Variable ordering: This approach tries to choose a variable that will make the rest of the problem easier to solve. This is usually done by choosing the variable involved in the most constraints. Value ordering: A value is chosen that will maximize the number of options available for future assignments. Look-back Schemes These approaches control the decision of where and how to go back in case of dead-ends. There are two basic approaches: Go back to source of failure: Try to change only those past decisions that caused the error, leaving other past decisions unchanged. Constraint recording: Record the "reasons" for the dead-end so that they will be avoided in future search. Gashnig's "backjumping" [1979] is the best-known go-back scheme (q.v.). A simpler version jumps to the youngest ancestor constraining the dead-end variable. Dependency-directed backtracking is also used in truth-maintenance systems (Doyle's RMS, 1979). It works as follows. A variable is assigned some value, and a justification for that value is recorded (and it may be simply that there is no justification for any other values). Then a default value is assigned to some other variable and justified. At this point the system checks whether the assignments violate any constraints; if so, it records that the two are not simultaneously accpetable, and this record is used to justify the choice of some other variable. This continues until a solution is found. Such a system never performs redundant backtracking and never repeats computations. Preprocessing Constraint recording can be implemented by preprocessing the problem or by recording constraints as they are encountered during search. The most common approaches are arc consistency and path consistency. Arc consistency deletes values of variables that have no consistent matches in adjacent (i.e., directly connected) variables. Path consistency records sets of forbidden value pairs when they can't be matched at some third variable. Preprocessing for path consistency can be expensive; O(n3k3) operations, while many forbidden pairs would never actually be encountered. There are more efficient learning techniques that process constraints as the search is performed. Cycle Cutset [Dechter and Pearl, 1987] Another approach to improving backtracking performance. The goal is to identify a set of nodes that, when removed, leave a tree-structured (i.e., cyclefree) constraint graph. Once in tree form, the CSP can be solved in linear time. This gives an upper complexity bound on the complexity of CSPs -- if c is the size of some cycle cutset, and we instantiate the variables in the cutset first, then the complexity of the search is at most O(nkc), rather than the O(kn) associated with general backtrack search. Backtrack-Free Search Theorem [Freuder]: A k-consistent CSP having a width (k - 1) ordering admits a backtrack-free solution in that ordering. In particular, a graph of width 1 (i.e., a tree) that is arc consistent admits of backtrack free solutions; a graph of width 2 that is path consistent admits of backtrack free solutions. 11. Other Search Methods Island-Driven Search Search proceeds outward from islands in the search space; this form of search is usually from hierarchical planning. 12. Notable Search Programs Logic Theorist [Newell, Shaw, and Simon, 1956] The Logic Theorist was a joint production of the RAND Corporation and CMU, designed to prove theorems in propositional calculus. It was one of the first programs to rely heavily upon the use of heuristics to guide search. The Logic Theorist introduced the ideas of forward chaining and backward chaining in the proof procedure. The general algorithm it used was a blind, breadth-first search with backward reasoning. General Problem Solver (GPS) [Newell, Shaw, and Simon, 1957] The successor to the Logic Theorist research, GPS had two main goals: to design a machine that could solve problems requring intelligence, and to learn how human beings solved problems. Notable innovations in GPS were the clear distinction between problem-solving knowledge and domain knowledge and the introduction of means-ends analysis as a means of search. Though GPS was meant to be general-purpose, it largely could solve problems only in the domain of logic; later extensions by Ernst allowed to solve problems in areas such as resolution theorem proving, symbolic integration, and a variety of puzzles. ABSTRIPS [Sacerdoti, 1974] ABSTRIPS is an extension to the STRIPS system. It introduced the idea of hierarchical planning as a way to handle combinatorial explosion in the search space. The idea of hierarchical planning is to try to solve a problem in the most general terms, and then refine the plan to include details until eventually a fully-implementable plan is reached. More specifically, a simplified version of the problem is stated in a higher level problem space or abstraction space, and the detailed, implementable version in the ground space. This is obviously generalizable to more than two levels of hierarchy. Hierarchy was formed by giving each precondition on actions a criticality value. The hierarchy was defined in terms of the criticality levels; on the level of criticality n, all operators of criticality less than n were ignored. Search in ABSTRIPS used means-ends analysis, as GPS. The search strategy could be described as length-first, as a complete plan is formed at one level before being refined at a deeper level. 13. Sources Ronny Kohavi, AI Qual Note #7: Search Methods. Pearl and Korf, Search Techniques. Ginsberg, Introduction to Artificial Intelligence. Kumar, Algorithms for Constraint-Satisfaction Problems: A Survey. Feigenbaum and Cohen, Handbook of AI. See the AI Qual Reading List for further details on these sources.