Supporting Material: T0264P21_4

advertisement

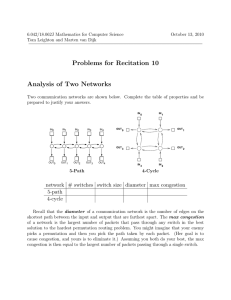

Materi Pendukung : T0264P21_4

Patrick Blackburn and Kristina Striegnitz

Version 1.2.4 (20020829)

4.1 From FSAs to RTNs

There is a very obvious practical problem with FSAs: they are not modular, and

can be very hard to maintain.

To see what this means, suppose we have a large FSA network containing a lot

of information about English syntax. However, as we have seen, FSAs are

essentially just directed graphs, so a large FSA is just going to be a large

directed graph. Suppose we ask the question: where is all the information about

noun phrases located in the graph? The honest answer is: it's probably spread

out all over the place. Usually there will be no single place we can point to and

say: that's what a noun phrase (or a verb phrase, or an adjectival phrase, or a

prepositional phrase, ...) is.

Note that this is not just a theoretical problem: it's a practical problem too.

Suppose we want to add more linguistic information to the FSA --- say more

information about noun phrases. Doing so will be hard work: we will have to

carefully examine the entire network, for all the possible places where the new

information might be relevant. The bigger the network, the harder it becomes to

ensure that we have made all the changes that are needed.

It would be much nicer if we really could pin down where all the information was,

and there is a simple way to do this. Instead of working with one big network,

work with a whole collection of subnetworks, one subnetwork for every category

(such as sentence, noun phrase, verb phrase, ...) of interest. And now for the first

important idea:

Making an X transition in one subnetwork can be done by traversing the X

subnetwork. That is, the network transitions can call on other subnetworks.

Let's consider a concrete example. Here's our very first RTN:

RTN1

    s:
                NP          VP
         -> 0 ------> 1 ------> 2 ->

    np:
                Det        N
         -> 0 ------> 1 ------> 2 ->

    vp:
                V           NP
         -> 0 ------> 1 ------> 2 ->

We also have the following lexicon, which I've written in our now familiar Prolog

notation:

word(n,wizard).

word(n,broomstick).

word(np,'Harry').

word(np,'Voldemort').

word(det,a).

word(det,the).

word(v,flies).

word(v,curses).

RTN1 consists of three subnetworks, namely the s, np, and vp subnetworks. And

a transition can now be an instruction to go and traverse the appropriate

subnetwork.

Let's consider an example: the sentence Harry flies a broomstick. Informally

(we'll be much more precise about this example later) this is why RTN1

recognizes this sentence. We start in the s subnetwork (after all, we want to

show it's a sentence) in state 0 (the initial state of subnetwork s). The word Harry

is then accepted (the lexicon tells us that it is an np) and this takes us to state 1

of subnetwork s.

But now what? We have to make a vp transition to get to state 2 of the s

subnetwork. So we jump to the vp network, and start trying to traverse that. The

vp network immediately lets us accept flies, as the lexicon tells us that this is a v,

and this takes us to state 1 of subnetwork vp.

But now what? We have to make an np transition to get to state 2 of the vp

subnetwork. So we jump to the np network, and start trying to traverse that. The

np subnetwork lets us accept a broomstick, so we have worked through the

entire input string. We then jump back to state 2 of the vp subnetwork --- a final

state, so we have successfully recognized a vp --- and then jump back to state 2

of the s subnetwork. Again, this is a final state, so we have successfully

recognized the sentence.

As I said, this is a very informal description of what is going on, and we need to

be a lot more precise. (After all, we are doing an awful lot of jumping around, so

we need to know exactly how this is controlled.)

But forget that for now and simply look at RTN1. It should be clear that it is far

more modular than any FSA we have seen. If we ask where the information

about noun phrases is located, there is a clear answer: it's in the np subnetwork.

And if we need to add more information about NPs, this is now easy: we just

modify the np subnetwork. We don't have to do anything else: all the other

subnetworks can now access the new information simply by making a jump.

So, from a practical perspective, it is clear that the idea of subnetworks with the

ability to call one another is a useful one: it helps us organize our linguistic knowledge

better. But once we have come this far, there is a further step that can be taken,

and this will take us to genuinely new territory:

Allow subnetworks to call one another recursively.

Let's consider an example. RTN2 is an extension of RTN1:

RTN2

    s:
                NP          VP
         -> 0 ------> 1 ------> 2 ->

    np:
                Det        N
         -> 0 ------> 1 ------> 2 ->
                               | ^
                            wh | | VP
                               v |
                                3

    vp:
                V           NP
         -> 0 ------> 1 ------> 2 ->

For our lexicon we take all the lexicon of RTN1 together with:

word(wh,who).

word(wh,which).

Note that this network allows recursive subnetwork calls. If we are in subnetwork

vp trying to make an np transition, we do so by jumping to subnetwork np. But if

we there make a transition from 3 to 2, this allows us to jump back to the vp

subnetwork. But then, we can jump back to the np subnetwork, and then back to

the vp, and so on ...

Now, this kind of recursion is linguistically natural: it's what allows us to generate

noun phrases like the wizard who curses the wizard who curses the wizard who

curses Voldemort. But obviously if we are going to do this sort of thing, we need

more than ever to know how to control the jumping around it involves. So let's

consider how to do this.

4.2 A Closer Look

How exactly do RTNs work? In particular, what information do we have to keep

track of when using an RTN in recognition mode?

Now, the first two parts of the answer are clear from our earlier work on FSAs. If

we want to recognize a string with an RTN, it is pretty clear that we are going to

have to keep track of (1) which symbol we are reading on the input tape, and (2)

which state the RTN is in. OK --- things are slightly more complicated for RTNs

as regards point (2). For example, it's not enough to remember that the machine

is in state 0, as several subnetworks may have a state called 0. But this is easy

to arrange. For example, ordered pairs such as (0,s) or (0,np) uniquely identify

which state we are interested in, so we simply need to keep track of such pairs.

But this (small) complication aside, things are pretty much the same as with

FSAs --- at least, so far.

But there is something else vital we need to keep track of:

which state we return to after we have traversed another subnetwork.

This is something completely new --- what do we do here?

This question brings us to one of the key ideas underlying the use of RTNs: the

use of stacks. Stacks are one of the most fundamental data structures in

computer science. In essence they are a very simple way of storing and

retrieving information, and RTNs make crucial use of stacks. So: what exactly is

a stack?

4.2.1 Stacks

A stack can be thought of as an ordered list of information. For example

a 23 4 foo blib gub 2

is a stack containing seven items. But what makes a stack a stack is that items

can only be added or removed from a stack from one particular end. This `active

end' --- the one where data can be added or removed --- is called the top of the

stack. We shall represent stacks as horizontal lists like the one just given, and

when we do this, the left most position represents the top of the stack. Thus, in

our previous example, `a' is the topmost item on the stack.

As I said, all data manipulation takes place via the top. Moreover, there are only two

data manipulation operations: push and pop.

The push operation adds a new piece of data to the top of a stack. For example,

if we push 5 onto the previous stack we get

5 a 23 4 foo blib gub 2

The pop operation, on the other hand, removes the top item from the stack. For

example, performing pop on the previous stack removes the top item from the

stack, thus giving us the value 5, and the stack is now as it was before:

a 23 4 foo blib gub 2

If we perform three more pops we get the value 4, and the following stack is left

behind

foo blib gub 2

Thus, stacks work on a `last in, first out' principle. The last thing to be added to a

stack (via push) is the first item that can be removed (via pop).

There is a special stack, the empty stack, which we write as follows

empty

We are free to push new values onto an empty stack. For example, if we push 2

onto the empty stack we obtain the stack

2

On the other hand, we cannot pop anything off the empty stack --- after all,

there's nothing to pop. If we try to pop the empty stack, we get an error.
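The stack operations just described can be sketched in Prolog. This is a minimal sketch, assuming we represent a stack as a Prolog list whose head is the top of the stack; the predicate names push/3, pop/3 and empty_stack/1 and the error term empty_stack are illustrative choices, not part of these notes.

```prolog
% A stack is represented as a list; the head of the list is the top.

% push(+Item, +Stack, -NewStack): add Item to the top of Stack.
push(Item, Stack, [Item|Stack]).

% pop(-Item, +Stack, -NewStack): remove the top item of Stack.
% Popping the empty stack raises an error (the error term is an assumption).
pop(Item, [Item|Stack], Stack).
pop(_, [], _) :-
    throw(empty_stack).

% empty_stack(?Stack): holds for the stack that contains no items.
empty_stack([]).
```

For example, the query `push(5, [a,23,4,foo,blib,gub,2], S1), pop(X, S1, S2)` binds X to 5 and S2 to the stack we started with, which is the `last in, first out' behaviour described above.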

4.2.2 Stacks and RTNs

Stacks are used to control the calling of subnetworks in RTNs. In particular:

1. Suppose we are in a network s, and suppose that traversing the
subnetwork s' would take us to state n in subnetwork s. Then we push the
state/subnetwork pair (n,s) onto the stack and try to make the traversal.
That is, the stack tells us where to return to if the traversal is successful.

For example, in RTN1, if we were in the vp network at node 1, we could
get to node 2 by traversing the np subnetwork. So, before we try to make
the traversal we push (2,vp) onto the stack. If the traversal is successful,
we return to state 2 in subnetwork vp at the end of it.

2. But how, and when, do we return? With the help of pop. Suppose we have
just successfully traversed subnetwork s'. That is, we have reached a final
state for subnetwork s'. Do we halt? Not necessarily. We first look at the
stack:

o If the stack is empty, we halt.

o If the stack is not empty, we pop the top state/subnetwork pair off the
stack and jump to it.

Because stacks work on the `last in, first out' principle, popping the stack
always takes us back to the relevant state/subnetwork pair.

That's the basic idea --- and we'll shortly give an example to illustrate it. But

before we do this, let's define what it means to recognize a string. A subnetwork

of an RTN recognizes a string if, when we start with the RTN in an initial

state of that subnetwork, with the pointer pointing at the first symbol on the input

tape, and with an empty stack, we can carry out a sequence of transitions and

end up as follows:

1. In a final state of the subnetwork we started in,

2. with the pointer pointing to the square immediately after the last symbol of

the input, and

3. with an empty stack.

Incidentally, in theoretical computer science, machines that use a stack in this

way are usually called pushdown automata, and it should now be clear why.
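The push/pop discipline and the definition of recognition given above can be sketched as a small Prolog program that carries the stack around explicitly. This is only a sketch under stated assumptions: the facts initial/2, final/2 and arc/4 are a hypothetical encoding of RTN1, the predicates accepts/2 and run/4 are illustrative names, return addresses are written as Node-Subnet pairs, and jump arcs are left out because RTN1 has none.

```prolog
% Hypothetical encoding of RTN1 (not given in this form in the notes).
initial(0, s).
initial(0, np).
initial(0, vp).

final(2, s).
final(2, np).
final(2, vp).

% arc(From, To, Label, Subnet)
arc(0, 1, np,  s).
arc(1, 2, vp,  s).
arc(0, 1, det, np).
arc(1, 2, n,   np).
arc(0, 1, v,   vp).
arc(1, 2, np,  vp).

word(n, wizard).
word(n, broomstick).
word(np, 'Harry').
word(np, 'Voldemort').
word(det, a).
word(det, the).
word(v, flies).
word(v, curses).

% accepts(+Subnet, +Words): start in an initial state of Subnet,
% at the first symbol of the tape, with an empty stack.
accepts(Subnet, Words) :-
    initial(Node, Subnet),
    run(Node, Subnet, Words, []).

% run(+Node, +Subnet, +Tape, +Stack)
% Halt: final state, whole tape read, empty stack.
run(Node, Subnet, [], []) :-
    final(Node, Subnet).
% Pop: final state of a subnetwork, so return to the pair on top of the stack.
run(Node, Subnet, Tape, [Node1-Subnet1|Stack]) :-
    final(Node, Subnet),
    run(Node1, Subnet1, Tape, Stack).
% Scan: the arc label is the category of the next word on the tape.
run(Node, Subnet, [Word|Tape], Stack) :-
    arc(Node, Next, Label, Subnet),
    word(Label, Word),
    run(Next, Subnet, Tape, Stack).
% Push: the arc label names a subnetwork; remember where to return to.
run(Node, Subnet, Tape, Stack) :-
    arc(Node, Next, Label, Subnet),
    initial(Start, Label),
    run(Start, Label, Tape, [Next-Subnet|Stack]).
```

With these facts the query `accepts(s, ['Harry', flies, a, broomstick])` succeeds, while `accepts(s, ['Harry', flies])` fails because the np transition in the vp subnetwork cannot be completed.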

4.2.3 An Example

Let's see how all this works. Consider the sentence Harry flies a broomstick.

Intuitively, this should be accepted by RTN1 (and indeed, RTN2) but let's go

through the example carefully to see exactly how this comes about.

So: we want to show that Harry flies a broomstick is a sentence. So we have

to start off (a) in an initial state of the s network, (b) with the pointer scanning the

first symbol of the input (that is, `Harry'), and (c) with an empty stack.

          Tape and Pointer            State     Stack           Comment

Step 1:   Harry flies a broomstick    (0,s)     empty
          ^
Step 2:   Harry flies a broomstick    (1,s)     empty
                ^
Step 3:   Harry flies a broomstick    (0,vp)    (2,s)           Push
                ^
Step 4:   Harry flies a broomstick    (1,vp)    (2,s)
                      ^
Step 5:   Harry flies a broomstick    (0,np)    (2,vp) (2,s)    Push
                      ^
Step 6:   Harry flies a broomstick    (1,np)    (2,vp) (2,s)
                        ^
Step 7:   Harry flies a broomstick    (2,np)    (2,vp) (2,s)
                                  ^
Step 8:   Harry flies a broomstick    (2,vp)    (2,s)           Pop
                                  ^
Step 9:   Harry flies a broomstick    (2,s)     empty           Pop
                                  ^

So: have we recognized the sentence? Yes: at Step 9 we are (a) in a final state

of subnetwork s (namely state 2), (b) scanning the tape immediately to the right

of the last input symbol, and (c) we have an empty stack.

This example illustrates the basic way stacks are used to control RTNs. We shall

see another example, involving recursion, shortly.

4.3 Theoretical Remarks

The ability to use named subnetworks is certainly a great practical advantage of

RTNs over FSAs --- it makes it much easier for us to organize linguistic data. But

it should be clear that the really interesting new idea in RTNs is not just the use

of subnetworks, but the recursive use of such subnetworks. And now for

two important questions. Does the ability to use subnetworks recursively really

give us additional power to recognize/generate languages? And if so, how much

power does it give us?

Let's answer the first question. Yes, the ability to use subnetworks recursively

really does offer us extra power. Here's a simple way to see this. As I

remarked in an earlier lecture, it is impossible to write an FSA that

recognizes/generates the formal language a^n b^n. Recall that this is the formal

language consisting of precisely those strings which consist of a block of as

followed by an equally long block of bs. That is, the number of as and bs must be

exactly the same (note that the empty string, ε, belongs to this language).

However, it is very easy to write an RTN that recognizes/generates this language.

Here's how:

    s:
                      #
         -> 1 ----------------> 4 ->
            |                   ^
          a |                   | b
            v                   |
            2 ----------------> 3
                      s

This RTN consists of a single subnetwork (named s) that recursively calls itself.

Let's check that this really works by looking at what happens when we recognize

aaabbb. (In this example I won't bother writing states as ordered pairs --- as there

is only one subnetwork, each node is uniquely numbered.)

           Tape and Pointer    State    Stack    Comment

Step 1:    aaabbb              1        empty
           ^
Step 2:    aaabbb              2        empty
            ^
Step 3:    aaabbb              1        3        Push
            ^
Step 4:    aaabbb              2        3
             ^
Step 5:    aaabbb              1        3,3      Push
             ^
Step 6:    aaabbb              2        3,3
              ^
Step 7:    aaabbb              1        3,3,3    Push
              ^
Step 8:    aaabbb              4        3,3,3
              ^
Step 9:    aaabbb              3        3,3      Pop
              ^
Step 10:   aaabbb              4        3,3
               ^
Step 11:   aaabbb              3        3        Pop
               ^
Step 12:   aaabbb              4        3
                ^
Step 13:   aaabbb              3        empty    Pop
                ^
Step 14:   aaabbb              4        empty
                 ^

So, after 14 steps, we have read all the symbols (the pointer is immediately to

the right of the last symbol), we are in a final state (namely 4), and the stack is

empty. So this RTN really does recognize aaabbb. And from this example it

should be pretty clear that this RTN can recognize/generate the language a^n b^n.

So RTNs can do things that FSAs cannot.

This brings us to the second question. We now know that RTNs can do more

than FSAs can --- but how much more can they do? Exactly which languages

can be recognized/generated by RTNs?

There is an elegant answer to this question: RTNs can recognize/generate

precisely the context free languages. That is, if a language is context free, you

can write an RTN that recognizes/generates it; and, conversely, if you can write

an RTN that recognizes/generates some language, then that language is context

free.

Now, we haven't yet discussed context-free languages in this course (we will

spend a lot of time on them later on) but we discussed them a little in the first

Prolog course, and you have certainly come across this concept before.

Basically, a context free grammar is written using rules like the following:

    S  -> NP VP
    NP -> Det N

Note that there is only ever one symbol on the left hand side of the -> symbol; this

is the restriction that makes a grammar `context free'. And a language is context

free if there is some context free grammar that generates it.

There is a fairly clear connection between context free grammars and RTNs.

First, it is easy to turn a context free grammar into an RTN. For example,

consider the following context free grammar:

    Rule 1:  S -> ε
    Rule 2:  S -> aSb

Rule 1 says that an S can be rewritten as nothing at all (the ε stands for the empty
string). Rule 2 says that an S can be rewritten as an a followed by an S followed by
a b. Note that this second rule is recursive: the definition of how to rewrite S
makes use of S.

If you think about it, you will see that this little context free grammar generates

the language a^n b^n discussed above. For example, we can rewrite S to aaabbb as
follows:

    S          rewrite using Rule 2
    aSb        rewrite using Rule 2
    aaSbb      rewrite using Rule 2
    aaaSbbb    rewrite using Rule 1
    aaabbb

And if you think about it a little more, you will see that this context free grammar

directly corresponds to the RTN we drew above:

1. The S corresponds to the name of the subnetwork (that is, s).

2. Each rule corresponds to a path through the s subnetwork from an initial
to a final node. The first rule (the ε rule) corresponds to the jump arc, and
the second rule (the recursive rule) corresponds to the recursive transition
through the network (that is, the transition where the s subnetwork
recursively calls itself).

OK --- I haven't spelt out all the details, but the basic point should be clear: given

a context free grammar, you can turn it into an RTN.
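The two rewrite rules can also be tried out directly in Prolog. The sketch below uses Prolog's definite clause grammar (DCG) notation, which is not introduced in these notes and is brought in here only as an illustration; the nonterminal s plays the role of S, and terminals are written as one-element lists.

```prolog
% Rule 1: S -> epsilon (an S can be rewritten as nothing at all).
s --> [].
% Rule 2: S -> aSb (an a, followed by an S, followed by a b).
s --> [a], s, [b].
```

The standard predicate phrase/2 checks whether a list of symbols can be derived from s: the query `phrase(s, [a,a,a,b,b,b])` succeeds, and `phrase(s, [a,a,b])` fails. Each recursive use of the second rule corresponds to one recursive call of the s subnetwork in the RTN drawn above.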

But you can also do the reverse: given an RTN, you can turn it into a context free

grammar. For example, consider the following context free grammar:

    S   -> NP VP
    NP  -> Det N
    VP  -> V NP
    N   -> wizard
    N   -> broomstick
    NP  -> Harry
    NP  -> Voldemort
    Det -> a
    Det -> the
    V   -> flies
    V   -> curses

This context free grammar corresponds in a very straightforward way to our very

first RTN. The symbols on the left hand side are either subnetwork names or

category symbols, and the right hand side is either a path through a subnetwork,

or a word that belongs to the appropriate category.

4.4 Putting it in Prolog

Although it's more work than implementing an FSA recognizer, it's not all that

difficult to implement an RTN recognizer. Above all, we don't have to worry about

stacks. Prolog (as you should all know by now) is a recursive language, and in

fact Prolog has its own inbuilt stack (Prolog's backtracking mechanism is stack

based). So if we simply write ordinary recursive Prolog code, we can get the

behavior we want.

As before, the main predicate is recognize, though now it has four arguments

recognize(Subnet,Node,In,Out)

Subnet is the name of the subnetwork being traversed, Node is the name of a

state in that subnetwork, and In and Out are a difference list representation of the

tape contents. More precisely, the difference between In and Out is the portion of

the tape that the subnetwork Subnet recognizes starting at state Node:

    recognize(Subnet,Node,X,X) :-
        final(Node,Subnet).

    recognize(Subnet,Node_1,X,Z) :-
        arc(Node_1,Node_2,Label,Subnet),
        traverse(Label,X,Y),
        recognize(Subnet,Node_2,Y,Z).

As in our work with FSAs, the real work is done by the traverse predicate. Most

of what follows is familiar from our work on FSAs, but take special note of the

last clause. Here traverse/3 calls recognize/4. That is, unlike with FSAs, these

two predicates are now mutually recursive. This is the crucial step that gives us

recursive transition networks.

    traverse(Label,[Label|Symbols],Symbols) :-
        \+(special(Label)).

    traverse(Label,[Symbol|Symbols],Symbols) :-
        word(Label,Symbol).

    traverse('#',String,String).

    traverse(Subnet,String,Rest) :-
        initial(Node,Subnet),
        recognize(Subnet,Node,String,Rest).

One other small change is necessary: not only jumps and category symbols need

to be treated as special, so do subnetwork names. So we have:

special('#').

special(Category) :- word(Category,_).

special(Subnet) :- initial(_,Subnet).

To be added: step through the processing of

Harry flies a broomstick.

Finally, we have some driver predicates. This one tests if a list of symbols is

recognized by the subnetwork Subnet.

    test(Symbols,Subnet) :-
        initial(Node,Subnet),
        recognize(Subnet,Node,Symbols,[]).

This one tests if a list of symbols is a sentence (that is, if it is recognized by the s

subnetwork). Obviously this driver will only work if the RTN we are working with

actually has an s subnetwork!

    testsent(Symbols) :-
        initial(Node,s),
        recognize(s,Node,Symbols,[]).

This one generates lists of symbols recognized by all subnetworks.

gen :- test(X,Subnet),

write(X),nl,

write('has been recognized as: '),write(Subnet),nl,nl,

fail.

Last of all, this one generates sentences, that is lists of symbols recognized by

the s subnetwork (assuming there is one).

    gensent :-
        test(X,s),
        write(X),nl,
        write('is a sentence'), nl,nl,
        fail.
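To try the recognizer out, it needs a network to work on. The following is a minimal self-contained sketch that combines the predicates from this section with a hypothetical encoding of RTN1; the initial/2, final/2 and arc/4 facts are assumptions about how the network would be stored, chosen to match the argument order used by recognize/4 and traverse/3 above.

```prolog
% Hypothetical encoding of RTN1 (assumed; the notes do not list these facts).
initial(0, s).
initial(0, np).
initial(0, vp).

final(2, s).
final(2, np).
final(2, vp).

% arc(From, To, Label, Subnet)
arc(0, 1, np,  s).
arc(1, 2, vp,  s).
arc(0, 1, det, np).
arc(1, 2, n,   np).
arc(0, 1, v,   vp).
arc(1, 2, np,  vp).

% Lexicon of RTN1.
word(n, wizard).
word(n, broomstick).
word(np, 'Harry').
word(np, 'Voldemort').
word(det, a).
word(det, the).
word(v, flies).
word(v, curses).

% Jumps, category symbols and subnetwork names are special labels.
special('#').
special(Category) :- word(Category, _).
special(Subnet)   :- initial(_, Subnet).

% recognize/4 and traverse/3 are mutually recursive.
recognize(Subnet, Node, X, X) :-
    final(Node, Subnet).
recognize(Subnet, Node_1, X, Z) :-
    arc(Node_1, Node_2, Label, Subnet),
    traverse(Label, X, Y),
    recognize(Subnet, Node_2, Y, Z).

traverse(Label, [Label|Symbols], Symbols) :-
    \+ special(Label).
traverse(Label, [Symbol|Symbols], Symbols) :-
    word(Label, Symbol).
traverse('#', String, String).
traverse(Subnet, String, Rest) :-
    initial(Node, Subnet),
    recognize(Subnet, Node, String, Rest).

% Driver: is Symbols recognized by the subnetwork Subnet?
test(Symbols, Subnet) :-
    initial(Node, Subnet),
    recognize(Subnet, Node, Symbols, []).
```

With these facts loaded, the query `test(['Harry', flies, a, broomstick], s)` succeeds and `test(['Harry', flies], s)` fails. Note that np is both a lexical category (for Harry and Voldemort) and a subnetwork name, so an np transition can be made either by the second clause of traverse/3 or by the last one.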

4.5 Exercises

1. Show what happens when we give the input The wizard curses the wizard

to RTN2. You should give all the steps, showing the tape and pointer, the

state, and the stack, just as was done in the text.

2. Show what happens when we give the input aab to our RTN for the

language a^n b^n. This string will not be accepted. You should give all

the steps, showing the tape and pointer, the state, and the stack, just as

was done in the text. Then say exactly why the string was not accepted.

3. Consider the following context free grammar:

    S -> ab
    S -> aSb

This generates the language a^n b^n \ {ε}. That is, it generates the set of all the
strings in a^n b^n except the empty string ε. Draw the RTN that corresponds
to this grammar, and give its Prolog representation.

4. Write down the context free grammar that corresponds to our second
RTN.