A DOCUMENT-BASED SOFTWARE TRACEABILITY TO SUPPORT SUHAIMI BIN IBRAHIM

advertisement

A DOCUMENT-BASED SOFTWARE TRACEABILITY TO SUPPORT

CHANGE IMPACT ANALYSIS OF OBJECT-ORIENTED SOFTWARE

SUHAIMI BIN IBRAHIM

UNIVERSITI TEKNOLOGI MALAYSIA

BAHAGIAN A – Pengesahan Kerjasama*

Adalah disahkan bahawa projek penyelidikan tesis ini telah dilaksanakan melalui

kerjasama antara ______________________ dengan ___________________

Disahkan oleh:

Tandangan

: …………………………………………

Nama

: …………………………………………

Jawatan

: …………………………………………

Tarikh: …………..

(Cop rasmi)

* Jika penyediaan tesis/projek melibatkan kerjasama.

============================================================

Bahagian B – Untuk kegunaan Pejabat Sekolah Pengajian Siswazah

Tesis ini telah diperiksa dan diakui oleh:

Nama dan Alamat Pemeriksa Luar

: Prof. Dr. Jubair Jwamear Al-Jaafar Alhadithi

: Department of Computer Science,

: Kulliyyah of Infor. and Comp. Technology,

: International Islamic University Malaysia,

: Gombak, Kuala Lumpur

Nama dan Alamat Pemeriksa Dalam I : Prof. Dr. Safaai bin Deris

: Jabatan Kejuruteraan Perisian,

: Fakulti Sains Komp. dan Sistem Maklumat,

: UTM, Skudai

Nama dan Alamat Pemeriksa Dalam II: Dr. Wan Mohd Nasir bin Wan Kadir

: Jabatan Kejuruteraan Perisian,

: Fakulti Sains Komp. dan Sistem Maklumat,

: UTM, Skudai

Disahkan oleh Penolong Pendaftar di Sekolah Pengajian Siswazah:

Tandatangan : …………………………………….. Tarikh: ……………

Nama

: ……………………………………..

A DOCUMENT-BASED SOFTWARE TRACEABILITY TO SUPPORT

CHANGE IMPACT ANALYSIS OF OBJECT-ORIENTED SOFTWARE

SUHAIMI BIN IBRAHIM

A thesis submitted in fulfilment of the

requirements for the award of the degree of

Doctor of Philosophy

Faculty of Computer Science and Information System

Universiti Teknologi Malaysia

MAY 2006

ii

iii

ALHAMDULILLAH ……

For my beloved parents,

my wife, Hjh. Aslamiah bt. Md Nor

and my children, Muhammad Nazri and Noor Aini

who have given me the strength and courage.

iv

ACKNOWLEDGEMENT

I would like to take this opportunity to thank my main supervisor, Dato’ Prof.

Dr. Norbik Bashah Idris for his encouragement, advice and inspiration throughout

this research. Special thanks go to my co-supervisor, Prof. Dr. Aziz Deraman, the

faculty Dean of Technology and Information Science, Universiti Kebangsaan

Malaysia for his constant support, technical guidance and constructive review of this

research work.

I am especially indebted to Prof. Malcolm Munro of Durham University,

United Kingdom, a renown expert in software impact analysis for his early support

through insightful ideas and constructive comments on the draft model and approach

to this research. At the beginning of this journey he guided and encouraged me

throughout my stay at Durham, giving me inspiring and fruitful experience in the

research area.

A great gratitude also goes to the Universiti Teknologi Malaysia for

sponsoring my three year PhD study and to MOSTI for funding the IRPA research

project. My thanks also go to all CASE (Centre For Advanced Software Engineering)

staff and individuals who have been involved directly or indirectly in the project.

Lastly, my appreciation also goes to post-graduate students of CASE, Universiti

Teknologi Malaysia, Kuala Lumpur for their participation in the controlled

experiment.

v

ABSTRACT

The need for software modifications especially during the maintenance phase,

is inevitable and remains the most costly. A major problem to software maintainers is

that seemingly small changes can ripple through the entire system to cause major

unintended impacts. As a result, prior to performing the actual change, maintainers

need mechanisms in order to understand and estimate how a change will affect the

rest of the system. Current approaches to software evolution focus primarily on the

limited scope of change impact analysis e.g. code. This research is based on the

premise that a more effective solution to manage system evolution can be achieved

by considering a traceability approach to pre-determine the potential effects of

change. The aim of this research is to establish a software traceability model that can

support change impact analysis. It identifies the potential effect to software

components in the system that does not lie solely on code but extends to other high

level components such as design and requirements. As such, in this research,

modification to software is therefore considered as being driven by both high level

and low level software components. This research applies a comprehensive static and

dynamic analysis to provide better impact infrastructures. The main research

contribution in this thesis can be seen in the ability to provide a new software

traceability approach that supports both top-down and bottom-up tracing. In further

proving the concept, some software prototype tools were developed to automate and

support the potential effects. The significant achievement of the model was then

demonstrated using a case study on a non-trivial industrial application software, and

evaluated via a controlled experiment. The results when compared against existing

benchmark proved to be significant and revealed some remarkable achievements in

its objective to determine change impacts.

vi

ABSTRAK

Keperluan

terhadap

pengubahsuaian

perisian

terutama

pada

fasa

penyenggaraan adalah suatu yang tidak dapat dielakkan dan masih melibatkan kos

yang tinggi. Permasalahan utama kepada penyenggara perisian ialah pindaan yang

nampaknya agak kecil boleh merebak ke seluruh sistem lalu menyebabkan impak

luar jangka yang besar. Lantaran itu, sebelum kerja penyenggaraan dilakukan,

penyenggara perlukan beberapa mekanisma untuk memahami dan menganggar

bagaimana pindaan akan menjejaskan bahagian lain dalam sistem. Pendekatan

semasa terhadap evolusi perisian lebih memfokuskan kepada skop analisa impak

pindaan yang terhad, contohnya kod sumber. Kajian ini berasaskan kepada landasan

iaitu penyelesaian efektif untuk menangani evolusi sistem boleh dicapai dengan

mengambilkira pendekatan jejakan bagi mengenalpasti terlebih dahulu impak

pindaan berpotensi. Tujuan kajian ini ialah untuk menghasilkan satu model jejakan

perisian yang boleh membantu menganalisa impak pindaan. Ia mengenalpasti kesan

berpotensi terhadap komponen perisian dalam sistem yang tidak terletak semata-mata

kepada kod tetapi meluas kepada komponen aras tinggi seperti rekabentuk dan

keperluan. Oleh itu dalam kajian ini, pengubahsuaian terhadap perisian boleh

diambilkira dengan melibatkan kedua-dua komponen perisian aras tinggi dan aras

rendah. Penyelidikan ini menggunakan analisa statik dan dinamik yang komprehensif

untuk menyediakan infrastruktur impak yang lebih baik. Sumbangan utama kajian

dalam tesis ini boleh dilihat dari segi keupayaan menyediakan satu pendekatan

perisian jejakan baru yang membantu kedua-dua jejakan atas-bawah dan bawah-atas.

Bagi membuktikan lagi pengesahan konsep, beberapa alatan prototaip perisian dibina

untuk mengautomasi dan membantu kesan berpotensi. Pencapaiannya yang

signifikan kemudian dipersembahkan dengan menggunakan satu kajian kes yang

merupakan satu aplikasi perisian berasaskan industri dan dinilai melalui ekperimen

terkawal. Keputusan yang diperolehi apabila dibandingkan dengan penanda aras

terbukti

ianya signifikan dan

memperlihatkan beberapa pencapaian yang

memberangsangkan dalam matlamatnya untuk mengenalpasti impak pindaan.

vii

TABLE OF CONTENTS

CHAPTER

1

TITLE

PAGE

DECLARATION

ii

DEDICATION

iii

ACKNOWLEDGEMENT

iv

ABSTRACT

v

TABLE OF CONTENTS

vii

INTRODUCTION

1.1 Introduction

1

1.2 Introduction to Software Evolution

1

1.3 Background of the Problem

4

1.3.1

Broader Maintenance Perspective

4

1.3.2

Effective Change Communication

5

1.3.3

Importance of System Documentation

6

1.3.4

Incomplete Maintenance Supported CASE tools

6

1.4

Statement of the Problem

7

1.5

Objectives of the Study

8

1.6

Importance of the Study

9

1.7

Scope of Work

9

1.8

Thesis Outline

11

2 LITERATURE REVIEW ON SOFTWARE EVOLUTION

AND TRACEABILITY

2.1

Introduction

13

viii

2.2

Software Evolution Models

13

2.3

Software Traceability in Software Engineering

16

2.3.1

Software Configuration Management

16

2.3.2

System Documentation

18

2.3.3

Requirements Traceability

21

2.3.4

Change Management Process

23

2.4

2.5

Review and Further Remarks on Software Evolution and

Traceability

27

Impact Analysis Versus Traceability

29

2.5.1

Definitions of Impact Analysis and Traceability

29

2.5.2

Vertical Traceability

32

2.5.2.1 Data Dependencies

32

2.5.2.2 Control Dependencies

33

2.5.2.3 Component Dependencies

33

Horizontal Traceability

34

2.5.3.1 Explicit Links

34

2.5.3.2 Implicit Links

35

2.5.3.3 Name Tracing

36

2.5.3.4 Concept Location

37

2.5.3

2.5.4

Review on Impact Analysis and Traceability

Techniques

2.6

Critiques on State-of-the-art Software Traceability

Approaches

41

2.6.1

The Comparison Framework

41

2.6.2

Existing Software Traceability Models and

Approaches

2.6.3

44

The Comparative Evaluation of Software

Traceability Modeling

2.7

38

Summary of the Proposed Solution

48

49

3 RESEARCH METHODOLOGY

3.1

Introduction

51

3.2

Research Design

51

ix

3.3

Operational Framework

52

3.4

Formulation of Research Problems

54

3.4.1

Need of Change Process Support

54

3.4.2

Establish Communications Within a Project

55

3.4.3

Understanding Requirements to Support

Software Evolution

3.5

55

Some Considerations for Validation Process

55

3.5.1

Supporting tools

56

3.5.2

Data Gathering and Analysis

57

3.5.3

Benchmarking

58

3.6

Some Research Assumptions

62

3.7

Summary

62

4 REQUIREMENTS TRACEABILITY MODELING

4.1

Introduction

64

4.2

Overview of Requirements Traceability

64

4.3

A Proposed Requirements Traceability Model

65

4.3.1 A Conceptual Model of Software Traceability

66

4.3.2 Dynamic and Static Analyses

67

4.3.3 Horizontal Links

68

4.3.4 Vertical Links

71

4.3.5 Program Dependencies

73

Defining Object-Oriented Impact Analysis

76

4.4.1

Ripple Effects of Program Dependencies

76

4.4.2

Defined Dependencies

79

4.4

4.5

Total Traceability of Artifact Links

80

4.6

Summary

81

5 DESIGN AND IMPLEMENTATION OF SOFTWARE

TRACEABILITY

5.1

Introduction

82

5.2

System Traceability Design

82

5.2.1

82

Software Traceability Architecture

x

5.2.2

CATIA Use Case

86

5.2.3

CATIA Class Interactions

87

5.3

CATIA Implementation and User Interfaces

102

5.4

Other Supporting Tools

105

5.4.1 Code Parser

105

5.4.2 Instrumentation Tool

106

5.4.3 Test Scenario tool

108

Summary

109

5.5

6 EVALUATION

6.1

Introduction

110

6.2

Evaluating Model

110

6.2.1

Hypothesizing Traces

111

6.2.2

Software Traceability and Artifact Links

114

6.3

6.4

6.5

6.6

6.7

Case Study

116

6.3.1

Outlines of Case Study

116

6.3.2

About the OBA Project

117

6.3.3

OBA Functionality

118

Controlled Experiment

122

6.4.1 Subjects and Environment

123

6.4.2 Questionnaires

123

6.4.3 Experimental Procedures

124

6.4.4 Possible Threats and Validity

125

Experimental Results

127

6.5.1 User Evaluation

127

6.5.2 Scored Results

134

Overall Findings of the Analysis

137

6.6.1 Quantitative Evaluation

138

6.6.2 Qualitative Evaluation

140

6.6.3 Overall Findings and Discussion

144

Summary

145

7 CONCLUSION

xi

7.1

Research Summary and Achievements

146

7.2

Summary of the Main Contributions

149

7.3

Research Limitation and Future Work

150

REFERENCES

152-166

APPENDICES

167 - 236

Appendix A – E

xii

LIST OF TABLES

TABLE NO.

TITLE

PAGE

2.1

An overview of the general SRS, SDD and STD documents

20

2.2

Sample form of PCR (MIL-STD-498, 2005)

23

2.3

A comparative study of traceability approaches

45

2.4

Existing features of software traceability approaches

49

3.1

Benchmark of AIS# and EIS# possibilities(Arnold and Bohner, 93) 59

3.2

Evaluation for impact effectiveness (Arnold and Bohner, 1993)

60

3.3

Effectiveness metrics

60

4.1

Software documents and corresponding artifacts

65

4.2

Program relations in C++

77

4.3

Classifications of artifact and type relationships

79

6.1

Summary of model specifications and used techniques

115

6.2

Cross tabulation of experience versus frequencies

127

6.3

Cross tabulation of previous jobs versus frequencies

128

6.4

Cross tabulation of previous jobs versus group distribution

128

6.5

Cross tabulation of experience versus group distribution

129

6.6

Cross tabulation of program familiarity versus group classification 129

6.7

Mean of previous jobs for groups

129

6.8

Mean of experience for groups

130

6.9

Mean of program familiarity for groups

130

6.10

Point average of group distribution

130

6.11

Mean of scores for impact analysis features

131

6.12

Results of bottom-up impact analysis

135

6.13

Results of top-down impact analysis

137

6.14

Results of artifact S-Ratio

138

6.15

Existing features of software traceability systems

141

xiii

C1

Summary of object oriented relationships

206

D1

A set of package-class references

231

D2

A set of method-class-package references

232

xiv

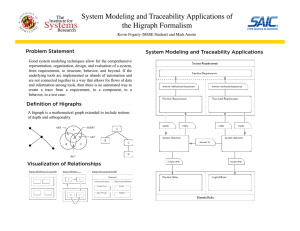

LIST OF FIGURES

FIGURE NO.

TITLE

PAGE

2.1

A Stage Model (Bennett and Rajlich, 2000)

15

2.2

MIL-STD-498 Data Item Descriptions

19

2.3

SADT diagram of software maintenance activities (Bohner, 1991) 25

2.4

SADT diagram of change process (Small and Downey, 2001)

26

2.5

The comparison framework

44

3.1

Flowchart of the operational framework

53

4.1

Conceptual model of traceability system

66

4.2

Hypothesizing traces

67

4.3

Traceability from the requirement perspective

69

4.4

System artifacts and their links

80

5.1

A view of software traceability architecture

83

5.2

Use Case diagram of CATIA system

86

5.3

CATIA class diagrams

88

5.4

CATIA sequence diagrams

89

5.5

First User Interface of CATIA

102

5.6

Primary artifacts at method level

103

5.7

Summary of the impacted artifacts

104

5.8

The Instrumented Code

107

5.9

Some ‘markers’ extracted from a class CruiseManager

108

5.10

The impacted methods of a class CcruiseManager

108

6.1

Hypothesized and observed traces

113

6.2

CSC relationships within a CSCI OBA

118

6.3

Easiness to Use in Overall

131

6.4

Easiness to Find SIS

132

6.5

AIS Support

132

xv

6.6

Cost Estimation Support

133

6.7

Traceability Support

133

6.8

Documentation Support

133

B1

primary option of requirement artifacts

179

B2

Potential effects and impact summary

180

B3

First CATIA interactive window

181

B4

Primary artifacts at method level

182

B5

Detailed results of secondary artifacts

183

B6

Summary of impacted artifacts by methods

184

B7

Impacted artifacts in a message window

185

B8

Primary artifacts at requirement level

186

B9

Summary of impacted artifacts by requirements

186

B10

A hyperlink between CATIA and documentation

187

C1

Table structures of recognizable tokens

189

D1

Start vehicle class diagram

208

D2

Set calibration class diagram

209

D3

Control cruising speed class diagram

210

D4

Request trip assistance class diagram

211

D5

Fill fuel class diagram

212

D6

Service vehicle class diagram

213

xvi

LIST OF ACRONYMS AND SYMBOLS

AIS

- Actual Impact Set

ANOVA

- Analysis of Variance

AST

- Analysis Syntax Tree

CASE

- Computer Aided Software Engineering

CBO

- Coupling Between Object Classes

CCB

- Change Control Board

CI

- Component Identification

CMM

- Capability Maturity Model

CSC

- Computer Software Components

CSCI

- Computer Software Configuration Item

CSU

- Computer Software units

DIF

- Documentation Integration Facility

DIT

- Depth of Inheritance tree

FSM

- Functional Specification Manual

GEOS

- Global Entity Operations System

HTML

- Hypertext Markup Language

IA

- Impact Analysis

LCOM

- Lack of Cohesion Metric

LOC

- Lines of code

MDD

- Model Dependency Descriptor

NOC

- Number of Children

OBA

- Automobile Board Auto Cruise

OMT

- Object Modeling Technique

OOP

- Object Oriented Programming

PCR

- Problem Change Report

PIS

- Primary Impact Set

xvii

RFC

- Response for a Class

RUP

- Rational Unified Process

SADT

- Structured Analysis Design Technique

SCM

- Software Configuration Management

SDLC

- Software Development Lifecycle

SDP

- Software Development Plan

SIS

- Secondary Impact Set

SPEM

- Software Process Engineering Meta Model

SPS

- Specification Product System

SRS

- Software Requirement Specification

STD

- Software Test Description

SUM

- Software User Manual

TRAM

- Tool for Requirement and Architectural Management

UML

- Unified Modeling Language

VG

- Values of complexity

WMC

- Weighted Methods per Class

XML

- Extensible Markup Language

xviii

LIST OF APPENDICES

APPENDIX

TITLE

APPENDIX A

Procedures and Guidelines of the

PAGE

Controlled Experiment

167-176

APPENDIX B

CATIA Manual and Descriptions

177-188

APPENDIX C

Code Parser

189-206

APPENDIX D

OBA System – The Case Study

207-234

APPENDIX E

Published papers

235-236

CHAPTER 1

INTRODUCTION

1.1

Introduction

This chapter provides an introduction to the research work presented in this

thesis.

It describes the research overview that motivates the introduction of a

document-based software traceability to support change impact analysis of objectoriented software. This is followed by a discussion on the research background,

problem statements, objectives and importance of the study.

Finally, it briefly

explains the scope of work and the structure of the thesis.

1.2

Introduction to Software Evolution

It is unanimously accepted that software must be continuously changing in

order for the software to remain relevant in use. The need for changing a software

system to keep it aligned with the users’ need and expectations has long been

recognized within the software engineering community. Due to inherent dynamic

nature of business application, software evolution is seen as the long term result of

software maintenance. In the current decade, the term software evolution is often

used as a synonym for software maintenance, broadly defined as modification of a

software product after delivery (IEEE, 1998a), and both software maintenance and

evolution assume changing the code as their basic operation.

2

The term software evolution lacks a standard definition, but some researchers

and practitioners use it as a preferable substitute for maintenance (Bennett and

Rajlich, 2000). In short, one could say that the evolution of a software system is the

results of observing the changes made to the system components over a long time

span. Consequently, the study of software evolution has aimed at analyzing the

process of continuous change to discover trends and patterns, such as for example,

the hypothesis that software evolution behaves as feedback processes (Lehman and

Ramil, 2000).

The maintenance process describes how to organize maintenance activities.

Kitchenham et al. (1999) identify a number of domain factors believed to influence

the maintenance process, namely i) maintenance activity type, ii) product, iii)

peopleware and iv) process organization. Maintenance activity type covers the

corrections, requirements changes and implementation changes. Product deals with

the size and product composition. Peopleware describes the skills and user requests.

Process organization manages the group and engineering resources that include

methods, tools and technology.

Software maintenance is basically triggered by a change request. Change

requests are typically raised by the clients or internal development staff for the

demand to do software modification. Traditionally, software modification can be

classified into maintenance types that include corrective, adaptive, perfective and

preventive.

Chapin et al. (2001) view software evolution in slightly different

perspective. They use the classification based on maintainers’ activity of mutually

exclusive software evolution clusters ranging from the support interface,

documentation, software properties and business rules. Each cluster is characterized

by some maintenance types. They conclude that different types of maintenance or

evolution may have different impact on software and business processes.

For whatever reason of modification it may be, the real world software

systems require continuous changes and enhancements to satisfy new and changed

user requirements and expectations, to adapt to new and emerging business models

and organizations, to adhere to changing legislation, to cope with technology

3

innovation and to preserve the system structures from deterioration (Canfora, 2004).

Webster et al. (2005) in their study on risk management for software maintenance

projects propose some taxonomy of risk factors that cover requirements, design,

code, engineering specialities and legacy. Some common risk factors identified are

incomplete specifications and limited understanding, high level complexity of the

required change, direct or indirect impacts on current system’s functionalities, and

inadequate test planning and preparation. The fact about software change is that the

changes made by user requirements and operations are propagated onto software

system (Bohner, 2002). These changes will require a substantial extension to both

the database and code. As change is the basic building block of software evolution,

software change is considered a key research challenge in software engineering

(Bennett and Rajlich, 2000).

A large portion of total lifecycle cost is devoted to introduce new

requirements and remove or change the existing software components (Ramesh and

Jarke, 2001). This intrinsically requires appropriate software traceability to manage

it. However, there are at least three problems observed by the investigation of the

current software traceability approaches.

First, most of the Computer Aided

Software Engineering (CASE) tools and applications more focus on the high level

software and yet are directly applicable to software development rather than

maintenance. While, the low level software e.g. code, is given less priority and very

often left to users to decide.

This makes the software change impact analysis

extremely difficult to manage at both levels. Secondly, there exists some research

works (Jang et al., 2001; Lee et al, 2000; Tonella, 2003) on change impact analysis

but the majority confine their solution at the limited space i.e. code, although more

evolvable software can be achieved at the meta model level. Finally, no proper

visibility being made by the ripple effects of a proposed change across different

levels of workproduct. If this can be achieved, a more concrete estimation can be

predicted that can support change decision, cost estimation and schedule plan.

The key point to the above solutions is the software traceability. Software

traceability provides a platform as to how the relationships within software can be

established and how the change impact can be implemented. All these issues require

4

an extensive study on the existing traceability and impact analysis in the existing

software system and research works before a new model and approach can be

decided.

1.3

Background of the Problem

Software traceability is fundamental to both software development and

maintenance of large systems. It shows the ability to trace information from various

resources that requires special skill and mechanism to manage it. In this research

problem, it focuses on software traceability to support change impact analysis.

Following are some major issues to the research problems.

1.3.1

Broader Maintenance Perspective

Many researchers have been working on the code-based maintenance for their

software evolution as mentioned earlier. This type of maintenance is more focused

but limited as it deals with a single problem, i.e. source code. However, managing

software change at a restricted level is not enough to appreciate the actual impacts in

the software system. Other levels of software lifecycle such as requirements, testing

and design should also be considered as they are parts of the software system. To

observe the impact at the broader perspective is considerably hard as it involves

software traceability within and across different workproducts.

Software workproducts refer to explicit products of software as appeared in

software documention. For instance, test plans, test cases, test logs and test results

are the workproducts of testing document. Architectural design model and detailed

design model are the workproducts of design document. Code is by itself a

workproduct of source code. Requirements and specifications are the workproducts

of software requirements specification document. Thus, at the broader perspective of

change impact, it observes the impact within and across different workproducts such

5

within code and from code to design, specification, etc. Within a workproduct, the

software components may be decomposed further into smaller elements, called

artifacts. For instance, code can be decomposed into classes, methods, attributes and

variables of software artifacts. In this thesis the term components and artifacts are

sometimes used interchangeably which refer to the same thing.

The fact about this traceability approach is that if the component relationships

are too coarse, they must be decomposed to understand complex relationships. On

the other hand, if they are too granular, it is difficult to reconstruct them into more

recognized, easily understood software components. However, it is perceived that

there should be some tradeoffs between these two granularities in order to observe

the ripple effects of change.

1.3.2

Effective Change Communication

There is a need to communicate and share information within software

development environment e.g. between software developers and management (Lu

and Yali, 2003). In Software Configuration Management (SCM) for example, the

Change Control Board (CCB) needs to evaluate change requests before the actual

change is implemented.

This requires CCB to consult other staff such as

maintainers, software engineers and project manager. A maintainer himself needs to

examine among other tasks how much and which part of the program modules will

be affected for change and regression testing, its complexity and what types of test

cases and requirements will be involved.

The software engineers need to examine the types of software components to

use and more critically to identify or locate the right affected software components

(Bohner, 2002). The software manager is more concerned about the cost, duration

and staffing before a change request is accepted. This certainly requires a special

repository to handle software components with appropriate links to various levels in

software lifecycle. Some of this information is not readily available in any CASE

6

tools that require the software development staff to manually explore the major

analyses in some existing software models and documentations.

1.3.3

Importance of System Documentation

To established organizations with software engineering practices in place,

software engineers always refer to system documentation as an important

instrumentation for communication (IEEE, 1998). The high level management finds

documentation very useful when communicating with the low level developers or

vice-versa. Despite this, many software engineers are still reluctant to make full use

of the system documentation particularly to deal with software evolution (Nam et al.,

2004). To them, documentation is abstract, seldom up-to-date and time consuming.

However, many still believe that documentation is more significant if the software it

documents is more visible and traceable to other parts of software components. For

example, within documentation the user can visualize the impacted requirements and

design classes in the database repository.

1.3.4

Incomplete Maintenance Supported CASE Tools

Many CASE (Computer Aided Software Engineering) tools that exist today

are aimed at addressing the issues of software development rather than software

maintenance (Pressman, 2004).

Some claim that their tools can support both

software development and maintenance, however their applications are mainly

centered around managing, organizing and controlling the overall system

components, very few focus on the impact analysis of change requests. Deraman

(1998) relates the current CASE tools as applications that do provide special upfront

consistency checking of software components during development but tend to ignore

its equally important relationships with code as it proceeds towards development and

maintenance. The traceability relationships between code and its upper

7

functionalities are not explicitly defined and very often left to software engineers to

decide.

From the above scenarios, there is a need to integrate both the high level and

low level software abstracts such that the effects of component traceability can be

applied in the system thoroughly. Two main issues need to be addressed here firstly,

the software traceability within a software workproduct and secondly, the traceability

across many workproducts.

Software traceability in this context reflects the

underlying infrastructures of ripple effects of change that attempts to incorporate

both the techniques and models in implementing a change impact.

1.4

Statement of the Problem

This research is intended to deal with the problems related to requirements

traceability for change impact analysis as discussed in Section 1.2.

The main

question is “How to produce an effective software traceability model and approach

that can integrate the software components at different component levels to support

change impact analysis of software maintenance?”

The sub questions of the main research question are as follows:

i.

Why the current maintenance models, approaches and tools are still not

able to support potential effects of change impact in the software system?

ii.

What is the best way to capture the potential effects of software

components in the system?

iii.

How to measure the potential effects of a proposed change?

iv.

How to validate the usefulness of software traceability for software

maintenance?

Sub question (i) will be answered via literature reviews in Chapter 2. This

chapter will provide a special attention to explore the software evolution, its models

and traceability issues.

From the impact analysis perspective, this chapter will

8

present a study on the detailed traceability process, the techniques and existing tools

used. The strengths and drawbacks are drawn based on a comparison framework in

order to propose a new model and approach to support change impact analysis.

The above study provides some leverage to answer the sub question (ii).

Chapter 3 describes a design methodology and evaluation plan before the research is

carried out. Sub question (iii) will be counter balanced by a solution to measure the

potential effects. The sub questions (ii) and (iii) will be further explained in the

traceability modeling and implementation as described in Chapter 4 and 5. Lastly,

sub question (iv) leads to the evaluation of the model and approach quantitatively

and qualitatively as described in Chapter 6.

1.5

Objectives of the Study

The above problem statement serves as a premise to establish a set of specific

objectives that will constitute major milestones of this research.

To this end, the objectives of this research are listed as follows

1) To build a new software traceability model to support change impact

analysis that includes requirements, test cases, design and code.

2) To establish a software traceability approach and mechanism that cover

features including artifact dependencies, ripple effects, granularity and

metrics.

3) To develop software supporting tools to support the proposed model and

approach.

4) To demonstrate and evaluate the practicability of the software traceability

model and approach to support change impact analysis.

9

1.6

Importance of the Study

Software maintenance is recognized as the most expensive phase of the

software lifecycle, with typical estimate of more than sixty percent of all effort

expended by a development organization, and the percentage continues to rise as

more software is produced (Han, 2001).

As software changes are introduced,

avoiding defects becomes increasingly labor intensive. Due to lack of advanced

technologies, methods and tools, doing software modification has been difficult,

tedious, time consuming and error prone. Software maintainers need mechanisms to

understand and solve maintenance tasks e.g. how a change impact analysis can be

made for a software system.

Bohner and Arnold (1996) describes a benefit of change impact analysis as

…by identifying potential impacts before making a change, we can greatly reduce

the risks of embarking on a costly change because the cost of unexpected problems

generally increases with the lateness of their discovery.

Clearly, the software change impact is an important activity that needs to be

explored in order to improve software evolution and traceability is seen as a core

infrastructure to support impact analysis.

1.7

Scope of Work

Software traceability can be applied to some applications such as

consistency-checking (Lucca et al., 2002) defect tracking (McConnel, 1997), cross

referencing (Teng et al., 2004) and reuse (Ramesh and Jarke, 2001). The techniques

and approaches used may differ from one another due to different objectives and

feature requirements.

Some of these approaches are geared toward system

development while others are designed for system evolution.

10

In this scope of research, it needs to explore a software traceability

specifically to support a change impact analysis within which it should be able to

capture the impacts of change requests. The models and techniques used should

allow the implementation of impacts across different workproducts. It needs to

capture the software knowledge from the latest correct version of a complete system

prior to implementation. It is assumed that a new traceability approach needs to

develop some reverse engineering tools if ones are not available in the research

community to support and simplify the capturing process.

The term a complete system here may refer to a very large scope as it may

govern all the software models including the business model, specification, high

level design, documentation, code, etc. These models are comprised within the

software lifecycle of software specification, design, coding and testing. However,

for the sake of research and implementation, this work will focus on some relevant

information that includes a set of functional requirements, test cases, design and code

as follows. These software components or artifacts are seen to be the software

development baseline (MIL-STD-498, 2005).

Software development baseline

reflects the software products that are used and confined to the internal development

staff rather than the external users or clients to support software evolution. Thus, this

baseline model is chosen to represent a smaller scope of a large complete system.

The new work should be derived from a set of system documentation

adhering to a certain software engineering standard e.g. MIL-STD-498 (MIL-STD498, 2005).

Nevertheless, it should not be tied up with the documentation

environment as the main focus is not on the traceability and impact in system

documentation but rather to its information contents. With simple interface (not

within this scope) it should allow system documentation to view the traceable

software components as a result of implementing software traceability system.

In this research approach, the scope is decided on object-oriented system to

address the change impact analysis. It should be noted that this research is not

concerned with correcting the software components but only reporting them. The

11

software engineers or maintainers should consider these results as an assistance to

facilitate their initial prediction of change impact.

1.8

Thesis Outline

This thesis covers some discussions on the specific issues associated to

software traceability for impact analysis and understanding how this new research is

carried out. The thesis is organized in the following outline.

Chapter 2: Discusses the literature review of the software evolution and

traceability. Few areas of interest are identified from which all the related

issues, works and approaches are highlighted. This chapter also discusses

some techniques of impact analysis and software traceability. Next, is a

discussion on some existing models and approaches by making a comparative

study based on a defined comparison framework. This leads to improvement

opportunities that form a basis to develop a new proposed software

traceability model.

Chapter 3: Provides a research methodology that describes the research

design and formulation of research problems and validation considerations.

This chapter leads to an overview of data gathering and analysis including

benchmarking. It is followed by some research assumptions.

Chapter 4:

Discusses the detailed model of the proposed software

traceability for impact analysis.

A set of formal notations are used to

represent the conceptual model of the software traceability. It is followed by

some approaches and mechanisms to achieve the model specifications.

Chapter 5: Presents the design and functionality of some developed tools to

support the software traceability model. This includes the implementation of

the design and component tools.

12

Chapter 6: The software traceability model is evaluated for its effectiveness,

usability and accuracy. The evaluation criteria and methods are described

and implemented on the model that includes modeling validation, a case

study and experiment. This research performs evaluation based on

quantitative and qualitative results. Quantitative results are checked against a

benchmark set forth and qualitative results are collected based on user

perception and comparative study made on the existing models and

approaches.

Chapter 7: The statements on the research achievements, contributions and

conclusion of the thesis are presented in this chapter. This is followed by the

research limitations and suggestions for future work.

CHAPTER 2

LITERATURE REVIEW ON SOFTWARE

EVOLUTION AND TRACEABILITY

2.1

Introduction

This chapter presents the state-of-the-art approaches in software evolution

and software traceability. It begins with an overview of the prominent models and

approaches in software evolution followed by the recent uses of software traceability.

It presents the concept of change process that includes the change requests, ripple

effects and definition of both impact analysis and traceability. It also presents some

techniques used in software traceability and impact analysis, and discusses how both

are related to one another. Next, a summary of comparative study on the existing

software traceability models based on some predefined criteria and comparison

framework.

Finally, it presents a proposed model and approach of software

traceability to support change impact analysis.

2.2

Software Evolution Models

Currently, there is no standard view and generally accepted definition on the

term software evolution and this has led both software evolution and maintenance

often used interchangeably (Bennett and Rajlich, 2000; Chapin et al., 2001). For that

reason, instead of reviewing the terminology definitions, the author will summarize

14

views and comments of other researchers and elaborate their own view on software

evolution.

Perry (1994) views software evolution as a well-engineered software system

that consists of three inter-related ingredients: the domains, experiences and process.

The domains are the real world environments made of objects and processes. It is

this model that is the abstraction basis for system specification which can be turned

into an operational system.

The business policies, government regulations and

technical standards are some examples of the real world environment within the

context of software system. Experience is the skill gained from the implemented

software system such as feedback, experimentation and accumulation of knowledge

about various aspects relevant to the system. Feedback provides corrective action,

while experimentation is to create information for the sake of understanding, insight

and judgement. The process is a means of interaction in building and evolving the

software system using methods, technologies and organizations. While, a method

may include the techniques and tools, technology supports the automation and

organization has to make sure that the quality, standards and practices are followed.

These three dimensions are considered the fundamental sources of system evolution

as they are intrinsically evolving themselves.

Bennett (1996) views the software evolution in slightly different way as a

model consisting of three main levels; the organization, process and technology.

The first level focuses on how the software evolution meets the organizational goals

and needs within a defined time scale and budget constraint. The second level relates

to a set of activities for software evolution including initial requests, change

assessment, cost-estimation and change impact analysis. The third level refers to

some technologies used to support the process that include automated tools, methods

and languages.

Bennett and Rajlich (2000) classify the software revolution into a staged

model that ranges from the initial development, iterative evolution, iterative

servicing through phaseout and closedown (Figure 2.1). During the initial

development, the system is built as a first complete version by the software team

15

expertise that meets the system architectures and specifications. As the initial

development is successful, the software enters the iterative evolution stage.

Initial

Development

evolution changes

first running version

Evolution

loss of evolvability

servicing patches

Servicing

servicing discontinued

Phase-Out

Switch-off

Close-Down

Figure 2.1: A staged model (Bennett and Rajlich, 2000)

At this stage, evolution may respond to demand for additional functionalities

and competitive pressures either from the clients or internal development staff for the

need to do modification. As the software evolves over time, servicing stage may

expect no major changes with minimum involvement of domain or software

engineering experts. This situation occurs when the major changes become too

burden and expensive, thus the software evolution is said to reach the saturation

stage or is considered aging. Finally, the software system that becomes less useful in

its environment and costly to maintain will enter the phaseout and closedown stage.

The need and demand for continued change based on traditional maintenance

classification such as corrective, adaptive, perfective and preventive cannot be

avoided if such software is to remain operationally satisfactory.

The effort to

classify software evolution and maintenance by Lehman and Ramil (2002) brings

16

another useful view to software evolution model. They classify a model of evolution

as consisting of five incremental steps.

i) Initial development with complete operation

ii) Improvement on versions, release or upgrades

iii) Application that supports a wide range of co-operative system services

iv) Process that refers to the aggregate of all activities useful to the above

evolution.

v) Mastery of software evolution process

According to Lehman and Ramil (2002), to truly master software evolution

one must understand and master the evolutionary properties of all those entities

individually and collectively. Based on their findings, software traceability brings

the highest value on both software and business processes.

2.3

Software Traceability in Software Engineering

The following sections discuss thoroughly the areas related to software

traceability and change environment as coined in the first chapter. These areas

include

the

software

configuration

management,

system

documentation,

requirements traceability and software change management.

2.3.1

Software Configuration Management

As software system becomes larger and more complex, numerous corrections,

extensions and adaptations tend to be more chaotic and unmanageable. The

traditional way of addressing the maintenance task individually is no longer

practical. It needs a special management system, called the Software Configuration

Management (SCM) that covers the procedures, rules, policies and methods to

handle the software evolution (IEEE, 1998b). SCM has been identified as a major

part of a well defined software development and maintenance task (Pressman, 2004).

17

SCM deals with controlling the evolution of complex software systems that supports

version controls and administrative aspects such as to handle change requests, and to

perform changes in a controlled manner by introducing well-defined processes

(Estublier et al, 2004). The IEEE Standard 1219-1998 for software maintenance

(IEEE, 1998a) highlights the major functions of SCM (or CM for short) as

•

Identification - CM needs to identify the Configuration Items (CIs) that represent

the collection of components and artifacts related to a particular project.

•

Change Management - Change management is the process of managing the

change

request,

its

evaluation,

change

approval

or

disapproval

and

implementation of changes.

•

Status Accounting - This task is required to record statistics, to examine the

status of a product, and to easily generate reports about all aspects of the product

and process.

•

Auditing and Review – To ensure that the change was properly implemented one

can rely on some information gathered from the technical review and

configuration audit.

All these tasks are not taken to be mutually exclusive. It requires SCM to

provide traceability and mechanisms to support procedures and controls over the

shared CIs. The CIs may include all those components composing a software system

such as the requirements, specifications, source codes, test cases and design models.

The change management and identification functions play an important role in SCM

as not only to respond to the need for change but also to serve a more general

purpose in the effective management of the system evolution.

Large number of SCM researchers have been working on version controls

(Walrad and Strom, 2002; Nguyen et al., 2004; Cui and Wang, 2004) while others on

system hierarchies (Wei et al., 2003; Hagen et al, 2004; Janakiraman et al., 2004) and

change management (Small and Downey, 2001; Brown et al., 2005; Keller et al.,

2004). Wei et al. (2003) takes a step further emphasizing on meta-model to express

configuration items, their relationships and evolvement for complex software product

18

structures. Dorrow (2003) designs and implements a configurable middleware

framework for distributed systems. It can address several configuration facets such as

fault tolerance, security, power usage and system performance.

Desai (2003) takes an initiative to support extensibility, reusability and

verifiability by designing a configuration description language to model CM on

heterogeneous cluster environment. This interface incorporates the rapidly changing

configurations at the client sites into server configurations for internal working

administration. The Eclipse (Brand et al., 2003) and ClearCase (White, 2000) are

tools of database management system for program modules, supporting multi version

software relationships among software components, configuration management, and

access control protection.

The study on the current SCM approaches and tools reveals that change

process is part of SCM tasks. However, the existing change management function

emphasizes more on the check-in, check-out procedures on CIs, authentication and

version controls. Least, or almost no effort is taken to determine the traceability and

ripple effects of change within CIs.

2.3.2

System Documentation

System documentation is a software product and a part of software

engineering knowledge (Sommerville, 2004).

System documentation is vital to

software maintainers who need to understand software thoroughly before making any

changes. A maintainer needs to refer to documentation and its standard in order to

understand the cause and effect of changes. Regardless of the software process being

adopted, developers working on a large-scale software project produce a large

variety of software documents typically include requirements, business plan, design

specifications, source code, test plans, bug reports, etc.

19

There are few documentation standards available in software development

such as the IEEE Standard 830-1998 and MIL-STD-498. Different standard may

differ from the other in terms of format templates and structures, although its content

wise could be relatively the same.

The IEEE Recommended Practices have

established standards for three main software documents, namely the Software Test

Document - IEEE Std 829-1998 (IEEE, 1998c), Software Requirements

Specification - IEEE Std 830-1998 (IEEE, 1998d) and Software Design Description IEEE Std 1016-1998 (IEEE, 1998f). MIL-Std-498 is made available for twenty two

types of documentation (i.e. DIDs) at all phases of software life cycle. The general

structure of the software process with DIDs is shown in Figure 2.2. The figure

shows that each phase of software development lifecycle is supported by some

documents e.g. the Plan phase is supported by the SDP, SIP, STRP and the Concept

or Requirement phase is supported by four other documents and so forth.

MIL-STD-498 Data Item Descriptions (DIDs)

Plans

SDP

SIP

STRP

Concept/

Requirements

OCD

SSS

SRS

IRS

Design

SSDD

SDD

DBDD

IDD

Qualification

Test

Products

STP

STD

STR

User/

Operator

Manual

Support

manual

SUM

SCOM

SIOM

COM

CPM

FSM

Software

SPS

SVD

Figure 2.2 : MIL-STD-498 Data Item Descriptions

From investigation, IEEE Standard and MIL-STD-498 Standard (including

IEEE/EIA 12207 compliance) possess three documentation standards in common i.e.

Software Requirements Specification (SRS), Software Design Description (SDD)

and Software Test Document (STD). The descriptions of these documents can be

illustrated as in Table 2.1.

In general, the software development environments are supposed to provide

tools to support software documents. Several works have applied hypertext

technology to improve software documents and their relationships. SLEUTH

(Software Literacy Enhancing Usefulness to Humans) supports an on-line

20

documentation environment (French et al., 1997). It provides an environment for

software documentation management consisting both an authoring and a viewing

environment. The environment applies FrameMaker that integrates hypertext to

cross-referenced filter configuration files. In SLEUTH, system documentation is

maintained as a hypertext with typed links to other associated documents and the

links are always updated.

Table 2.1: An overview of SRS, SDD and STD documents

Document Types

Overview

SRS document describes recommended approaches for the

Software Requirements

software requirements. The purpose is to explain the scope

Specification

of the project, references made to other standards,

(SRS)

definitions of specific terms used, background information

and essential parts of the system.

SDD document describes the necessary design information

including high level and low level designs. The practice is

Software Design Description

not

limited

to

specific

methodologies

for

design,

(SDD)

configuration management or quality assurance. It can be

applied to paper documents, automated database, design

description languages or other means of description.

STD document describes a set of basic software test

information that covers test plan, test specification and test

Software Test Document

report. Test plan prescribes the scope, approach, resource

(STD)

and schedule of the testing activities. Test specification

covers the test cases, test procedures and input/output

assumptions. Test report identifies the test items, test log

and summary report.

CHIME (Customizable Hyperlink Insertion and Maintenance) extends the

hypertext capability by integrating relationships between documents and code

(Devandu et al., 1999). It inserts HTML tags and links in the source code by

querying a database produced by the existing static analysis tools. SC (Software

Concordance) is similar to CHIME but is designed to manage versioning system i.e.

it can keep and update the links as evolution is made to the code and documents

21

(Nguyen et al., 2004). Hartmann et al. (2001) employs an approach to document

software system based on XML and View II tool in reverse engineering environment.

It focuses on the use of Specific Document Type Definitions (DTD) as a standard for

software documentation. Sulaiman (Sulaiman, 2004) employs a DocView to visualize

and re-document some structural information from code.

In the above discussion, some attempt to associate documentation to other

software entities e.g. code, while others attempt to support better visualization within

documentation environment. Each work may use different approach of software

traceability to support its own application. However, it is observed that no proper

attempt has been made to associate traceability between high level and low level

components such from requirements to low level design and code. It is anticipated

that the existing information in the SRS, SDD and STD needs to be recaptured and

reorganized into an appropriate repository in order to provide a new need for

traceability mechanism. Due to component dependencies that would complicate the

matter, a special attention is required to retranslate and transform all the related

sources into a special database repository. This repository then needs an appropriate

mechanism to implement change impact analysis.

2.3.3

Requirements Traceability

There is a need to relate one model to another that constitutes traceability e.g.

from design to code. Ramesh (2001) relates traceability as the ability to trace the

dependent items within a model and the ability to trace the corresponding items in

other models. Research on software traceability has been widely explored since the

last two decades that supports many applications such as regression testing (Jang et

al., 2001), change impact (Lee et al., 2001) and visualization (Bohner and Gracanin,

2003). There are efforts to simplify software evolution at the high level software

system that involves business requirements and specifications. For example, Wan

Kadir (2005) develops a meta model, process and tool called BROOD (Business

Rule-Driven Object-Oriented Design) that link business rules to software design.

Traceability is fundamental to software development and maintenance of large

22

system. It shows the ability to trace from high level abstracts to low level abstracts

e.g. from a requirement to its implementation code or vice versa. Tracing such kind

of traceability is called requirements traceability (Ramesh, 2001). Pursuant to this,

Burd and Munro (1999) assume that requirements traceability implies that all models

of the system are consistently updated, otherwise it is impossible to implement a

software traceability in the system. Many software engineering standards governing

the development of systems (e.g. IEEE/EIA 12207) emphasize on the need of

requirements traceability to be included into software development process and treat

it as a quality factor towards achieving process improvement. Process improvement

via CMM (Capability Maturity Model) is a community-developed guide for evolving

towards a culture of software engineering excellence for organizational improvement

(Saemu and Prompoon, 2004).

To better manage a change impact analysis at a broader perspective, it needs

to associate with traceability approach that requires a rich set of coarse and fine

grained granularity relationships within and across different level of software

models. Sneed’s work (Sneed, 2001) relates traceability approach to a repository by

constructing maintenance tasks that link the code to testing and concept models. His

concept model seems to be too generalized that includes the requirements, business

rules, reports, use cases, service functions and data objects but no formalism was

described. A tool was used to select the impacted entities and pick up their sizes and

complexities for effort estimation. Bianchi et al. (2000) introduce a traceability

model to support impact analysis in object-oriented environment. However, their

work is more involved with manual process which is time consuming and error

prone. Lindvall and Sandahl (1998) present a traceability approach based on domain

knowledge to collect and analyze software change metrics related to impact analysis

for resource estimates. They relate some change requests to the impacted code in

terms of classes but no requirements and test cases are involved.

Investigation on requirements traceability reveals that although the above

works apply requirements traceability for impact analysis, their emphasis and

approaches do not allow a direct reciprocal link between artifacts.

A new

requirements traceability approach can be proposed to support direct links between

23

requirements at the high level and other artifacts at the low level or vice versa in

order to observe the potential effects of change.

2.3.4

Change Management Process

A maintenance task is typically initiated by some change requests when there

is a need to do software change. Change requests are generally expressed in natural

language by users or clients. The lexicon used to describe a concept itself may vary

as users, designer, programmers and maintainers use different words to describe

essentially the same or similar concept. Due to this reason, software developers may

help clients prepare a change request so that the written problem description can be

easily understood and analyzed. Table 2.2 represents a sample form of change

request, also known as PCR (Problem Change Report).

Table 2.2: Sample Form of PCR (MIL-STD-498, 2005)

As far as the change process is concerned, it should include task such as how

a change request or proposed change is evaluated before the actual changes take

place. To put change impact analysis in perspective, it first needs to understand the

process of change. Madvhaji (1992) defines the process of change as consisting of

the following stages.

i)

Identify the need to make a change to a software component in the

environment.

24

ii)

Acquire adequate change related knowledge about the item.

iii)

Assess the impact of a change on other items in the environment.

iv)

Select or construct a method for the process of change.

v)

Make changes to all the items and make their inter-dependencies resolved

satisfactorily.

vi)

Record the details of the changes for future reference, and release the

changed item back to the environment.

For large software systems, change requests are handled by the SCM via the

Change Control Board (CCB). CCB is a special user or a unit who is responsible for

approving and authorizing changes made to the software system. In general, CCB

would require software engineers or maintainers to provide some input to change

impact analysis. The result should describe many issues such as the strategy or plan

for change process, organization impact, resources used and one of the most

challenging tasks is to identify what other software components would be affected,

their sizes and complexities.

Bohner (1991) provides a view of change activities i.e. how a change process

is managed and controlled as shown in SADT (Structured Analysis Design

Technique) in Figure 2.3. The sub process “Manage Software Maintenance” sets the

objective of the proposed change request. The sub process “Understand Software

Change and Determine Impact” determines all possible effects of the change. This

activity can be automated or semi-automated over parts of the software involved to

produce the current impacts.

The current impacts should provide valuable

information to maintenance tasks in terms of the number of affected modules, sizes,

complexity, etc. With the current scope, management can determine whether to

proceed or not with the subsequent tasks.

The sub process “Design Software Change” takes a continuing process, by

generating requirements and design specifications for the change.

Here, the

25

maintainer takes the initiative to model the system to meet the requirements of

change. The sub process “Implement Software Change” is a process of doing the

actual change. This activity also accounts for ripple effect that is to provide the

propagation of changes to other code modules or other workproducts. This serves to

check the effectiveness of the original impact analysis made earlier. The sub process

“(Re) Test Affected Software” is a regression testing made over the changed

software. This is to make sure that the new changes meet the requirements of the

proposed changed.

Objectives

(Corrective

Adaptive

Perfective)

Schedule, Constraints, Resources

Manage

Software

Maintenance

Change Request

Current System

Understand

Software

Reqts &

Change & Change

Design

Determine Request

impacts

Input

Reqts &

Design

Software Design

Spec

Change

Current Impacts

Program

Level

impacts

Re-test

Impacts

Implement Updated (Re) Test

Software Software Affected

Change

Software

New

System

Ripple-Effects

Impact/Scope

Traceability

Stability

Complexity

Modularity

Doc & Descr

Adaptability

Testability &

Completeness

Figure 2.3: SADT diagram of software maintenance activities (Bohner, 1991)

Small and Downey (2001) describes change activities in slightly different

way. They categorize the change process into six subsequent phases (Figure 2.4) i.e.

management, analysis and prediction, design, implementation planning, resource

preparation and change implementation. A0 represents the scope to conduct changes

of which all the phases are included. All the phases can be described as follow.

26

Figure 2.4: SADT diagram of change process (Small and Downey, 2001)

Phase A1, Manage Changes, takes responsibility to plan and direct all the

change activities. It monitors the environment, change situation and products. It

also provides change information and recommendations internally and externally, to

the enterprise and its environment.

Phase A2, Analyze Situation and Predict,

analyzes the possible effects and scope as required for managing change, and in

support of further re-designing the enterprise.

The change predictions and

evaluations consider the emerging new vision, so iteration with A3 is expected.

Phase A3, Re-Design Enterprise, re-designs model and specification for

change process.

This strategy requires inspiration, involvement of experts and

managers from the management and supporting resources; e.g., information

technology and human resources. Phase A4, Plan to Implement Changes, plans both

the preparation of schedules, identification of resources for change, the changeimplementation sequencing and roles.

27

Phase A5, Prepare Resources to Implement, sets up in advance all resources

needed for implementing the changes, including personnel hiring and training.

Phase A6, Implement Changes, performs the changes as planned.

All the above scenarios focus on the management aspect of change process

within the software maintenance environment. Madvhaji emphasizes on some brief

tasks to assess impact and its change implementation. Bohner elaborates the steps on

change management right from the initial objectives of change knowledge through its

implementation and testing. Small and Downey (2001) stage a careful consideration

on the change procedures by adding in more plans to direct changes including the

preparation of schedules and resources.

It seems that the above scenarios

complement each other in the change management, and change prediction is seen as

a prerequisite effort to change implementation. Change prediction requires a special

knowledge of impact analysis and is regarded as one of the research challenges in

software engineering (Finkelstein and Kramer, 2000). The main issue now is how to

perform change impact analysis and its subsequent traceability. These issues are

discussed extensively in the next section.

2.4

Review and Further Remarks on Software Evolution and Traceability

In summary, the views on software evolution as discussed in the beginning of

this section emphasize two important issues: the environment that generates changes

and the process that describes the way to perform changes.

The environment

represents the infrastructure that governs the change management such as the SCM,

documentation and requirements traceability. Based on the objective evidence in

software maintenance, software traceability is considered the most important sources

to software evolution as it governs the software change process that may later be

linked to support other parts of software lifecycle.

28

The process refers to the issues related to software change process such as

software technology, methods, tools and techniques.

The solution to software

evolution problems is likely to succeed by addressing both the software traceability

and change process. We can conclude SCM refers to the management of project

coordination and control changes including the version control for software

productivity. Documentation is concerned with all the software documents of

software development activities as specified in the software lifecycle. Software

traceability is the key element to all these dimensions as it can support software

development and evolution. Software change process is seen as a pivotal point to

software evolution that its success is largely dependent on the software traceability

surrounding it.

A more careful consideration of the reviewed issues and problems in software

evolution leads to some remarks below.

•

The establishment of SCM as a discipline of software engineering demands

for better management and control over CIs (Estublier et al., 2004).

However, the majority have over emphasized on versions and release controls

of source files, and have neglected other equally important requirements, e.g.

analysis and evaluation for change requests. These requirements demand for

a rich set of fine-grained granularity relationships among the CIs of which

many commercial SCM tools tend to ignore.

•

The current CASE tools do support traceability relationships among the

software components that enable them to provide functionalities such as work

on visualization, navigation, cross-references, browsers and consistencychecking between components in the system. However, their objectives are

geared towards managing, organizing and controlling the overall system

components rather than establishing the links between coarse-grained and

fine-grained granularities, e.g. code to requirements.

•

Managing change impact analysis at the broader perspective is more crucial

and challenging as it involves software dependencies from within and across

29

different software lifecycle. Lack of software techniques, methods and tools

makes software evolution significantly hard to manage.

•

The importance of software documentation is well understood by software

developers. However, its use in software evolution is still skeptical as many

perceive that documentation is less updated, obsolete and time consuming.

However, many agree that its use is more significant if more information can

be made traceable within software components of system documentation

(Nam et al., 2004) e.g. software traceability for change impact analysis.

The above discussion reveals that software traceability is central to software

evolution. Software change process seems to be coherent to all other software

entities that would require a special study on its own software traceability. We

believe that the results of our proposed software traceability for change impact

analysis will benefit other software entities in their effort to reduce the maintenance

tasks.

2.5

Impact Analysis Versus Traceability

Impact analysis and software traceability are two interrelated issues. The

following section discusses their definition, techniques and uses to support change

process.

2.5.1

Definition of Impact Analysis and Traceability

Turver and Munro (1994) define impact analysis as “the assessment of a

change, to the source code of a module on the other modules of the system. It

determines the scope of a change and provides a measure of its complexity”. Bohner

and Arnold (1996) define impact analysis as “identifying potential consequences of a

change, or estimating what needs to be modified to accomplish a change”. Both

30

definitions emphasize the estimation of the potential impacts which is crucial in

maintenance tasks because what is actually changed will not be fully known until

after the software change is complete.

Impact analysis is used in some applications such as cost estimation, schedule

plan, risk management, visualization and change impact. It can also be applied to a

system or part of a system for a specific purpose e.g. analysis on code for regression

testing. Software impact analysis, or impact analysis for short, offers considerable

leverage in understanding and implementing change in the system because it

provides a detailed examination of the consequences of changes in software. Impact

analysis provides visibility into the potential effects of the proposed changes before

the actual changes are implemented. The ability to identify the change impact or