1 INPUT/OUTPUT DEVICES AND INTERACTION TECHNIQUES

Ken Hinckley, Microsoft Research

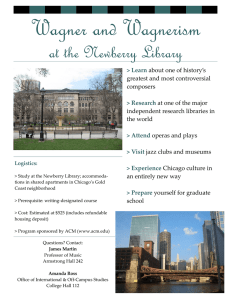
