Chapter 4: Elements of Statistics

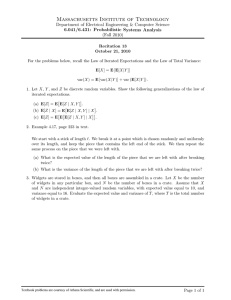

4-1 Introduction: The Sampling Problem; Unbiased Estimators
4-2 & 4-3 Sampling Theory: The Sample Mean and Variance; Sampling Theorem
4-4 Sampling Distributions and Confidence Intervals; Student's t-Distribution
4-5 Hypothesis Testing
4-6 Curve Fitting and Linear Regression
4-7 Correlation Between Two Sets of Data

Concepts

- How the sample mean and sample variance relate to the mean and variance of the pdf
- Biased estimates of means and variances
- How close the sample values are to the underlying pdf values
- Practical curve fitting, using an NTC resistor to measure temperature

Notes and figures are based on or taken from materials in the course textbook: Probabilistic Methods of Signal and System Analysis (3rd ed.) by George R. Cooper and Clare D. McGillem; Oxford Press, 1999. ISBN: 0-19-512354-9. B.J. Bazuin, Spring 2015, ECE 3800.

4-1 Introduction

Statistics

Definition: the science of assembling, classifying, tabulating, and analyzing data or facts.

- Descriptive statistics: collecting, grouping, and presenting data in a way that can be easily understood or assimilated.
- Inductive statistics (statistical inference): using data to draw conclusions about, or estimate parameters of, the environment from which the data came.

Theoretical areas:

- Sampling theory: selecting samples from a collection of data that is too large to be examined completely.
- Estimation theory: making estimates or predictions based on the data that are available.
- Hypothesis testing: deciding which of two or more hypotheses about the data is true.
- Curve fitting and regression: finding mathematical expressions that best represent the data.
- Analysis of variance: assessing the significance of variations in the data and the relation of these variances to the physical situations from which the data arose.
(The modern term is ANOVA.)

Sampling Theory – The Sample Mean

How many samples are required to form a representative sample set that provides confidence in the results? Typical applications are defect testing, opinion polls, and infection rates.

Definitions

- Population: the collection of data being studied. N is the size of the population.
- Sample: a random sample is the part of the population that is selected. All members of the population must be equally likely to be selected! n is the size of the sample.
- Sample mean: the average of the numerical values that make up the sample.

For a population of size $N$ and a sample set $S = \{x_1, x_2, x_3, \ldots, x_n\}$, the sample mean is

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$$

To generalize, describe the statistical properties of arbitrary random samples rather than those of any particular sample:

$$\hat{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$$

where the $X_i$ are random variables with a pdf. Notice that for a pdf the true mean, $\bar{X}$, can be computed, while for a sample data set it is the sample mean, $\hat{X}$, that is computed.

The sample mean is a combination of random variables and can therefore also be considered a random variable. As a result, the hoped-for result can be derived as

$$E[\hat{X}] = E\left[\frac{1}{n}\sum_{i=1}^{n} X_i\right] = \frac{1}{n}\sum_{i=1}^{n} E[X_i] = \frac{1}{n}\sum_{i=1}^{n} \bar{X} = \bar{X}$$

When this holds, the estimate is said to be an unbiased estimate.

Although the sample mean is unbiased, it may still not provide a good estimate. What is the "variance" of the computed sample mean?
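
The unbiasedness result above can be checked exactly on a small case. The following is a minimal Python sketch, not part of the course materials: the four-element population and the sample size are hypothetical values chosen only for illustration. It enumerates every equally likely sample drawn with replacement and averages the resulting sample means.

```python
from fractions import Fraction
from itertools import product

def sample_mean(xs):
    """Sample mean (1/n) * sum(x_i), kept exact with Fraction."""
    return Fraction(sum(xs), len(xs))

# Hypothetical population, chosen only for illustration.
population = [2, 3, 5, 11]
n = 2  # sample size

true_mean = sample_mean(population)

# Every ordered sample of size n drawn with replacement is equally likely.
all_means = [sample_mean(s) for s in product(population, repeat=n)]

# E[X_hat]: the average of the sample mean over all equally likely samples.
expected_sample_mean = sum(all_means) / len(all_means)

print("true mean:", true_mean)
print("E[sample mean]:", expected_sample_mean)
print("sample means range from", min(all_means), "to", max(all_means))
```

The expected value of the sample mean matches the population mean even though individual sample means are spread widely around it, which is why the variance of the sample mean matters.
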
4-2 Variance of the Sample Mean (the variance of the mean itself, not of the values of X)

You would expect the sample mean to have some variance about the "probabilistic" (actual) mean, so it is desirable to know something about its fluctuations around the true mean. The variance of the sample mean is therefore computed.

For $N \gg n$ or $N \to \infty$ (or for a known pdf), using the collected samples:

$$\mathrm{Var}(\hat{X}) = E\left[\left(\frac{1}{n}\sum_{i=1}^{n} X_i - E[\hat{X}]\right)^2\right]$$

$$\mathrm{Var}(\hat{X}) = E\left[\frac{1}{n^2}\sum_{i=1}^{n}\sum_{j=1}^{n} X_i X_j\right] - \bar{X}^2 = \frac{1}{n^2}\sum_{i=1}^{n}\sum_{j=1}^{n} E[X_i X_j] - \bar{X}^2$$

For independent $X_i$ (the measurements are independent of each other):

$$E[X_i X_j] = \begin{cases} E[X^2] = \overline{X^2}, & i = j \\ E[X_i]\,E[X_j] = \bar{X}^2, & i \ne j \end{cases}$$

As a result, the double sum can be split into two summations, one where $i = j$ and one where $i \ne j$:

$$\mathrm{Var}(\hat{X}) = \frac{1}{n^2}\left[\sum_{i=1}^{n} E[X_i^2] + \sum_{i=1}^{n}\sum_{j=1,\, j \ne i}^{n} E[X_i]\,E[X_j]\right] - \bar{X}^2$$

$$\mathrm{Var}(\hat{X}) = \frac{1}{n^2}\left[n\,\overline{X^2} + (n^2 - n)\,\bar{X}^2\right] - \bar{X}^2$$

$$\mathrm{Var}(\hat{X}) = \frac{1}{n}\,\overline{X^2} + \frac{n^2 - n}{n^2}\,\bar{X}^2 - \bar{X}^2 = \frac{1}{n}\left(\overline{X^2} - \bar{X}^2\right) = \frac{\sigma^2}{n}$$

where $\sigma^2$ is the true variance of the random variable X. Therefore, as n approaches infinity, the variance of the sample mean estimate goes to zero. A larger sample size leads to a better estimate of the population mean.

Note: this variance is developed for "sampling with replacement". Destructive testing, or sampling without replacement in a finite population, results in another expression:

$$\mathrm{Var}(\hat{X}) = \frac{\sigma^2}{n}\cdot\frac{N - n}{N - 1}$$

Note that when all the samples are tested (N = n) the variance necessarily goes to 0. The difference between the population mean and the sample mean must be zero because the entire population has been measured!

Example: How many samples of an infinitely long time waveform would be required to ensure that the mean is within 1% of the true (probabilistic) mean value?

Interpret "within 1%" as requiring the standard deviation of the sample mean to be 1% of the true mean:

$$\mathrm{Var}(\hat{X}) = (0.01 \cdot \bar{X})^2$$

The set is infinite, so use the "with replacement" equation:

$$\mathrm{Var}(\hat{X}) = \frac{\sigma^2}{n}$$

Assume that the true mean is 10 and that the true variance is 9, so that $\bar{X} = 10$ and $\sigma = 3$. Then

$$\mathrm{Var}(\hat{X}) = \frac{9}{n} = (0.01 \cdot 10)^2 = 0.1^2 = 0.01$$

$$n = 900$$

A very large sample set is needed to estimate the mean within the desired bound!

Central Limit Theorem Estimate

Consider the characterization after using a very large number of samples. Use the central limit theorem (assume a Gaussian distribution) to estimate the probability that the sample mean is within a prescribed bound of the true mean (1%, from the previous example):

$$\Pr(9.9 < \hat{X} < 10.1) = F(10.1) - F(9.9)$$

Assume that the density function of the estimate has become Gaussian, centered at 10 with a standard deviation equal to 1% of the mean (that is, $\bar{X} = 10$ and $\sigma_{\hat{X}} = 0.1$). Gaussian/normal tables then give the probability:

$$\Pr(9.9 < \hat{X} < 10.1) = \Phi\left(\frac{10.1 - 10}{0.1}\right) - \Phi\left(\frac{9.9 - 10}{0.1}\right) = \Phi(1) - \Phi(-1)$$

$$\Pr(9.9 < \hat{X} < 10.1) = \Phi(1) - \left(1 - \Phi(1)\right) = 2\,\Phi(1) - 1 = 2 \cdot 0.8413 - 1 = 0.6826$$

This implies that, after taking this many measurements to form an estimate, there is a 68.3% chance that the estimate is within 1% of the mean. Equivalently, there is a 1 − 0.6826 = 31.74% probability that the estimate of the population mean is more than 1% away from the true population mean.

In summary, as the number of samples measured increases, the density function of the estimated mean about the true (probabilistic) mean takes on a Gaussian characteristic.
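
The sample-size calculation and the Gaussian probability from the example above can be reproduced numerically. This is a minimal Python sketch under the example's own assumptions (true mean 10, true variance 9, 1% tolerance, Gaussian estimate); the variable names are illustrative, and Python's standard library stands in for the normal table.

```python
from statistics import NormalDist

true_mean = 10.0   # true mean assumed in the example
true_var = 9.0     # true variance assumed in the example
tolerance = 0.01   # 1% of the true mean

# Target standard deviation of the sample mean, then n from Var = sigma^2 / n.
target_std = tolerance * true_mean
n_required = true_var / target_std**2

# Gaussian model of the sample mean (Central Limit Theorem assumption).
estimate = NormalDist(mu=true_mean, sigma=target_std)
p_within = estimate.cdf(true_mean + target_std) - estimate.cdf(true_mean - target_std)

print(f"n required: {n_required:.0f}")
print(f"Pr(9.9 < sample mean < 10.1) = {p_within:.4f}")
```

The computed probability is about 0.6827; the 0.6826 quoted from the table differs only because the tabulated value of 0.8413 is rounded.
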
Given the variance of the sample mean (which depends on the number of samples), the probability that the measured mean lies within a stated distance of the probabilistic mean is known from Gaussian statistics.

We will be dealing with Gaussian (normal) distributions because, by the Central Limit Theorem, sums of a large number of random variables have density functions that approach a Gaussian.

Example #2: A smaller sample size

Population: 100 transistors. Find the mean value of the current gain, $\beta$. The true population mean is $\bar{\beta} = 120$ and the true population variance is $\sigma_\beta^2 = 25$. How large a sample is required to obtain a sample mean that has a standard deviation of 1% of the true mean?

Therefore, we want

$$\mathrm{Var}(\hat{X}) = (0.01 \cdot 120)^2 = 1.2^2 = 1.44$$

For a small population, the variance of the sample mean is computed as

$$\mathrm{Var}(\hat{X}) = \frac{\sigma^2}{n}\cdot\frac{N - n}{N - 1}$$

Determining the number of samples needed to meet the tolerance:

$$1.44 = \frac{25}{n}\cdot\frac{100 - n}{100 - 1}$$

$$\frac{1.44 \cdot 99}{25}\, n = 100 - n$$

$$n = \frac{100}{\dfrac{1.44 \cdot 99}{25} + 1} = \frac{100}{6.7024} = 14.92 \approx 15$$

A rule of thumb is offered to define "large" versus "small" sample sizes: the threshold given is 30. The ultimate goal is to achieve a near-Gaussian probability distribution.

4-3 Sampling Theory – The Sample Variance

When dealing with probability, the mean and the variance provide valuable information about the "DC" and "AC" operating conditions: the mean gives the value that is expected, and the variance gives the power (squared value) of the fluctuation about that operating point.
Therefore, we are also interested in how the sample variance compares to the true variance of the data.

The sample variance of the population (the square of what Excel's stdevp returns) is defined as

$$S^2 = \frac{1}{n}\sum_{i=1}^{n}\left(X_i - \hat{X}\right)^2$$

and, as shown in the proof in the coming pages,

$$E[S^2] = \frac{n - 1}{n}\,\sigma^2$$

where $\sigma^2$ is the true variance of the random variable.

Note: the expected value of the sample variance is not equal to the true variance; it is a biased estimate!

To create an unbiased estimator, scale by the inverse of the biasing factor (the square of what Excel's stdev returns):

$$\tilde{S}^2 = \frac{n}{n - 1}\,S^2 = \frac{1}{n - 1}\sum_{i=1}^{n}\left(X_i - \hat{X}\right)^2, \qquad E[\tilde{S}^2] = \frac{n}{n - 1}\,E[S^2] = \sigma^2$$

When the population is not large, the biased estimate becomes

$$E[S^2] = \frac{N}{N - 1}\cdot\frac{n - 1}{n}\,\sigma^2$$

and removing the bias results in

$$E[\tilde{S}^2] = \frac{N - 1}{N}\cdot\frac{n}{n - 1}\,E[S^2]$$

Additional notes: MATLAB and MS Excel

Simulation and statistical software packages allow for either biased or unbiased computations.

In MS Excel there are two distinct functions, stdev and stdevp:

- stdev uses (n-1): http://office.microsoft.com/en-us/excel-help/stdev-function-HP010335660.aspx
- stdevp uses (n): http://office.microsoft.com/en-us/excel-help/stdevp-HP005209281.aspx

In MATLAB, there is an additional flag associated with the std function:

$$\mathrm{std}(X) = \sqrt{\mathrm{var}(X)} = \sqrt{\frac{1}{n - 1}\sum_{j=1}^{n}\left(x_j - \bar{x}\right)^2}, \quad \text{flag implied as 0}$$

$$\mathrm{std}(X, 1) = \sqrt{\mathrm{var}(X, 1)} = \sqrt{\frac{1}{n}\sum_{j=1}^{n}\left(x_j - \bar{x}\right)^2}, \quad \text{flag specified as 1}$$

The MATLAB help text (>> help std) reads:

std Standard deviation. For vectors, Y = std(X) returns the standard deviation. For matrices, Y is a row vector containing the standard deviation of each column. For N-D arrays, std operates along the first non-singleton dimension of X.

std normalizes Y by (N-1), where N is the sample size. This is the sqrt of an unbiased estimator of the variance of the population from which X is drawn, as long as X consists of independent, identically distributed samples.
Y = std(X,1) normalizes by N and produces the square root of the second moment of the sample about its mean. std(X,0) is the same as std(X).

Sampling Theory – The Sample Variance – Proof

The sample variance of the population is defined as

$$S^2 = \frac{1}{n}\sum_{i=1}^{n}\left(X_i - \hat{X}\right)^2 = \frac{1}{n}\sum_{i=1}^{n}\left(X_i - \frac{1}{n}\sum_{j=1}^{n} X_j\right)^2$$

Determining the expected value:

$$E[S^2] = E\left[\frac{1}{n}\sum_{i=1}^{n}\left(X_i^2 - \frac{2}{n}\,X_i\sum_{j=1}^{n} X_j + \frac{1}{n^2}\sum_{j=1}^{n}\sum_{k=1}^{n} X_j X_k\right)\right]$$

$$E[S^2] = \frac{1}{n}\sum_{i=1}^{n} E[X_i^2] - \frac{2}{n^2}\sum_{i=1}^{n}\sum_{j=1}^{n} E[X_i X_j] + \frac{1}{n^3}\sum_{i=1}^{n}\sum_{j=1}^{n}\sum_{k=1}^{n} E[X_j X_k]$$

For independent samples, each double sum splits into the terms with equal indices and the terms with unequal indices:

$$\sum_{j=1}^{n}\sum_{k=1}^{n} E[X_j X_k] = \sum_{j=1}^{n} E[X_j^2] + \sum_{j=1}^{n}\sum_{k=1,\, k \ne j}^{n} E[X_j]\,E[X_k] = n\,E[X^2] + (n^2 - n)\,E[X]^2$$

Substituting:

$$E[S^2] = E[X^2] - \frac{2}{n^2}\left(n\,E[X^2] + (n^2 - n)\,E[X]^2\right) + \frac{1}{n^2}\left(n\,E[X^2] + (n^2 - n)\,E[X]^2\right)$$

$$E[S^2] = E[X^2]\left(1 - \frac{1}{n}\right) - \frac{n - 1}{n}\,E[X]^2$$

$$E[S^2] = \frac{n - 1}{n}\,E[X^2] - \frac{n - 1}{n}\,E[X]^2 = \frac{n - 1}{n}\left(E[X^2] - E[X]^2\right)$$

Therefore,

$$E[S^2] = \frac{n - 1}{n}\,\sigma^2$$

To create an unbiased estimator, scale by the (un-)biasing factor to compute:

$$E[\tilde{S}^2] = \frac{n}{n - 1}\,E[S^2] = \sigma^2$$

Variance of the variance

As before, the variance of the variance can be computed. (It is given here rather than derived.)
It is defined as

$$\mathrm{Var}(S^2) = \frac{\mu_4 - \sigma^4}{n}$$

where $\mu_4$ is the fourth central moment of the population, defined by

$$\mu_4 = E\left[\left(X - \bar{X}\right)^4\right]$$

Another proof for extra credit …

For the unbiased variance, the result is

$$\mathrm{Var}(\tilde{S}^2) = \frac{n^2}{(n - 1)^2}\,\mathrm{Var}(S^2) = \frac{n^2}{(n - 1)^2}\cdot\frac{\mu_4 - \sigma^4}{n} = \frac{n\left(\mu_4 - \sigma^4\right)}{(n - 1)^2}$$

Example: the random time samples problem used previously (the first example), where the true mean is 10 and the true variance is 9. Then

$$\mathrm{Var}(\hat{X}) = \frac{\sigma^2}{n} = \frac{9}{n} = 0.01 \quad\Rightarrow\quad n = 900$$

and for n = 900

$$\mathrm{Var}(\tilde{S}^2) = \frac{n\left(\mu_4 - \sigma^4\right)}{(n - 1)^2}$$

For a Gaussian random variable, the fourth central moment is $\mu_4 = 3\sigma^4$. Therefore

$$\mathrm{Var}(\tilde{S}^2) = \frac{n\left(3\sigma^4 - \sigma^4\right)}{(n - 1)^2} = \frac{2\,n\,\sigma^4}{(n - 1)^2}$$

$$\mathrm{Var}(\tilde{S}^2) = \frac{2 \cdot 900 \cdot 9^2}{(900 - 1)^2} = \frac{145800}{808201} = 0.1804$$

$$\sqrt{\mathrm{Var}(\tilde{S}^2)} = 0.4247$$

Expressed as a percentage of the true variance, the standard deviation of the variance estimate is

$$100 \cdot \frac{\sqrt{\mathrm{Var}(\tilde{S}^2)}}{\sigma^2}\,\% = 100 \cdot \frac{0.4247}{9}\,\% = 4.72\%$$

While n = 900 was selected to provide a mean estimate that was within 1%, the variance estimate is not nearly as close, at 4.72%. More samples are required to improve the variance estimate.

4-4 Sampling Distribution and Confidence Intervals

Now that we have developed sample values, what are they good for? What is the probability that our estimates are within specified bounds? By measuring samples, can you prove that what you built or did is what was specified or promised?

To really answer these questions, it is necessary to know the probability density function associated with parameter estimates such as the sample mean and sample variance.
A great deal of effort has been expended in the study of statistics to determine these probability density functions, and many such functions are described in the literature. (Interpretation: the material is very difficult and, except for those who love math and statistics, it is not necessary to present it. The following material provides simplifications that are commonly used by engineers.)

When in doubt, assume Gaussian. Then the normalized random variable (the sample mean with the true mean removed, divided by the standard deviation of the sample mean) becomes

$$Z = \frac{\hat{X} - \bar{X}}{\sigma / \sqrt{n}}$$

If the true population standard deviation is not known, it can be replaced by the sample standard deviation:

$$T = \frac{\hat{X} - \bar{X}}{\tilde{S} / \sqrt{n}} = \frac{\hat{X} - \bar{X}}{S / \sqrt{n - 1}}$$

This random variable has a Student's t distribution with n-1 degrees of freedom.

The Student's t probability density function (letting v = n-1, the degrees of freedom) is defined as

$$f_T(t) = \frac{\Gamma\left(\frac{v + 1}{2}\right)}{\sqrt{\pi v}\;\Gamma\left(\frac{v}{2}\right)}\left(1 + \frac{t^2}{v}\right)^{-\frac{v + 1}{2}}$$

where $\Gamma(\cdot)$ is the gamma function. The gamma function can be computed from

$$\Gamma(k + 1) = k\,\Gamma(k) \ \text{ for any } k, \qquad \Gamma(k + 1) = k! \ \text{ for } k \text{ an integer}, \qquad \Gamma\left(\tfrac{1}{2}\right) = \sqrt{\pi}$$

(1) Note that when evaluating the Student's t density function, all arguments of the gamma function are integers or an integer plus ½.

(2) Note that the distribution depends on ν, but not μ or σ; the lack of dependence on μ and σ is what makes the t-distribution important in both theory and practice.

From http://en.wikipedia.org/wiki/Student's_t-distribution:

Student's distribution arises when (as in nearly all practical statistical work) the population standard deviation is unknown and has to be estimated from the data.
Textbook problems treating the standard deviation as if it were known are of two kinds: (1) those in which the sample size is so large that one may treat a data-based estimate of the variance as if it were certain, and (2) those that illustrate mathematical reasoning, in which the problem of estimating the standard deviation is temporarily ignored because that is not the point that the author or instructor is then explaining.

Comparing the density functions: Student's t and Gaussian

[Figure: "Student's t and Gaussian Densities". Curves for the Gaussian and for the t density with v = 1, v = 2, and v = 8, plotted for arguments from -4 to 4; vertical axis: density function. See Fig_4_2.m and function students_t.m.]

Student's t:

$$f_T(t) = \frac{\Gamma\left(\frac{v + 1}{2}\right)}{\sqrt{\pi v}\;\Gamma\left(\frac{v}{2}\right)}\left(1 + \frac{t^2}{v}\right)^{-\frac{v + 1}{2}}$$

Gaussian:

$$f_X(x) = \frac{1}{\sqrt{2\pi}\,\sigma_X}\exp\left(-\frac{\left(x - \bar{X}\right)^2}{2\,\sigma_X^2}\right)$$

Confidence Intervals and the Gaussian and Student's t distributions

The sample mean is a point estimate (it assigns a single value). An alternative to a point estimate is an interval estimate, where the parameter being estimated is declared to lie within a certain interval with a certain probability. The interval estimate is the confidence interval.

We can then define a q% confidence interval as the interval in which the estimate will lie with a probability of q/100.
The limits of the interval are defined as the confidence limits, and q is defined to be the confidence level.

Thus we are interested in

$$\bar{X} - \frac{k\,\sigma}{\sqrt{n}} \le \hat{X} \le \bar{X} + \frac{k\,\sigma}{\sqrt{n}}$$

where k is a constant (notice that it multiplies the standard deviation) defined by

$$\frac{q}{100} = \int_{\bar{X} - k\sigma/\sqrt{n}}^{\bar{X} + k\sigma/\sqrt{n}} f_{\hat{X}}(x)\,dx = F_{\hat{X}}\left(\bar{X} + \frac{k\,\sigma}{\sqrt{n}}\right) - F_{\hat{X}}\left(\bar{X} - \frac{k\,\sigma}{\sqrt{n}}\right)$$

When the sample size is sufficient for the Central Limit Theorem to apply, a Gaussian (normal) distribution can be used:

$$Z = \frac{\hat{X} - \bar{X}}{\sigma / \sqrt{n}}$$

$$\frac{q}{100} = \Phi(z_c) - \Phi(-z_c) \quad \text{for } -z_c \le z \le z_c$$

$$\frac{q}{100} = \Phi(z_c) \quad \text{for } -\infty < z \le z_c$$

Gaussian density function (pdf) and distribution function (PDF):

$$f_X(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{\left(x - \bar{X}\right)^2}{2\,\sigma^2}\right), \quad \text{for } -\infty < x < \infty$$

$$F_X(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{\left(v - \bar{X}\right)^2}{2\,\sigma^2}\right) dv$$

| Confidence interval (in %) | Two-tail bounds | $k$ or $z_c$ ($-z_c \le z \le z_c$) |
|---|---|---|
| 99.99% | 0.005% to 99.995% | 3.89 |
| 99.9% | 0.05% to 99.95% | 3.29 |
| 99% | 0.5% to 99.5% | 2.58 |
| 95% | 2.5% to 97.5% | 1.96 |
| 90% | 5% to 95% | 1.64 |
| 80% | 10% to 90% | 1.28 |
| 50% | 25% to 75% | 0.675 |

To find the values, (1) determine the percentage value required for the upper bound (e.g. 75% for a 50% two-sided interval), and (2) find that value in the normal table (unit variance). The value of k or $z_c$ is the row value plus the column value at which the table shows that probability.

[Figure: "Gaussian q values". Unit-variance Gaussian density from -5 to 5 with the bounds marked for q = 50.00% (k = 0.674), q = 90.00% (k = 1.645), q = 95.00% (k = 1.960), and q = 99.00% (k = 2.576). See Fig_4_6.m.]

If the sample size is not sufficient, the Student's t-distribution must be used.
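
For the Gaussian case, the two-step table lookup described above can also be done numerically. The following is a minimal Python sketch that uses the standard library's inverse normal CDF in place of the printed table; the function name `two_sided_zc` is an illustrative choice, not something defined in the notes.

```python
from statistics import NormalDist

def two_sided_zc(q_percent):
    """z_c such that Pr(-z_c <= Z <= z_c) = q/100 for a unit-variance Gaussian."""
    # Step 1: upper bound of the interval, e.g. 75% for a 50% two-sided interval.
    upper_bound = 0.5 + q_percent / 200.0
    # Step 2: invert the unit-variance normal distribution function.
    return NormalDist().inv_cdf(upper_bound)

for q in (99.99, 99.9, 99, 95, 90, 80, 50):
    print(f"q = {q:>6}%  ->  z_c = {two_sided_zc(q):.3f}")
```

The printed values should agree with the table entries to within the rounding used there.
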
Reminder: as the Student's t-distribution degrees of freedom increase ($v = n - 1$ becomes large), the t-distribution approaches the Gaussian distribution!

[Figure: two panels comparing the t density with the normal density from -4 to 4, titled "T-pdf and Normal pdf, v=30" and "T-pdf and Normal pdf, v=1".]

Appendix F provides tables of t for given v and F based on:

$$F_T(t) = \int_{-\infty}^{t} \frac{\Gamma\left(\frac{v + 1}{2}\right)}{\sqrt{\pi v}\;\Gamma\left(\frac{v}{2}\right)}\left(1 + \frac{x^2}{v}\right)^{-\frac{v + 1}{2}} dx$$

Using the estimated sample mean and the estimated variance of the sample mean:

$$t = \frac{\hat{X} - \bar{X}}{\tilde{S} / \sqrt{n}} = \frac{\hat{X} - \bar{X}}{S / \sqrt{n - 1}}$$

$$\frac{q}{100} = \int_{-t_c}^{t_c} f_T(t)\,dt = F_T(t_c) - F_T(-t_c) \quad \text{for } -t_c \le t \le t_c \text{ (two-sided)}$$

$$\frac{q}{100} = \int_{-\infty}^{t_c} f_T(t)\,dt = F_T(t_c) \quad \text{for } -\infty < t \le t_c \text{ ("right-tail")}$$
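
The Student's t density and its two-sided probability can be evaluated directly from the formulas above. The following is a minimal Python sketch, not the author's students_t.m (which is not shown in these notes); the function names and the choice of trapezoidal integration are assumptions made for illustration.

```python
import math
from statistics import NormalDist

def student_t_pdf(t, v):
    """Student's t density with v degrees of freedom."""
    coeff = math.gamma((v + 1) / 2) / (math.sqrt(math.pi * v) * math.gamma(v / 2))
    return coeff * (1 + t * t / v) ** (-(v + 1) / 2)

def two_sided_probability(tc, v, steps=20000):
    """Pr(-tc <= T <= tc), by trapezoidal integration of the density."""
    h = 2 * tc / steps
    total = 0.5 * (student_t_pdf(-tc, v) + student_t_pdf(tc, v))
    total += sum(student_t_pdf(-tc + i * h, v) for i in range(1, steps))
    return total * h

gauss = NormalDist()
for v in (1, 2, 8, 30):
    print(f"v = {v:>2}: f_T(0) = {student_t_pdf(0.0, v):.4f}, "
          f"Pr(|T| <= 1.96) = {two_sided_probability(1.96, v):.4f}")
print(f"Gaussian: f(0) = {gauss.pdf(0.0):.4f}, "
      f"Pr(|Z| <= 1.96) = {gauss.cdf(1.96) - gauss.cdf(-1.96):.4f}")
```

For small v the same bound of 1.96 captures noticeably less probability than in the Gaussian case, so the confidence limits must be widened; by v = 30 the two distributions are close, in line with the rule-of-thumb threshold of 30 samples.
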