IMPLEMENTATION OF SIMULATED ANNEALING IN UNIT SELECTION FOR MALAY TEXT-TO-SPEECH SYSTEM

LIM YEE CHEA

A dissertation submitted in fulfillment of the

requirements for the award of the degree of

Master of Science (Mathematics)

Faculty of Science

Universiti Teknologi Malaysia

NOVEMBER 2009

Dedicated to Jesus Christ,

my personal Lord and Savior,

my pastor, Church members,

my beloved mum, dad, brother and sister.

ACKNOWLEDGEMENTS

“Let us then with confidence draw near to the throne of grace, that we may receive mercy and find grace to help in time of need.” First and foremost, I want to thank Jesus for His grace and mercy throughout this project. It is by His hand and wisdom that I was guided to finish my work.

I would like to extend my appreciation to my honorable supervisor, Dr. Zaitul Marlizawati Zainuddin, and my co-supervisor, Dr. Tan Tian Swee, for their academic guidance, suggestions, support and encouragement during the course of my study. Their patience, tolerance, diligence and dedication have given me great encouragement and a good example to follow.

Finally, I would love to convey my gratitude to my beloved family members and church members for their love and care throughout the study. They have given me so much assistance, comfort and prayer support, both financially and spiritually, which words cannot express and which will forever be remembered in my heart. I especially want to thank Mohd Redzuan bin Jamaludin for his willingness to help and his guidance with Matlab.

ABSTRACT

The unit selection method has become the predominant approach in speech synthesis. The quality of unit-selection-based concatenative speech synthesis is primarily governed by how well two successive units can be joined together. Therefore, the main purpose of unit selection is to minimize audible discontinuities. The process of unit selection here is based on phonetic context and Simulated Annealing, which selects units from a large database by minimizing a criterion that is often called the cost. This dissertation presents a variable-length unit selection Malay text-to-speech system that is capable of providing more natural and accurate unit selection for synthesized speech. Unit selection methods have been implemented so that a selected speech unit is not limited to a phoneme, diphone or triphone but can also be a string of phonemes matched directly to the database. Mel Frequency Cepstral Coefficients (MFCCs) have been introduced as spectral parameters in the unit-selection-based speech synthesis. A distance measure is needed to quantify the difference between two vectors of this speech feature; the spectral distance used is the Euclidean distance.

ABSTRAK

The unit selection method has become the main approach in speech synthesis. The quality of unit selection in word concatenation depends on how well two units join together. Therefore, the main aim of unit selection is to reduce the distance composition. The unit selection process depends on phonetic context and Simulated Annealing, which selects units from the database by minimizing a criterion that is usually called the cost. This dissertation implements a variable-length unit selection that is able to give more accurate and natural unit selection for synthesized speech. Unit selection methods have been implemented to provide speech unit selection that is not limited to a phoneme, two phonemes or three phonemes but also covers a string of phonemes that can be matched directly to the database. Mel Frequency Cepstral Coefficients (MFCC) have been introduced as spectral parameters in unit selection speech synthesis. A distance calculation is needed to compute the distance between these two vectors. The spectral distance used is the Euclidean distance.

TABLE OF CONTENTS

CHAPTER  TITLE

TITLE PAGE
DECLARATION PAGE
DEDICATION
ACKNOWLEDGEMENT
ABSTRACT
ABSTRAK
TABLE OF CONTENTS
LIST OF TABLES
LIST OF FIGURES
LIST OF SYMBOLS
LIST OF APPENDICES

1  INTRODUCTION
   1.0  Introduction
   1.1  Background of the Problem
   1.2  Problem Statement
   1.3  Objective of the Study
   1.4  Scopes of the Study
   1.5  Significance of the Study
   1.6  Research Methodology
   1.7  Dissertation Layout

2  LITERATURE REVIEW
   2.1  Speech synthesis
        2.1.1  Concatenative Speech Synthesis
   2.2  Unit Selection
        2.2.1  Non-Uniformed or Variable Length Unit Selection
        2.2.2  Corpus-based Unit Selection
   2.3  Cost function for unit selection
        2.3.1  The Acoustic Parameters
        2.3.2  Linguistic Features
        2.3.3  Local cost
               2.3.3.1  Sub-cost on prosody
               2.3.3.2  Sub-cost on discontinuity
               2.3.3.3  Sub-cost on phonetic environment
               2.3.3.4  Sub-cost on spectral discontinuity
               2.3.3.5  Sub-cost on phonetic appropriateness
               2.3.3.6  Other sub-costs
               2.3.3.7  Integrated cost
   2.4  Cost weighting
   2.5  Target cost
   2.6  Concatenation cost
   2.7  Spectral Distances
   2.8  Feature Extraction
        2.8.1  MFCC
   2.9  Distance Measures
        2.9.1  Simple Distance Measures
               2.9.1.1  Absolute Distance
               2.9.1.2  Euclidean Distance
        2.9.2  Statistically Motivated Distance Measures
               2.9.2.1  Mahalanobis Distance
               2.9.2.2  Kullback–Leibler (KL) distance
   2.10 Heuristic Method
        2.10.1  Simulated Annealing
        2.10.2  Approaches to improve SA algorithm
        2.10.3  Polynomial approximation
        2.10.4  Annealing Schedule
               2.10.4.1  Theoretically optimum cooling schedule
               2.10.4.2  Geometric cooling schedule
               2.10.4.3  Cooling schedule of Van Laarhoven et al.
               2.10.4.4  Cooling schedule of Otten et al.
               2.10.4.5  Cooling schedule of Huang et al.
               2.10.4.6  Adaptive cooling schedules
               2.10.4.7  A new adaptive cooling schedule
   2.11 Parallel SA
   2.12 Segmented Simulated Annealing

3  PROPOSED SYSTEM AND IMPLEMENTATION
   3.0  Introduction
   3.1  System Design Flow
   3.2  Malay Phonetics and Phone Sets
   3.3  Malay Phoneme
        3.3.1  Malay Vowels
        3.3.2  Malay Consonant
   3.4  Phoneme Units Database
   3.5  Feature Extraction
   3.6  Phonetic context
   3.7  Unit Selection
   3.8  Concatenation

4  SIMULATED ANNEALING
   4.0  Introduction
   4.1  Procedure of Simulated Annealing
   4.2  Initial Solution
   4.3  The cooling schedule
        4.3.1  Markov chain
   4.4  Neighbourhood Generation Mechanism
   4.5  Metropolis's criterion
   4.6  Stopping criteria
   4.7  Unit Selection
        4.7.1  Phonetic context
        4.7.2  Concatenation Cost
               4.7.2.1  Concatenation cost for Move 1
               4.7.2.2  Concatenation cost for Move 2
               4.7.2.3  Concatenation cost for Move 3
               4.7.2.4  Concatenation cost for Move 4
   4.8  Concatenation

5  TESTING, ANALYSIS AND RESULT
   5.1  Experiment
   5.2  Test Materials
   5.3  Test Conditions
   5.4  Test Procedure
   5.5  Profiles of Listeners
        5.5.1  Percentages of Listeners by Gender
        5.5.2  Percentage of Listeners by Race
        5.5.3  Percentage of Listeners by State of Origin
   5.6  Result and Analysis
        5.6.1  Word Level Testing
        5.6.2  Mean Opinion Score

6  CONCLUSION AND RECOMMENDATION
   6.1  Conclusion
   6.2  Suggestion for Future Work

REFERENCES
APPENDICES A-E

LIST OF TABLES

TABLE NO.  TITLE

2.1   Sub-cost functions
3.1   Total units after extracting the phoneme units from the carrier sentences
4.1   Maximum number of iterations for Markov Chain length 1 and 2 to reach final temperature greater than 0.1.
4.2   The information of the 10 words before filter using phonetic context.
4.3   The information of the 10 words after filter using partially matched phonetic context (left phonetic context).
4.4   The information of the 10 words after filter using fully matched phonetic context (left and right phonetic context).
4.5   Information of concatenation cost (Move 1) with temperature reduction rate, α = 0.90
4.6   Information of concatenation cost (Move 2) with temperature reduction rate, α = 0.90
4.7   Information of concatenation cost (Move 3) with temperature reduction rate, α = 0.90
4.8   Information of concatenation cost (Move 4) with temperature reduction rate, α = 0.90
4.9   Information of concatenation cost with temperature reduction rate, α = 0.95
4.10  Information of concatenation cost with temperature reduction rate, α = 0.85
4.11  Information of concatenation cost with temperature reduction rate, α = 0.80
4.12  Information of concatenation cost with temperature reduction rate, α = 0.95
4.13  Information of concatenation cost with temperature reduction rate, α = 0.90
4.14  Information of concatenation cost with temperature reduction rate, α = 0.85
4.15  Information of concatenation cost with temperature reduction rate, α = 0.80
4.16  The sequences of the 10 selected words.
5.1   Profiles of Listeners
5.2   Words selected for listening test.
5.3   The score line of synthesis words with considers the concatenation cost.
5.4   The score line of the 10 synthesis words with considers the concatenation cost.

LIST OF FIGURES

FIGURE NO.  TITLE

2.1   Classes of waveform synthesis methods for speech synthesis.
2.2   Viterbi search.
2.3   Architecture of corpus-based unit selection concatenative speech synthesizer.
2.4   Schematic diagram of cost function
2.5   Example of unit search algorithm. The shortest path is marked in blue.
2.6   Example of unit search algorithm. The difference in cost between the optimal sequences of two graphs is evaluated for d3 in pre-selection.
2.7   Objective Spectral distances
2.8   Block diagram of the conventional MFCC extraction algorithm
2.9   Parallel Simulated Annealing Taxonomy
2.10  Segmented simulated annealing
3.1   Block Diagram of System Design Flow.
3.2   A set of coefficient transform from MFCC algorithm.
3.3   Speech unit database.
3.4   The GUI to extract MFCCs coefficients.
3.5   The GUI to extract MFCCs coefficients.
3.6   The 12 coefficients extracted for phoneme “_m”.
3.7   The 12 coefficients extracted for phoneme “a”.
3.8   Distance measure and speech feature.
3.9   The candidate unit for phoneme “_n” that matched right phonetic context.
3.10  The candidate unit for phoneme “a” that matched left and right phonetic context.
3.11  Unit selection
3.12  Waveform for phoneme “_n”.
3.13  Waveform for phoneme “a”.
3.14  Waveform for phoneme “s”.
3.15  Waveform for phoneme “i”.
3.16  Concatenation of the best matching units for the word “nasi”.
4.1   SA flow diagram to find best speech unit sequence.
4.2   Temperature reduction pattern for various reduction rates with Markov Chain length 1.
4.3   Temperature reduction pattern for various reduction rates with Markov Chain length 2.
4.4   Metropolis criterion
4.5   The feasible search region to form a Malay word “kampung” before filter using phonetic context.
4.6   The feasible search region to form a Malay word “kampung” after filter using partially matched phonetic context (left phonetic context).
4.7   The feasible search region to form a Malay word “kampung” after filter using fully matched phonetic context (left and right phonetic context).
4.8   SA best solutions, mean and worst solutions for ten problems from Table 4.12.
4.9   Waveform “_s1”.
4.10  Waveform “e537”
4.11  Waveform “l362”
4.12  Waveform “a2710”
4.13  Waveform “n1031”
4.14  Waveform “j7”
4.15  Waveform “u206”
4.16  Waveform “t142”
4.17  Waveform “ny1”
4.18  Waveform “a2060”
4.19  Concatenation waveform for the word “selanjutnya”.
4.20  Spectrogram for the word “nasi”.
4.21  Spectrogram for the word “berpengetahuan”.
4.22  Spectrogram for the word “demikian”.
4.23  Spectrogram for the word “demikian” that do not consider concatenation cost.
4.24  Spectrogram zoom in for the word “demikian” from Figure 4.22.
4.25  Spectrogram zoom in for the word “demikian” from Figure 4.23.
5.1   Percentage of listeners by gender.
5.2   Percentage of listeners by race.
5.3   Percentage of listeners by state of origin.
5.4   Level of intelligibility of the 10 selected words.
5.5   Results of the mean opinion score.

LIST OF SYMBOLS/ ABBREVIATIONS

AC        Average cost
kb        Boltzmann constant
S         Configuration set
C         Cost function
E         Energy
Cmax      Estimation of the maximum value of the cost function
⟨f(T)⟩    Expected cost in equilibrium
FFT       Fast Fourier Transform
F0        Fundamental Frequency
GUI       Graphical User Interface
KL        Kullback-Leibler
LSF       Line spectral frequencies
LP        Linear prediction
LPC       Linear Predictive Coefficients
LC        Local cost
MC        Maximum cost
MOS       Mean Opinion Score
MCD       Mel-cepstral distortion
MFCCs     Mel-Frequency Cepstral Coefficients
Mel(f)    Mel scale
MCA       Multiple centroid analysis
NCp       Norm cost
N         Neighbourhood structure
PLP       Perceptual linear prediction
P(E)      Probabilities of acceptance
δ         Real number
Cpro      Sub-cost on prosody
CF0       Sub-cost on F0 discontinuity
Cenv      Sub-cost on phonetic environment
Cspec     Sub-cost on spectral discontinuity
Capp      Sub-cost on phonetic appropriateness
T         Temperature
α         Temperature reduction rate
TTS       Text-to-speech
TSP       Travelling salesman problem
U         Upper bound
σ²(T)     Variance in the cost at equilibrium

LIST OF APPENDICES

APPENDIX  TITLE

A   Source Code of MFCC
B   Source Code of Simulated Annealing (Move 1)
C   Source Code of Simulated Annealing (Move 2)
D   Source Code of Simulated Annealing (Move 3)
E   Evaluation Questionnaire

CHAPTER 1

INTRODUCTION

1.0 Introduction

Corpus-based concatenative synthesis has recently become the major trend because the resulting speech sounds more natural than that produced by parameter-driven production models (Chou, 1999). Unit selection synthesizers in their current state produce highly intelligible, near-natural synthetic speech (Tsiakoulis et al., 2008). This method creates speech by re-sequencing pre-recorded speech units selected from a very large speech database (Cepko et al., 2008). Speech is produced by searching through a large speech database (corpus) and concatenating the selected units to form the output signal. This approach is superior to formant and articulatory synthesis because it tends to concatenate natural acoustic units with no modification, and thus offers better speech quality (Janicki et al., 2008). Synthetic speech is produced by concatenating speech units from a very large speech corpus containing enough prosodic and spectral variety for all synthetic units (Vepa et al., 2002; Vepa and King, 2004). Hence, it is possible to synthesize highly natural-sounding speech by selecting an appropriate sequence of units (Vepa et al., 2002). The selection of the best unit sequence from the database can be treated as a search problem: finding the sequence with the lowest overall distance. Since the quality of the resulting synthetic speech depends to a large extent on the variability and availability of representative units, it is crucial to design a corpus that covers all speech units and most of their variations in a feasible size (Min et al., 2001). The unit selection process is based on a cost function that consists of a target cost and a join cost. The join cost is a measure of the acoustic smoothness between the concatenated units (Dong and Li, 2008). This dissertation focuses on concatenation costs, which generally use a distance measure on a parameterization of the speech signal. MFCCs are chosen as spectral parameters as they are the most commonly used in state-of-the-art recognizers (Rabiner and Juang, 1993). A distance measure is needed to quantify the difference between two vectors of this speech feature; the spectral distance used is the Euclidean distance. The Mel Frequency Cepstral Coefficients were derived using standard methods commonly used in speech recognition. MFCCs represent the real cepstrum of a windowed short-time signal derived from the Fast Fourier Transform (FFT) of the speech signal (Wei et al., 2006).
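To illustrate the distance computation described above, the following Python sketch computes the Euclidean distance between two feature vectors and uses it as a join cost. It is only an illustration, not the code used in this work: the function name, the choice of comparing a single boundary frame from each unit, and the 12-coefficient values are all assumptions made for the example.

```python
import math

def euclidean_distance(x, y):
    """Euclidean distance between two equal-length feature vectors."""
    if len(x) != len(y):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

# Hypothetical 12-coefficient MFCC frames at a join (made-up values):
# the last frame of the left unit and the first frame of the right unit.
left_end = [1.0, 0.5, -0.2, 0.0, 0.3, 0.1, 0.0, -0.1, 0.2, 0.0, 0.1, 0.0]
right_start = [1.0, 0.5, -0.2, 0.0, 0.3, 0.1, 0.0, -0.1, 0.2, 0.0, 0.1, 0.0]

# Identical frames give a distance of zero, i.e. a perfectly smooth join
# under this measure; larger values indicate a larger spectral mismatch.
join_cost = euclidean_distance(left_end, right_start)
```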

1.1 Background of the Problem

The main problem with the existing Malay text-to-speech (TTS) synthesis systems is the poor quality of the generated speech. This poor quality is caused by the inability of traditional TTS systems to provide multiple choices of unit for generating accurate synthesized speech (Tan and Sheikh, 2008b). Most current Malay TTS systems use diphone concatenation, which supports only a single unit for each existing diphone, so the selection of speech units for concatenation may not be accurate enough (Tan and Sheikh, 2008b). The current trend in high-quality TTS systems is to concatenate acoustic units selected from a large-scale corpus of continuous read speech. Thus, a robust unit selection is needed to handle the huge volume of data in the database (Blouin et al., 2002). Artifacts such as phase mismatches and discontinuities in spectral shape arise because units are extracted from disjoint phonetic contexts, and these can have a deleterious effect on perception (Hunt and Black, 1996). Unit selection is nominally cast as a multivariate optimization task, where the available unit inventory is searched for the “best” sequence of units that makes up the target utterance. This optimization relies on suitable cost criteria to characterize relevant aspects of acoustic and prosodic context (Bellegarda, 2008).

1.2 Problem Statement

The task of this research is to use Simulated Annealing to find the minimum-cost path through the candidate speech units.

1.3 Objective of the Study

The dissertation aims to achieve the three objectives outlined in this section:

i) To implement Mel Frequency Cepstral Coefficients (MFCCs) in unit selection.
ii) To implement a heuristic optimization method in unit selection.
iii) To evaluate the performance of the heuristic optimization method in unit selection.

1.4 Scopes of the Study

This dissertation presents a variable-length unit selection scheme that selects text-to-speech (TTS) synthesis units from a phoneme-based corpus supporting the phoneme patterns of Malay text-to-speech. The speech features selected are MFCCs. The spectral distance used is the Euclidean distance. A heuristic method, namely Simulated Annealing, is implemented in unit selection to select the best sequence of units.

1.5 Significance of the Study

For Malay TTS systems, this is the first implementation of unit selection using a heuristic method, namely Simulated Annealing. The performance of this algorithm will be evaluated based on the values of the cost functions obtained and on a listening test. By doing so, the advantages and disadvantages of this method relative to other existing unit selection methods will become known.

1.6 Research Methodology

Variable-length unit selection is capable of providing more natural and accurate unit selection for synthesized speech, and it has been implemented in a Malay text-to-speech system in this project (Tan and Sheikh, 2008b). During synthesis, proper units are selected by using Simulated Annealing to search for the database units closest to the symbolic target sequence. The number of possible units at a given time can be in the tens of thousands if a database is built from a 100-hour corpus (Nishizawa and Kawai, 2006). Therefore, a heuristic optimization method is needed to select the appropriate units without having to go through all possible combinations of unit sequences. C++ code for Simulated Annealing was developed. To make the acoustic distortion measures correspond more consistently to human perception, Mel Frequency Cepstral Coefficients (MFCCs) have been introduced as spectral parameters in the unit-selection-based speech synthesis (John et al., 1993). A distance measure is needed to quantify the difference between two vectors of this speech feature; the spectral distance used is the Euclidean distance. A smaller Euclidean distance means a closer match at the concatenation point and thus better generated speech. The performance of the heuristic method and of the other unit selection method was evaluated based on the values of the cost functions obtained and on a listening test.
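The search described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the C++ implementation developed for this work: each target position has a list of candidate units, each candidate is a hypothetical dictionary holding the MFCC frames at its "start" and "end", the cost is the sum of Euclidean distances across the joins, a neighbour is generated by swapping one randomly chosen unit, and the temperature follows a geometric schedule. The initial temperature `t0` and the function names are illustrative choices.

```python
import math
import random

def sequence_cost(candidates, choice):
    """Sum of Euclidean join costs between consecutive chosen units."""
    units = [candidates[i][j] for i, j in enumerate(choice)]
    return sum(math.dist(a["end"], b["start"]) for a, b in zip(units, units[1:]))

def anneal(candidates, t0=10.0, alpha=0.90, t_final=0.1, chain_len=1, seed=0):
    """Return (choice, cost), where choice[i] indexes into candidates[i]."""
    rng = random.Random(seed)
    # Random initial solution: one candidate per target position.
    current = [rng.randrange(len(c)) for c in candidates]
    cur_cost = sequence_cost(candidates, current)
    best, best_cost = current[:], cur_cost
    t = t0
    while t > t_final:
        for _ in range(chain_len):  # Markov chain length at this temperature
            # Neighbour: replace the unit at one random position.
            pos = rng.randrange(len(candidates))
            neighbour = current[:]
            neighbour[pos] = rng.randrange(len(candidates[pos]))
            new_cost = sequence_cost(candidates, neighbour)
            delta = new_cost - cur_cost
            # Metropolis criterion: always accept improvements, and accept
            # a worse solution with probability exp(-delta / t).
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                current, cur_cost = neighbour, new_cost
                if cur_cost < best_cost:
                    best, best_cost = current[:], cur_cost
        t *= alpha  # geometric cooling
    return best, best_cost
```

Because only one sequence is evaluated per step, the number of cost evaluations depends on the cooling schedule rather than on the number of possible unit combinations.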

1.7 Dissertation Layout

This dissertation is divided into six chapters. Chapter 1 covers the introduction, background, objectives and scope of the dissertation. Its purpose is to show how this research differs from conventional methods.

Chapter 2 provides a comprehensive study of various unit selection methods. The focus is on the cost function for unit selection, speech features and spectral distance. It also includes a discussion of Simulated Annealing (SA), with the purpose of laying a foundation for possible approaches to improving the performance of SA.

Chapter 3 describes the proposed system and its implementation. It discusses the process involved in generating the waveform for a synthesized word, from contextual linguistics and selection of speech units to concatenation and output sound.

Chapter 4 describes the procedure for SA. It also describes the unit selection procedure, from contextual linguistics and SA to concatenation. Various parameter settings and neighbourhood generation mechanisms for SA are used to investigate the performance of SA.

Chapter 5 presents the listening test for the synthesized words based on the results in Chapter 4. Its purpose is to justify the contribution of the concatenation cost to improving speech quality.

Chapter 6 provides the conclusion for the system. It also gives some recommendations for further improvement of the system.

CHAPTER 2

LITERATURE REVIEW

2.1 Speech synthesis

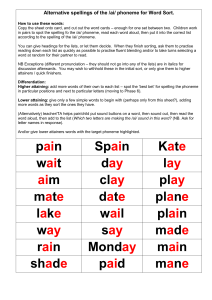

There are two types of speech synthesis methods (Figure 2.1): parameter synthesis and concatenative synthesis (Hirai and Tenpaku, 2004). Parameter synthesis involves encoding and decoding speech samples (Hirai and Tenpaku, 2004). Before the speech samples are stored in a database, they are encoded into parameters such as Linear Predictive Coefficients (LPC). In the synthesis stage, these speech samples are decoded. The encoding is required because of the small memory storage available. The encoding process loses information, and as a consequence speech intelligibility degrades (Hirai and Tenpaku, 2004). Concatenative synthesis does not involve encoding and decoding speech samples. During speech concatenation, the original speech segments are selected and concatenated (Hirai and Tenpaku, 2004). “Original” here means that the segments are used as they are, or only lightly processed. In this case, speech intelligibility and the speaker’s identity are maintained since the information is well preserved. However, this method requires large storage capacity and incurs a large computational cost when searching for appropriate speech segments to concatenate. The issues of storage and computational cost are being resolved by ever-increasing advances in computer technology (Hirai and Tenpaku, 2004). For the synthesis system in this dissertation, the length of a segment is a phoneme.

[Diagram: waveform synthesis divides into parametric synthesis (source-filter, articulatory) and concatenative synthesis (fixed inventory: diphones, triphones, demisyllables; nonuniform unit selection).]

Figure 2.1 Classes of waveform synthesis methods for speech synthesis (Schwarz, 2007).

2.1.1 Concatenative Speech Synthesis

Several different techniques exist for synthesizing speech (Chappell and Hansen, 2002). The corpus-based concatenative approach to speech synthesis has been widely explored in recent years (Sakai et al., 2008). Concatenative synthesis starts with a collection of speech waveform signals and concatenates individual segments to form a new utterance. The concatenative approach is based on the idea of re-combining natural prosodic contours and phoneme sequences using a superpositional framework (Jan et al., 2005). In concatenative speech synthesis, speech segments, each of which is often generalized as a unit, are selected from a speech corpus by minimizing the overall cost. The Viterbi search (Figure 2.2), which is based on the dynamic programming (DP) approach, is typically employed to find the unit sequence with the minimal cost (Nishizawa and Kawai, 2008). Because concatenative synthesis begins with a set of natural speech segments, the final speech is more natural than with other forms of synthesis (Chappell and Hansen, 2002).

Figure 2.2 Viterbi search (Sakai et al., 2008)
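A dynamic-programming search of this kind can be sketched in Python as follows. The sketch is an illustration under assumptions rather than the algorithm of any cited system: `candidates` is a list of candidate lists (one per target position), and `target_cost` and `join_cost` are caller-supplied functions whose names and signatures are hypothetical.

```python
def viterbi(candidates, target_cost, join_cost):
    """Return (path, total): the cheapest candidate index per position.

    target_cost(i, unit) scores a unit against target position i;
    join_cost(prev_unit, unit) scores the join between two units.
    """
    # best[i][j] = (cost of the cheapest path ending at candidate j of
    #               position i, index of its predecessor at position i - 1)
    best = [[(target_cost(0, u), None) for u in candidates[0]]]
    for i in range(1, len(candidates)):
        row = []
        for u in candidates[i]:
            # Try every predecessor and keep the cheapest way to reach u.
            cost, back = min(
                (best[i - 1][k][0] + join_cost(p, u), k)
                for k, p in enumerate(candidates[i - 1])
            )
            row.append((cost + target_cost(i, u), back))
        best.append(row)
    # Trace back from the cheapest final candidate.
    j = min(range(len(best[-1])), key=lambda k: best[-1][k][0])
    total = best[-1][j][0]
    path = []
    for i in range(len(candidates) - 1, -1, -1):
        path.append(j)
        j = best[i][j][1]
    path.reverse()
    return path, total
```

The nested loop over each candidate and each of its possible predecessors is what makes the cost of this search grow with the square of the number of candidates per position.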

There are several possible choices of basic synthesis unit in a concatenative speech synthesizer, such as phonemes, diphones, demisyllables, syllables, words or phrases (Min et al., 2001). Smaller and larger units each have their own advantages and disadvantages. For small units such as phonemes, it is not difficult to collect a speech corpus that embodies many prosodic and spectral varieties (Min et al., 2001). The disadvantage is that the synthesized speech tends to suffer more distortion caused by mismatches between concatenated units, since small units mean many more concatenation points. For larger units such as words or phrases, it is almost impossible to cover many varieties in a feasible size (Min et al., 2001), although they have fewer concatenation points. Each segment’s boundary for concatenation is chosen during synthesis to best fit the adjacent segments in the synthesized utterance. Spectral mismatch can be computed using an objective measure to determine the level of spectral fit between segments at various possible boundaries; the minimum score indicates the closest spectral match (Chappell and Hansen, 2002).
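The boundary choice described above can be illustrated with a short Python sketch. It assumes, for illustration only, that each segment offers a few candidate boundary frames represented as feature vectors and that the mismatch is measured with the Euclidean distance; the function name and data layout are hypothetical, and other spectral measures could be substituted.

```python
import math
from itertools import product

def best_boundary(left_frames, right_frames):
    """Return (i, j, d): the boundary pair with the smallest mismatch.

    left_frames are candidate end frames of the left segment and
    right_frames are candidate start frames of the right segment.
    """
    return min(
        (
            (i, j, math.dist(a, b))
            for (i, a), (j, b) in product(
                enumerate(left_frames), enumerate(right_frames)
            )
        ),
        key=lambda t: t[2],  # compare on the mismatch score only
    )
```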

A large database can yield high speech quality for direct concatenation of segments, since the database contains enough sample segments to include a close match for each desired segment (Chappell and Hansen, 2002). However, a large database is also costly in terms of database collection, search requirements, and segment memory storage and organization (Chappell and Hansen, 2002). For databases that contain multiple instances of each speech unit, segment selection is based on two cost functions, the target cost and the concatenation cost (Chappell and Hansen, 2002). The target cost measures the difference between an available segment and a theoretical ideal segment, and the concatenation cost measures the acoustic smoothness between the concatenated units (Dong and Li, 2008). Several spectral distance measures have been compared for use as concatenation costs (Chappell and Hansen, 2002).

2.2 Unit Selection

The unit selection method has recently become the major approach in speech synthesis and has captured the attention of most researchers. To yield high-quality TTS systems, the speech units need to be carefully selected from a large database of continuous read speech recorded by a professional speaker (Sarathy and Ramakrishnan, 2008). To produce enough speech target specifications for unit selection, the database should be designed to cover as much of the prosodic and phonetic characteristics of the language as possible (Sarathy and Ramakrishnan, 2008). Unit selection becomes much slower when a larger unit database is used for high-quality sound, because the computational effort in the search for the optimal unit sequence is proportional to the square of the number of possible units (Nishizawa and Kawai, 2006). The aim of unit selection speech synthesis is to select a sequence of units that requires little signal processing or, ideally, no signal processing at all (Robert et al., 2007).

In current speech synthesizers, the process of unit selection is based on some type of dynamic programming that selects units from a large database by minimizing the integrated cost (Wu et al., 2004). In corpus-based TTS, the optimum unit sequence is found by a Viterbi algorithm that minimizes a cost function (Díaz and Banga, 2006). There are two types of spectral distortion in unit selection synthesis: contextual unit distortion and inter-unit distortion (Sagisaka, 1994). The unit selection algorithm uses two cost functions: the target cost and the concatenation cost (Fek et al., 2006). The quality of unit-selection-based concatenative speech synthesis is determined by how well two successive units can be joined together to minimize audible discontinuities (Vepa et al., 2002). The concatenation cost is used as the objective measure of discontinuity.

Contextual unit distortion and inter-unit distortion are usually represented by the target cost and the concatenation cost respectively. The contextual unit distortion is a measure, over a whole unit, of the distortion caused by the difference between the unit-extraction context and the target synthesis context. Most of the time, the phonetic contextual difference is used to represent this contextual unit distortion (Sagisaka, 1994). Inter-unit distortion is a measure of spectral discontinuity at unit concatenation boundaries. This spectral discontinuity is computed from the difference in spectral envelopes of neighboring units at the concatenation points (Sagisaka, 1994).

Combinations of units should be considered in unit selection, since the suitability of a synthesis unit includes not only the similarity between a synthesis target and a selected unit but also the smoothness between neighboring units (Nishizawa and Kawai, 2006). According to Fek et al. (2006), the target cost is zero if the phonetic context is fully matched, that is, if the left and right phonetic contexts of the input unit and the candidate match exactly. Likewise, the concatenation cost is zero if the units were consecutive in the speech database (Fek et al., 2006).
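The two zero-cost rules above can be sketched in Python as follows. Only the zero cases come from the text; everything else is an assumption made for illustration: the `Unit` fields, counting mismatched contexts as the non-zero target cost, and falling back to a Euclidean distance between boundary frames for the non-zero concatenation cost.

```python
import math
from dataclasses import dataclass

@dataclass
class Unit:
    phoneme: str
    left: str     # phoneme preceding the unit in its source recording
    right: str    # phoneme following the unit in its source recording
    utt: int      # hypothetical id of the source recording
    index: int    # position of the unit within that recording
    start: tuple  # feature frame at the start of the unit
    end: tuple    # feature frame at the end of the unit

def target_cost(unit, want_left, want_right):
    """Zero when both phonetic contexts match; otherwise the number of
    mismatched sides (an illustrative choice for the non-zero case)."""
    return (unit.left != want_left) + (unit.right != want_right)

def concatenation_cost(a, b):
    """Zero when b directly followed a in the database; otherwise an
    assumed Euclidean distance between the frames at the join."""
    if a.utt == b.utt and b.index == a.index + 1:
        return 0.0
    return math.dist(a.end, b.start)
```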

The coverage of units across all speaker attitudes and prosodic styles is one of the biggest problems in the unit selection approach (Sarathy and Ramakrishnan, 2008). Even recording several hundred thousand sentences is not enough to guarantee full coverage of target feature combinations (Sarathy and Ramakrishnan, 2008). Therefore, it is very important that unit selection replace units that do not match the target specification with the closest substitutes.

To improve the naturalness of the synthesized speech, one possible approach is to increase the length of the basic units from demi-phones, phones, di-phones, tri-phones, syllables and words to non-uniform or variable-length units (Wu et al., 2004). Since fewer concatenation points yield less spectral discontinuity, longer synthesis units reduce the effect of spectral distortion (Wu et al., 2004).

In concatenative systems, speech units can be either fixed-size diphones or variable-length units (Hasim et al., 2006). Fixed-size units allow only one unit length in the speech unit database. The unit length can be a phoneme, diphone, syllable or word, depending on the application. The approach that uses variable-length units is known as unit selection (Hasim et al., 2006). For Malay speech unit concatenation, two types of units are used, namely single unit and ‘unit selection’. The single-unit method stores only one occurrence of each possible unit. For this type of method, the diphone level has been used by most research and by available TTS systems such as the Festival Speech Synthesis System, Mbrola, and German TTS systems (Taylor et al., 1999).

For the unit selection method, a large speech corpus containing more than

one instance of each unit is recorded, so the selection is more accurate and has

multiple choices for every corresponding unit. The variable length units are selected

based on some estimated objective measure to optimize the synthetic speech quality

(Hasim et al., 2006).

2.2.1

Non-Uniform or Variable Length Unit Selection

Nowadays, many speech synthesis systems use non-uniform or variable

length unit concatenation as an effort to minimize audible signal discontinuities at

the concatenation points (Stylianou and Syrdal, 2001). To produce the output speech,

the most appropriate units of variable lengths, with the desired prosodic features

within the corpus are automatically retrieved and selected on-line in real-time, and

concatenated during the synthesis process (Chou and Tseng, 1998). Usually, longer

units can be used in the synthesis if they appear in the corpus with the desired prosodic

features. This significantly reduces the need for signal modification to obtain the

desired prosodic features for a voice unit, and such modification usually degrades

the naturalness of the synthesized speech (Chou and Tseng, 1998). The quality of a

generated waveform depends mainly on the number of concatenation points.

Therefore, speech of higher perceived quality can be produced as the length of the

elements used in the synthesized speech increases, that is, as the number of

concatenation points decreases (Nagy et al., 2005).

2.2.2

Corpus-based Unit Selection

The concept of corpus-based or unit selection synthesis is that the corpus is

searched for maximally long phonetic strings to match the sounds to be synthesized

(Piits et al., 2007). Corpus-based speech tends to elicit considerably higher ratings

of naturalness in auditory tests than diphone or triphone synthesis (Nagy et al.,

2005). This is because there are fewer real concatenation points (Fek et al.,

2006). To solve the problems with fixed-size unit inventory synthesis, e.g.

diphone synthesis, corpus-based concatenative speech synthesis (unit selection)

has been proposed as a promising methodology (Hasim et al., 2006). The speech

corpus contains more than one instance of each unit in order to capture the

prosodic and spectral variability found in natural speech. In corpus-based systems,

acoustic units of varying sizes are selected from a large speech corpus and

concatenated. If an appropriate unit is found in the unit inventory, the need for

signal modification of the selected units is minimized (Hasim et al., 2006). Because

there is more than one instance of each unit, a unit selection algorithm is

required to choose the units from the inventory that best match the target

specification of the input sequence of units (Hasim et al., 2006). A factor that has

been argued to contribute to the perceived lack of naturalness of synthesized

speech is the frequency of unit concatenations (Möbius, 2000). The unit selection

algorithm therefore favors choosing adjacent speech segments in order to minimize

the number of joins (Hasim et al., 2006). Thus, corpus-based approaches overcome

the limitations of concatenative synthesis with a fixed acoustic unit inventory.

Unit selection produces much better output speech quality than fixed-size

unit inventory synthesis in terms of naturalness. However, unit selection still

faces some challenges. One problem is the inconsistent quality of the resulting

speech (Hasim et al., 2006). If the unit selection algorithm fails to find a good

match for a target unit, the selected unit must undergo prosodic

modifications, which degrade the speech quality at that segment join. To overcome this

problem, the speech corpus should be designed to cover all the prosodic and acoustic

variations of the units that can be found in an utterance. Recording

larger and larger databases is infeasible, since it slows down unit selection. A better way is

to aim for optimal coverage of the language. Another problem is that

glitches appear at the concatenation points in the synthesized

utterance when the speech waveforms are concatenated. A speech model is generally

used for speech representation and waveform generation to ensure smooth

concatenation of speech waveforms and to enable prosodic modifications on the

speech units (Hasim et al., 2006). Figure 2.3 shows the components of the Malay

waveform generation module.

Figure 2.3 Architecture of corpus-based unit selection concatenative speech

synthesizer.

Algorithm 2.1 shows a procedure for finding an appropriate speech unit sequence

for synthesis using the perturbation of model parameters (Hirai et al., 2002).

Algorithm 2.1

1. Estimate the values of the speech features from the input text. These

values are called the “expected values” of a feature.

2. According to the expected values, find the speech unit sequence with the

minimum cost $C_0$ in the speech database and assign the IDs of the units in

the sequence to $S_0$.

3. Assign the cost $C_0$ and the speech unit sequence $S_0$ to $C_{min}$ and $S_{min}$.

4. Choose a feature from among F0, speech unit duration, power, etc., and

assign it to $F$. Then execute the following steps:

4.1 Adjust the model parameter values of $F$ within the range in

which naturalness and clarity are maintained, and assign the new sequence of

expected values to $T$.

4.2 Based on $T$, find the speech unit sequence $S$ with the minimum cost

$C$ in the speech database. If $C < C_{min}$, then assign $C$ and $S$ to

$C_{min}$ and $S_{min}$.

5. Repeat step (4) until the number of repetitions exceeds the limit or until there

is no expectation of improving $C_{min}$.

6. Synthesize the speech based on the speech unit sequence $S_{min}$.
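The loop structure of Algorithm 2.1 can be sketched in Python as follows. This is only an illustrative sketch: the callables `find_best_sequence` and `perturb`, the stopping parameter `patience`, and the toy database are assumptions introduced here and do not come from Hirai et al. (2002).

```python
def perturbation_search(expected, find_best_sequence, perturb,
                        max_iters=20, patience=5):
    """Sketch of Algorithm 2.1 with hypothetical callables.

    expected           : dict of expected feature values (step 1)
    find_best_sequence : expected -> (cost, unit_ids), the minimum-cost search
    perturb            : (expected, feature, step) -> adjusted expected values
    """
    c_min, s_min = find_best_sequence(expected)          # steps 2 and 3
    features = list(expected)
    stale = 0                                            # iterations without improvement
    for step in range(max_iters):                        # step 5: repetition limit
        feature = features[step % len(features)]         # step 4: choose F
        target = perturb(expected, feature, step)        # step 4.1: new values T
        cost, sequence = find_best_sequence(target)      # step 4.2: search with T
        if cost < c_min:
            c_min, s_min, stale = cost, sequence, 0
        else:
            stale += 1
        if stale >= patience:                            # no improvement expected
            break
    return c_min, s_min


# Toy data (invented): two stored sequences described by mean F0 and duration.
DATABASE = {
    "seq_a": {"f0": 118.0, "duration": 0.12},
    "seq_b": {"f0": 126.0, "duration": 0.10},
}

def toy_find_best(target):
    def cost(feats):
        return (abs(feats["f0"] - target["f0"]) / 100.0
                + abs(feats["duration"] - target["duration"]))
    best = min(DATABASE, key=lambda name: cost(DATABASE[name]))
    return cost(DATABASE[best]), [best]

def toy_perturb(expected, feature, step):
    # Scale one feature by a few percent; the band is an assumed "natural" range.
    factors = [1.05, 0.95, 1.02, 0.98]
    adjusted = dict(expected)
    adjusted[feature] = expected[feature] * factors[step % len(factors)]
    return adjusted

best_cost, best_sequence = perturbation_search(
    {"f0": 120.0, "duration": 0.10}, toy_find_best, toy_perturb)
print(best_cost, best_sequence)
```

In this toy run, raising the expected F0 by 5% makes the second stored sequence an almost exact match, so the search replaces the initial choice. A real system would replace the two toy callables with its own prosody model and unit search.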

2.3

Cost function for unit selection

The cost function for unit selection is viewed as a transformation of objective

features such as acoustic measures and linguistic information, into a perceptual

measure (Figure 2.4). The predicted perceptual measure that is expected to capture

the degradation of synthetic speech naturalness is considered a cost (Toda et al.,

2006).

Ideally, the components of the cost function should be determined from the

results of perceptual experiments. In practice, acoustic measures can be

transformed into a perceptual measure experimentally only when the

acoustic features have simple structures, as in the case of F0 or

phoneme duration. Most of the time, acoustic features have such complex structures

that this kind of experiment is infeasible (Toda et al., 2006). Nothing satisfactory has

been found so far, although various studies have searched for an

acoustic measure that can capture perceptual characteristics (Klabbers and Veldhuis,

2001; Stylianou and Syrdal, 2001; Ding and Campbell, 1998; Wouters and Macon,

1998).

Besides, phonetic information can be transformed into perceptual measures

from perceptual experiments (Kawai and Tsuzaki, 2002). However, acoustic

measures that can represent the characteristics of instances of waveform segments

are still necessary since phonetic information can only evaluate the difference

between phonetic categories (Toda et al., 2006).

Figure 2.4 Schematic diagram of cost function (Toda, 2003).

2.3.1

The Acoustic Parameters

The parameters that fall into this category are normally prosodic parameters

that describe the pitch and duration of a unit. Prosody alone is not enough to

reflect spectral mismatches, so both spectral parameters and prosodic parameters need

to be included for unit selection (Dong and Li, 2008). In this dissertation, 12

MFCCs are used to represent spectral information, and the basic synthesis

unit is the phoneme.

2.3.2

Linguistic Features

The linguistic features are used for predicting the acoustic parameters and

can be obtained from the input text. The linguistic features that can be

derived from the utterance files are the following (Dong and Li, 2008):

• Context units: phone identities of the previous two and next two units.

• Syllable information: stress, accent and length of the previous, current and next syllables.

• Syllable position information: syllable position in word and phrase, stressed syllable position in phrase, accented syllable position in phrase, distance from the stressed syllable, distance from the accented syllable, and name of the vowel in the syllable.

• Word information: length and part-of-speech of the previous, current and next words, and position of the word in the phrase.

• Phrase information: lengths (in number of words and syllables) of the previous, current and next phrases, position of the current phrase in the major phrase, and boundary tone of the current phrase.

• Utterance information: lengths in number of syllables, words and phrases.

2.3.3

Local cost

The local cost expresses the degradation of naturalness caused by an individual

candidate unit: the higher the local cost, the less natural the synthesized

speech. The cost function is defined as a weighted sum of five sub-cost

functions (Table 2.1). Each sub-cost represents either source information or vocal

tract information (Toda et al., 2006).

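Table 2.1 Sub-cost functions (Toda, 2003).

Information type           Sub-cost                       Symbol
Source information         Prosody (F0, duration)         $C_{pro}$
Source information         F0 discontinuity               $C_{F_0}$
Vocal tract information    Phonetic environment           $C_{env}$
Vocal tract information    Spectral discontinuity         $C_{spec}$
Vocal tract information    Phonetic inappropriateness     $C_{app}$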

The local cost $LC(u_i, t_i)$ at a candidate unit $u_i$ for a target phoneme $t_i$ is

given by

$$
LC(u_i, t_i) = w_{pro} \cdot C_{pro}(u_i, t_i) + w_{F_0} \cdot C_{F_0}(u_i, u_{i-1}) + w_{env} \cdot C_{env}(u_i, u_{i-1}) + w_{spec} \cdot C_{spec}(u_i, u_{i-1}) + w_{app} \cdot C_{app}(u_i, t_i),
$$

$$
w_{pro} + w_{F_0} + w_{env} + w_{spec} + w_{app} = 1,
$$

where $w_{pro}$, $w_{F_0}$, $w_{env}$, $w_{spec}$ and $w_{app}$ denote the weights for their respective sub-costs. All

sub-costs need to be normalized so that they have positive values with the same

mean. The previous unit $u_{i-1}$ denotes a candidate unit for the $(i-1)$th target phoneme

$t_{i-1}$ (Toda et al., 2006). The sub-costs $C_{F_0}$, $C_{env}$ and $C_{spec}$ become null when the

candidate units $u_{i-1}$ and $u_i$ are adjacent in the corpus. The five sub-costs can be

further divided into target cost $C_t$ and concatenation cost $C_c$ (Hunt and Black, 1996;

Campbell and Black, 1997).

The unit selection process is based on two types of cost function which are

target cost and concatenation cost (Dong and Li, 2008). The local cost (Toda, 2003;

Toda et al., 2006) is given by

$$
LC(u_i, t_i) = w_t \cdot C_t(u_i, t_i) + w_c \cdot C_c(u_i, u_{i-1}), \qquad w_t + w_c = 1,
$$

such that the target cost $C_t$ and concatenation cost $C_c$ can be written as

$$
C_t(u_i, t_i) = \frac{w_{pro}}{w_t} \cdot C_{pro}(u_i, t_i) + \frac{w_{app}}{w_t} \cdot C_{app}(u_i, t_i),
$$

$$
C_c(u_i, u_{i-1}) = \frac{w_{env}}{w_c} \cdot C_{env}(u_i, u_{i-1}) + \frac{w_{spec}}{w_c} \cdot C_{spec}(u_i, u_{i-1}) + \frac{w_{F_0}}{w_c} \cdot C_{F_0}(u_i, u_{i-1}).
$$

Since $\frac{w_{pro}}{w_t} + \frac{w_{app}}{w_t} = 1$, it follows that $w_t = w_{pro} + w_{app}$.

Similarly, $\frac{w_{env}}{w_c} + \frac{w_{spec}}{w_c} + \frac{w_{F_0}}{w_c} = 1$, and therefore $w_c = w_{F_0} + w_{env} + w_{spec}$.
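As a quick numerical check of this regrouping, the following Python sketch computes the local cost both as the five-term weighted sum and as the two-term sum of target and concatenation costs. The weight and sub-cost values are invented purely for illustration.

```python
def local_cost_five(w, c):
    """Five-term local cost; w and c are dicts keyed by sub-cost name."""
    return sum(w[k] * c[k] for k in ("pro", "F0", "env", "spec", "app"))

def local_cost_two(w, c):
    """Same cost regrouped as w_t * C_t + w_c * C_c."""
    w_t = w["pro"] + w["app"]
    w_c = w["F0"] + w["env"] + w["spec"]
    c_t = (w["pro"] * c["pro"] + w["app"] * c["app"]) / w_t
    c_c = (w["F0"] * c["F0"] + w["env"] * c["env"] + w["spec"] * c["spec"]) / w_c
    return w_t * c_t + w_c * c_c, w_t, w_c

# Illustrative values only; the weights sum to one as required.
weights = {"pro": 0.30, "F0": 0.10, "env": 0.20, "spec": 0.25, "app": 0.15}
subcosts = {"pro": 0.4, "F0": 0.0, "env": 0.7, "spec": 0.2, "app": 0.5}

five = local_cost_five(weights, subcosts)
two, w_t, w_c = local_cost_two(weights, subcosts)
print(five, two, w_t, w_c)
```

Both forms give the same value, and the derived weights satisfy $w_t + w_c = 1$, so the two-term form is only a regrouping of the five sub-costs and not a different cost.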

The target cost takes into account the phone label and its position in the

word, the phone label and its position in the syllable, and the segment duration

(according to statistical data). The concatenation cost favors

adjacent phones taken from the same speech segment, avoids overly large

duration, pitch and energy differences between adjacent phones, and favors

similar phonetic features for adjacent phones (Prudon et al., 2002). It is not easy to

reach a balance between the target cost and the concatenation cost. If more weight is given to the

concatenation cost, the target cost is weighted low, which may result in bad

synthesis. Combining these two costs is necessary as a way of lessening this behavior

(Vepa and King, 2004).

2.3.3.1 Sub-cost on prosody: $C_{pro}$

The difference in prosodic parameters (F0 contour and phoneme duration)

between a candidate segment and the target causes a degradation of naturalness.

The $C_{pro}$ sub-cost captures this degradation

(Toda et al., 2006). To calculate the difference in the F0 contour, a

phoneme is divided into several parts, and the difference in averaged log-scaled

F0 is computed in each part. The prosodic cost is represented as the average of the

costs calculated in each part of the phoneme (Toda, 2003). This sub-cost function (Toda et al.,

2006) can be estimated from the results of perceptual experiments. The sub-cost $C_{pro}$

can be written as

$$
C_{pro}(u_i, t_i) = \frac{1}{M} \sum_{m=1}^{M} P\left( D_{F_0}(u_i, t_i, m),\, D_d(u_i, t_i) \right) \qquad (2.1)
$$

where $D_{F_0}(u_i, t_i, m)$ is the difference in the averaged log-scaled F0 in the $m$th

divided part. $D_{F_0}$ is set to zero in an unvoiced phoneme. $D_d$ is the difference in

duration; it is calculated for each phoneme and used in the calculation of the

cost in each part. $M$ is the number of divisions (Toda, 2003).

$P$ is a nonlinear function determined from the results of perceptual

experiments on the degradation of naturalness caused by prosody modification,

assuming that the output speech is synthesized with prosody modification. When

prosody modification is not performed, the function should instead be determined from

other experiments on the degradation of

naturalness caused by using a prosody different from that of the target

(Toda, 2003).

2.3.3.2 Sub-cost on F0 discontinuity: $C_{F_0}$

An F0 discontinuity at a segment boundary causes a degradation of

naturalness. The $C_{F_0}$ sub-cost captures this degradation.

This sub-cost (Toda et al., 2006) is computed as the distance based on

the log-scaled F0 at the boundary and is given by

$$
C_{F_0}(u_i, u_{i-1}) = P\left( D_{F_0}(u_i, u_{i-1}),\, 0 \right)
$$

where $D_{F_0}$ is the difference in log-scaled F0 at the boundary and is set to zero at an

unvoiced phoneme boundary. The function $P$ in Equation (2.1) is used to normalize

the dynamic range of the sub-cost. The sub-cost becomes zero when the units $u_{i-1}$ and

$u_i$ are adjacent in the corpus (Toda, 2003).

2.3.3.3 Sub-cost on phonetic environment: $C_{env}$

A mismatch of phonetic environments between a candidate segment and

the target causes a degradation of naturalness (Toda et al., 2006). The $C_{env}$ sub-cost captures this degradation and is given

by

$$
C_{env}(u_i, u_{i-1}) = \frac{1}{2} \left\{ S_s(u_{i-1}, E_s(u_{i-1}), u_i) + S_p(u_i, E_p(u_i), u_{i-1}) \right\} \qquad (2.2)
$$

$$
C_{env}(u_i, u_{i-1}) = \frac{1}{2} \left\{ S_s(u_{i-1}, E_s(u_{i-1}), t_i) + S_p(u_i, E_p(u_i), t_{i-1}) \right\} \qquad (2.3)
$$

$S_s$ and $S_p$ are the sub-cost functions that capture the degradation of naturalness caused

by a mismatch with the succeeding and preceding environments respectively. $E_s$ and

$E_p$ are the succeeding phoneme and preceding phoneme in the corpus respectively.

Thus, $S_s(u_{i-1}, E_s(u_{i-1}), t_i)$ is the degradation caused by the mismatch with the

succeeding environment in the phoneme for $u_{i-1}$ when $E_s(u_{i-1})$ is replaced with the

phoneme for $t_i$, and $S_p(u_i, E_p(u_i), t_{i-1})$ is the degradation caused by the mismatch with

the preceding environment in the phoneme for $u_i$ when $E_p(u_i)$ is replaced with the

phoneme for $t_{i-1}$. The sub-cost functions $S_s$ and $S_p$ are determined from the results

of perceptual experiments. Equation (2.2) is transformed into Equation (2.3) by

considering that the phoneme for $u_i$ is equivalent to the phoneme for $t_i$ and the phoneme

for $u_{i-1}$ is equivalent to the phoneme for $t_{i-1}$ (Toda, 2003).

This sub-cost is calculated from the results of perceptual experiments. It

does not always become zero even when there is no mismatch of phonetic

environments, since it also reflects the difficulty of

concatenation caused by the uncertainty of segmentation (Klabbers and Veldhuis,

2001). The sub-cost becomes null when the units $u_{i-1}$ and $u_i$ are adjacent in the

corpus (Toda, 2003).

2.3.3.4 Sub-cost on spectral discontinuity: $C_{spec}$

A spectral discontinuity at a segment boundary causes a degradation of

naturalness. The $C_{spec}$ sub-cost captures this degradation

(Toda et al., 2006). This sub-cost is determined as the weighted sum of

the mel-cepstral distortion between frames of a segment and those of the preceding

segment around the boundary (Toda, 2003). The sub-cost (Toda, 2003) can be

written as

$$
C_{spec}(u_i, u_{i-1}) = c_s \cdot \sum_{f=-w/2}^{w/2-1} h(f) \, MCD(u_i, u_{i-1}, f)
$$

where $h$ is the triangular weighting function of length $w$. $MCD(u_i, u_{i-1}, f)$ is the

mel-cepstral distortion between the $f$th frame from the concatenation frame

($f = 0$) of the preceding segment $u_{i-1}$ and the $f$th frame from the concatenation

frame ($f = 0$) of the succeeding segment $u_i$ in the corpus. Concatenation is

conducted between the $-1$th frame of $u_{i-1}$ and the $0$th frame of $u_i$. $c_s$ is a

coefficient to normalize the dynamic range of the sub-cost. The mel-cepstral

distortion (Toda, 2003) calculated in each frame-pair is written as

$$
\frac{20}{\ln 10} \cdot \sqrt{2 \cdot \sum_{d=1}^{40} \left( mc_{\alpha}^{(d)} - mc_{\beta}^{(d)} \right)^{2}}
$$

where $mc_{\alpha}^{(d)}$ and $mc_{\beta}^{(d)}$ denote the $d$th order mel-cepstral coefficient of a frame $\alpha$ and

that of a frame $\beta$, respectively. This sub-cost becomes zero when the segments

$u_{i-1}$ and $u_i$ are adjacent in the corpus (Toda, 2003).
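The spectral sub-cost can be illustrated with a short Python sketch. It assumes the mel-cepstral distortion takes the form $(20/\ln 10)\sqrt{2\sum_d(\cdot)^2}$, and it assumes a triangular window that peaks at the join and is normalized to sum to one; the text only states that $h$ is triangular, so the exact shape, the function names and the toy frames below are illustrative assumptions.

```python
import math

def mel_cepstral_distortion(mc_a, mc_b):
    """(20 / ln 10) * sqrt(2 * sum_d (mc_a[d] - mc_b[d])**2) for two frames."""
    squared = sum((a - b) ** 2 for a, b in zip(mc_a, mc_b))
    return 20.0 / math.log(10.0) * math.sqrt(2.0 * squared)

def triangular_weights(w):
    """Weights h(f) for f = -w/2 .. w/2 - 1, peaking at the join, summing to 1.

    The exact shape of h is an assumption; the text only calls it triangular.
    """
    half = w // 2
    raw = [half + 0.5 - abs(f + 0.5) for f in range(-half, half)]
    total = sum(raw)
    return [r / total for r in raw]

def spectral_subcost(frames_prev, frames_next, c_s=1.0):
    """C_spec for one join.

    frames_prev[k] and frames_next[k] hold the mel-cepstra of frame f = k - w/2
    around the boundary of u_{i-1} and u_i in their original corpus contexts.
    """
    w = len(frames_prev)
    if w % 2 or w != len(frames_next):
        raise ValueError("need two contexts of the same even length w")
    h = triangular_weights(w)
    return c_s * sum(
        h[k] * mel_cepstral_distortion(frames_prev[k], frames_next[k])
        for k in range(w)
    )

# Two-coefficient toy frames (the formula in the text sums over 40 orders).
prev_ctx = [[1.0, 0.5], [1.1, 0.4], [1.2, 0.4], [1.3, 0.3]]
next_ctx = [[1.0, 0.5], [1.0, 0.4], [1.1, 0.4], [1.3, 0.3]]
print(triangular_weights(4))
print(spectral_subcost(prev_ctx, next_ctx))
```

When both contexts are identical, as they are for two units that are adjacent in the corpus, every frame-pair distortion is zero and so is the sub-cost.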

2.3.3.5 Sub-cost on phonetic appropriateness: $C_{app}$

Phonetic inappropriateness causes a degradation of naturalness. The

$C_{app}$ sub-cost captures the degradation of naturalness caused by this phenomenon

using outlying segments (Toda et al., 2006). The sub-cost is computed as the mel-cepstral distortion between the mean vector of a candidate segment and that of the

target (Toda et al., 2006). The sub-cost $C_{app}$ (Toda, 2003) can be written as

$$
C_{app}(u_i, t_i) = c_t \cdot MCD\left( CEN(u_i),\, CEN(t_i) \right)
$$

where $CEN$ is a mean cepstrum calculated at the frames around the phoneme center.

$MCD$ is the mel-cepstral distortion between the mean cepstrum of the segment

$u_i$ and that of the target $t_i$. $c_t$ denotes a coefficient to normalize the dynamic range of

the sub-cost (Toda, 2003). This sub-cost is set to zero in an unvoiced phoneme

(Toda, 2003).

2.3.3.6 Other sub-costs

In addition, many other sub-costs exist, such as the acoustic phonemic

target cost, phoneme cost and phrase cost. The acoustic phonemic target cost (Jan et al.,

2005) measures the acoustic distance to the acoustic template of a phonetic class.

The foot or phoneme cost is assigned to violations of the same-class constraint. The phrase or

foot cost measures mismatches between the target and the phrase match sequence in

terms of the number and lengths of the feet. The sentence or phrase cost measures

mismatches between the target and the phrase match sequence in terms of the

number and lengths of the feet.

The cost definition must consider spectral compatibility, addressed by the sub-costs phonological identity difference, phoneme characteristic difference, signal F0,

signal F0 derivative, signal energy difference and signal energy derivative difference.

Long-term compatibility is addressed by the sub-costs phonological identity difference,

phoneme characteristic difference, syllabic position difference, word position

difference, breath group position difference, system predicted duration difference and

signal duration difference. The sub-costs syllabic position difference, word position

difference and breath group position difference are devised to favour the coherence

of prosodic groups (Blouin et al., 2002).

2.3.3.7 Integrated cost

The optimum set of units for an utterance is selected from a speech corpus in

unit selection. Therefore, local costs for individual units need to be integrated into a

cost for a unit sequence. This cost is referred to as an integrated cost in this

dissertation (Toda et al., 2006). The average cost ($AC$), given by

$$
AC = \frac{1}{N} \cdot \sum_{i=1}^{N} LC(u_i, t_i),
$$

is often used as the integrated cost (Hunt and Black, 1996; Campbell and Black,

1997), where $N$ denotes the number of targets in the utterance. The silence before the

utterance is represented by the target $t_0$ and the candidate $u_0$, whereas the silence

after the utterance is denoted by $t_N$ and $u_N$. Both the sub-costs $C_{pro}$ and $C_{app}$ are

fixed to zero for the pause. Since the average cost shows the level of naturalness over

the entire synthetic utterance, a unit with a high cost can still be selected in

the output sequence of units, provided that the sequence is optimal in terms of the

average cost (Toda et al., 2006).

It might be assumed that the largest cost in the sequence has a

significant effect on the degradation of naturalness in synthetic speech. The

maximum cost ($MC$), given as an integrated cost by

$$
MC = \max_{i} \left\{ LC(u_i, t_i) \right\}, \qquad 1 \le i \le N,
$$

is used to verify this assumption. Besides these two types of integration methods,

there is another cost called the norm cost, $NC_p$, which is given by

$$
NC_p = \left[ \frac{1}{N} \cdot \sum_{i=1}^{N} \left\{ LC(u_i, t_i) \right\}^{p} \right]^{\frac{1}{p}}.
$$

The norm cost is equivalent to the average cost when $p$ is set to 1, and it

approaches the maximum cost as $p$ approaches infinity. Thus, the

mean value and the maximum value can be obtained using this norm cost by

varying $p$.
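The relationship between the three integrated costs can be checked numerically. The following Python sketch uses an invented list of local costs; the function names are chosen here for illustration only.

```python
def average_cost(costs):
    """AC: mean of the local costs."""
    return sum(costs) / len(costs)

def maximum_cost(costs):
    """MC: largest local cost in the sequence."""
    return max(costs)

def norm_cost(costs, p):
    """NC_p: p-th root of the mean of the p-th powers of the local costs."""
    return (sum(c ** p for c in costs) / len(costs)) ** (1.0 / p)

local_costs = [0.2, 0.5, 0.9, 0.4]   # illustrative values

for p in (1, 2, 10, 200):
    print(p, norm_cost(local_costs, p))
print("AC =", average_cost(local_costs), "MC =", maximum_cost(local_costs))
```

With $p = 1$ the norm cost reproduces the average cost, and as $p$ grows the largest local cost dominates the sum, so the value moves towards the maximum cost. Intermediate values of $p$ therefore trade off overall naturalness against the single worst unit.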

2.4

Cost weighting

Many combinations of features exist for which not every feature can be

satisfied simultaneously. Therefore, some compromises need to be made. Since each

sub-cost has its own relative importance in the whole cost function, tuning

the weights to reflect that importance is an important stage in the design of the

selection algorithm (Díaz and Banga, 2006). The highest weight is

assigned to the most important sub-cost. Various approaches have been presented for

automatically tuning the weights of the cost functions employed in the speech unit

selection process. In one such approach, various weight sets were integrated into the selection process

and the set that gave the smallest mean square error between the original and the

synthetic pitch contours was selected as the candidate. However, the results show that

no set of weights performs consistently across all (or almost

all) of the sets (Díaz and Banga, 2006). Thus, adjusting the weights by manual tuning

seems to be the only solution to this problem, and further research needs to be carried

out on optimizing the weights.

One possible approach to weight optimization, however, is

multiple linear regression (MLR). The sub-cost weights are optimized by a multiple linear regression calculated on sub-cost values generated from the training corpus, as a function of an acoustic measure

of concatenation quality (Blouin et al.,

2002).
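A least-squares fit of this kind can be sketched in plain Python. The sketch below is an assumption-laden illustration and not the procedure of Blouin et al. (2002): it fits weights without an intercept by solving the normal equations, and the sub-cost rows and quality values are invented so that the true weights are 0.7 and 0.3.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]      # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_weights(subcosts, quality):
    """Least-squares weights w minimising ||X w - y||^2 (normal equations)."""
    k = len(subcosts[0])
    xtx = [[sum(row[i] * row[j] for row in subcosts) for j in range(k)]
           for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(subcosts, quality))
           for i in range(k)]
    return solve_linear(xtx, xty)

# Invented training data: two sub-costs per join and an acoustic quality
# measure generated as 0.7 * first + 0.3 * second.
rows = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
quality = [0.7, 0.3, 1.0, 1.7]

fitted = fit_weights(rows, quality)
print(fitted)
```

On real data the fit would not be exact, and the fitted weights would typically be rescaled so that they sum to one before being used in the cost function.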

In addition, target cost weights can be adjusted automatically using linear

regression in the synthesis system. When the context cost is included as a target cost element,

the trained weights must be used with care, since some context mismatches occur

when using such weights. To solve this problem, adjust-listen operations need to be

performed starting from the automatically trained weights until satisfactory results

are obtained. The need for such manual tuning may arise because the objective

function used in weight training is not perceptually suitable (Hamza et al., 2001).

2.5

Target cost

The target cost is a measure of how far a candidate unit is from the

desired position in the synthesized phrase. In the classical approach, it is measured

against, for example, a desired pitch contour; here, the distance is derived directly from

the text. It is based on two factors: the syllable’s position in a word, and the presence

and type of a boundary tone. The target cost equals zero if both factors match between the unit

in the corpus and the target phrase. Otherwise, various cost values are assigned for

different cases, depending on the syllable type (stressed, final, other) and the type of

boundary tone (ending, low, high, none) (Janicki et al., 2008).

The target cost function refers to how well a unit’s phonetic contexts and

prosodic characteristics match with those required in the synthetic phone sequence

(Vepa and King, 2004). In other words, the target cost is the mismatch between the desired

and the candidate's acoustic characteristics (Cepko et al., 2008). These characteristics are

represented by features that can be predicted from the input text, such as duration,

intensity and intonation curve. The target cost function captures the degradation of

naturalness caused by the difference between a target and a selected unit in a

mismatch of the phonetic environment, log F0 (fundamental frequency), phone

duration, and MFCC (mel-frequency cepstral coefficients) (Nishizawa and Kawai,

2006). The target cost is usually calculated as the weighted sum of the differences

between prosodic and phonetic parameters of target and candidate units (Vepa and

King, 2004).

The target cost can be further divided into two types which are phonetic

target costs and prosodic target costs. The phonetic target cost (Zhao et al., 2006)

contains sub-costs for the Left Phone Context and the Right Phone Context. The

prosodic target costs (Zhao et al., 2006) contain the sub-costs for Position in Phrase,

Position in Word, Position in Syllable, Accent Level in Word and Emphasis Level in

Phrase, etc.

2.6

Concatenation cost

Features that are included in the concatenation cost calculation may be certain

spectral type coefficients that parameterize borders of the speech units in the corpus.

The concatenation cost is the distortion between these parameters of two adjacent

candidate units (Zhao et al., 2006). Concatenation cost is used to measure the

acoustic smoothness between the concatenated units (Dong and Li, 2008). According

to Nishizawa and Kawai (2006) the concatenation cost function captures the

degradation of naturalness caused by discontinuity at the unit boundary in F0 and

MFCC.

According to Janicki et al. (2008), the concatenation cost is a measure of how

much is lost by concatenating acoustic units. It is also based on linguistic

information, because its main component is a cost related to the change of a

phoneme’s context. This information can be obtained directly from the analysis of

the input and corpus texts and is computed as follows (Janicki et al., 2008):

• a null cost is assigned if the left neighbor in the corpus is exactly the same unit

as the left neighbor in the synthesized phrase,

• a certain cost value is assigned if the left neighbor in the corpus is the same

phoneme as the left neighbor in the synthesized phrase,

• a higher cost is applied if the left corpus neighbor belongs only to the same phonetic

category as the left neighbor in the phrase,

• an even higher cost is applied if only the voicing information agrees,

• the highest cost is set in all other cases.
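The five cases above form a cascade of increasingly loose matches, which can be sketched in Python as follows. The `Unit` fields and the numeric cost levels are hypothetical placeholders; the source only fixes the ordering of the levels, not their values.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Unit:
    unit_id: int     # identifies one recorded instance in the corpus
    phoneme: str
    category: str    # phonetic category, e.g. "vowel" or "nasal"
    voiced: bool

# Placeholder values: the text fixes only the ordering of the five levels.
COST_SAME_UNIT, COST_SAME_PHONEME, COST_SAME_CATEGORY = 0.0, 1.0, 2.0
COST_SAME_VOICING, COST_OTHER = 3.0, 4.0

def context_change_cost(corpus_left, phrase_left):
    """Cost of the change in left context for one candidate unit."""
    if corpus_left.unit_id == phrase_left.unit_id:
        return COST_SAME_UNIT
    if corpus_left.phoneme == phrase_left.phoneme:
        return COST_SAME_PHONEME
    if corpus_left.category == phrase_left.category:
        return COST_SAME_CATEGORY
    if corpus_left.voiced == phrase_left.voiced:
        return COST_SAME_VOICING
    return COST_OTHER

a1 = Unit(1, "a", "vowel", True)
a2 = Unit(2, "a", "vowel", True)
i1 = Unit(3, "i", "vowel", True)
m1 = Unit(4, "m", "nasal", True)
s1 = Unit(5, "s", "fricative", False)

levels = [context_change_cost(a1, other) for other in (a1, a2, i1, m1, s1)]
print(levels)
```

Because each test is only reached when all stricter tests have failed, the function returns the cost of the closest kind of match that holds.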

The concatenation cost also includes a measure of the F0 difference,

which is calculated only for units neighboring voiced parts (Janicki et al.,

2008). It is believed that unit selection that estimates the concatenation cost from

the change in the phoneme’s context, together with the F0 difference, should bring similar

results to methods using spectral distance measures (Wouters and Macon, 1998) but

at much lower computational cost.

The ideal concatenation cost should correlate highly with human listeners’

perceptions of discontinuity at concatenation points. That is, the concatenation

cost should predict the degree of perceived discontinuity even though it is computed

only from measurable properties of the candidate units, such

as spectral parameters, amplitude, and F0 (Vepa and King, 2004).

The concatenation cost function contains a component that measures

differences in the spectral properties of the speech either side of a proposed join

between two candidate units (Vepa and King, 2004). Blouin et al. (2002) proposed

a concatenation cost function based on phonetic and prosodic features. The function

is defined as a weighted sum of sub-costs, each of which is a function of

various symbolic and prosodic parameters. Multiple linear regression was used

to optimize the weights as a function of an acoustic measure of concatenation quality.

Perceptual evaluation results showed that the concatenation sub-cost weights

determined automatically were better than hand-tuned weights, with or without

applying smoothing after unit concatenation (Vepa and King, 2004).

Kawai and Tsuzaki (2002) compared acoustic measures and phonetic features

in terms of their ability to predict audible discontinuities. MFCCs

were employed to derive the acoustic measures, and the distances between certain frames

were measured using Euclidean distance. Phonetic features were found to be more

efficient than acoustic measures in terms of predicting audible discontinuities

(Vepa and King, 2004). Figure 2.5 shows unit selection based on

minimization of the target cost and concatenation cost. Figure 2.6 shows the search

graphs for unit selection for the global optimum and a local minimum.

Figure 2.5 Example of unit search algorithm. The shortest path is marked in blue

(Janicki et al., 2008).

(a) The search graph for the global optimum.

(b) The search graph for the local minimum where d3 is fixed.

Figure 2.6 Example of unit search algorithm. The difference in cost between the

optimal sequences of two graphs is evaluated for d3 in pre-selection (Nishizawa and

Kawai, 2008).
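The shortest-path search illustrated in Figures 2.5 and 2.6 can be sketched as a dynamic-programming (Viterbi-style) search over the candidate units. This is a generic sketch and not the implementation of Janicki et al. (2008) or Nishizawa and Kawai (2008); the unit representation (corpus position, F0), the two cost functions and the weights are invented for illustration.

```python
def viterbi_unit_selection(targets, candidates, target_cost, concat_cost,
                           w_t=0.5, w_c=0.5):
    """Return (total_cost, units) minimising the summed weighted local costs."""
    # best holds, for each candidate at the current position, the cheapest
    # path ending in that candidate as a (cost, path) pair.
    best = [(w_t * target_cost(u, targets[0]), [u]) for u in candidates[0]]
    for i in range(1, len(targets)):
        new_best = []
        for u in candidates[i]:
            cost, path = min(
                ((prev_cost + w_c * concat_cost(prev_path[-1], u), prev_path)
                 for prev_cost, prev_path in best),
                key=lambda item: item[0],
            )
            new_best.append((cost + w_t * target_cost(u, targets[i]), path + [u]))
        best = new_best
    return min(best, key=lambda item: item[0])

# Toy problem: a unit is (corpus_position, f0); a target is a desired F0.
def toy_target_cost(unit, target_f0):
    return abs(unit[1] - target_f0) / 10.0

def toy_concat_cost(prev_unit, unit):
    # Null cost when the two units are consecutive in the corpus.
    return 0.0 if unit[0] == prev_unit[0] + 1 else 1.0

targets = [120.0, 125.0, 130.0]
candidates = [
    [(10, 119.0), (40, 120.0)],
    [(11, 123.0), (70, 125.0)],
    [(12, 131.0), (90, 130.0)],
]

total, path = viterbi_unit_selection(
    targets, candidates, toy_target_cost, toy_concat_cost)
print(total, path)
```

In this toy example the search prefers the three units that are consecutive in the corpus, even though other candidates match the target F0 exactly, because every non-consecutive join adds a concatenation cost.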

2.7

Spectral Distances

The concatenation cost function consists of a distance measure that operates

on some parameterization of the final and initial frames of two units to be

concatenated (Vepa and King, 2004), as shown in Figure 2.7. Thus, a wide variety of

distance measures and parameterizations are possible.

Figure 2.7 Objective Spectral distances (Vepa and King, 2004).

2.8

Feature Extraction

2.8.1

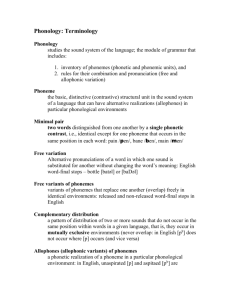

MFCC

One of the most important issues in the field of speech synthesis is feature

extraction (Khor, 2007). MFCCs are a representation defined as the real cepstrum of

a windowed short-time signal, derived from the fast Fourier transform (FFT)

of the speech signal (Vepa and King, 2004). MFCCs differ from the real

cepstrum in that a nonlinear, perceptually motivated frequency scale is used, which

approximates the behavior of the human auditory system. Examples of

parameterizations include linear prediction (LP) coefficients, the LP spectrum, mel

frequency cepstral coefficients (MFCC), line spectral frequencies (LSF), perceptual

linear prediction (PLP) coefficients, the PLP spectrum, and multiple centroid analysis

(MCA) coefficients.

MFCCs perform well in speech recognition systems. Good

performance in speech synthesis systems, meanwhile, is likely to be determined more

by the relationship between parameter values and human perception of speech

sounds (Donovan, 2003). Wouters and Macon (1998) conducted a study to

measure the correlation between a number of likely spectral distance measures based

on different speech parameterizations and human perception of the differences

between speech sounds. Their results indicated that MFCCs worked as well as any

other parameterization they tested, when used in either a Euclidean distance measure

or a Mahalanobis distance measure computed using parameter variances estimated

from their whole database.

[Block diagram. Blocks shown: Speech, Pre-emphasis, Windowing & Overlapping, FFT, Mel-Frequency Filter Bank, Cepstrum, Logged Energy, MFCC, Delta]

Figure 2.8 Block diagram of the conventional MFCC extraction algorithm (Khor,

2007).

MFCCs are capable of capturing the phonetically important characteristics of speech (Wong and Sridharan, 2001). They are coefficients that represent audio in a perceptually motivated way. The mel scale is a perceptual scale of pitches judged by listeners to be equal in distance from one another. A cepstrum is the result of taking the Fourier transform (FT) of the decibel spectrum as if it were a signal, and it serves as an excellent feature vector for representing the human voice and musical signals. When the spectrum is first transformed using mel frequency bands, the resulting coefficients are called MFCCs. The mel scale value m corresponding to a frequency f in hertz is given by


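$$ m = 2595 \log_{10}\left(1 + \frac{f}{700}\right). $$

Because $2595 / \ln 10 \approx 1127$, the transformation from linear frequency to mel frequency can equivalently be written with the natural logarithm as:

$$ \mathrm{Mel}(f) = 1127 \ln\left(1 + \frac{f}{700}\right) $$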
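The two forms of the mel mapping can be checked numerically. The short Python sketch below implements both formulas and the inverse mapping; the function names are illustrative and do not come from the dissertation.

```python
import math

def hz_to_mel_log10(f_hz):
    """Mel value from frequency in Hz, base-10 logarithm form."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def hz_to_mel_ln(f_hz):
    """Mel value from frequency in Hz, natural logarithm form."""
    return 1127.0 * math.log(1.0 + f_hz / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel_log10."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)
```

Both forms map 1000 Hz to approximately 1000 mel, and they differ only through the rounding of the constant 1127.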

It has been discovered that the perception of a particular frequency by the auditory system is influenced by the energy in a critical band around that frequency. Using mel frequencies for the final inverse Fourier transform in the calculation of cepstral coefficients produces the MFCCs (Figure 2.8).
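The stages named in Figure 2.8 can be sketched in Python with NumPy. This is a minimal illustration under several assumptions, not the extraction code used in this work: the frame length, hop size, number of filters and pre-emphasis coefficient are common default choices, the function names are hypothetical, a type-II discrete cosine transform stands in for the final inverse Fourier transform (a common substitution), and the delta stage is omitted.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters whose centres are evenly spaced on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0),
                             n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, centre, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, centre):      # rising slope
            fbank[m - 1, k] = (k - left) / (centre - left)
        for k in range(centre, right):     # falling slope
            fbank[m - 1, k] = (right - k) / (right - centre)
    return fbank

def mfcc(signal, sample_rate, frame_len=400, hop=160, n_fft=512,
         n_filters=26, n_ceps=13, preemph=0.97):
    """Return an array of shape (frames, n_ceps); needs len(signal) >= frame_len."""
    signal = np.asarray(signal, dtype=float)
    # 1. Pre-emphasis: boost high frequencies.
    emphasized = np.append(signal[0], signal[1:] - preemph * signal[:-1])
    # 2. Windowing and overlapping: Hamming-windowed frames.
    n_frames = 1 + (len(emphasized) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(frame_len)
    # 3. FFT: power spectrum of each frame.
    power = np.abs(np.fft.rfft(frames, n_fft)) ** 2 / n_fft
    # 4. Mel-frequency filter bank followed by the logarithm.
    energies = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    log_energies = np.log(energies + 1e-10)
    # 5. Cepstrum: DCT-II of the log filter-bank energies.
    n = np.arange(n_filters)
    basis = np.cos(np.pi * np.outer(np.arange(n_ceps), n + 0.5) / n_filters)
    return log_energies @ basis.T
```

Each row of the result is one feature vector, so the first and last rows of a unit are the boundary frames that a concatenation cost would compare.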

2.9 Distance Measures

The computation of a distance measure involves two stages: feature extraction and quantification. Features are extracted from each pair of candidate units, and then the distance between the feature vectors representing the units is computed (Kirkpatrick and O'Brien, 2006). The distance measure quantifies the difference between two such parameter vectors. Examples of distance measures are the absolute magnitude distance, the Euclidean distance, the Mahalanobis distance and the Kullback-Leibler (KL) divergence. All of the distance measures listed above are metrics except the KL divergence, which is not symmetric. A symmetrical version of the KL divergence can be used to compute the distance between two speech parameterizations (Vepa and King, 2004). Stylianou and Syrdal (2001) performed a psychoacoustic experiment on listeners’ detectability of signal discontinuities in concatenative speech synthesis. Their results indicated that a symmetrical KL distance between FFT-based power spectra and the Euclidean distance between MFCCs have the highest prediction rates. Klabbers et al. (2000) likewise found the KL distance to be the best predictor of audible discontinuities.
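The asymmetry of the KL divergence, and one common way of symmetrizing it, can be illustrated with a short Python sketch. The symmetrization shown here (the sum of the two directed divergences) is a standard textbook form and is an assumption; it is not necessarily the exact form or normalization used in the studies cited above. The function names are illustrative.

```python
import numpy as np

def normalize(spectrum):
    """Scale a strictly positive sequence so that it sums to one."""
    s = np.asarray(spectrum, dtype=float)
    return s / s.sum()

def kl_divergence(p, q):
    """Directed KL divergence between two strictly positive distributions."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum(p * np.log(p / q)))

def symmetric_kl(p, q):
    """Sum of both directed divergences; unchanged if p and q are swapped."""
    return kl_divergence(p, q) + kl_divergence(q, p)
```

For `p = [0.5, 0.5]` and `q = [0.9, 0.1]`, the two directed divergences differ, while `symmetric_kl(p, q)` and `symmetric_kl(q, p)` agree.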

Distance measures have many applications in speech technology. In speech coding, they are applied as objective measures of speech quality and in the design of vector quantization algorithms. In unit selection synthesis, an objective distance measure that is able to predict audible discontinuities is very important (Stylianou and Syrdal, 2001). The concatenation cost should therefore be built on the distance measures that are best able to predict audible discontinuities. Higher concatenation costs are assigned to units that are predicted to produce audible discontinuities when concatenated, and thus they are less likely to be selected.

The most widely used distance in speech recognition is the Euclidean distance between MFCCs. Inspired by speech recognition methods, some unit selection algorithms for speech synthesis also use the Euclidean distance between MFCCs (Stylianou and Syrdal, 2001).

2.9.1 Simple Distance Measures

2.9.1.1 Absolute Distance

The simple absolute distance is calculated as the sum of the absolute magnitude differences between the individual features of the two feature vectors, as shown in Equation (2.4):

$$ D_{abs}(X, Y) = \sum_{i=1}^{N} \left| X_i - Y_i \right| \tag{2.4} $$
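As a small worked instance of Equation (2.4), the following Python sketch computes the absolute distance between two feature vectors. The function name is illustrative.

```python
import numpy as np

def absolute_distance(x, y):
    """Sum of absolute feature-wise differences, as in Equation (2.4)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.sum(np.abs(x - y)))
```

For X = (1, 2, 3) and Y = (2, 0, 3), the feature-wise differences are 1, 2 and 0, giving a distance of 3.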


2.9.1.2 Euclidean Distance

The Euclidean distance between two feature vectors, X and Y, is calculated as shown in Equation (2.5). However, the Euclidean distance does not take into account the variances or covariances of the distribution of the feature vectors (Vepa and King, 2004).

$$ D_{Eu}(X, Y) = \sqrt{\sum_{i=1}^{n} \left( X_i - Y_i \right)^2} \tag{2.5} $$

2.9.2 Statistically Motivated Distance Measures

Well-known distance measures from statistics include the Mahalanobis distance and the Kullback-Leibler divergence (Vepa and King, 2004).

2.9.2.1 Mahalanobis Distance

The Mahalanobis distance is a generalization of the standardized (Euclidean) distance in that it takes account of the variance or covariance of individual features (Vepa and King, 2004). Donovan (2001) used this distance measure in a concatenation cost function. The Mahalanobis distance requires the estimation of covariance matrices. The off-diagonal elements of the covariance matrix are assumed to be zero, which saves computational cost and storage. Results showed that this diagonal covariance assumption was reasonable, since using full covariance matrices did not improve performance over using diagonal matrices. Equation (2.6) shows the Mahalanobis distance for two feature vectors, X and Y, with a diagonal covariance matrix:

$$ D_{Ma}(X, Y)^2 = \sum_{i=1}^{n} \left[ \frac{X_i - Y_i}{\sigma_i} \right]^2 \tag{2.6} $$


where $\sigma_i$ is the standard deviation of the i-th feature of the feature vectors (i.e., $\sigma_i^2$ is the i-th diagonal entry of the covariance matrix).
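The relationship between Equations (2.5) and (2.6) can be seen in a short Python sketch: with a diagonal covariance matrix, the squared Mahalanobis distance is the squared Euclidean distance after each feature difference has been divided by its standard deviation. The function names are illustrative, and estimating the standard deviations from a matrix of frames with `feature_std` is an assumption about how they might be obtained.

```python
import numpy as np

def euclidean_distance(x, y):
    """Euclidean distance between two feature vectors, Equation (2.5)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.sqrt(np.sum((x - y) ** 2)))

def mahalanobis_sq_diag(x, y, sigma):
    """Squared Mahalanobis distance with diagonal covariance, Equation (2.6).

    sigma holds the per-feature standard deviations.
    """
    x, y, sigma = (np.asarray(a, dtype=float) for a in (x, y, sigma))
    return float(np.sum(((x - y) / sigma) ** 2))

def feature_std(frames):
    """Per-feature standard deviation over rows of shape (frames, features)."""
    return np.std(np.asarray(frames, dtype=float), axis=0)
```

When every standard deviation equals one, `mahalanobis_sq_diag` reduces to the square of `euclidean_distance`, which shows that the Euclidean distance ignores differences in feature variance.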

2.9.2.2 Kullback–Leibler (KL) Distance

The KL distance originates from statistics and is asymmetrical. It is also known as the divergence, discrimination or relative entropy in information theory. It is used to quantify differences between two probability distributions or densities. The KL distance can also be employed to quantify differences in the shape of strictly positive sequences (or functions) whose sum (or integral) equals one. In concatenative speech synthesis, it has been used to quantify the differences between spectral envelopes at concatenation points (Veldhuis, 2002). The spectral envelopes at the boundary are viewed as probability distributions, as shown in Equation (2.7) (Zhao et al., 2006). In other words, the distance is computed to quantify the degree of similarity between two feature vectors, P and Q (Kirkpatrick and O'Brien, 2006).