

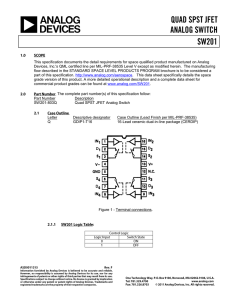

General-Purpose Code Acceleration with Limited-Precision Analog Computation

Renée St. Amant, Amir Yazdanbakhsh, Jongse Park, Bradley Thwaites, Hadi Esmaeilzadeh, Arjang Hassibi, Luis Ceze, Doug Burger

Alternative Computing Technologies (ACT) Lab, Georgia Institute of Technology
Georgia Institute of Technology, The University of Texas at Austin, University of Washington, Microsoft Research

ISCA 2014

Slide 2
[Figure: system block diagram. Labels: Analog Domain; Digital Domain; Input and Output (Display, Communication, Sensing); Processing (CPU, GPU, DSP Unit, Analog Accelerator); Storage (Memory); ADC and DAC converters.]

Slide 3
How to use analog circuits for accelerating programs written in conventional languages?
1) Neural transformation [Esmaeilzadeh et al., MICRO 2012]
2) Analog neurons
3) Compiler-circuit co-design

Slides 4-6: Challenges
- Analog circuits are mainly single function
- Instruction control cannot be analog
- Storing intermediate results in the analog domain is not effective
- Analog circuits have limited operational range
Design principles:
1) Neural transformation
2) Analog neurons
3) Compiler-circuit co-design

Slide 7
1st Design Principle: Neural Transformation

Slide 8: Neural Transformation
[Figure: neural transformation. Label: Analog Neural Network.]
Esmaeilzadeh, Sampson, Ceze, Burger, "Neural Acceleration for General-Purpose Approximate Programs," MICRO 2012.
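To make the neural transformation idea concrete, here is a minimal sketch. It is not the authors' compiler flow. A hypothetical pure function `target` stands in for an approximable code region, and a tiny 1→4→1 multilayer perceptron (the hypothetical class `TinyMLP`) is trained on sampled input/output pairs so that calls to the function could be replaced by a network invocation. Plain stochastic backpropagation, the fixed seed, the learning rate, and the topology are all assumptions chosen for brevity.

```python
import math
import random


def target(x):
    """Hypothetical approximable code region: a pure function on [0, 1]."""
    return 0.5 * (math.sin(3.0 * x) + 1.0)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """1 -> hidden -> 1 multilayer perceptron with sigmoid neurons."""

    def __init__(self, hidden, rng):
        self.w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
        self.b1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
        self.w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
        self.b2 = rng.uniform(-1.0, 1.0)

    def forward(self, x):
        h = [sigmoid(w * x + b) for w, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x, t, lr):
        """One stochastic gradient step on the loss 0.5 * (y - t)**2."""
        h, y = self.forward(x)
        dy = (y - t) * y * (1.0 - y)  # gradient at the output pre-activation
        for j, hj in enumerate(h):
            # Hidden-layer gradient uses w2[j] before it is updated below.
            dh = dy * self.w2[j] * hj * (1.0 - hj)
            self.w2[j] -= lr * dy * hj
            self.w1[j] -= lr * dh * x
            self.b1[j] -= lr * dh
        self.b2 -= lr * dy


def mse(net, xs):
    return sum((net.forward(x)[1] - target(x)) ** 2 for x in xs) / len(xs)


rng = random.Random(0)
samples = [i / 49.0 for i in range(50)]  # observed inputs (stand-in for profiling)
net = TinyMLP(hidden=4, rng=rng)
before = mse(net, samples)
for _ in range(500):  # training on the collected input/output pairs
    for x in samples:
        net.train_step(x, target(x), lr=0.5)
after = mse(net, samples)


def approx_target(x):
    """What the transformed program would invoke instead of target(x)."""
    return net.forward(x)[1]


print(f"MSE before training: {before:.4f}, after: {after:.4f}")
```

The transformed program trades exactness for a fixed, regular computation (multiply-adds and sigmoids), which is what makes the region a candidate for a neural accelerator.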
Slide 9: A-NPU acceleration
[Figure: Source Codes (Code1, Code2, Code3, Code4, Code5, Code6, …); Common Intermediate Representation; Neural Representation; Acceleration (CPU, A-NPU).]

Slide 10
2nd Design Principle: Analog Neurons

Slide 11: Analog Neurons for Accelerated Computation
(a) Neuron with inputs x_0 … x_n and weights w_0 … w_n:
$y = \mathrm{sigmoid}\left(\sum_i x_i w_i\right)$
(b) Analog neuron [Figure labels: inputs x_0 … x_n; DACs producing I(x_0) … I(x_n); R(w_0) … R(w_n); V to I; sum of I(x_i) R(w_i); ADC]:
$y \approx \mathrm{sigmoid}\left(\sum_i I(x_i) R(w_i)\right)$

Slide 12: Mixed-signal A-NPU
[Figure: Config FIFO; Input FIFO; Row Selector; Column Selector; 8-wide analog neurons, each with a Weight Buffer; Output FIFO.]

Slide 13
[Figure: analog neuron circuit. Labels: sign bits s_{w_0}, s_{x_0}, …, s_{w_n}, s_{x_n}, s_y; inputs x_0 … x_n and weights w_0 … w_n; current-steering DACs producing I(|x_0|) … I(|x_n|); resistor ladders R(|w_0|) … R(|w_n|) producing V(|w_0 x_0|) … V(|w_n x_n|); differential pairs producing I^+(w_i x_i) and I^-(w_i x_i); summed outputs V^+(\sum w_i x_i) and V^-(\sum w_i x_i); differential amplifier; flash ADC.]
$y \approx \mathrm{sigmoid}\left(V\left(\sum_i w_i x_i\right)\right)$

Slide 14: Limitations of Analog Neuron
- Limited range of operation (e.g., 600 mV)
- Margins for noise resiliency (2-3 mV)
- Limited bit-width
- Topology restriction
- Circuit non-idealities (e.g., sigmoid)

Slide 15
3rd Design Principle: Compiler-Circuit Co-design

Slide 16: Digital Compilation Workflow
[Figure: workflow in three stages. Programming: Programmer, Source Code, Source Code + Annotations. Compilation (Profiling, Training, Code Generation): D-NPU Compiler + Training Algorithm, Accelerator Config, Instrumented Binary. Execution: CORE. Also labeled: Limited Bit-Width, Topology Restriction, Circuit Non-idealities.]

Slide 17: Analog Compilation Workflow
[Figure: workflow in three stages. Programming: Programmer, Source Code, Source Code + Annotations. Compilation (Profiling, Training, Code Generation): A-NPU Compiler + Customized Training Algorithm, Accelerator Config, Instrumented Binary. Execution: CORE. Also labeled: Limited Bit-Width, Topology Restriction, Circuit Non-idealities.]

Slide 18: (1) Training with Limited Bit-width
- Train a fully-precise neural network
- Input the training data to the discretized neural network
- Calculate the output error from the limited-precision neural network
- Back propagate the error through the fully-precise neural network
[Figure labels: Limited-Precision Network; Fully-Precise Network.]
Continuous-Discrete Learning Method (CDLM), E. Fiesler, 1990

Slide 19: (2) Training with topology restrictions and non-idealities
[Figure: ANU with inputs x_{j,0} … x_{j,7}, weights w_{j,0} … w_{j,7}, and output y_j.]
1) Robust to the topology restrictions
2) Tolerate a more shallow sigmoid activation steepness over all applications
Resilient Back Propagation (RPROP), M. Riedmiller, 1993

Slide 20: Measurements
Application domains: Signal Processing, Robotics, 3D Gaming, Financial Analysis, Compression, Machine Learning, Image Processing
Analog: A-NPU with 8 Analog Neurons
• Transistor-Level HSPICE Simulation
• Predictive Technology Models (PTM), 45 nm
• Vdd: 1.2 V, f: 1.1 GHz
Digital Components
• Power Models: McPAT, CACTI, and Verilog
Processor Simulator
• Marssx86 Cycle-Accurate Simulation
• Intel Nehalem-like 4-wide/5-issue OoO processor
• Technology: 45 nm, Vdd: 0.9 V, f: 3.4 GHz

Slide 21: Speedup
[Figure: bar chart of application speedup for the 8-bit Digital NPU and the Analog NPU over blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value labels: 10.9, 24.5, 14.1, 3.7, 2.5.]
- Ranges from 0.8× to 24.5× with Analog NPU
- 1.2× increase in application speedup with Analog over Digital NPU

Slide 22: Energy Savings
[Figure: bar chart of application energy reduction for the 8-bit Digital NPU and the Analog NPU over blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value labels: 30.0, 51.2, 17.8, 42.5, 6.3, 25.8, 5.1.]
- Energy saving with Analog NPU is very close to the ideal case (6.5×)

Slide 23: Application quality loss
[Figure: bar chart of application-level error (0% to 100%) for the 8-bit Digital NPU and the Analog NPU over blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value label: 2.8%.]
- Quality loss is below 10% in all cases but one
- Based on application-specific quality metric

Slide 24: What is left?
[Figure labels: 3%, 46%, Energy Reduction, Speedup.]
We cannot reduce the energy of the computation much more.

Slide 25
3.7× (speedup) × 6.3× (energy reduction) ≈ 23× (energy-delay product)
Quality Degradation: Avg. 8.2%, Max. 19.7%
[Figure: circuit sketches with labels I_0, I_1, I_2, V_o, I(x_n), R(w_n).]
- Kirchhoff's Law: $I_{out} = I_0 + I_1 + I_2$
- Ohm's Law: $V_o = I(x_n) \cdot R(w_n)$
- Saturation Property of Transistors

Slide 26: It is still the beginning...
1) Broad applicability of the analog computation
2) Prototyping and integrating A-NPU within noisy high-performance processors
3) Reasoning about the acceptable level of error at the programming level
[Figure: CPU and A-NPU; analog neuron with inputs x_0 … x_n, DACs producing I(x_0) … I(x_n), R(w_0) … R(w_n), V to I, sum of I(x_i) R(w_i), ADC.]
$y \approx \mathrm{sigmoid}\left(\sum_i I(x_i) R(w_i)\right)$

Slide 27
Backup Slides

Slide 28: Area Breakdown

| Design | Sub-circuit | Area |
| --- | --- | --- |
| A-NPU | 8x8-bit DAC | 3,096 T |
| A-NPU | 8xResistor Ladder (8-bit weights) | 4,096 T + 1 KΩ (≈ 450 T) |
| A-NPU | 8xDifferential Pair | 48 T |
| A-NPU | I-to-V Resistors | 20 KΩ (≈ 30 T) |
| A-NPU | Differential Amplifier | 244 T |
| A-NPU | 8-bit ADC | 2,550 T + 1 KΩ (≈ 450 T) |
| A-NPU | Total | ≈ 10,964 T |
| D-NPU | 8x8-bit multiply-adds | ≈ 56,000 T |
| D-NPU | 8-bit Sigmoid lookup table | 16,456 T |
| D-NPU | Total | ≈ 72,456 T |

6.6× fewer transistors in the analog neuron implementation

Slide 29: Power Breakdown

| A-NPU sub-circuit | Percentage of total power |
| --- | --- |
| SRAM accesses | 13% |
| DAC, Resistor Ladder, Diff Pair, Sum | 54% |
| Sigmoid, ADC | 33% |

Power numbers vary with applications

Slide 30: Applications

| Domain | Benchmark | x86 instructions | Dynamic instructions | Topology | Error |
| --- | --- | --- | --- | --- | --- |
| Signal Processing | fft | 34 | 67.4% | 1➙4➙4➙2 | 4.1% |
| Robotics | inversek2j | 100 | 95.9% | 2➙8➙2 | 9.4% |
| 3D Gaming | jmeint | 1,079 | 95.1% | 18➙32➙8➙2 | 19.7% |
| Financial | blackscholes | 309 | 97.2% | 6➙8➙8➙1 | 10.2% |
| Compression | jpeg | 1,257 | 56.3% | 64➙16➙8➙64 | 8.4% |
| Machine Learning | kmeans | 26 | 29.7% | 6➙8➙4➙1 | 7.3% |
| Image Processing | sobel | 88 | 57.1% | 9➙8➙1 | 5.2% |

Slide 31: Speedup with A-NPU over 8-bit D-NPU
[Figure: bar chart of speedup over the 8-bit D-NPU for blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value label: 15.2×.]
- 3.3× geometric mean speedup
- Ranges from 1.8× to 15.2×

Slide 32: Energy savings over 8-bit D-NPU
[Figure: bar chart of energy savings with A-NPU over the 8-bit D-NPU for blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value labels: 28.2×, 82.2×.]
- 12.1× geometric mean energy savings
- Ranges from 3.7× to 82.2×

Slide 33: Dynamic Instruction Reduction
[Figure: bar chart of the percentage of instructions subsumed (0% to 100%) for blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value label: 66.4%.]

Slide 34: Speedup with A-NPU acceleration
[Figure: bar chart of speedup over all-CPU execution for blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value label: 24.5×.]
- 3.7× geometric mean speedup
- Ranges from 0.8× to 24.5×

Slide 35: Energy savings with A-NPU acceleration
[Figure: bar chart of energy savings over all-CPU execution for blackscholes, fft, inversek2j, jmeint, jpeg, kmeans, sobel, and geomean. Value labels: 51.2×, 30.0×, 17.8×.]
- 6.3× geometric mean energy reduction
- All benchmarks benefit
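Supplementary sketch for the "Analog Neurons for Accelerated Computation" slide: the relation y ≈ sigmoid(Σ I(x_i) R(w_i)) can be mimicked numerically by rounding inputs, weights, and the output to a limited number of levels. This is a behavioral model under loud assumptions, not the circuit from the talk: values are normalized to [-1, 1], the DAC, resistor ladder, and ADC are modeled as ideal uniform 8-bit quantizers, the sigmoid is ideal, and there is no noise. The names `quantize`, `ideal_neuron`, and `limited_precision_neuron` are hypothetical.

```python
import math


def quantize(value, bits, lo, hi):
    """Round value to the nearest of 2**bits uniformly spaced levels in [lo, hi]."""
    levels = (1 << bits) - 1
    clipped = min(max(value, lo), hi)
    step = (hi - lo) / levels
    return lo + round((clipped - lo) / step) * step


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def ideal_neuron(xs, ws):
    """Fully precise neuron: y = sigmoid(sum(x_i * w_i))."""
    return sigmoid(sum(x * w for x, w in zip(xs, ws)))


def limited_precision_neuron(xs, ws, bits=8):
    """Behavioral stand-in for the analog neuron (all ranges are assumptions)."""
    # DAC stage: each input is mapped to one of 2**bits levels, like I(x_i).
    xq = [quantize(x, bits, -1.0, 1.0) for x in xs]
    # Weight stage: each weight selects one of 2**bits levels, like R(w_i).
    wq = [quantize(w, bits, -1.0, 1.0) for w in ws]
    # Summation stage: the per-input products are added together.
    total = sum(x * w for x, w in zip(xq, wq))
    # ADC stage: the sigmoid output is digitized to 2**bits levels.
    return quantize(sigmoid(total), bits, 0.0, 1.0)


# Example with an 8-input neuron, matching the 8-wide neurons in the talk.
xs = [0.5, -0.25, 0.8, 0.1, -0.6, 0.3, 0.0, -0.9]
ws = [0.7, 0.2, -0.4, 0.9, 0.5, -0.1, 0.6, 0.3]
y_ideal = ideal_neuron(xs, ws)
y_8bit = limited_precision_neuron(xs, ws, bits=8)
print(f"ideal: {y_ideal:.5f}  8-bit model: {y_8bit:.5f}")
```

Under these assumptions the rounding error of an 8-input, 8-bit neuron stays below roughly 0.02 in the output. Real circuits add noise and a non-ideal sigmoid on top of this, which the talk handles through the customized training step.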