CLASSIFICATION OF BREAST CANCER MICROARRAY DATA USING RADIAL BASIS FUNCTION NETWORK

advertisement

CLASSIFICATION OF BREAST CANCER MICROARRAY DATA USING

RADIAL BASIS FUNCTION NETWORK

UMI HANIM BINTI MAZLAN

UNIVERSITI TEKNOLOGI MALAYSIA

CLASSIFICATION OF BREAST CANCER MICROARRAY DATA USING

RADIAL BASIS FUNCTION NETWORK

UMI HANIM BINTI MAZLAN

A project report submitted in partial fulfillment of the

requirements for the award of the degree of

Master of Science (Computer Science)

Faculty of Computer Science and Information Systems

Universiti Teknologi Malaysia

October 2009

iii

To my beloved dad, my lovely mum and my precious siblings

iv

ACKNOWLEDGMENT

In the name of Allah, Most Gracious, Most Merciful

Praise to The Almighty, Allah S.W.T for giving me the strength and passion

towards completing this research throughout the semester. By His willing and bless, I

had managed to complete my work within the period given.

First of all, my thanks go to Dr. Puteh Bt Saad, who responsible as my Master

Project’s supervisor for her guidance, counsels, motivation, and most importantly for

sharing her thoughts with me.

My deepest thanks go to my lovely family for their endless prayers, love and

support. Their moral supports that always right by my side had been the inspiration

along the journey and highly appreciated.

I would also like to extend my heartfelt appreciation to all my friends for

their valuable assistance and friendship.

May Allah S.W.T repay all their sacrifices and deeds with His Mercy and Bless.

v

ABSTRACT

Breast cancer is the number one killer disease among women worldwide.

Although this disease may affect women and men but the rate of incidence and the

number of death is high among women compared to men. Early detection of breast

cancer will help to increase the chance of survival since the early treatment can be

decided for the patients who suffer this disease. The advent of the microarray

technology has been applied to the medical area in term of classification of cancer

and diseases. By using the microarray, thousands of genes expression can be

determined simultaneously. However, this microarray suffers several drawbacks such

as high dimensionality and contains irrelevant genes. Therefore, various techniques

of feature selection have been developed in order to reduce the dimensionality of the

microarray and also to select only the appropriate genes. For this study, the

microarray breast cancer data, which is obtained from the Centre for Computational

Intelligence will be used in the experiment. The Relief-F algorithm has been chosen

as the method of the feature selection. As the comparison, another two methods of

feature selection which are Information Gain and Chi-Square will also be used in the

experiment. The Radial Basis Function, RBF network will be used as the classifier to

distinguish between the cancerous and non-cancerous cells. The accuracy of the

classification will be evaluated by using the chosen metric namely Receiver

Operating Characteristic, ROC.

vi

ABSTRAK

Barah payudara merupkan pembunuh nombor satu di kalangan wanita di

seluruh dunia. Walaupun penyakit ini boleh menyerang wanita dan lelaki namun

kadar kejadian dan kematian adalah lebih tinggi di kalangan wanita berbanding

lelaki. Pengesanan awal barah payudara boleh membantu meningkatkan peluang

untuk hidup memandangkan rawatan awal boleh disarankan kepada pesakit yang

menghidapi penyakit ini. Kemunculan teknologi mikroarray telah diaplikasikan

dalam bidang perubatan untuk pengkelasan bagi penyakit barah. Dengan

menggunakan mikroarray, berjuta-juta pengekspresan gen boleh ditentukan secara

serentak. Walaubagaimanapun, mikroarray ini berhadapan dengan beberapa

kelemahan seperti mempunyai dimensi yang tinggi dan juga mengandungi gen-gen

yang tidak relevan. Oleh sebab itu, pelbagai teknik pemilihan fitur telah dibangunkan

bertujuan mengurangkan dimensi mikroarray dan hanya memilih gen-gen yang

bersesuaian sahaja. Bagi kajian ini, data barah payudara mikroarray yang digunakan

dalam eksperimen diperoleh dari Centre for Computational Intelligence. Algoritma

ReliefF telah dipilih sebagai teknik untuk pemilihan fitur. Sebagai perbandingan, dua

lagi teknik pemilihan fitur iaitu Information Gain dan Chi-square juga akan

digunakan dalam eksperimen. Bagi membezakan di antara sel barah dan bukan

barah, rangkaian Radial Basis Function, RBF, akan digunakan sebagai pengkelas.

Ketepatan pengkelasan akan dinilai oleh metrik yang telah dipilih iaitu Receiver

Operating Characteristic, ROC.

vii

TABLE OF CONTENTS

CHAPTER

1

TITLE

PAGE

DECLARATION

ii

DEDICATION

iii

ACKNOWLEDGEMENTS

iv

ABSTRACT

v

ABSTRAK

vi

TABLE OF CONTENTS

vii

LIST OF TABLES

xi

LIST OF FIGURES

xii

LIST OF ABBREVIATIONS

xiv

LIST OF APPENDICES

xv

INTRODUCTION

1.1

Introduction

1

1.2

Problem Background

3

1.3

Problem Statement

4

1.4

Objectives of the Projects

5

1.5

Scopes of the Projects

5

1.6

Importance of the Study

6

1.7

Summary

7

viii

2

LITERATURE REVIEW

2.1

Introduction

8

2.2

Breast cancer

9

2.2.1 What is Breast Cancer

9

2.2.2 Types of Breast Cancer

9

2.2.2.1 Non-invasive Breast Cancer

10

2.2.2.2 Invasive Breast Cancer

11

2.2.3 Screening for Breast Cancer

12

2.2.4 Diagnosis of Breast Cancer

13

2.2.4.1 Biopsy

2.3

2.4

Microarray Technology

15

2.3.1 Definition

15

2.3.2 Applications of Microarray

15

2.3.2.1 Gene Expression Profiling

17

2.3.2.2 Data Analysis

19

Feature Selection

20

2.4.1 Relief

24

2.4.2 Information Gain

25

2.4.3 Chi-Square

26

Radial Basis Function

27

2.5.1 Types of Radial Basis Function, RBF

27

2.5.2 Radial Basis Function, RBF, network

30

Classification

34

2.6.1 Comparison with others Classifiers

35

2.7

Receiver Operating Characteristic, ROC

37

2.8

Summary

38

2.5

2.6

3

14

METHODOLOGY

3.1

Introduction

39

3.2

Research Framework

40

3.3

Software Requirement

41

3.4

Data Source

41

3.5

HykGene

41

ix

3.5.1 Gene Ranking

3.5.1.1 ReliefF Algorithm

44

3.5.1.2 Information Gain

47

2

3.5.1.3 χ -statistic

3.5.2 Classification

3.6

4

47

50

3.5.2.1 k-nearest neigbor

50

3.5.2.2 Support vector machine

51

3.5.2.3 C4.5 decision tree

51

3.5.2.4 Naive Bayes

52

Classification using Radial Basis Function

Network

52

3.7

Evaluation of the Classifier

54

3.8

Summary

56

DESIGN AND IMPLEMENTATION

4.1

Introduction

57

4.2

Data Acquisition

58

4.2.1 Data format

61

Experiments

63

4.3.1 Genes Ranked

63

4.3

4.3.1.1 Experimental Settings

4.3.2 Classifications of top-ranked genes

4.3.2.1 Experimental Settings

4.3.3 Evaluation of Classifiers Performances

4.3.3.1 Experimental Settings

4.4

5

43

Summary

63

64

64

65

65

67

EXPERIMENTAL RESULTS ANALYSIS

5.1

Introduction

68

5.2

Results Discussion

69

5.2.1 Analysis Results of Features Selection

Techniques

5.2.2 Analysis Results of Classifications

69

72

x

5.2.3 Analysis Results of Classifier Performances 74

5.3

6

Summary

77

DISCUSSIONS AND CONCLUSION

6.1

Introduction

79

6.2

Overview

79

6.3

Achievements and Contributions

81

6.4

Research Problem

82

6.5

Suggestion to Enhance the Research

82

6.6

Conclusion

83

REFERENCES

85

APPENDIX A

95

APPENDIX B

99

xi

LIST OF TABLES

TABLE NO

2.1

TITLE

Previous researches using microarray

PAGE

16

technology

2.2

A taxonomy of feature selection techniques

22

2.3

Previous researches on classification using RBF

35

network

4.1

EST-contigs, GenBank accession number and

59

oligonucleotide probe sequence

5.1

Results on breast cancer dataset using Relief-F

69

5.2

Results on breast cancer using Information Gain

69

5.3

Results on breast cancer using χ2-statistic

70

5.4

Results on classification using different

72

classifiers

5.5

TPR and FPR of classifiers

75

5.6

ROC Area of Classifiers

77

xii

LIST OF FIGURES

FIGURE NO

1.1

TITLE

PAGE

Ten most frequent cancer in females, Peninsular

3

Malaysia 2003-2005

2.1

Ductal Carcinoma In-situ, DCIS

10

2.2

The cancer cells spread outside the duct and

11

invade nearby breast tissue

2.3

Gene Expression Profiling

18

2.4

Feature selection process

21

2.5

Gaussian RBF

28

2.6

Multiquadric RBF

29

2.7

Gaussian(green), Multiwuadric (magenta),

30

Inverse-multiquadric (red) and Cauchy (cyan)

RBF

2.8

The traditional radial basis function network

32

2.9

The ROC curve

38

3.1

Research Framework

40

3.2

HykGene: a hybrid system for marker gene

42

selection

3.3

The expanded framework of HykGene in Step1

44

3.4

Original Relief Algorithm

45

3.5

Relief-F algorithm

46

xiii

3.6

χ²-statistic algorithm

49

3.7

The expanded framework of HykGene in Step 4

50

3.8

Radial Basis Function Network architecture

53

3.9

AUC algorithm

55

4.1

The data matrix

58

4.2

Fifteenth of the instances of breast cancer

60

microarray dataset

4.3

Microarray Image

61

4.4

Header of the ARFF file

62

4.5

Data of the ARFF file

62

4.6

ROC Space

66

4.7

Framework to plotting multiple ROC curve

67

using Weka

5.1

Graph on cross validation classification

70

accuracy using 50-top ranked genes

5.2

Graph on cross validation classification

71

accuracy using 100-top ranked genes

5.3

Percentage of Correctly Classified Instances

73

using 50 top-ranked genes

5.4

Percentage of Correctly Classified Instances

73

using 100 top-ranked genes

5.5

ROC Space of 50 top-ranked genes

75

5.6

ROC Space of 100 top-ranked genes

75

5.7

ROC Curve of 50 top-ranked genes

76

5.8

ROC Curve of 100 top-ranked genes

76

xiv

LIST OF ABBREVIATIONS

AUC

-

Area Under Curve

ARFF

-

Artificial Relation File Format

cDNA

-

Complementary Deoxyribonucleic Acid

cRNA

-

Complementary Ribonucleic Acid

DNA

-

Deoxyribonucleic Acid

EST

-

Expressed Sequence Tag

FPR

-

False Positive Rate

k-NN

-

k-nearest neighbor

MLP

-

Multilayer Perceptron

mRNA

-

Messenger Ribonucleic Acid

NB

-

Naive Bayes

RBF

-

Radial Basis Function

RNA

-

Ribonucleic Acid

ROC

-

Receiver Operating Characteristics

SOM

-

Self Organising Maps

SVM

-

Support Vector Machine

TPR

-

True Positive Rate

xv

LIST OF APPENDICES

APPENDIX

TITLE

PAGE

A

Project 1 Gantt Chart

95

B

Project 2 Gantt Chart

99

CHAPTER 1

INTRODUCTION

1.1

Introduction

Cancer or known as malignant neoplasm in medical term is the number one

killer diseases in most of the countries worldwide. The number of deaths and people

suffering with cancer are increasing year by year. This number will continue to

increase with an approximate 12 million deaths in 2030 (Farzana Kabir Ahmad,

2008). This killer disease may affect people at all ages even fetuses and also the

animals but the risk increases with age. According to the National Cancer Institute,

cancer is defined as a disease causes by uncontrolled division of abnormal cells.

Cancer cells are capable to invade other tissues and can spread through the blood and

lymph systems to other parts of the body.

There are various factors that can cause cancer namely mutation, viral or

bacterial infection, hormonal imbalances and others. Mutation can be classified into

two categories which are chemical carcinogens and ionizing radiation. Carcinogens

are the mutagen, substances that cause DNA mutations, which cause cancer. Tobacco

2

smoking which is accounted for 90 percent of lung cancer (Biesalski HK, Bueno de

Mesquita B, Chesson A, et al., 1998) and prolonged exposure asbestos are the

examples of the substances (O'Reilly KM, Mclaughlin AM, Beckett WS, Sime PJ,

2007).

Radon gas which can cause cancer while prolonged exposure to ultraviolet

radiation from the sun that can lead to melanoma and other skin malignancies

(English DR, Armstrong BK, Kricker A, Fleming C,1997) are the sources of the

ionizing radiation. Based on the experiment and epidemiological data, it shows that

viruses become the second most important risk factor of the cancer development in

human (zur Hausen H, 1991). Hormonal Imbalances can lead to cancer because

some hormones are able to behave similarly like the non-mutagenic carcinogens.

This condition may stimulate the uncontrolled cell growth.

Cancer can be classified into various types with various names which are

mostly named based on the part of the body where the cancer originates (American

Cancer Society). Lung cancer, breast cancer, ovary cancer, colon cancer are several

examples of the common cancer types. Breast cancer is the disease which fears every

woman since it is the number one killer cancer for woman. Breast cancer remains a

major health burden since it is a major cause of cancer-related morbidity and

mortality among female worldwide (Bray, D., and Parkin, 2004).

It has been reported that, each year, more than one million new cases of

female breast cancer are diagnosed, worldwide (Ferlay J, Bray F, Pisani P, Parkin

DM, 2001). In United States of America, an estimated 192,370 new cases of invasive

breast cancer and an estimated 40,610 breast cancer death are expected in 2009

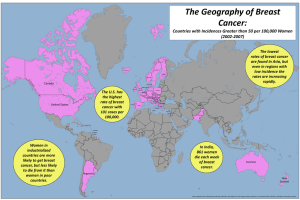

(American Cancer Society, 2009). The following figure shows the percentage of ten

most cancers in female in Malaysia from 2003 to 2005. From the figure, significant

shows that breast cancer hold the highest percentage among all the cancers in female.

3

As discussed in this part, it is proven that breast cancer is one of the main

health problems. A lot of researches done to study the best way to prevent, detect and

treat the breast cancer in order to reduce the number of deaths. This research also

study about detection of breast cancer using the microarray data. The microarray data

of the breast cancer will be classified to determine whether it is benign (noncancerous) or malignant (cancerous) using Radial Basis Function, RBF, after doing

the feature selection.

Figure 1.1: Ten most frequent cancer in females, Peninsular Malaysia 2003-2005

(Lim, G.C.C., Rampal, S., and Yahaya, H., 2008)

1.2

Problem Background

This project will use the microarray data of breast cancer. According to

Tinker et al., 2006, microarray has become a standard tool in many genomic research

laboratories because it has revolutionized the approach of biology research. Now,

scientist can study thousand of genes at once instead of working on a single gene

4

basis. However, microarray data are often overwhelmed, over fitting and confused by

the complexity of data analysis. Using this overwhelmed data during classification

will lead to the increase of the dimensionality of the classification problem presents

computational difficulties and introduces unnecessary noise to the process.

Other than that, due to improper scanning, it also contains multiple missing

gene expression values. In addition, mislabeled data or questioned tissues result by

experts also the drawback of microarray that decreases the accuracy of the results

(Furey et al., 2000). However, feature selection can be utilized to filter the

unnecessary genes before the classification stage is implemented. Based on Farzana

Kabir Ahmad, 2008, (cited from Ben-Dor et al., 2000; Guyon et al., 2002; Li, Y. et

al., 2002; Tago & Hanai, 2003 ) in many large scale of gene expression data analysis,

feature selection has become a prior step and a pre-requisite. The high reliance of

this gene selection technique is due to its important purpose that requires small sets

of genes in order to informatively sufficient to differentiate between cancerous and

non-cancerous cells.

1.3

Problem Statement

The main issue in the classification of microarray data is the presence of

noise and irrelevant genes. According to Liu and Iba, 2002, many genes are not

relevant to differentiate between classes and caused noise in the process of

classification. Hence, this will lead to the drowning out of the relevant ones Shen et

al., 2007. Therefore, the prior step before doing the classification which is feature

selection is important in order to reduce the huge dimension of microarray data.

5

1.4

Objectives of the Projects

The aim of the project is to classify a selected significant genes from

microarray breast cancer data. In order to achieve the aim, the objectives are as

follows:

i. To select significant features from microarray data using a suitable

feature selection technique.

ii. To classify the selected features into non-cancerous and cancerous using

Radial Basis Function network.

iii. To evaluate the performance of the classifier using Receiver Operating

Characteristic, ROC.

1.5

Scopes of the Project

This project will use a breast cancer microarray data which is obtained from

Centre for Computational Intelligence. This data originates from the Zhu, Z., Ong,

Y.S., and Dash, M., (2007). The dataset contains of 97 instances with 24481 genes

and 2 classes.

6

1.6

Importance of the Study

Early detection of breast cancer can help to avoid the cancer from getting

worse and eventually leads to the decreasing of the rate of death. Mammogram is one

of the tools that can detect early stage of breast cancer. However, biopsy is needed in

order to confirm the present of the malignant (cancerous) tissue that cannot be

performed by the mammogram. From this biopsy process the microarray data will be

obtained.

As discussed in the problem background there are several drawbacks of

microarray data. However, it can be solved by implementing the feature selection

before it can proceed to the classification. In this study, several feature selection will

be studied before choosing the most suitable method. Feature selection is very

important since it will determine the accuracy of the result after the classification.

The accuracy of the result is also important because it will classify between

the cancerous and non-cancerous. Since the purpose of biopsy is to confirm the

presence of the cancer cells after the early detection, the confirmation must not take a

long time. The best feature selection method must be used before classifying the

microarray data in order to reduce the time and produce the accurate result.

Through this project, the best feature selection method will be selected and

implemented to microarray data before it will be classified. The performance of the

classifiers will be evaluated based on the suitable metric. The result of this study will

be discussed in order to give the information for the future research in breast cancer

area.

7

1.7

Summary

As a conclusion, this chapter discusses the overview of the project. This

project will classify the breast cancer microarray data using the Radial Basis

Function to two classes namely benign (non-cancerous) and malignant (cancerous).

Feature selections will be studied and the best method will be chosen to implement

the microarray data before the classification.

CHAPTER 2

LITERATURE REVIEW

2.1

Introduction

Breast cancer can affect both genders but women have higher risk compares

to men. Early detection of breast cancer can ensure a better chance to cure the

disease at the early stage. There are three types of screening tools for early detection

of breast cancer. The best tool is the screening mammogram which produces the Xrays pictures. However, the cancer cells cannot be confirmed via the mammogram

alone, so the diagnosis is required to detect the existence of cancer cells. The

microarray data obtained from the biopsy can be used to distinguish the benign

(non-cancerous) and malignant (cancerous) tissue. Because the microarray data also

contains unnecessary genes, feature selection needs to be implemented in order to

filter it before the classification can be made using Radial Basis Function (RBF)

technique.

In this chapter, five main sub topics will be discussed; Breast Cancer,

Microarray Technology, Feature Selection, Radial Basis Function technique and

9

Receiver Operating Characteristic, ROC. The first subtopic will explain about the

types, screening tools and diagnosis of breast cancer. Microarray data produces by

the biopsy will be discussed in the following subtopic. Next, feature selection

method will be discussed to determine the best method that can be implemented in

this study. Finally the classification technique followed by the evaluation of the

classifier using chosen metric will be discussed. Furthermore, several previous

researches will be briefly explained for comparison.

2.2

Breast Cancer

2.2.1

What is Breast Cancer

According to the National Breast Cancer Foundation and American Cancer

Society, breast cancer is a disease in which malignant tumor which is a group of

cancer cells, forms in the tissue or cells of the breast. The malignant tumor is able to

invade surrounding tissues or spread to further area of the body, also called as

metastasize. Woman has the higher risk of developing the cancer compares to man.

2.2.2

Types of Breast Cancer

Breast cancer can be categorized by the areas where the cancer cells begin to

develop; ducts or lobules in which the milk produced. The different types of breast

cancer can be classified into two most common groups which are non-invasive and

10

invasive breast cancer. Besides the invasive and non-invasive, there are many other

common types of breast cancer.

2.2.2.1 Non-invasive Breast Cancer

The term non-invasive or also known as in-situ in breast cancer means that

the cancer cells remain confined to ducts or lubules. This category of breast cancer

did not invade surrounding tissue of the breast and spread to others part of the body.

There are two types of breast cancers that belong to this group which are:

i)

Ductal Carcinoma In-situ, DCIS

This type of breast cancer is the early stage of breast cancer in which

the cancer cells stay in the milk duct of the breast where it started. Although

it can grow, it does not affect the surrounding breast tissue or spread to lymph

nodes and other organs.

Figure 2.1: Ductal Carcinoma In-situ, DCIS

11

ii)

Lobular Carcinoma In-situ, LCIS

LCIS tends to develop into invasive breast cancer eventhough it has

been classified as non-invasive, in which the abnormal cells begins in the

milk-producing glands at the end of breast ducts.

2.2.2.2 Invasive Breast Cancer

Invasive or infliltrating breast cancer is a contrary to the non-invasive or insitu breast cancer. Like non-invasive, this category also have two types of breast

cancer:

Figure 2.2: The cancer cells spread outside the duct and invade nearby breast

tissue

i)

Invasive Ductal Carcinoma, IDC

Invasive ductal carcinoma is the most common type of breast

cancer where it begins in the duct of the breast. The cancer cells then

12

spread through the wall of the ducts and grows into the fatty tissue of

the breast. It can spread to the lymph nodes and to other parts of the

body later on.

ii)

Invasive Lobular Carcinoma, ILC

Like the non-invasive lobular carcinoma, invasive lobular

carcinoma also begins in the milk-producing glands. However, ILC

can spread beyond the lobules (milk-producing glands) to the other

parts of the body.

2.2.3

Screening for Breast Cancer

There are three types of screening test for breast cancer that can detect the

cancer at the early stage. The three screening test are as follows (National Cancer

Institute ):

i)

Screening Mammogram

Mammogram is the screening tool that produces the breast

picture from the X-rays which can help early detection of breast

cancer. This screening tool can often shows a breast lump before it

can be felt and also a cluster of tiny specks of calcium known as

microcalcifications. Cancer, precancerous or other condition can be

formed from that lump or specks.

Although mammograms can help early detection of breast

cancer, it also has several drawbacks which are:

13

a)

False negative because the mammogram may miss some

cancers.

b)

False positive causes by the mammogram may show the result

that is not the cancer.

ii)

Clinical Breast Exam

The clinical breast exam will be done by the doctor and during

the process the differences in size or shape of patient’s breasts will be

inspected. The doctor will examine the breast skin for signs of rash,

dimpling or other abnormal signs. Other examination method is by

squeezing the nipple to check for fluid or by using the pads of the

fingers. The entire breast including underarm and collarbone area will

be checked for the existence of any lumps. Lymph nodes will also be

inspected to ensure that they are in the normal size.

iii)

Breast-self Exam

Screening test for breast cancer can be done by any individual

once a month. This breast-self exam can be carried out even while in

the shower with several simple steps to detect any changes of the

breast. However, studies have shown that the reducing number of

deaths from breast cancer is not solely caused by breast-self exam.

2.2.4

Diognosis of Breast Cancer

There are three procedures in early detection of breast cancer. If there is any

differences discovered during the screening process, diagnosis stage will be required.

Mammograms is the most effective tool to detect breast cancer at the early stage

14

(American Cancer Society) although it has a few disadvantages. Because of the

disadvantages, the follow-up test is required to confirm the present of the cancer. The

biopsy is the follow-up test that can help to diagnose the existence of the malignant

tissue (cancerous).

2.2.4.1 Biopsy

Biopsy is a minor surgery to remove fluid or tissue from the breast to confirm

the presence of the cancer. The biopsy can be done in using three techniques

(National Cancer Institute ):

i)

Fine-needle aspiration

A thin needle will be used to remove the fluid from a breast

lump. The lab test will be run if the fluid consists of cancer cells.

ii)

Core biopsy/ Needle biopsy

To remove the breast tissue, a thick needle will be used and

the cancer cells will be examined afterwards.

iii)

Surgical biopsy

A sample of tissues will be checked for cancer cells after it has

been removed through the surgery. There are two types of surgical

biopsy which are incisional biopsy where a sample of a lump or

abnormal area will be taken and excisional biopsy which takes the

entire lump or area.

15

2.3

Microarray Technology

2.3.1

Definition

Microarray technology is defined as a method to study the activity of a huge

number of genes simultaneously. A high speed robotics is used in this method to

apply tiny droplets consisting of biologically active materials such as DNA, proteins

and chemicals to glass slides precisely. Microarrays are used in Functional Genomics

DNA to monitor gene expression at RNA level. Furthermore, it is also used to

identify genetic mutations and to discover the novel regulatory area in genomics

sequences. In the protein or chemicals materials, microarrays are used to monitor

protein expression or to identify the interactions of protein-protein or drug-protein.

Normally, in a single experiment, there are ten of thousands of genes interactions can

be monitored (Compton, R., et al., 2008).

2.3.2

Applications of Microarray

The applications of microarray were first applied in the mid 90s (Schena, M.,

et al., 1995). Since that, microarray technology has been successfully applied to

almost every field of biomedical research (Alizadeh AA et al., 2000; Miki R., et al.,

2001; Chu S., et al., 1998; Whitfield M.L., et al., 2002; van de vijver MJ et al., 2002;

Boldrick JC et al., 2002). Gene expression profiling is the most common application

of microarray technology (Russell Compton et al., 2008). Others application of

microarray technology are Genotyping of Human Single nucleotide polymorphism

(SNPs), Microarrays analysis of Chromatin Immunoprecipitation (Chip-on-chip) and

Protein Arrays.

16

Microarrays opened up an entire new world to researches (Fodor, S.P. et al.,

1991; Fodor, S.P. et al., 1993; Pease, A.C. et al., 1994). To study a unique single

aspect of the host or pathogen relationship is no longer a restriction but one can

explore a genome-wide view of the complex interaction. This helps scientist to

discover and understand disease pathways to ultimately develop better methods of

detection, treatment and prevention. Microarray technology has provided an

understanding of the tumor subtypes and their attendant clinical outcomes from the

molecular phenotyping of human tumors (Abu Khalaf, M.M. et al., 2007). In the

breast cancer study, it has been reported that the invasive breast cancer can be

divided into four main subtypes through unsupervised analysis by using microarray

technology (Sorlie, T. et al., 2001). In recent studies, the subset of genes that is

identified by microarray profiling has been suggested to be a prediction of a response

to chemotherapy in breast cancer, the taxanes (Abu Khalaf, M.M. et al., 2007).

The following table shows the previous researches using microarray

technology to detect, cure, or prevent various diseases including breast cancer.

Table 2.1: Previous researches using microarray technology

Author

Disease

Keltouma Driouch et al., 2007

Breast cancer

Lance D Miller and Edison T Liu, 2007

Breast cancer

Sofia K Gruvbeger-Saal et al., 2006

Breast cancer

Argryis Tzouvelekesis et al., 2004

Pulmonary

Gustavo Palacios et al., 2007

Infectious

Christian Harwanegg and Reinhard Hiller, 2005

Allergic

17

2.3.2.1 Gene Expression Profiling

In gene expression profiling or also known as mRNA experiment, the

thousand of genes levels are monitored simultaneously to study the effects of certain

treatments, diseases and developmental stages on gene expression (Adomas A. et al.,

2008). Typically, microarrays consist of several thousand single-stranded DNA

sequences, which are “arrayed” at specific locations on a synthetic “chip” via

covalent linkage. These DNA fragments provide a matrix of probes to label the

complementary RNA (cRNA) fluorescently which is derived from the sample of

interest. Each of gene expression is quantified by the intensity of the fluorescence

that emits from the specific location on the array matrix which proportional to the

amount of the gene product (Sauter, G. and Simon, R., 2002). There are two main

types of microarrays used to evaluate the clinical samples (Ramaswamy, S. and

Golub TR, 2002):

i)

cDNA array

This type of array also known as spotted arrays is build using

annotated cDNA probes or by reverse transcription of mRNA from a known

source. The spotted arrays used to generate cDNA probes for specific gene

product that will spot on glass slide systematically (Ramaswamy, S. and

Golub TR, 2002). By using human Universal RNA Standards, the sample

testing is extracted and reverse transcribed in parallel along with test samples.

It will be labeled using fluorescent dye, Cy3, while the cRNA from test

sample will be labeled with fluorescent dye, Cy5. The hybridization of each

Cy5-labeled test sample cRNA with the Cy3-labeled reference probe to the

spotted microarrays is done simultaneously. From the hybridization, the

fluorescence intensities of the scanned images are quantified, normalized, and

corrected to produce the transcript abundance of a gene as an intensity ratio

with respect to the reference sample (Cheung VG et al., 1999).

18

ii)

Oligonucleotides microarrays

25 pair base pair DNA oligomers are used in oligonucleotides

microarrays to be printed onto the matrix of array (Lockhart DJ et al., 1996).

This array that was designed and patented by Affymetric uses combination of

photolithography and combinatorial chemistry. This allows the thousands of

probes to generate simultaneously. In this array, the Perfect Match/Mismatch

(PM/MM) probe strategy is used to avoid nonspecific hybridization. By using

that strategy, a second probe which is identical to the first except for the

mismatched probe is laid adjoin to the PM probe. A signal from the MM

probed is subtracted from the PM probe signal to indicate the nonspecific

binding.

Figure 2.3: Gene Expression Profiling

Reprinted from Sauter, G., and Simon, R. (2002)

19

2.3.2.2 Data Analysis

In gene expression profiling, there are four levels of analysis that have been

applied. The levels of analysis are as follow (Compton, R., et al., 2008):

i)

Using the statistical test to identify the differences of genes expression

between two or more samples.

ii)

By using data reduction technique to simplify the complexity of the

dataset. The cluster of similar expression profile genes is identified

across different experimental.

iii)

A statistical model such as classifier used to identify genes predictive

of samples classes (cancerous and non-cancerous) or to the expression

of interesting biomaker. The evaluation of the accuracy of the model

is really important in this statistical modeling. Holdout method is the

classic approach in which the correct class labels are known and the

data is divided into a set of training and test. The training set builds

the model while the test set provides a group of samples that the

model unseen previously. Then, it can be used to test if the model

predicts their class membership on an independent population

correctly. Gene selection is required using this approach. The gene

may be tested individually (univariate gene selection) or in association

(multivariate selection strategies).

iv)

By using the reverse engineering algorithm to infer the transcriptional

pathway structures within the cells.

20

2.4

Feature Selection

Feature selection is the process of choosing the most suitable features when

building process model (Kasabov, 2002). The main purpose of feature selection in

pattern recognition, statitistic and data mining communities (text mining,

bionformatics, sensor networks) is to select a subset of the input variables by

eliminating features that have little or no predictive information. The

comprehensibilty of the resulting classifier model can significantly be improved by

the feature selection. Feature selection often builds a model of better generalization

to unseen point (YongSeong Kim, et al., 2003). The potential benefits of feature

selection as stated by (Gianluca Bontempi and Benjamin Haiben-Kains,2008) are as

follow:

i)

Facilitate the visualization and comprehensibilty of the data.

ii)

The measurement and storage requirement can be reduced.

iii)

Reduce the times of training and utilization.

iv)

Improve the performance of prediction by defying the curve of

dimensionality.

Feature selection is important for various reasons in many supervised

learning problems namely generalization performance, running time requirements,

and constraints and interpretational issues imposed by the problem itself (J.Weston et

al., 2000). The main goal of feature selection in supervised learning is to search for a

feature subset with a higher classification accuracy (YongSeong Kim, et al., 2003).

21

Evaluation function

Feature set

Selection of

starting feature set

Goodness

Generation of next feature set

Stopping criteria

Figure 2.4: Feature selection process

There are three main approaches of feature selection which are (YongSeong

Kim, et al., 2003) :

i)

Filter methods

The filter methods are the preprocessing methods that attempt to

evaluate the merits of features from the data. The performance of the learning

algorithm which is affected by the chosen feature subset will be ignored.

ii)

Wrapper methods

The subsets of variables is evaluated based on their usefulness to a

given predictor using these methods. In order to select the best subset, its own

learning algorithm will be used as a part of the evaluation function. A

problem of stochastic state space search is identified as the problem in these

methods.

iii)

Embedded methods

These methods implement variable selection as part of the learning

procedure and often specific to given learning machines.

22

Table 2.2: A taxonomy of feature selection techniques

Search Method

Filter

Univariate

Advantages

• Fast

• Scalable

• Independent of the classifier

Disadvantages

• Ignores features

dependencies

• Ignores interaction with

the classifier

Example

• χ2

• Euclidean distance

• Information gain, Gain ratio (BenBassat,

1982)

• t-test

Mutivariate

• Model features dependecies

• Independent of the classifier

• Better than wrapper method

in complexity computation

• Slower compares to

univariate techniques

• Less scalable compares to

univariate techniques

• Ignores interaction with

the classifier

• Correlation-based feature selection, CFS

(Hall, 1999)

• Markov blanket filter, MBF (Koller and

Sahami, 1996)

• Fast correlation-based feature selection,

FCBF (Yu and Liu, 2004)

• Relief ( Kenji Kira and Larry A. Rendell,

1992)

Wrapper

Deterministic

• Simple

• Risk of overfitting

• Interacts with the classifiers

• Prones to trap in a local

• Model feature dependencies

optimum compares to

randomized algorithm

• Sequential forward selestion, SFS

(Kittler, 1978)

• Sequential backward elimination, SBE

(Kittler, 1978)

• Plus q take -away r (Ferri et al., 1994)

23

• Less computationally

intensive compared to

• Classifier dependent

selection

• Beam search (Siedelecky and Sklansky,

1988)

randomized methods

Randomized

• Less prone to local optima

• Computationally intensive

• Simulated annealing

• Interacts with the classifier

• Classifier dependent

• Randomized hill climbing (Skalak, 1994)

• Model features dependencies

selection

• Higher risk of overfitting

compared to deterministic

Embedded

• Interacts with the classifier

• Computational complexity is

• Classifier dependent

selection

• Genetic Algorithms (Holland, 1975)

Estimation of distribution algorithm (Inza

et al.,2000 )

• Decision trees

Weighted naive Bayes (Duda et al., 2001)

better than wrapper method

• Feature selection using the weight vector

• Model features dependencies

of SVM (Guyon et al., 2002; Weston et

al., 2003)

24

Among the three main approaches, the filter approach are the computational

efficient and robust against overfitting. Although it may introduce bias but it may

have considerably less variance (Gianluca Bontempi and Benjamin HaibenKains,2008). Hence, a larger size of dataset can be handled by the filter method

compares to wrapper method (Guyon, I and Elisseeff A, 2003; Kohavi, R and John

G.H.,1997; Liu, H. and Motoda, H., 1997; Ahmad, A. and Dey, L., 2005; Dash, M.

And Liu, H.,1997). The common filter method drawback is the interaction with the

classifier will be ignored which means the search in the feature subset space is

separated from the search in the hypothesis space. The most suggested technique to

solve this problem is univariate. However, univariate technique may lead to worse

performance of classification due to the ignoring feature dependencies compares to

other techniques. Thus, various multivariate filter techniques are proposed to

overcome the problem which aims at the incorporation of feature dependencies to

some degrees (Saeys, Y. et al., 2007).

Relief is one type of the multivariate filter technique which leads to better

performance improvements and proves that the filter methods are capable to handle

the non-linear and multi-modal environments (Reisert, M., and Burkhardt, 2006).

This method is the most well known and deeply studied which estimation is better

than the usual stastical attribute such as correlation or covariance. This is because it

takes into account the attribute interrelationship (Arauzo-Azofra, A., et al., 2004). It

has been reported that this method has shown a good performance in various domain

(Sikonja, M.R. and Kononenko, I., 2003).

2.4.1

Relief

Relief is a feature selection method based on attribute information that was

introduced by Kira and Rendell (Kira, K., and Rendell, L.A., 1992). The original

25

Relief only handled boolean concepts problem but the extend of it has been

constructed to be used in classifications problems (Relief-F) (Kononenko, I., 1994)

and also in regression (RRelief-F) (Sikonja, M.R and Kononenko, I, 1997). In ReliefF, one nearest neighbor of E1 will be search from every class. With these neighbor,

the relevance of every feature f ∈ F will be evaluated by the Relief and accumulated

into the W[ f ] based on the equation (2.1).

W[ f ] = W[ f ] – diff ( f, E1, H ) + Σ

P ( C ) x diff (f, E1, M(C))

C ≠ class(E1)

(2.1)

H is a hit of the nearest neighbor which is from the same class while M(C) is the miss

from the different class of class C. W [ f ] is divided by m to get the average

evaluation in range of [-1,1]. The diff ( f, E1, H ) function computes the grade in

which the values of features f is different in examples E1 and E2 as in equation (2.2).

(2.2)

f is dicrete

f is continuous

diff ( f, E1,E2 ) = 0 if value ( f, E1 ) = value ( f, E2 ) |value ( f, E1 )- value ( f, E2 )|

1 otherwise

max (f) – min (f)

The value ( f, E1 ) indicate the value of feature f on example E1 while the max (f) is

the maximum value f gets. The distance used is the sum of differences considering

nearest neighbor given by diff function, of all features.

2.4.2

Information Gain

Information gain is commonly used as a surrogate for approximating a

conditional distribution in the classification setting (Cover, T., and Thomas, J).

26

According to Mitchell (1997), by knowing the value of a feature, information gain

measure the number of bits of information obtained for class prediction. The

following is the equation of information gain :

InfoGain = H(Y) – H(Y|X)

(2.3)

where

X and Y is the feature and

H(Y) = - Σ p (y) log2 (p(y))

(2.4)

yεY

H(Y|X) = - Σ p (x) Σ p (y|x) log2 (p(y|x))

xεX

2.4.3

(2.5)

yεY

Chi-Square

The Chi-square algorithm will evaluate genes individually with respect to the

classes. This algorithm is based on comparing the obtained values of the frequency

of a class because of the split to the expected frequency of the class. From the N

examples, let Nij be the number of samples of the Ci class within the jth interval

while MIj is the number of samples in the jth interval. The expected frequency of Nij

is Eij = MIj | Ci | lN . Therefore, the Chi-squared statistic of a gene is then defined as

follow (Jin, X., et al., 2006):

C

l

χ = Σ Σ (Nij - Eij)2

2

i=1 j=1

Eij

where I is the number of intervals.

The larger the the χ2 value, the more informative the corresponding gene is.

(2.6)

27

2.5

Radial Basis Function

A Radial Basis Function, RBF, was introduced by Powell to solve the

problem of the real multivariate interpolation (Powell M.J.D., 1997). In a neural

network, radial basis function is a set of functions formed by a hidden unit which

composes a random basis for the input vectors (Haykin, S., 1994). This function is a

real-valued in which the value merely depends on the distance from the origins. The

most general formula is:

h (x) = φ (x - c)2

(2.7)

2

r

where

φ is the function used (Gaussian, Quadratic, Inverse Multiquadric and

Cauchy)

c is the centre

r = R is the metric, Euclidean

2.5.1

Types of Radial Basis Function, RBF

Radial functions are class of function that has the characteristic feature where

response decreases or increases monotonically with distance from the origin. If the

radial functions is linear, all the center, the distance scale and the precise shape will

be fixed as a parameters of the model (Mark J.L.Orr, 1996). There are two common

types of Radial Basis Function based on the types of radial functions used. The types

of Radial Basis Function are as listed :

28

i) Gaussian Radial Basis Function

φ (z) = e –z

(2.8)

Figure 2.5: Gaussian RBF

ii) Multiquadric Radial Basis Function

φ (z) = (1 + z) ½

(2.9)

29

Figure 2.6: Multiquadric RBF

Among all of the types, Gaussian is a typical radial function and the most

commonly used. This is because Gaussian RBF monotonically decreases with the

origin and has a local response which gives a significant response only in a

neighborhood near the origin. Other than that, Gaussian RBF is biologically plausible

caused by its finite response. Multiquadric RBF is contrary to Gaussian RBF since it

monotonically increases with the distance from the origin and have a global

response. This is why the Gaussian RBF is commonly used compares to the

Multiquadric RBF. The following figure illustrates the different result of RBF types

with c = 0 and r = 1.

30

Figure 2.7: Gaussian (green), Multiquadric (magenta), Inverse-multiquadric (red)

and Cauchy (cyan) RBF

2.5.2

Radial Basis Function, RBF, Network

A neural network is designed as a machine to model the way of the brain

performs a particular tasks or function of interest (Haykin S., 1994). Neural network

has been successfully applied in various area including financial, engineering and

mathematics because of its useful properties and capabilities such as nonlinearity,

input-output mapping and neurobiological analogy (Mat Isa, N.A., et al., 2004). The

application of neural network has been expanded in the medical area with two

common applications which are image processing (Sankupellay, M., and

Selvanathan, N., 2002; Chang, C.Y., and Chung, P.C., 1999; Kovacevic, D., and

Loncaric, S., 1997) and diagnostic system (Kuan, M.M., et al., 2002; Ng, E.Y.K., et

al., 2002; Mat Isa, N.A., et al., 2002; Mashor, M. Y., et al., 2002). Neural network

has been proven to perform very well in both applications. A high capability can be

31

reached either to increase the medical image contrast or to detect certain region in the

medical image processing while in disease diagnostic system, it performs as good as

human experts or sometimes better than them (Sankupellay, M., and Selvanathan, N.,

2002; Chang, C.Y., and Chung, P.C., 1999; Kovacevic, D., and Loncaric, S., 1997;

Kuan, M.M., et al., 2002; Ng, E.Y.K., et al., 2002; Mat Isa, N.A., et al., 2002;

Mashor, M. Y., et al., 2002).

Radial Basis Function, RBF, networks is the artificial neural network type for

application of supervised learning problem (Orr, M.J.L., 1996). By using RBF

networks, the training of networks is relatively fast due to the simple structure of

RBF networks. Other than that, RBF networks is also capable of universal

approximation with non-restrictive assumptions (Park. J. et al., 1993). The RBF

networks can be implemented in any types of model whether linear on non-linear and

in any kind of network whether single or multilayer (Orr, M.J.L. 1996). The most

basic design of RBF networks as first proposed by Broomhead and Lowe in 1988 is

traditionally associated with radial functions in a single layer network. The following

figure shows the traditional RBF network with each of the n components of input

vector x feeds forward to m basis function. This yield a output that linearly combined

with weights {wj}jm=1 into the network output f(x).

32

Figure 2.8: The traditional radial basis function network

The first layers indicate the input layer which is the source nodes or sensory

units while the second layer is a hidden layer of high dimension. The last layer which

is the output layer will give the network response to the activation pattern that is

applied to the input layer. From the input space to the hidden unit space, the

transformation is non-linear but the transformation is linear from the hidden space to

the output space (Haykin, S., 1994).

To implement RBF network, we need to identify the hidden unit activation

function, the number of the processing units, a criterion for modeling the given task

and a training algorithm for finding the parameters of the network. The network will

be trained to find the RBF weight while the training set, a set of input-output pairs

will optimize the network parameters so that the network output will fit with the

given inputs. To evaluate the fits, the means of cost function or assumed as mean

square error will be used. The network can be used after the training with the data in

which the underlying statistics is similar to that of the training set. The adaptation of

the network parameters to the changing data statistics is caused by the on-line

training algorithms (Moody, J., 1989).

33

There are various functions that have been tested as activation function for

RBF networks (Matej, S., Lewitt, R.M, 1996) but Gaussian is preferred in the

application of pattern classification (Poggio, T., Girosi, F., 1990; Moody, J., 1989;

Bors, A.G., Pitas, I., 1996; Sanner, R.M., Slotine, J.J.E., 1994; Bors, A.G., Gabbouj,

G., 1994). The following is the Gaussian activation function for RBF network:

φj (X) = exp [(X - μj)T Σj-1 ( X - μj)]

(2.10)

for j = 1,..,L where

X is the input feature vector.

L is the number of hidden units.

μj and Σj are the mean and the covariance matrix of the jth Gaussian function.

The mean of the vector μj is representing the location while Σj models the shape of

activation function. The output layer applies a sum of weight of hidden unit output

according the following formula:

ψk(X) = Σ λjk φ j (X)

(2.11)

for k = 1,..,M where

λjk are the output weight, each corresponding to the connection between a

hidden unit and an output unit.

M represent the number of output units.

For classification problem, if the weight λjk > 0, the activation field of the hidden unit

j contained in the activation field of the output unit k.

34

2.6

Classification

Classification can also be termed as supervised learning (Tou, J.T. and

Gonzalez, R.C., 1974; Lin, C.T., and Lee, C. S. G., 1996). In the classification

problems, the previous unseen patterns will be assigned to their respective classes

according to previous examples from each class. This will cause the output of the

learning algorithm as one of a discrete set of possible classes (Mark J.L.Orr, 1996).

The feature entries will be represented by the input, the hidden layer unit correspond

to the subclasses while the class corresponded by each output (Tou, J.T. and

Gonzalez, R.C., 1974). There are several methods of classification (Bashirahamad

S.Momin et al., 2006):

i)

Decision Tree

A decision space divided into piecewise constant region (Pedrycz, W.,

1998)

ii)

Probabilistic or generative models

Compute the probabilities based on Bayes’ Theorem (Tou, J.T. and

Gonzalez, R.C., 1974).

iii)

Nearest-Neighbor classifiers

Calculate the minimum distance from instances or prototype (Tou,

J.T. and Gonzalez, R.C., 1974).

iv)

Artificial Neural Networks (ANNs)

The division is by nonlinear boundaries. The learning incorporated, in

the environment of data-rich by encoding information in the

conversion weights (Haykin, S., 1994).

35

2.6.1

Comparison with others Classifiers

The following table shows the previous researches that have be done on

classification with various type of data using the RBF network.

Table 2.3: Previous researches on classification using RBF network

Author

Classification

Wang, D.H., et al., 2002

Protein sequences

Li, X., Zhou, G., and Zhou, L., 2006

Novel data

Leonard, J.A., and Kramer, M.A., 1991

Process Fault

Horng, M-H., 2008

Texture of the Ultrasonic Image

of Rotator Cuff Diseases

Kégl, B., Krzyzak, A., and Niemann, H.

Nonparametric (Pattern

(1998)

Recognition)

Based on (Wang, D.H., et al.,2002) Self Organizing Mapping (SOM) networks

(Wang, H.C et al.,1998; Ferran EA., 1994) and Multilayer Perceptrons (Wu C.H. et

al., 1995; Wu C.H., 1997) are the two types of neural models applicable for protein

sequences classification task. By using the SOM networks, the relationship within a

set of proteins can be discovered by clustering them into different groups. However

SOM networks have two main limitation when applied in protein sequences

classification. The limitations are:

i) Time consuming and the difficulty of the results interpretation.

ii) The size selection of Kohonen layer is subjective and often involves the trial

and error procedure.

There are several undesired features arising from system designed of MLP based

classification system which are:

36

i)

The determination of the neural metwork architecture.

ii)

Lack of intepretability of the ”black box”.

iii)

The training time needed as the number of inputs is over 100.

In (Li, X., Zhou, G., and Zhou, L., 2006), RBF network has been compared to

the Support Vector Machine (SVM). Although data classification is one of the main

applications that RBF networks have applied (Oyang, Y.J et al., 2005; Sun, J. et al.,

2003), recent research has focused more on SVM. This is because it has been

reported by several latest research that SVM generally capable to deliver higher

classification accuracy compared to other existing data classification algorithms.

However, SVM suffer with one serious disadvantage namely the time taken to carry

out the model selection which could be unacceptably long for some contemporary

applications. All the proposed approaches for expediting the model selection of SVM

lead to lower prediction accuracy.

Backpropagation network and Nearest Neighbor classifiers have been

compared in (Leonard, J.A., and Kramer, M.A., 1991). The comparison have shown

that the backpropagation classifier can produce nonintuitive and nonrobust decision

surfaces due to the inherent nature of the sigmoid function, the definition of the

training set and the error function used for training. Other than that, there is no

standard training scheme mechanism for identifying unknown classes of regions in

backpropagation network. For the Nearest Neighbor classifier, it becomes inefficient

when the number of the training point is high. This is because it needs a complete

search via the set of training examples to classify each test point.

37

2.7

Receiver Operating Characteristic, ROC

The Reciever Operating Characteristic, ROC, analysis emerges from the

statistical decision theory (Green and Swets, 1996). In the medical area, this theory

was first applied during the late 1960s. This theory analysis has been increasingly

used as evaluation tool in computer science to assess distinction effects among

different methods (Westin, L. K., 2001 ). In neural classifier, ROC analysis is easy to

implement, yet rarely used to evaluate the performance of neural classifier outside of

the medical and signal detection field. The advantages of ROC analysis are the

robust description of the network’s predictive ability and an easy way to change the

existence network based on differential cost of misclassification and varying prior

probabilities of class occurences.

Reciever Operating Characteristic, ROC, curve is the ultimate metric to

detect algorithm performance (John Kerekes, 2008). The ROC curve is defined as

sensitivity and specificity which are the number of true positive decision over the

number of actually positive cases and the number of true negative decisons over the

number of actually negative cases, respectively. This method leads to the

measurement of diagnostic test performance (Park, S.H., et al., 2004) . Based on the

ROC curve, area under the curve, AUC, is the most commonly used and

comprehensive of the performance measures (Downey, T.J., Jr., et al., 1999). The

following equation is the formula of the AUC. The TPR is referred to True Positive

Rate while the FPR is referred to False Positive Rate.

1

ROC = (TPR)( FPR)

2

(2.8)

3

38

Figure 2.9: The RO

OC curve

2

2.8

Sum

mmary

pter discussees about the breast canceer, microarraay

In brrief, this chap

t

technology,

feature selecction, radial basis function, RBF nettwork, and reeceiver

o

operating

ch

haracteristic, ROC. The bbiopsy is thee way to confirm the occcurrence of

c

cancer

cells in the breastt after the m

mammograph

hy. By doing biopsy, the DNA

m

microrrays

w be obtainned. In order to select thhe useful genne, feature seelection needds

will

t be appliedd first. Theree are variouss techniques of feature seelection whiich are

to

c

categorized

into three methods.

m

The filter methood with the m

multivariate techniques

t

n

namely

ReliefF has beenn chosen to bbe applied inn this study. Based on th

he previous

r

researches,

t Radial baasis functionn network haas been show

the

wn the best network

n

in

c

classification

n problem. Therefore,

T

it has been ch

hosen as the classifier to distinguish

t cancerouus and non-ccancerous. Thhe receiver operating

the

o

chharacteristic, ROC curve

m

metric

is useed to evaluatte the perform

mance of thee classifier.

CHAPTER 3

METHODOLOGY

3.1

Introduction

This chapter elaborates the processes or steps which are involved during the

design and implementation phase. The process flows will be illustrated in the

research framework which contains four main steps. Begin with the data acquisition,

the format of the data will be explained briefly. Other than that, the HykGene

software that will be used in the experiment will be discussed according to its

framework.

40

3.2

Research Framework

The following figures describe the research framework for this study. There

are four main steps or processes involved which are data acquisition, feature

selection, classification and evaluation. In the data acquisition steps, the data set of

breast cancer microarray data is obtained. Then, we can proceed to the second step

which involved feature selection. This step is very important because it leads to the

accuracy of the classification. The classification of the microarray data will be

implemented to classify the cancer cells and the normal cells. The final step in this

framework is to evaluate the performance of the classifier using ROC.

Start

Data Acquisition

Feature Selection

Classification

Evaluation

End

Figure 3.1: Research Framework

41

3.3

Software Requirement

In this study, the software requirements include the Matlab version 7.0 Neural

Network toolbox to develop the feature selections function and the classifier namely

radial basis function, RBF, network. Other than that, another two software will be

used which are WEKA and HykGene to support the feature selection function.

3.4

Data Source

The breast cancer microarray data will be obtained from the Centre for

Computational Intelligence (http://www.c2i.ntu.edu.sg/) for this study. The dataset

which contains 97 instances with 24461 genes and 2 classes are in the ArtificialRelation File Format, ARFF. An ARFF format will be explain in detail in the next

chapter.

3.5

HykGene

HykGene is a hybrid approach for selecting marker genes for phenotype

classification using microarray gene expression data and have been developed by

Wang, Y. et al., (2005). The following figure indicates the framework of the

HykGene.

42

Figure 3.2: HykGene: a hybrid system for marker gene selection

(Wang, Y. et al., 2005)

In the fist step, feature filtering algorithms is applied on the training data to

select a set of top-ranked informative genes. Next, to generate a dendogram,

hierarchical clustering is applied on the set of top-ranked genes. Then, in the third

step a sweep- line algorithm is used to extract clusters from the dendogram and the

marker gene will be selected by collapsing clusters. The LOOCV will determine the

best number of marker genes. In the last step, only the marker genes will be used as

the features for classification of test data.

For the first step which is gene ranking, feature filtering algorithms are

applied to rank the genes. Features or genes are ranked based on the statistic over the

features that indicate which features are better than others. In this HykGene

software, three popular feature filtering methods have been used which are ReliefF,

Information Gain and Chi-Square or χ2-statistic.

After done with the feature filtering on the whole set of genes using training

data, the top-ranked genes will be pre-selected from the whole set. Normally, there

are 50 to 100 genes are pre-selected. Next, the hierarchical clustering (HC) will be

43

applied on the set of pre-selected genes. These will resulting a dendogram, or

hierarchical tree where heights represent degrees of dissimilarity. Due to the high of

correlation of the some genes in the pre-selected set of genes, the cluster will be

extracted by analyzing the dendogram output of the HC algorithm. Then, each cluster

will be collapsed into one representative gene from each cluster. These extracted

representative genes were used as marker genes in the classification step.

Finally, the test data will be classified using one of the four classifiers which

are k-nearest neighbor (k-NN), linear support vector machine (SVM), C4.5 decision

tree and Naive Bayes (NB).

3.5.1

Gene Ranking

The estimation accuracy of the features or attributes quality is important in

the preprocessing step of classification. Therefore, to produce an ideal estimation of

attributes in feature selection, the noisy and irrelevant features need to be eliminated.

Other than that, the features which are having the specific characteristics of a class

need to clearly select. As illustrated before in the HykGene framework, feature

selection or gene ranking is the first step which involved three feature filtering

method. Thus, the original framework can be expanded into the following figure in

order to show the methods that involved as mentioned previously more clearly.

44

ReliefF

Training

data

Step1: Ranking

Genes

Information

Gain

Topranked

Genes

χ2-statistic

Figure 3.3: The expanded framework of HykGene in Step1

3.5.1.1 ReliefF Algorithm

The main idea of the Relief algorithm that was proposed by (Kira and

Rendell, 1992) is to estimate the quality of attributes that have weights greater than

the threshold using the distinction of an attribute value between a given instance and

the two nearest instances namely Hit and Miss. The following is the original

algorithm of Relief.

45

Input : a vector space for training instances with the value of

attributes and class values

Output : a vector space for training instances with the weight W of

each attribute

Set all weights W[A]=0.0

for i=1 to m do begin

randomly select instance Ri

find nearest hit H and nearest miss M

for A=1 to all attribute do

W(A) = W(A) – diff (A, Ri, H)/m + diff (A, Ri, M)/m

end

Figure 3.4: Original Relief Algorithm

(Park, H., and Kwon, H-C., 2007)

The drawback of the original Relief algorithm is that it cannot deal with incomplete

data and multiclass datasets. To overcome the disadvantages of original algorithm,

the extension of Relief-F algorithm was suggested by Kononenko (1994). This

extension algorithm is more robust, meaning it can handle incomplete and noisy data

as well as multi-class dataset. The updated Relief-F algorithm is as follow.

46

Input : a vector space for training instances with the value of

attributes and class values

Output : a vector space for training instances with the weight W of

each attribute

Set all weights W[A]=0.0

for i=1 to m do begin

randomly select instance Ri

find k nearest hit Hj

for each class C≠ class(Ri) do

find k nearest miss Mj(C) from class C

for A=1 to all attribute do

k

k

W(A) = W(A) - Σ diff (A, Ri, Hj)/(m, k) + Σ

j=1

k

[P(C)/

C≠ class(Ri)

1-P(class(Ri)) Σ diff(A, Ri, Mj(C))]/(mk)

j=1

end

Figure 3.5: ReliefF algorithm

(Park, H., and Kwon, H-C., 2007)

According to the algorithm, the nearest hits Hj is finds k the nearest neighbors which

is from the same class while the nearest misses Mj(C) is k the nearest neighbors

which is from a different class. W (A) which is the quality estimation for all attributes

A is updated based on their values for Ri, hits Hj and M(C). Each probability weight

must be divided by 1-P (class (Ri)) due to the missing of the class of hits in the sum

of diff (A, R, Mj(C)).

47

3.5.1.2 Information Gain

The second method of feature selection that will be used in the experiment is

Information Gain. In the Hykgene software, the equation of the information gain

method applied is as following.

Let {ci}mi=1 denote the of classes. Let V be the set of possible values for feature f.

Thus, the information gain of a feature f is defined as:

m

m

G(f) = - Σ P(ci)log P(ci) + Σ Σ P (f = v) P(ci | f = v) log P (ci | f = v)

i=1

(3.1)

vεV i=1

In the information gain, the numeric features need to be discretized. Therefore,

HykGene software has used entropy-based discretization method (Fayyad and Urani,

1993) that has been implemented in WEKA software (Witten and Frank, 1999).

3.5.1.3 χ²-statistic

Chi- Square or χ2 –statistic is one more feature selection method that will be

used. The equation of this method that has been used by HykGene software is as

follow:

m

χ (f) = Σ Σ [Ai ( f = v) – Ei( f = v)]2

2

vεV i=1

Ei( f = v)

where

V is the set of possible values for feature f,

(3.2)

48

Ai ( f = v) is the number of instances in class ci with f = v

Ei( f = v) is the expected value of Ai ( f = v)

Ei( f = v) is computed with:

Ei( f = v) = P ( f = v)P(ci)N

(3.3)

where

N is the total number of instances

Like information gain, this method also requires numeric features to be discretized,

so the same discretization method is used. The following is the example of the chisquare algorithm which is consists of two phases. For discretization, in the first

phase, it begins with a high significance level (sigLevel) for all numeric attributes.

The process involved in phase 1 will be iterated with a decreased sigLevel until an

inconsistency rate, δ is exceeded in the discretized data. In the Phase 2, begin with

sigLevel0 determined in Phase 1, each attribute i is associated with a sigLevel [i] and

takes turns for merging until no attribute’s value can be merged. If an attribute is

merged to only one value at the end of Phase 2, it means that this attribute is not

relevant in representing the original dataset. Feature selection is accomplished when

discretization ends.

49

Phase 1:

set sigLevel = .5;

do while (InConsistency (data) < δ) {

for each numeric attribute {

Sort(attribute, data);

chi-sq-calculation(attribute, data)

do {

chi-sq-calculation(attribute, data)

} while (Merge (data))

}

sigLevel0 = sigLevel;

sigLevel = decreSigLevel(sigLevel);

}

Phase 2:

set all sigLvl [i] = sigLevel0

do until no-attribute-can be-merged {

for each attribute i that can be merged {

Sort(attribute, data);

chi-sq-initialization(attribute, data);

do {

chi-sq-calculation(attribute, data)

} while (Merge(data))

if (InConsistency (data) < δ)

sigLvl [i] = decreSigLevel(sigLvl [i]);

else

attribute i cannot be merged

}

}

Figure 3.6: χ²-statistic algorithm

(Liu, H., and Setiono, R., 1995)

50

3.5.2

Classification

Classification is a system capability to learn from a set of input or output

pairs which are composed of two set namely training and test. The input of the

training set is the features vector and class label while the output is the classification

model. For the test set, the inputs are the features vector and also the classification

model while the output is the predicted class. The classification is the last step

applied in the HykGene software after selecting the marker genes. There are four

classifiers that can be chosen as indicate in the following expanded framework of

HykGene.

k- nearest

neighbor

Test

data

Step 4:

Classification

using Marker

Genes Only

Support vector

machine

C4.5 decision

tree

Naïve Bayes

Figure 3.7: The expanded framework of HykGene in Step 4

3.5.2.1 k- nearest neighbor

The k- nearest neighbor or k-NN classifier (Dasarathy, B., 1991) is a wellknown non-parametric classifier. For the classification of a new input x, the k-NNs

are retrieved from the training data. Then, the input x is labeled with the majority

class label corresponding to the k-NNs.

51

3.5.2.2 Support vector machine

Support vector machine, SVM, is a type of the new generation of learning

system based on recent advances in statistical learning theory (Vapnik, V.N., 1998).

In the HykGene software, a linear SVM is used in order to find the separating

hyperplane with the largest margin. This is defined as the sum of distances from a

hyperplane (implied by a linear classifier) to the closest positive and negative

examplars with an expectation that the larger the margin, the better the generalization

of the classifier.

3.5.2.3 C4.5 decision tree

The C4.5 decision tree classifier is based on a tree structure where non-leaf

nodes represent test on one or more features and leaf nodes reflect classification

results. The classification of an instance is starting at the root node of the decision

tree, testing the attributes specified by this node then moving down the tree branch

corresponding to the value of the attribute. The process is iterated at the nodes on

that branch until a leaf node is reached. The J4.8 algorithm in Weka is used in the

HykGene software which is a Java implementation of C4.5 Revision 8.

52

3.5.2.4 Naïve Bayes

The fourth classifier used in the HykGene software is the Naïve Bayes

classifier which is a probabilistic algorithm based on Bayes’ rule. This classifier

approximates the conditional probabilities of classes using the joint probabilities of

training sample observation and classes.

3.6

Classification using Radial Basis Function Network

There are various approaches to solve the classification problem but as

discussed in the previous chapter, radial basis function network have been chosen as

the classifier. This is because others than previous discussion of the advantages of

RBF network, data classification is one of the main applications in RBF network.

To construct or build the classifier, a neural network will be embedded with

the radial basis function network in which each of the hidden unit implements a

radial activation function. The weighed sum of hidden unit outputs will be produce

by the output units. The RBF network is nonlinear from the input but the output is

linear. Typically, the RBF network is a three-layer, feed forward network which is

composed of input layer, hidden layer and output layer. As discussed in the literature

review, Gaussian has been chosen as the activation function. The following figure is