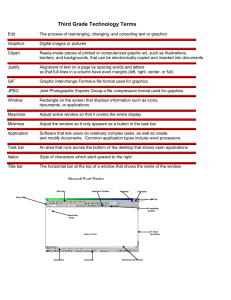

Permission
