Assessment Measures


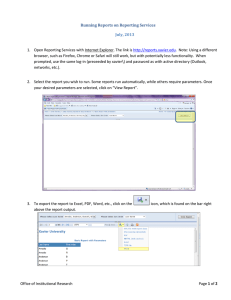

Tammy Kahrig, Strategic Information Resources
Mary Kochlefl, Academic Affairs
4/11/2011

STEPS of SLO ASSESSMENT
• Identify goals for learning. (Student Learning Outcomes)
• Identify where in the curriculum the goals are addressed. (Curriculum Mapping)
• Gather information about how well students are achieving those goals. (Methods: Direct and Indirect Measures)
• Use the information to improve learning. (Action/Continuous Improvement)

Tammy Kahrig, Ph.D., Xavier University Office of Strategic Information Resources

Definitions
Levels covered: Student‐Level, Section‐Level, Course‐Level, and Program‐Level Assessment of Student Learning Outcomes

Student‐Level Assessment of Student Learning Outcomes
• How well an individual student is achieving the goals for the assignment and then the course
• Grades are usually the measure
• Example 1: Based on the measures, I determine that John is not meeting the goal for learning.
• Example 2: Our whole department gets together to share our ratings on a rubric for each student based on our competency goals for individual students.
• Action: Provide feedback to the student and develop strategies for helping the student achieve the goal for learning.

Section‐Level Assessment of Student Learning Outcomes
• How well students as a whole within my section of the course are achieving the goals for the course
• Example 1: I determine that more than 50% of my students did not meet the goal for learning on this assignment.
• Example 2: I determine that my students did not meet the goal for learning on multiple measures in my section.
• Action: Redesign course activities in my section with the aim of improving student learning on that goal.

Course‐Level Assessment of Student Learning Outcomes
• How well students as a whole within the individual course are achieving the goals for the course
• Example: I get together with the other instructors who are teaching the same course, and together we share how well our students are meeting the goal(s) for learning.
• Action: We determine as a group that we would like to see students in our course improve on this particular goal. We discuss things we would like to change in the course (all sections) to help students improve on this particular goal.

Program‐Level Assessment of Student Learning Outcomes
• How well students as a whole are achieving the goals for your program
• Example: At a department meeting, our subgroup discusses what we found in our course and inquires about this particular learning goal across the program. What are other faculty seeing? Where else in the curriculum do we address this? Where else do we assess this?
• Action: Plans are made to assess this goal using an assignment completed during students’ last term in the program. Data are collected and steps are taken for curricular improvement.

Efficiency in Assessment: Where to Begin

Current Practices
• What are you doing already to assess student learning?
• Where do conversations about student learning occur?

Section‐Level to Course‐Level Discussions
• What opportunities are in place for taking information about student learning from the section level (your class) to the course level (everyone teaching that course)?

Course‐Level to Program‐Level Discussions
• What opportunities are in place for taking information from the course level to the program level?

End‐of‐term Department Meeting (or Sub‐Group Meeting)
• What regular meetings already exist for discussing student learning?

Comprehensive Assignments
• Where are those student‐level assignments in your program that get at multiple student learning outcomes?

Common Assignments across Sections of a Course
• What common assignments exist currently?
• Where are opportunities for a common assignment?

Comprehensive or “Capstone” Courses
• Are there courses in your program that function as a “capstone” experience, i.e. courses that address multiple student learning outcomes?

Direct Measures
• Prompt students to represent or demonstrate their learning or produce work so that observers can assess how well students’ texts or responses fit program‐level expectations
• Yield information about specific strengths and weaknesses of students as a whole

Indirect Measures
• Signs that students are probably learning, often based on reports of perceived student learning
• May help explain information gathered from direct measures

Examples of Direct Measures of Student Learning

Student Work
• Written work, performances, or presentations, scored using a rubric
• Research projects, case studies, presentations, theses, dissertations, oral defenses, exhibitions, and performances, scored using a rubric
• Portfolios
• Tests such as final exams in key courses, qualifying examinations, comprehensive exams
• Pre‐post tests

Ratings of Student Skills
• Field experience supervisors or employers rate students on student skills using a rubric

Standardized Tests
• Achievement Tests in a Particular Field of Study (e.g. ETS Major Field Tests, ACAT Area Concentration Achievement Tests, GMAT, GRE Subject Tests)
• Licensure or Certification Exams
• Instruments to Measure Specific Skills (e.g. California Critical Thinking Skills Test)

Examples of Indirect Measures of Student Learning

Student Response
• Student Survey: Students’ perceptions of their educational experiences and the institution’s impact on their learning.
• List PSLOs and ask: How well did you achieve each goal? What aspects of your education in the department helped you with your learning? What might the department do differently to help you learn more effectively?
• Student Focus Groups: held with representative students to probe a specific issue that might have been identified
• For Freshmen and Seniors: National Survey of Student Engagement (NSSE) provided by Xavier University OSIR

Alumni, Employer, or Faculty‐Staff Surveys
• Perceptions of student learning and the institution’s impact on their learning
• Alumni: ACT Alumni Outcomes Survey provided by Xavier University OSIR

Admission Rates into Graduate Programs
• What are our students’ acceptance rates?

Placement Rates of Graduates into Appropriate Career Positions and Starting Salaries
• How do students fare after graduation?

Considerations in Selecting Measures
• Is this assessment based on the whole population (e.g. all majors) or at least a sample of a reasonable size?
• Do you want a measure that can be used only for summative assessment or would you like one for formative assessment?
• How does this measure align with the curriculum and educational experiences?
• Are students aware that they are responsible for demonstrating this learning?
• A well‐designed measure may be used for assessing multiple PSLOs. In TaskStream, you can copy the same measure (e.g. senior survey) by using the “Import Measure” button and clicking the button next to “Show measures for all outcomes.”

Considerations in Selecting Measures for the Years of the Cycle
• Are there PSLOs that you want to assess every year?
• Are there measures that you would like to use every year?
• Are there measures that you would like to use on a time frame other than every year, e.g. every other year?
• In TaskStream, you can choose “Copy Existing Plan” for years beyond the first and choose to copy a plan that you have entered for a previous year.
• Consider what will work best for sustaining your assessment efforts over the next five years.

Entering Measures into TaskStream

How does a measure get from being a student‐level or section/course‐level assessment to a program‐level assessment?
• 1) yields information about specific strengths and weaknesses of students as a whole
• 2) is shared, discussed, and used to improve learning

Resources to Assist You

TaskStream’s Rubric Wizard
• Click on “Resources Tools” to access.
• You can choose “Create New” or you can choose “Adapt Rubric” from the examples they have supplied.

Not promoting, but in case you are interested
• ETS Major Field Tests: http://www.ets.org/mft/about
• ACAT Area Concentration Achievement Tests: http://www.collegeoutcomes.com/
• GRE Subject Tests: http://www.ets.org/gre/subject/about/
• NCES Sourcebook on Assessment: Definitions and Assessment Methods for Communication, Leadership, Information Literacy, Quantitative Reasoning, and Quantitative Skills: http://nces.ed.gov/pubs2005/2005832.pdf

Academic Assessment Mini‐Grant Program
• Grant funds should support efforts that otherwise could not be conducted with existing resources.
• Requests are encouraged for new efforts to put in place systems that can be replicated in future years without ongoing funding.
• For category 1, applications will be accepted on an ongoing basis and projects can begin immediately.
• For all other categories, applications will be accepted twice per year, Monday, April 11th and a date in the fall TBD. Projects begin between July 1, 2011 and December 1, 2011.

CATEGORY 1: Department‐/program‐level conversations. Request limit: $500.
CATEGORY 2: Education. Request limit: $1,000 (or $1,800 for a team).
CATEGORY 3: Evaluation of student work. Request limit: $2,000 (individual stipend limit: $500).
CATEGORY 4: Analysis of assessment data. Request limit: $1,000.
CATEGORY 5: Other

Let us know how we can help.
• Tammy: kahrigt@xavier.edu, x4845
• Mary: kochlefl@xavier.edu, x4279

References
• Maki, P.L. (2004). Assessing for learning: Building a sustainable commitment across the institution. Sterling, VA: Stylus.
• Suskie, L.A. (2009). Assessing student learning: A common sense guide. San Francisco, CA: Jossey‐Bass.
• Walvoord, B.E. (2010). Assessment clear and simple: A practical guide for institutions, departments, and general education. San Francisco, CA: Jossey‐Bass.