Data Loading

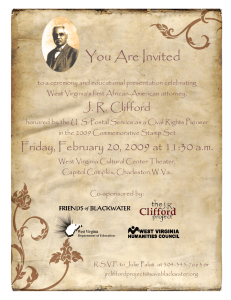
