ECE 6640 Digital Communications

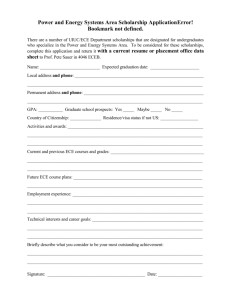


Dr. Bradley J. Bazuin
Assistant Professor
Department of Electrical and Computer Engineering
College of Engineering and Applied Sciences

Chapter 7: Linear Block Codes
7.1 Basic Definitions
7.2 General Properties of Linear Block Codes
7.3 Some Specific Linear Block Codes
7.4 Optimum Soft Decision Decoding of Linear Block Codes
7.5 Hard Decision Decoding of Linear Block Codes
7.6 Comparison of Performance between Hard Decision and Soft Decision Decoding
7.7 Bounds on Minimum Distance of Linear Block Codes
7.8 Modified Linear Block Codes
7.9 Cyclic Codes
7.10 Bose-Chaudhuri-Hocquenghem (BCH) Codes
7.11 Reed-Solomon Codes
7.12 Coding for Channels with Burst Errors
7.13 Combining Codes
7.14 Bibliographical Notes and References
Problems

Chapter Content
• Our focus in this chapter and Chapter 8 is on channel coding schemes with manageable decoding algorithms that are used to improve performance of communication systems over noisy channels.
• This chapter is devoted to block codes whose construction is based on familiar algebraic structures such as groups, rings, and fields.
• In Chapter 8 we will study coding schemes that are best represented in terms of graphs and trellises.

Channel Codes
• Block Codes
– In block codes one of the $M = 2^k$ messages, each representing a binary sequence of length k, called the information sequence, is mapped to a binary sequence of length n, called the codeword, where n > k.
– The codeword is usually transmitted over the communication channel by sending a sequence of n binary symbols, for instance, by using BPSK or another symbol scheme.
• Convolutional Codes
– Convolutional codes are described in terms of finite-state machines. In these codes, at each time instance i, k information bits enter the encoder, causing n binary symbols to be generated at the encoder output and changing the state of the encoder.

Code Rate Considerations
• The code rate of a block or convolutional code is denoted by $R_c$ and is given by
$$R_c = \frac{k}{n}$$
• A codeword of length n is transmitted using an N-dimensional constellation of size M, where M is assumed to be a power of 2 and $L = n/\log_2 M$ is assumed to be an integer representing the number of M-ary symbols transmitted per codeword. If the symbol duration is $T_s$, then the transmission time for k bits is $T = LT_s$ and the transmission rate is given by
$$R = \frac{k}{T} = \frac{k}{L\,T_s} = \frac{k}{n}\cdot\frac{\log_2 M}{T_s} = R_c\,\frac{\log_2 M}{T_s} \ \text{bits/sec}$$

Bandwidth Considerations
• The dimension of the space of the encoded and modulated signals is LN, and using the dimensionality theorem as stated in Equation 4.6–5 we conclude that the minimum required transmission bandwidth is given by
$$W = \frac{N}{2\,T_s} \ \text{Hz}$$
– Using $T_s$ from the code rate consideration
$$W = \frac{N\,R}{2\,R_c \log_2 M}$$
– the resulting spectral bit rate is
$$r = \frac{R}{W} = \frac{2\log_2 M}{N}\,R_c$$

Channel Coding
• These equations indicate that, compared with an uncoded system that uses the same modulation scheme, the bit rate is changed by a factor of $R_c$ and the bandwidth is changed by a factor of $1/R_c$
– i.e., there is a decrease in rate and an increase in bandwidth.
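The rate and bandwidth relations above can be checked numerically. The following is a minimal Python sketch, not part of the original slides; the function name `coded_link_parameters`, the (8, 4) code, the QPSK choice (M = 4, N = 2) and the 1 µs symbol time are all illustrative assumptions.

```python
from math import log2

def coded_link_parameters(n, k, M, N, Ts):
    """Evaluate the code-rate and bandwidth relations for an (n, k) code."""
    Rc = k / n              # code rate R_c = k/n
    L = n / log2(M)         # M-ary symbols per codeword (assumed to be an integer)
    R = k / (L * Ts)        # bit rate R = k/(L*Ts) = Rc*log2(M)/Ts, in bits/sec
    W = N / (2 * Ts)        # minimum bandwidth from the dimensionality theorem
    r = R / W               # spectral bit rate r = R/W
    return {"Rc": Rc, "L": L, "R": R, "W": W, "r": r}

# Hypothetical example: an (8, 4) code sent with QPSK (M = 4, N = 2), Ts = 1 microsecond.
coded = coded_link_parameters(n=8, k=4, M=4, N=2, Ts=1e-6)
# Uncoded reference with the same modulation: Rc = 1 (n = k).
uncoded = coded_link_parameters(n=4, k=4, M=4, N=2, Ts=1e-6)

print(coded)
print("bit-rate ratio coded/uncoded:", coded["R"] / uncoded["R"])
```

With the symbol time held fixed, the bandwidth is the same in both cases and the bit rate drops by the factor $R_c$; holding the bit rate fixed instead would raise the bandwidth by $1/R_c$.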
Recap of the rate and bandwidth relations:
$$R = R_c\,\frac{\log_2 M}{T_s} \ \text{bits/sec}, \qquad W = \frac{N\,R}{2\,R_c \log_2 M}, \qquad r = \frac{R}{W} = \frac{2\log_2 M}{N}\,R_c$$

Energy per Bit Consideration
• If the average energy of the constellation is denoted by $E_{avg}$, then the energy per codeword, E, is given by
$$E = L\,E_{avg} = \frac{n}{\log_2 M}\,E_{avg}$$
– and $E_c$, the energy per component of the codeword, is given by
$$E_c = \frac{E}{n} = \frac{E_{avg}}{\log_2 M}$$
• The energy per transmitted data bit is denoted by $E_b$ and can be found from
$$E_b = \frac{E}{k} = \frac{E_c}{R_c} = \frac{E_{avg}}{R_c \log_2 M}$$

Summary for Modulation Schemes
• BPSK ($N = 2$): $W = R/R_c$, $r = R_c$
• QPSK ($N = 2$): $W = R/(2R_c)$, $r = 2R_c$
• M-PSK ($N = 2$): $W = R/(R_c \log_2 M)$, $r = R_c \log_2 M$
• BFSK ($N = 2$): $W = R/R_c$, $r = R_c$
• M-FSK ($N = M$): $W = MR/(2R_c \log_2 M)$, $r = 2R_c \log_2 M / M$

Linear Block Codes
k-bits to n-bits
• $2^k$ codewords in a $2^n$ space
• codewords should be at a maximum distance from each other
• dimensionality allows for bit error detection and bit error correction

LBC Generator Matrix
• Applying vector-matrix multiplication methods
• Translate a k-tuple data set to an n-tuple codeword.
$$c_m = u_m G = u_m \begin{bmatrix} g_1 \\ g_2 \\ \vdots \\ g_k \end{bmatrix} = \sum_{i=1}^{k} u_{mi}\, g_i$$
• Generator Matrix Structure
$$G = [\, I_k \mid P \,] = \begin{bmatrix} 1 & 0 & \cdots & 0 & p_{11} & p_{12} & \cdots & p_{1,(n-k)} \\ 0 & 1 & \cdots & 0 & p_{21} & p_{22} & \cdots & p_{2,(n-k)} \\ \vdots & & \ddots & \vdots & \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 & p_{k1} & p_{k2} & \cdots & p_{k,(n-k)} \end{bmatrix}$$

LBC Generator Matrix
• The P matrix structure is based on generator polynomials discussed in section 7.1-1. We will come back to these …

Parity Check Matrix
• The parity check matrix allows the checking of a codeword
– multiplying a valid codeword by $H^T$ results in the zero vector, $c_m H^T = 0$
$$H = [\, P^T \mid I_{n-k} \,]$$

MATLAB Generator and Parity Check Matrices
• MATLAB uses the Sklar generator and parity check matrices.
– The I and P sections are swapped:
$$G = [\, P \mid I_k \,], \qquad H = [\, I_{n-k} \mid P^T \,]$$
• Function calls to create the parmat and genmat are:
    [parmat,genmat] = hammgen(3)
    rem(genmat * parmat', 2)
    [parmat,genmat] = cyclgen(7,cyclpoly(7,4))
    rem(genmat * parmat', 2)

Textbook Example 7.2-1
• see MATLAB Sec7_2_1.m
    % (7,4) systematic linear block code
    n = 7; k = 4; % Set codeword length and message length.
    msg = [0 0 0 0; 0 0 0 1; 0 0 1 0; 0 0 1 1; ...
           0 1 0 0; 0 1 0 1; 0 1 1 0; 0 1 1 1; ...
           1 0 0 0; 1 0 0 1; 1 0 1 0; 1 0 1 1; ...
           1 1 0 0; 1 1 0 1; 1 1 1 0; 1 1 1 1]; % Message is a binary matrix.
    P = [ 1 0 1 ; ...
          1 1 1 ; ...
          1 1 0 ; ...
          0 1 1 ];
    genmat = [eye(4) P];
    parmat = [P' eye(3)];
    % Verify the genmat and parmat perform correctly
    SU = rem(genmat*parmat',2)
    % Produce standard array coset decoding table.
    Ucoset = syndtable(parmat);
    Ucode = rem(msg * genmat, 2)
    % Add noise.
    noisycode = rem(Ucode + randerr(2^k,n,[0 1;.7 .3]),2);
    SN = rem(noisycode * parmat',2);
    SN_de = bi2de(SN,'left-msb');
    corrvect = Ucoset(1+SN_de,:);
    correctedcode = rem(corrvect+noisycode,2)
    recvd_msg = correctedcode(:,1:k)
    % Decode, keeping track of all detected errors.
    [newmsg,err,ccode,cerr] = decode(noisycode,n,k,'linear',genmat,Ucoset)

Hamming Weight and Distance
• The Hamming weight of a code/codeword defines the performance
• The Hamming weight is defined as the number of nonzero elements in c.
– for binary, count the number of ones.
– We want the P matrix to have as many ones as possible!
– There is always one all zeros codeword … don’t count it.
• The Hamming distance between two codewords, d(c1,c2), is defined as the number of elements that differ.
– Note that for a linear code the distance from the all-zero codeword to the minimum-weight nonzero codeword equals dmin.

Example Codewords and Weights
    Ucode =            Weight =
    0 0 0 0 0 0 0      0
    0 0 0 1 0 1 1      3
    0 0 1 0 1 1 0      3
    0 0 1 1 1 0 1      4
    0 1 0 0 1 1 1      4
    0 1 0 1 1 0 0      3
    0 1 1 0 0 0 1      3
    0 1 1 1 0 1 0      4
    1 0 0 0 1 0 1      3
    1 0 0 1 1 1 0      4
    1 0 1 0 0 1 1      4
    1 0 1 1 0 0 0      3
    1 1 0 0 0 1 0      3
    1 1 0 1 0 0 1      4
    1 1 1 0 1 0 0      4
    1 1 1 1 1 1 1      7

    dmin_fn = gfweight(genmat)
    dmin_fn = 3

Minimum Distance
• The minimum Hamming distance is of interest
– dmin = 3 for the (7,4) example
• Similar to distances between symbols for symbol constellations, the Hamming distance between codewords determines how effectively a code detects and corrects bit errors.
– maximizing dmin for a set number of redundant bits is desired.
– error-correction capability
– error-detection capability

Hamming Distance Capability (coming in section 7.5)
• Error-correcting capability, t bits
$$t = \left\lfloor \frac{d_{min} - 1}{2} \right\rfloor$$
– for (7,4) dmin=3: t = 1
– Codes that correct all possible sequences of t or fewer errors may also be able to correct some patterns of t+1 errors.
• see the last coset of (6,3) – 2 bit errors!
– a t-error-correcting (n,k) linear code is capable of correcting $2^{n-k}$ error patterns!

Hamming Distance Capability (coming in section 7.5)
• Error-detection capability
$$e = d_{min} - 1$$
– a block code with a minimum distance of dmin guarantees that all error patterns of dmin-1 or fewer errors can be detected.
– for (7,4) dmin=3: e = 2 bits
– The code may also be capable of detecting some error patterns of dmin bits.
– An (n,k) code is capable of detecting $2^n - 2^k$ error patterns of length n.
• there are $2^k - 1$ error patterns that turn one codeword into another and are thereby undetectable.
• all other patterns should produce a non-zero syndrome
• therefore we have the desired number of detectable error patterns
• (6,3) 64-8=56 detectable error patterns
• (7,4) 128-16=112 detectable error patterns

Weight Distribution Polynomial
• In any linear block code there exists one codeword of weight 0, and the weights of nonzero codewords can be between dmin and n.
• The weight distribution polynomial (WEP) or weight enumeration function (WEF) of a code is a polynomial that specifies the number of codewords of different weights in a code.
$$A(Z) = \sum_{i=0}^{n} A_i Z^i = 1 + \sum_{i=d_{min}}^{n} A_i Z^i$$
$$A(0) = A_0 = 1, \qquad A(1) = \sum_{i=0}^{n} A_i = 2^k$$
• from the example
$$A(Z) = 1 + 7Z^3 + 7Z^4 + Z^7$$

Using the all 0 Codeword
• Note that for a linear block code, the set of distances seen from any codeword to other codewords is independent of the codeword from which these distances are seen.
– Therefore, in linear block codes the error bound is independent of the transmitted codeword, and thus, without loss of generality, we can always assume that the all-zero codeword 0 is transmitted.
• For this case, the squared Euclidean distance for BPSK transmission becomes
$$d_E^2(s_m) = 4\,E_b R_c\, w(c_m)$$

Distance Enumeration Function
• Based on knowing the weight distribution and Euclidean distances:
– for BPSK
$$T(X) = \sum_{i=d_{min}}^{n} A_i X^{4 R_c E_b i} = \big[A(Z) - 1\big]\Big|_{Z = X^{4 R_c E_b}}$$
– for Orthogonal BFSK
$$T(X) = \sum_{i=d_{min}}^{n} A_i X^{2 R_c E_b i} = \big[A(Z) - 1\big]\Big|_{Z = X^{2 R_c E_b}}$$

Input-output Weight Enumeration Function
• provides information about the weight of the codewords as well as the weight of the corresponding information sequences
$$B(Y,Z) = \sum_{i=0}^{n} \sum_{j=0}^{k} B_{ij}\, Y^j Z^i$$
– where $B_{ij}$ is the number of codewords of weight i that are generated by information sequences of weight j
• Functional relations
$$A(Z) = B(Y,Z)\big|_{Y=1}, \qquad B(0,0) = B_{00} = 1$$

Conditional Weight Enumeration Function
• A third form of the weight enumeration function, called the conditional weight enumeration function (CWEF), is defined by
$$B_j(Z) = \sum_{i=0}^{n} B_{ij} Z^i$$
• It represents the weight enumeration function of all codewords corresponding to information sequences of weight j.
• And
$$B_j(Z) = \frac{1}{j!}\,\frac{\partial^j}{\partial Y^j} B(Y,Z)\Big|_{Y=0}$$

Example (7,4) LBC
• Weight Distribution Polynomial
$$A(Z) = 1 + 7Z^3 + 7Z^4 + Z^7$$
• Codeword Weights
$B_{00} = 1$, $B_{31} = 3$, $B_{32} = 3$, $B_{33} = 1$, $B_{41} = 1$, $B_{42} = 3$, $B_{43} = 3$, $B_{74} = 1$
$$B(Y,Z) = 1 + 3YZ^3 + 3Y^2Z^3 + Y^3Z^3 + YZ^4 + 3Y^2Z^4 + 3Y^3Z^4 + Y^4Z^7$$
• And
$B_0(Z) = 1$, $B_1(Z) = 3Z^3 + Z^4$, $B_2(Z) = 3Z^3 + 3Z^4$, $B_3(Z) = Z^3 + 3Z^4$, $B_4(Z) = Z^7$

Hard Decision Decoding
• A hard decision is made as to whether each transmitted bit in a codeword is a 0 or a 1.
– The resulting discrete-time channel (consisting of the modulator, the AWGN channel, and the demodulator) constitutes a BSC with crossover probability p.
– If coherent PSK is employed in transmitting and receiving the bits in each codeword, then
$$p = Q\!\left(\sqrt{\frac{2E_c}{N_0}}\right) = Q\!\left(\sqrt{2\gamma_b R_c}\right)$$
– If coherent FSK is used to transmit the bits in each codeword, then
$$p = Q\!\left(\sqrt{\frac{E_c}{N_0}}\right) = Q\!\left(\sqrt{\gamma_b R_c}\right)$$

Minimum-Distance Decoding (Maximum-Likelihood)
• The n bits from the detector corresponding to a received codeword are passed to the decoder, which compares the received codeword with the M possible transmitted codewords and decides in favor of the codeword that is closest in Hamming distance (number of bit positions in which two codewords differ) to the received codeword.
• Using Syndromes and Standard Arrays …

Syndrome Testing
• Describe the received n-tuple as
$$y = c_m + e$$
– where e is the pattern of potential errors in the received vector
• As one might expect, use the check matrix to generate the syndrome
$$s = y H^T = (c_m + e) H^T = c_m H^T + e H^T$$
• but from the check matrix $c_m H^T = 0$, so
$$s = e H^T$$
– if there are no bit errors, the result is a zero vector!

Syndrome Testing
• If there are no bit errors, the syndrome is the zero vector
• If there are errors, the requirement of a linear block code is to have a one-to-one mapping between correctable errors and non-zero syndrome results.
• Error correction requires the identification of the corresponding syndrome for each of the possible errors.
– generate an array holding all $2^n$ possible received n-tuples
– this is called the standard array

Standard Array Format (n,k)
• A $2^{n-k} \times 2^k$ table
• The actual codewords are placed in the top row.
– The 1st codeword is the all-zeros codeword. It also defines the 1st coset, which has zero errors
• Each row is described as a coset with a coset leader describing a particular error.
– for an (n,k) code, there will be $2^{n-k}$ coset leaders, one of which is zero errors.
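The standard array described above can be built by brute force for a small code. The following is a minimal Python sketch, assuming the (7,4) code with the P matrix from Textbook Example 7.2-1 and $H = [P^T \mid I_{n-k}]$; the helper names `syndrome` and `build_cosets` are illustrative and do not come from the slides or from MATLAB.

```python
from itertools import product

n, k = 7, 4
# Parity sub-matrix P of the (7,4) example, so that G = [I_k | P].
P = [(1, 0, 1), (1, 1, 1), (1, 1, 0), (0, 1, 1)]

# Parity check matrix H = [P^T | I_(n-k)], stored as a list of rows.
H = [tuple(P[i][r] for i in range(k)) +
     tuple(1 if c == r else 0 for c in range(n - k))
     for r in range(n - k)]

def syndrome(y):
    """Return s = y * H^T over GF(2) for a length-n tuple y."""
    return tuple(sum(a * b for a, b in zip(y, row)) % 2 for row in H)

def build_cosets():
    """Group all 2^n binary n-tuples by syndrome; each group is one coset (row)."""
    cosets = {}
    for y in product((0, 1), repeat=n):
        cosets.setdefault(syndrome(y), []).append(y)
    return cosets

cosets = build_cosets()
# The coset leader is a minimum-weight member of its coset.
leaders = {s: min(members, key=sum) for s, members in cosets.items()}

print("number of cosets:", len(cosets))
for s in sorted(leaders):
    print("syndrome", s, "-> leader", leaders[s])
```

The grouping makes the dimensions of the array concrete: $2^{n-k}$ rows (one per syndrome) of $2^k$ entries each, with the zero-syndrome row holding the codewords themselves.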

Example 7.5-1 (5,2)
• Let us construct the standard array for the (5, 2) systematic code with generator matrix given by
parmat = 1 0 0 1 1 1 1 0 0 0 1 0 0 0 1
dmin = 3
Correct all single bit errors and two 2-bit errors

Error Detection
• Since the minimum separation between a pair of codewords is dmin, it is possible for an error pattern of weight dmin to transform one of these $2^k$ codewords in the code to another codeword. When this happens, we have an undetected error.
• On the other hand, if the actual number of errors is less than dmin, the syndrome will have a nonzero weight.
• Clearly, the (n, k) block code is capable of detecting up to dmin−1 errors.

Error Correction
• The error correction capability of a code also depends on the minimum distance. However, the number of correctable error patterns is limited by the number of possible syndromes or coset leaders in the standard array.
– To determine the error correction capability of an (n, k) code, it is convenient to view the $2^k$ codewords as points in an n-dimensional space.
– If each codeword is viewed as the center of a sphere of radius (Hamming distance) t, the largest value that t may have without intersection (or tangency) of any pair of the $2^k$ spheres is
$$t = \left\lfloor \frac{d_{min} - 1}{2} \right\rfloor$$

Code Capability
• In general, a code with minimum distance dmin can detect $e_d$ errors and correct $e_c$ errors, where
$$e_d + e_c \le d_{min} - 1, \qquad e_c \le e_d$$

Block and Bit Error Probability
• Since the binary symmetric channel is memoryless, the bit errors occur independently.
• The probability of m errors in a block of n bits is
$$P(m,n) = \binom{n}{m} p^m (1-p)^{n-m}$$
• Therefore, the probability of a codeword error is upper-bounded by the expression
$$P_e \le \sum_{m=t+1}^{n} \binom{n}{m} p^m (1-p)^{n-m}$$
– for high SNR and small p it is approximated by the first term
$$P_e \approx \binom{n}{t+1} p^{t+1} (1-p)^{n-t-1}$$

Block and Bit Error Probability
• To derive an approximate bound on the error probability of each binary symbol in a codeword, we note that
– if 0 is sent and a sequence of weight t+1 is received, the decoder will decode the received sequence of weight t+1 to a codeword at a distance at most t from the received sequence and hence a distance of at most 2t+1 from 0.
– since the minimum weight of the code is 2t+1, the decoded codeword has to be of weight 2t+1. This means that for each highly probable block error we have 2t+1 bit errors in the codeword components
– Therefore,
$$P_{be} \approx \frac{2t+1}{n} \binom{n}{t+1} p^{t+1} (1-p)^{n-t-1}$$

Perfect Codes
• To describe the basic characteristics of a perfect code, suppose we place a sphere of radius t around each of the possible transmitted codewords.
• Each sphere around a codeword contains the set of all binary sequences (n-tuples) of Hamming distance less than or equal to t from the codeword.
$$2^{n-k} \ge \sum_{i=0}^{t} \binom{n}{i}$$
• When equality holds, it is a perfect code.
– Hamming codes
– (23,12) Golay code

Coming Next
• A discussion of types of linear block codes
• A discussion of Soft Decoding …

Notes
• The following material is based on the Sklar text. I have not had the time to modify it for the Proakis textbook.

Sklar’s Communications System
Notes and figures are based on or taken from materials in the course textbook: Bernard Sklar, Digital Communications, Fundamentals and Applications, Prentice Hall PTR, Second Edition, 2001.

Signal Processing Functions

Encoding and Decoding
• Data block of k-bits is encoded using a defined algorithm into an n-bit codeword
• Codeword transmitted
• Received n-bit codeword is decoded/detected using a defined algorithm into a k-bit data block
Data Block → Encoder → Codeword
Codeword → Decoder → Data Block

Waveform Coding and Structured Sequences
• Waveform Coding:
– Transforming waveforms into “better” waveform representations
– Make signals antipodal or orthogonal
• Structured Sequences:
– Transforming waveforms into “better” waveform representations that contain redundant bits
– Use redundancy for error detection and correction
– Bit sets become longer bit sets (redundant bits) with better “properties”
– The required bit rate for transmission increases.

Antipodal and Orthogonal Signals
• Antipodal
– Distance is twice “signal voltage”
– Only works for one-dimensional signals
$$d = 2\sqrt{E_b}, \qquad z_{ij} = \frac{1}{E}\int_0^T s_i(t)\, s_j(t)\, dt = \begin{cases} 1 & \text{for } i = j \\ -1 & \text{for } i \ne j \end{cases}$$
• Orthogonal
– Orthogonal symbol set
– Works for 2 to N dimensional signals
$$d = \sqrt{2E_b}, \qquad z_{ij} = \frac{1}{E}\int_0^T s_i(t)\, s_j(t)\, dt = \begin{cases} 1 & \text{for } i = j \\ 0 & \text{for } i \ne j \end{cases}$$

M-ary Signals Waveform Coding
• Symbol represents k bits at a time
– Symbol selected based on k bits
– M-ary waveforms may be transmitted, $M = 2^k$
• Allow for the tradeoff of error probability for bandwidth efficiency
• Orthogonality of k-bit symbols
– Number of bits that agree = number of bits that disagree
$$z_{ij} = \frac{(\text{number of digit agreements}) - (\text{number of digit disagreements})}{\text{total number of digits in the sequence}} = \begin{cases} 1 & \text{for } i = j \\ 0 & \text{for } i \ne j \end{cases}$$

Hadamard Matrix Orthogonal Codes
$$H_D = \begin{bmatrix} H_{D-1} & H_{D-1} \\ H_{D-1} & \overline{H}_{D-1} \end{bmatrix}$$
• Start with the data set for one bit and generate a Hadamard code for the data set
    Data Set   Codeword (H1)
    0          0 0
    1          0 1

    Data Set   Codeword (H2 = [H1 H1; H1 ~H1])
    0 0        0 0 0 0
    0 1        0 1 0 1
    1 0        0 0 1 1
    1 1        0 1 1 0
$$z_{ij} = \begin{cases} 1 & \text{for } i = j \\ 0 & \text{for } i \ne j \end{cases}$$

Hadamard Matrix Orthogonal Codes (2)
    Data Set   Codeword (H3 = [H2 H2; H2 ~H2])
    0 0 0      0 0 0 0 0 0 0 0
    0 0 1      0 1 0 1 0 1 0 1
    0 1 0      0 0 1 1 0 0 1 1
    0 1 1      0 1 1 0 0 1 1 0
    1 0 0      0 0 0 0 1 1 1 1
    1 0 1      0 1 0 1 1 0 1 0
    1 1 0      0 0 1 1 1 1 0 0
    1 1 1      0 1 1 0 1 0 0 1

    Data Set   Codeword (H4 = [H3 H3; H3 ~H3])
    0 0 0 0    0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
    0 0 0 1    0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1
    0 0 1 0    0 0 1 1 0 0 1 1 0 0 1 1 0 0 1 1
    0 0 1 1    0 1 1 0 0 1 1 0 0 1 1 0 0 1 1 0
    0 1 0 0    0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1
    0 1 0 1    0 1 0 1 1 0 1 0 0 1 0 1 1 0 1 0
    0 1 1 0    0 0 1 1 1 1 0 0 0 0 1 1 1 1 0 0
    0 1 1 1    0 1 1 0 1 0 0 1 0 1 1 0 1 0 0 1
    1 0 0 0    0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1
    1 0 0 1    0 1 0 1 0 1 0 1 1 0 1 0 1 0 1 0
    1 0 1 0    0 0 1 1 0 0 1 1 1 1 0 0 1 1 0 0
    1 0 1 1    0 1 1 0 0 1 1 0 1 0 0 1 1 0 0 1
    1 1 0 0    0 0 0 0 1 1 1 1 1 1 1 1 0 0 0 0
    1 1 0 1    0 1 0 1 1 0 1 0 1 0 1 0 0 1 0 1
    1 1 1 0    0 0 1 1 1 1 0 0 1 1 0 0 0 0 1 1
    1 1 1 1    0 1 1 0 1 0 0 1 1 0 0 1 0 1 1 0

See Hadamard in MATLAB
    H4=hadamard(16)
    H4xcor=H4'*H4=H4*H4'
    ans = 16*eye(16)

Hadamard Matrix Orthogonal Codes (2)
• The Hadamard Code Set generates orthogonal codes for the bit representations. Letting 0 = +1 V and 1 = −1 V,
$$z_{ij} = \begin{cases} 1 & \text{for } i = j \\ 0 & \text{for } i \ne j \end{cases}$$
• For a D-bit pre-coded symbol a 2^D bit symbol is generated from a 2^D x 2^D matrix
– Bit rate increases from D-bits/sec to 2^D bits/sec!
• For equally likely, equal-energy orthogonal signals, the probability of codeword (symbol) error can be bounded as
$$P_E(M) \le (M-1)\, Q\!\left(\sqrt{\frac{E_s}{N_0}}\right), \qquad M = 2^D, \quad E_s = D\,E_b$$

Symbol error to Bit Error
• Discussed in chapter 4 (Equ 4.112)
– k-bit set (codeword)
– 4.9.3 Bit error probability versus symbol error probability for orthogonal signals
$$\frac{P_B(k)}{P_E(k)} = \frac{2^{k-1}}{2^k - 1} \qquad \text{or} \qquad \frac{P_B(M)}{P_E(M)} = \frac{M/2}{M-1}, \qquad M = 2^k$$
• Substituting bit error probability for symbol error probability in the bounds previously stated
$$P_E(M) \le (M-1)\, Q\!\left(\sqrt{\frac{E_s}{N_0}}\right)$$
$$P_B(k) \le 2^{k-1}\, Q\!\left(\sqrt{\frac{k E_b}{N_0}}\right) \qquad \text{or} \qquad P_B(M) \le \frac{M}{2}\, Q\!\left(\sqrt{\frac{E_s}{N_0}}\right)$$

Biorthogonal Codes
• Code sets with cross correlation of 0 or -1
$$z_{ij} = \begin{cases} 1 & \text{for } i = j \\ -1 & \text{for } i \ne j,\ |i - j| = M/2 \\ 0 & \text{for } i \ne j,\ |i - j| \ne M/2 \end{cases}$$
• Based on Hadamard codes (2-bit data set shown)
$$B_D = \begin{bmatrix} H_{D-1} \\ \overline{H}_{D-1} \end{bmatrix}$$
    Data Set   Codeword (B2 = [H1; ~H1])
    0 0        0 0
    0 1        0 1
    1 0        1 1
    1 1        1 0

Biorthogonal Codes (2)
    Data Set   Codeword (B3 = [H2; ~H2])
    0 0 0      0 0 0 0
    0 0 1      0 1 0 1
    0 1 0      0 0 1 1
    0 1 1      0 1 1 0
    1 0 0      1 1 1 1
    1 0 1      1 0 1 0
    1 1 0      1 1 0 0
    1 1 1      1 0 0 1

    Data Set   Codeword (B4 = [H3; ~H3])
    0 0 0 0    0 0 0 0 0 0 0 0
    0 0 0 1    0 1 0 1 0 1 0 1
    0 0 1 0    0 0 1 1 0 0 1 1
    0 0 1 1    0 1 1 0 0 1 1 0
    0 1 0 0    0 0 0 0 1 1 1 1
    0 1 0 1    0 1 0 1 1 0 1 0
    0 1 1 0    0 0 1 1 1 1 0 0
    0 1 1 1    0 1 1 0 1 0 0 1
    1 0 0 0    1 1 1 1 1 1 1 1
    1 0 0 1    1 0 1 0 1 0 1 0
    1 0 1 0    1 1 0 0 1 1 0 0
    1 0 1 1    1 0 0 1 1 0 0 1
    1 1 0 0    1 1 1 1 0 0 0 0
    1 1 0 1    1 0 1 0 0 1 0 1
    1 1 1 0    1 1 0 0 0 0 1 1
    1 1 1 1    1 0 0 1 0 1 1 0

B3 Example
    H2=hadamard(4)
    B3=[H2;-H2]
    B3*B3'
    ans =
     4  0  0  0 -4  0  0  0
     0  4  0  0  0 -4  0  0
     0  0  4  0  0  0 -4  0
     0  0  0  4  0  0  0 -4
    -4  0  0  0  4  0  0  0
     0 -4  0  0  0  4  0  0
     0  0 -4  0  0  0  4  0
     0  0  0 -4  0  0  0  4

Biorthogonal Codes (3)
• Biorthogonal codes require half as many code bits per code word as Hadamard.
– The required bandwidth would be one-half
– Due to some antipodal codes, they perform better
• For equally likely, equal-energy biorthogonal signals, the probability of codeword (symbol) error can be bounded as
$$P_E(M) \le (M-2)\, Q\!\left(\sqrt{\frac{E_s}{N_0}}\right) + Q\!\left(\sqrt{\frac{2E_s}{N_0}}\right)$$
$$P_B(M) \approx \frac{P_E(M)}{2} \quad \text{for } M > 8, \qquad M = 2^{k-1}, \quad E_s = k\,E_b$$

Biorthogonal Codes (4)
• Comparing Hadamard to Biorthogonal
– Note that for D bits, M is ½ the size for Biorthogonal
Biorthogonal:
$$P_B \approx \frac{1}{2}\left[(M-2)\, Q\!\left(\sqrt{\frac{E_s}{N_0}}\right) + Q\!\left(\sqrt{\frac{2E_s}{N_0}}\right)\right], \qquad M = 2^{D-1}, \quad E_s = k\,E_b$$
Hadamard:
$$P_B \le \frac{M}{2}\, Q\!\left(\sqrt{\frac{E_s}{N_0}}\right), \qquad M = 2^k, \quad E_s = k\,E_b$$

Waveform Coding System Example
• For a k-bit precoded symbol, one of M generators creates an encoded waveform.
• For a Hadamard Code, 2^k bits replace the k-bit input

Waveform Coding System Example (2)
• This bit sequence can be PSK modulated (an antipodal binary system) for transmission over M time periods of length Tc.
$$T = 2^k\, T_c = M\, T_c$$
• The symbol rate is 1/(M Tc)
$$R_S = \frac{1}{T} = \frac{1}{2^k\, T_c}$$
• For real-time transmission, the code word transmission rate and the k-bit input data rate must be identical.
– Codeword bits are shorter than the original message bits
$$R = \frac{1}{T_b}, \qquad R_S = \frac{R}{k} = \frac{1}{k\,T_b} = \frac{1}{T} = \frac{1}{2^k\, T_c}$$
$$T = k\,T_b = 2^k\, T_c, \qquad T_c = \frac{k}{2^k}\, T_b$$

Waveform Coding System Example (3)
• The coherent detector now works across multiple PSK bits representing a symbol

Waveform Coding System Example (4)
Stated Improvement
• For k=5, detection can be accomplished with about 2.9 dB less Eb/No!
$$T_c = \frac{k}{2^k}\, T_b$$
– Required bandwidth is 32/5=6.4 times the initial data word rate.
• Homework problem 6.28
– Compare PB of BPSK to the PB for orthogonally encoded symbols at a predefined BER. Solution set uses 10^-5.

Types of Error Control
• Error detection and retransmission
– Parity bits
– Cyclic redundancy checking
– Often used when two-way communication is used. Also used when low bit-error-rate channels are used.
• Forward Error Correction
– Redundant bits for detection and correction of bit errors incorporated into the sequence
– Structured sequences.
– Often used for one-way, noisy channels. Also used for real-time sequences that cannot afford retransmission time.

Structured Sequence
• Encoded sequences with redundancy
• Types of codes
– Block Codes or Linear Block Codes (Sklar Ch. 6, Proakis Ch. 7)
– Convolutional Codes (Sklar Ch. 7, Proakis Ch. 8)
– Turbo Codes (Sklar Chap. 8, Proakis Ch. 8)

Channel Models
• Discrete Memoryless Channel (DMC)
– A discrete input alphabet, a discrete output alphabet, and a set of conditional probabilities of the outputs given each particular input.
• Binary Symmetric Channel
– A special case of DMC where the input alphabet and output alphabets consist of binary elements. The conditional probabilities are symmetric: p and 1-p.
– Hard decisions based on the binary output alphabet are performed.
• Gaussian Channels
– A discrete input alphabet, a continuous output alphabet
– Soft decisions based on the continuous output alphabet are performed.

Code Rate Redundancy
• For block codes, source data is segmented into k bit data blocks.
• The k-bits are encoded into larger n-bit blocks.
• The additional n-k bits are redundant bits, parity bits, or check bits.
• The codes are referred to as (n,k) block codes.
– The ratio of redundant to data bits is (n-k)/k.
– The ratio of the data bits to total bits, k/n, is referred to as the code rate. Therefore a rate ½ code is double the length of the underlying data.

Binary Bit Error Probability
• Defining the probability of j errors in a block of n symbols/bits where p is the probability of an error (Binomial Probability Law).
$$P(j,n) = \binom{n}{j} p^j (1-p)^{n-j} = \frac{n!}{j!\,(n-j)!}\, p^j (1-p)^{n-j}$$
• The probability of j failures in n Bernoulli trials
$$E[x] = n\,p, \qquad \sigma_x^2 = n\,p\,(1-p)$$

Code Example Triple Redundancy
• Encode a 0 or 1 as 000 or 111
– Single bit errors detected and corrected
$$P(j,3) = \frac{3!}{j!\,(3-j)!}\, p^j (1-p)^{3-j}$$
• Assume $p = 10^{-3}$
$$P(0,3) = \frac{3!}{0!\,3!}\,(0.999)^3 = 0.997$$
$$P(1,3) = \frac{3!}{1!\,2!}\,(0.001)(0.999)^2 = 3\,(0.001)(0.999)^2 = 0.002994$$
$$P(2,3) = \frac{3!}{2!\,1!}\,(0.001)^2(0.999) = 3\,(0.001)^2(0.999) = 0.000002997$$
$$P(3,3) = \frac{3!}{3!\,0!}\,(0.001)^3 = 10^{-9}$$

Code Example Triple Redundancy
• Probability of two or more errors
$$\Pr(x \ge 2) = P(2,3) + P(3,3) = 2.998 \times 10^{-6} \approx 3 \times 10^{-6}$$
• Probability of one or no errors
$$\Pr(x < 2) = P(0,3) + P(1,3) = 1 - \Pr(x \ge 2) = 1 - 2.998 \times 10^{-6} = 0.999997$$
• If the raw bit error rate of the environment is $p = 10^{-3}$ …
• The probability of decoding the transmitted bit incorrectly drops to about $3 \times 10^{-6}$

Parity-Check Codes
• Simple parity – single parity check code
– Add a single bit to a defined set of bits to force the “sum of bits” to be even (0) or odd (1).
– A rate k/(k+1) code
– Useful for bit error detection
• Rectangular Code (also called a Product Code)
– Add row and column parity bits to a rectangular set of bits/symbols and a row or column parity bit for the parity row or column
– For an m x n block, (m+1) x (n+1) bits are sent
– A rate mn/((m+1)(n+1)) code
– Correct single bit errors!

(4,3) Single-Parity-Code
• (4,3) even-parity code example
– 8 legal codewords, 8 “illegal” code words to detect single bit errors
– Note: an even number of bit errors cannot be detected, only odd
– The probability of an undetected message error
$$P_{nd} = \sum_{j=1}^{J} P(2j,n) = \sum_{j=1}^{J} \frac{n!}{(2j)!\,(n-2j)!}\, p^{2j} (1-p)^{n-2j}, \qquad J = \begin{cases} n/2 & (n \text{ even}) \\ (n-1)/2 & (n \text{ odd}) \end{cases}$$
    Message   Parity   Codeword
    000       0        0 000
    001       1        1 001
    010       1        1 010
    011       0        0 011
    100       1        1 100
    101       0        0 101
    110       0        0 110
    111       1        1 111

Matlab Results
    p = 1e-3;
    n=4
    Perror = 0
    for j=2:2:n
        jfactor = factorial(n)/(factorial(j)*factorial(n-j))
        jPe = jfactor * p^j * (1-p)^(n-j)
        Perror = Perror + jPe
    end

    n = 4.00000000000000e+000
    Perror = 0.00000000000000e+000
    jfactor = 6.00000000000000e+000
    jPe = 5.98800600000000e-006      (2 bit errors)
    Perror = 5.98800600000000e-006
    jfactor = 1.00000000000000e+000
    jPe = 1.00000000000000e-012      (4 bit errors)
    Perror = 5.98800700000000e-006   (probability of an undetected message error)
see ParityBER.m

Matlab Results
• Computing the probability of a message error
– Channel bit size the parity is added to: 2 to 16
– Raw BER = 10^-3
– Compare with parity and without (n x p)
[Figure: Probability (log scale, 10^-6 to 10^0) versus Bits with Parity Transmitted (2 to 16); legend text as extracted: "no errors detected errors not detected"]

Rectangular Code
• Add row and column parity bits to a rectangular set of bits/symbols and a row or column parity bit for the parity row or column
• For an m x n block, (m+1) x (n+1) bits are sent
• A rate mn/((m+1)(n+1)) code
• Correct single bit errors!
– Identify parity error row and column, then fix the “wrong bit”

Rectangular Code Example
• 5 x 5 bit array: 25 total bits
• 6 x 6 encoded array: 36 total bits
• A (36,25) code
• Compute the probability that there is an undetected error message
– The block error or word error becomes: j errors in blocks of n symbols, with t the number of bit errors that can be corrected. (note t=1 corrected)
$$P_M \le \sum_{j=t+1}^{n} \binom{n}{j} p^j (1-p)^{n-j}, \qquad P_M \approx \binom{n}{t+1} p^{t+1} (1-p)^{n-t-1}$$

Error-Correction Code Tradeoffs
• Improve message error/bit error rate performance
• Tradeoffs
– Error performance vs. bandwidth
• More bits per sec implies more bandwidth
– Power Output vs. bandwidth
• Lowering Eb/No changes the BER; to get it back, encode and increase bandwidth
• If communication need not be in real time … sending more bits improves BER at the cost of latency!
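The block error bound for the (36,25) rectangular code example above can be evaluated directly. The following is a minimal Python sketch rather than the course's MATLAB script; the function names are illustrative, and the raw bit error rate p = 10^-3 is assumed to match the earlier parity examples. The uncoded 25-bit comparison is an added reference point, not a result from the slides.

```python
from math import comb

def block_error_bound(n, t, p):
    """Upper bound on message error: probability of more than t errors in n bits."""
    return sum(comb(n, j) * p**j * (1 - p)**(n - j) for j in range(t + 1, n + 1))

def first_term(n, t, p):
    """Dominant (j = t+1) term of the bound, used as the small-p approximation."""
    return comb(n, t + 1) * p**(t + 1) * (1 - p)**(n - t - 1)

p = 1e-3                                   # assumed raw channel bit error rate
bound = block_error_bound(36, 1, p)        # (36,25) rectangular code, t = 1
approx = first_term(36, 1, p)
uncoded = 1 - (1 - p)**25                  # any error in an unprotected 25-bit block

print("bound   :", bound)
print("approx  :", approx)
print("uncoded :", uncoded)
```

The sum is dominated by its first term when p is small, which is why the slides quote the single-term approximation.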

Coding Gain
• For a given bit-error probability, the relief or reduction in required Eb/No
$$\text{CodeGain (dB)} = \left(\frac{E_b}{N_0}\right)_{uncoded} (\text{dB}) - \left(\frac{E_b}{N_0}\right)_{coded} (\text{dB})$$

Data Rate vs. Bandwidth
• From previous SNR discussions
$$\frac{E_b}{N_0} = \frac{P_S}{P_N}\cdot\frac{W}{R}, \qquad R = \frac{1}{T}$$
$$\frac{E_b}{N_0} = \frac{P_S}{N_0 W}\cdot\frac{W}{R} = \frac{P_S}{N_0}\cdot\frac{1}{R}$$
• The tradeoff between Eb/No and bit rate

Linear Block Codes
• Encoding a “message space” k-tuple into a “code space” n-tuple with an (n,k) linear block code
– maximize the distance between code words
– Use as efficient an encoding as possible
– $2^k$ message vectors are possible (for example M k-tuples to send)
– $2^n$ message codes are possible (M n-tuples after encoding)
• Encoding methodology
– A giant table lookup
– Generators that can span the subspace to create the desired linearly independent sets can be defined

Generator Matrix
• We wish to define an n-tuple codeword U
$$U = m_1 V_1 + m_2 V_2 + \cdots + m_k V_k$$
where $m_i$ are the message bits (k-tuple elements), and $V_i$ are k linearly independent n-tuples that span the space.
– This allows us to think of coding as a vector-matrix multiplication with m a 1 x k vector and V a k x n matrix
• If a generator matrix composed of the elements of V can be defined
$$U = m\,G = m \begin{bmatrix} V_1 \\ V_2 \\ \vdots \\ V_k \end{bmatrix} = m \begin{bmatrix} v_{11} & v_{12} & \cdots & v_{1n} \\ v_{21} & v_{22} & \cdots & v_{2n} \\ \vdots & & & \vdots \\ v_{k1} & v_{k2} & \cdots & v_{kn} \end{bmatrix}$$

Table 6.1 Example
    % (6,3) linear block code of Sklar Table 6.1
    n = 6; k = 3; % Set codeword length and message length.
    msg = [0 0 0; 0 0 1; 0 1 0; 0 1 1; ...
           1 0 0; 1 0 1; 1 1 0; 1 1 1]; % Message is a binary matrix.
    gen = [ 1 1 0 1 0 0; ...
            0 1 1 0 1 0; ...
            1 0 1 0 0 1];
    code = rem(msg * gen, 2)

    code =
    0 0 0 0 0 0
    1 0 1 0 0 1
    0 1 1 0 1 0
    1 1 0 0 1 1
    1 1 0 1 0 0
    0 1 1 1 0 1
    1 0 1 1 1 0
    0 0 0 1 1 1

Systematic Linear Block Codes
• The generator preserves part of the message in the resulting codeword
$$G = [\, P \mid I_k \,] = \begin{bmatrix} p_{11} & p_{12} & \cdots & p_{1,(n-k)} & 1 & 0 & \cdots & 0 \\ p_{21} & p_{22} & \cdots & p_{2,(n-k)} & 0 & 1 & \cdots & 0 \\ \vdots & & & \vdots & \vdots & & \ddots & \vdots \\ p_{k1} & p_{k2} & \cdots & p_{k,(n-k)} & 0 & 0 & \cdots & 1 \end{bmatrix}$$
• P is the parity array and I regenerates the message bits
– Note the previous example uses this format
• Matlab generators
– hammgen or
– POL = cyclpoly(N, K, OPT) finds cyclic code generator polynomial(s) for a given code word length N and message length K.

Systematic Code Vectors
• Because of the structure of the generators
$$G = [\, P \mid I_k \,]$$
• The codeword becomes
$$U = m\,G = [\, p \mid m \,]$$
where p are the parity bits generated for the message m

Parity Check Matrix
• The parity check matrix allows the checking of a codeword
– It should be trivial if there are no errors … but if there are?! Syndrome testing
$$H = [\, I_{n-k} \mid P^T \,], \qquad H^T = \begin{bmatrix} I_{n-k} \\ P \end{bmatrix}$$
$$U H^T = m\,[\, P \mid I_k \,]\, H^T = m\,[\, P \mid I_k \,] \begin{bmatrix} I_{n-k} \\ P \end{bmatrix} = m\,(P + P) = 0$$

Parity Check Matrix
• Let the message be I
$$U H^T = I_k\,[\, P \mid I_k \,]\, H^T = [\, P \mid I_k \,] \begin{bmatrix} I_{n-k} \\ P \end{bmatrix} = P + P = 0$$
• The parity check matrix results in a zero vector, representing the “combination” of the parity bits.
    [parmat,genmat] = hammgen(3)
    rem(genmat * parmat', 2)
    [parmat,genmat] = cyclgen(7,cyclpoly(7,4))
    rem(genmat * parmat', 2)

Syndrome Testing
• Describe the received n-tuple as
$$r = U + e$$
– where e is the pattern of potential errors in the received vector
• As one might expect, use the check matrix to generate the syndrome of r
$$S = r H^T = (U + e) H^T = U H^T + e H^T$$
• but from the check matrix $U H^T = 0$, so
$$S = e H^T$$
– if there are no bit errors, the result is a zero vector!

Syndrome Testing
• If there are no bit errors, the syndrome is the zero vector
• If there are errors, the requirement of a linear block code is to have a one-to-one mapping between correctable errors and non-zero syndrome results.
• Error correction requires the identification of the corresponding syndrome for each of the possible errors.
– generate an array holding all $2^n$ possible received n-tuples
– this is called the standard array

Standard Array Format (n,k)
• The actual codewords are placed in the top row.
– The 1st codeword is the all-zeros codeword. It also defines the 1st coset, which has zero errors
• Each row is described as a coset with a coset leader describing a particular error.
– for an (n,k) code, there will be $2^{n-k}$ coset leaders, one of which is zero errors.

Figure 6.11

Decoding with the Standard Array
• The n-tuple received can be located somewhere in the standard array.
– If there is an error, the corrupted codeword is replaced by the codeword at the top of the column.
– The standard array contains all possible n-tuples, it is 2^(n-k) x 2^k in size; therefore, all n-tuples are represented.
– There are 2^(n-k) cosets

The Syndrome of a Coset
• Computing the syndrome of the jth coset for the ith codeword
$$S = (U_i + e_j) H^T = U_i H^T + e_j H^T = e_j H^T$$
– the syndrome is identical for the entire coset
– Thus, coset is really a name defined for “a set of numbers having a common feature”
– The syndrome uniquely defines the error pattern

Error Correction
• Now that we know that the syndrome identifies a coset, we can identify the coset and perform error correction regardless of the n-tuple transmitted.
• The procedure
1. Calculate the syndrome of r
2. Locate the coset leader whose syndrome equals the syndrome of r
3. This coset leader is the assumed corruption of a valid n-tuple
4. Form the corrected codeword by “adding” the coset leader to the corrupted codeword n-tuple.
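The four-step procedure above can be sketched for the (6,3) code of the Table 6.1 example. This is a minimal Python illustration, assuming $G = [P \mid I_k]$ and $H = [I_{n-k} \mid P^T]$ as on the slides; the helper names (`encode`, `syndrome`, `build_leader_table`, `correct`) are hypothetical and are not MATLAB toolbox functions. Where several minimum-weight patterns share a syndrome, this sketch keeps the first one it meets, which may differ from the coset leader chosen in the textbook's standard array.

```python
from itertools import product

n, k = 6, 3
G = [(1, 1, 0, 1, 0, 0),      # generator matrix, G = [P | I_k]
     (0, 1, 1, 0, 1, 0),
     (1, 0, 1, 0, 0, 1)]
H = [(1, 0, 0, 1, 0, 1),      # parity check matrix, H = [I_(n-k) | P^T]
     (0, 1, 0, 1, 1, 0),
     (0, 0, 1, 0, 1, 1)]

def encode(m):
    """U = m*G over GF(2)."""
    return tuple(sum(m[i] * G[i][c] for i in range(k)) % 2 for c in range(n))

def syndrome(r):
    """S = r*H^T over GF(2)."""
    return tuple(sum(a * b for a, b in zip(r, row)) % 2 for row in H)

def build_leader_table():
    """Map each syndrome to a lowest-weight error pattern (its coset leader)."""
    table = {}
    for e in sorted(product((0, 1), repeat=n), key=sum):  # lightest patterns first
        table.setdefault(syndrome(e), e)
    return table

LEADERS = build_leader_table()

def correct(r):
    """Steps 1-4: syndrome, coset leader lookup, add the leader to r."""
    e = LEADERS[syndrome(r)]
    return tuple((a + b) % 2 for a, b in zip(r, e))

U = encode((1, 0, 1))
r = tuple(b ^ 1 if i == 0 else b for i, b in enumerate(U))   # flip the first bit
print("sent     :", U)
print("received :", r)
print("corrected:", correct(r))
print("message  :", correct(r)[n - k:])   # message bits are the last k positions
```

Because the table is keyed by syndrome, the decoder stores only $2^{n-k} = 8$ entries rather than the full 64-entry standard array.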
Error Correction Example
• see Table61_example
  – each step of the process is shown in Matlab for the (6,3) code of the text

Decoder Implementation

Hamming Weight and Distance
• The Hamming weights and distances of a code's codewords determine its performance
• The Hamming weight of a codeword U, w(U), is defined as the number of nonzero elements in U.
  – for binary, count the number of ones.
• The Hamming distance between two codewords, d(U,V), is defined as the number of elements that differ.

    Ucode =          w(U) = d(U0,U)
    0 0 0 0 0 0      0
    1 0 1 0 0 1      3
    0 1 1 0 1 0      3
    1 1 0 0 1 1      4
    1 1 0 1 0 0      3
    0 1 1 1 0 1      4
    1 0 1 1 1 0      4
    0 0 0 1 1 1      3

Minimum Distance
• The minimum Hamming distance is of interest
  – $d_{min} = 3$ for the (6,3) example
• Similar to distances between symbols in a symbol constellation, the Hamming distance between codewords determines how effectively a code detects and corrects bit errors.
  – maximizing $d_{min}$ for a set number of redundant bits is desired.
  – error-correction capability
  – error-detection capability

Hamming Distance Capability
• Error-correcting capability, t bits
$$t = \left\lfloor \frac{d_{min} - 1}{2} \right\rfloor$$
  – for (6,3) $d_{min} = 3$: t = 1
  – Codes that correct all possible sequences of t or fewer errors may also be able to correct some patterns of t+1 errors.
    • see the last coset of (6,3) – 2 bit errors!
  – a t-error-correcting (n,k) linear code is capable of correcting a total of $2^{n-k}$ error patterns (one per coset leader)!
  – an upper bound on the probability of message error is
$$P_M \le \sum_{j=t+1}^{n} \binom{n}{j} p^j (1-p)^{n-j}$$

Hamming Distance Capability
• Error-detection capability
$$e = d_{min} - 1$$
  – a block code with a minimum distance of $d_{min}$ guarantees that all error patterns of $d_{min}-1$ or fewer errors can be detected.
  – for (6,3) $d_{min} = 3$: e = 2 bits
  – The code may also be capable of detecting some error patterns of $d_{min}$ or more bits.
  – An (n,k) code is capable of detecting $2^n - 2^k$ error patterns of length n.
    • there are $2^k - 1$ error patterns that turn one codeword into another and are thereby undetectable.
    • all other patterns should produce a non-zero syndrome
    • therefore we have the desired number of detectable error patterns
    • (6,3): 64 - 8 = 56 detectable error patterns

Codeword Weight Distribution
• The weight distribution is the number of codewords $A_j$ with each particular Hamming weight j
  – for (6,3), 4 codewords have weight 3 (= $d_{min}$) and 3 have weight 4
  – If the code is used only for error detection on a BSC, the probability that the decoder does not detect an error can be computed from the weight distribution of the code.
$$P_{nd} = \sum_{j=1}^{n} A_j\, p^j (1-p)^{n-j}$$
  – for (6,3) A(0)=1, A(3)=4, A(4)=3, all else A(i)=0
$$P_{nd} = 4 p^3 (1-p)^3 + 3 p^4 (1-p)^2 = \left[ 4(1-p) + 3p \right] p^3 (1-p)^2 = (4 - p)\, p^3 (1-p)^2$$
  – for p = 0.01: $P_{nd} \approx 3.9 \times 10^{-6}$

Simultaneous Error Correction and Detection
• It is possible to trade capability from the maximum guaranteed (t) for the ability to simultaneously detect a class of errors.
• A code can be used for simultaneous correction of $\alpha$ errors and detection of $\beta$ errors, for $\beta \ge \alpha$, provided
$$d_{min} \ge \alpha + \beta + 1$$

Visualization of (6,3) Codeword Spaces

MATLAB References
• see http://www.mathworks.com/help/comm/error-detection-and-correction.html
  – http://www.mathworks.com/help/comm/block-coding.html
  – http://www.mathworks.com/help/comm/linear-block-codes.html
  – http://www.mathworks.com/help/comm/bch-codes.html

(8,2) Block Code
• see: BlockCode82.m
  – A general script for block codes

Block Codes
• Now that you have seen how to work with block codes …
• How do we select or generate a code?
  – from p. 348
  – “We would like for the space to be filled with as many codewords as possible (efficient utilization of the added redundancy), and we would like the codewords to be as far from one another as possible.
Obviously, these goals conflict.”

Code Design: Hamming Bound
• The Hamming bound describes:
  – the number of parity bits needed
$$n - k \ge \log_2 \left[ 1 + \binom{n}{1} + \binom{n}{2} + \cdots + \binom{n}{t} \right]$$
  – the number of cosets is then
$$2^{n-k} \ge 1 + \binom{n}{1} + \binom{n}{2} + \cdots + \binom{n}{t}$$

Defining Code Length
• t describes the number of bit corrections
$$d_{min} \ge 2t + 1$$
• Minimum non-trivial k = 2
  – if selected, an (n,2) code is needed
• Allow t = 2 (so $d_{min} \ge 5$)
$$n - k \ge \log_2 \left[ 1 + \binom{n}{1} + \binom{n}{2} \right]$$
  – The Hamming bound might suggest n = 7 (standard array size of $4 \times 29 = 116 < 2^7 = 128$)
  – But for small codes, the Plotkin bound must also be met
  – we will need
$$d_{min} \le \frac{n \cdot 2^{k-1}}{2^k - 1}$$

Defining Code Length
• Using the Plotkin bound for n = 7
$$d_{min} \le \frac{n \cdot 2^{k-1}}{2^k - 1} = \frac{7 \cdot 2}{4 - 1} = \frac{14}{3} = 4\tfrac{2}{3} < 5$$
• Therefore n = 8 is the smallest possible code length (for n = 8 the bound is $16/3 = 5\tfrac{1}{3} \ge 5$)
  – as we saw, the (8,2) code corrects 1, 2 and some 3 bit errors

Cyclic Codes
• An important subclass of linear block codes
• Easily implemented using a feedback shift register
  – every cyclic shift of a codeword forms another codeword
• The components of the codeword can be treated as the coefficients of a polynomial
$$U(X) = u_0 + u_1 X + u_2 X^2 + \cdots + u_{n-1} X^{n-1}$$

Algebraic Structure of Cyclic Codes
• Based on
$$U(X) = u_0 + u_1 X + u_2 X^2 + \cdots + u_{n-1} X^{n-1}$$
  – U(X) is a degree (n-1) codeword polynomial with the property
$$\frac{X^i\, U(X)}{X^n + 1} = q(X) + \frac{U^{(i)}(X)}{X^n + 1}$$
$$X^i\, U(X) = q(X) \left( X^n + 1 \right) + U^{(i)}(X)$$
  – where the final term is the remainder of the division process and it is also a codeword
  – The remainder can also be described in terms of modulo arithmetic as
$$U^{(i)}(X) = X^i\, U(X) \ \text{modulo} \ (X^n + 1)$$

Example
$$U(X) = u_0 + u_1 X + u_2 X^2 + \cdots + u_{n-1} X^{n-1}$$
• Multiply a codeword by X and add $u_{n-1}$ “twice”, which is equal to adding zero in a binary sense
$$X\, U(X) = u_{n-1} + u_0 X + u_1 X^2 + u_2 X^3 + \cdots + u_{n-2} X^{n-1} + u_{n-1} X^n + u_{n-1}$$
$$X\, U(X) = u_{n-1} + u_0 X + u_1 X^2 + u_2 X^3 + \cdots + u_{n-2} X^{n-1} + u_{n-1} \left( X^n + 1 \right)$$
• Reducing the final term modulo $X^n + 1$ results in a new codeword
$$U^{(1)}(X) = u_{n-1} + u_0 X + u_1 X^2 + u_2 X^3 + \cdots + u_{n-2} X^{n-1}$$
• Repeat for the rest of the codewords ….
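The example above (multiply by X, then reduce modulo $X^n + 1$) can be checked numerically. The sketch below is a hedged illustration in base MATLAB with hypothetical variable names; the codeword $U(X) = 1 + X^2 + X^6$ is an assumed example, taken from the last row of the (7,4) Hamming generator matrix listed later in these notes.

```matlab
% Cyclic shift as X*U(X) modulo (X^n + 1); coefficients in ascending powers.
U = [1 0 1 0 0 0 1];              % U(X) = 1 + X^2 + X^6, n = 7 (assumed codeword)
n = numel(U);

XU = [0 U];                       % X*U(X): every coefficient moves up one power
% Reduce modulo X^n + 1: the X^n term wraps around to the X^0 term.
U1 = XU(1:n);
U1(1) = mod(U1(1) + XU(n+1), 2);

% The same result obtained by rotating the n-tuple one place to the right.
U1_shift = circshift(U, [0 1]);
```

Here the wrapped result is 1 1 0 1 0 0 0, that is $1 + X + X^3$, which agrees with the rotated n-tuple.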
Binary Cyclic Codes
• We can generate a cyclic code using a generator polynomial in much the same way that we generated a block code using a generator matrix.
  – The generator polynomial g(X) for an (n,k) cyclic code is unique and is of the form (with the requirement that $g_0 = 1$ and $g_p = 1$)
$$g(X) = g_0 + g_1 X + g_2 X^2 + \cdots + g_p X^p$$
  – Every codeword in the space is of the form
$$U(X) = m(X)\, g(X)$$
  – with the message polynomial
$$m(X) = m_0 + m_1 X + m_2 X^2 + \cdots + m_{n-p-1} X^{n-p-1}$$
  – such that k-1 = n-p-1, or the number of parity bits is
$$p = n - k$$

Binary Cyclic Codes
• U is said to be a valid codeword of the subspace S if, and only if, g(X) divides into U(X) without a remainder.
$$U(X) = m(X)\, g(X)$$
  – each cyclic shift $U^{(i)}(X)$ is itself a codeword and is therefore also a multiple of g(X)
• A generator polynomial g(X) of an (n,k) cyclic code is a factor of $X^n + 1$. (A result of the codewords being cyclic.)
• Therefore some example generators are
$$X^7 + 1 = \left( X^3 + X + 1 \right) \left( X^4 + X^2 + X + 1 \right)$$
  – $g(X) = X^3 + X + 1$ for (7,4), with p = 3
  – $g(X) = X^4 + X^2 + X + 1$ for (7,3), with p = 4

Systematic Encoding
• Output the message and simultaneously feed the message to the LFSR
• When the message is done, the parity bits are in the encoder and can be output.
• Initialize the encoder and restart for the next message.
• Syndrome computation is performed in a similar manner.

Cyclic Block Code
• With proper manipulation, linear combinations of cyclic codewords can be used to recreate the generator and parity matrices of block codes.
• Then processing could also be performed as previously shown.

Well-Known Block Codes
• Hamming Codes
  – minimum distance of 3, single error correction codes.
  – known as perfect codes, codes where the standard array has all patterns of t or fewer errors and no others as coset leaders (that is, no residual error-correcting capacity)
• Extended Golay Codes
• Bose-Chaudhuri-Hocquenghem (BCH) Codes
  – powerful class of cyclic codes
  – generalization of Hamming codes that allows multiple error correction

Hamming Codes
• Hamming codes are characterized by a single integer m that creates codes of
$$(n, k) = \left( 2^m - 1,\ 2^m - 1 - m \right)$$
  – (3,1), (7,4), (15,11), (31,26), etc.
  – $d_{min} = 3$, t = 1, e = 2 … a perfect code

Code Generation Algorithm
• See Wikipedia: http://en.wikipedia.org/wiki/Hamming_code
• In MATLAB see the function hammgen
  – Produce parity-check and generator matrices for Hamming code.
  – H = hammgen(M) produces the parity-check matrix H for a given integer M, M >= 3. The code length of a Hamming code is N=2^M-1. The message length is K = 2^M - M - 1. The parity-check matrix is an M-by-N matrix.
  – [H, G, N, K] = hammgen(...) produces the parity-check matrix H as well as the generator matrix G, and provides the codeword length N and the message length K.

MATLAB hammgen
    [H, G, N, K] = hammgen(3)
    H =
         1 0 0 1 0 1 1
         0 1 0 1 1 1 0
         0 0 1 0 1 1 1
    G =
         1 1 0 1 0 0 0
         0 1 1 0 1 0 0
         1 1 1 0 0 1 0
         1 0 1 0 0 0 1
    N =
         7
    K =
         4

Performance Improvement
• Assume BPSK transmission with coherent detection.
• With p the channel symbol error probability, the block error and decoded bit error probabilities are
$$P_S \le \sum_{j=t+1}^{n} \binom{n}{j} p^j (1-p)^{n-j}$$
$$P_B \approx \frac{1}{n} \sum_{j=t+1}^{n} j \binom{n}{j} p^j (1-p)^{n-j}$$
• For performance over a Gaussian channel we have
$$p = Q\left( \sqrt{\frac{2 E_c}{N_0}} \right), \qquad \frac{E_c}{N_0} = \frac{k}{n} \cdot \frac{E_b}{N_0}$$
  – with the results plotted in Figure 6.22 on the following slide.

Comparative Code Performance
Figure 6.22

Extended Golay Code
• The binary (24,12) code generated from the perfect (23,12) Golay code.
  – A rate ½ code.
  – $d_{min} = 8$
  – Wikipedia: http://en.wikipedia.org/wiki/Binary_Golay_code
  – Corrects 3 bit errors, detects 7 bit errors
$$P_B \approx \frac{1}{24} \sum_{j=4}^{24} j \binom{24}{j} p^j (1-p)^{24-j}$$

BCH Codes
• Bose-Chaudhuri-Hocquenghem (BCH)
• From Wikipedia: http://en.wikipedia.org/wiki/BCH_code
  – One of the key features of BCH codes is that during code design, there is a precise control over the number of symbol errors correctable by the code. In particular, it is possible to design binary BCH codes that can correct multiple bit errors.
  – BCH codes are used in applications such as satellite communications, compact disc players, DVDs, disk drives, solid-state drives and two-dimensional bar codes.
• Table 6.4 provides generators of primitive BCH Codes based on the desired (n,k) and t

BCH Code Performance
Figure 6.23

Matlab Block Code Examples
• ChCode_MPSK_BJB
  – BPSK, QPSK or 8-PSK time symbols at a fixed 1 Mbps channel symbol bit rate (1 Msps for BPSK, ½ Msps for QPSK, and 1/3 Msps for 8-PSK)
  – Linear Block Channel Encoding
    • (3,1) with t=1, (6,3) with t=1, (7,4) with t=1, and (8,2) with t=2
  – Comparison curves of channel-symbol bit error rate versus corrected bit error rate for the data stream

BPSK Simulation Results
[Figure: Bit Error Rate, M=2. Two panels: (3,1) t=1, rate 1/3 and (6,3) t=1, rate 1/2. BER versus Eb/N0 (dB). Curves: MPSK Simulation, Encoded MPSK Simulation, MPSK Theory, Encoded MPSK Theory.]

BPSK Simulation Results (2)
[Figure: Bit Error Rate, M=2. Two panels: (7,4) t=1, rate 4/7 and (8,2) t=2, rate 1/4. BER versus Eb/N0 (dB). Curves: MPSK Simulation, Encoded MPSK Simulation, MPSK Theory, Encoded MPSK Theory.]
7.2-4 Error Probability
• Two types of error probability can be studied when linear block codes are employed.
  – The block error probability or word error probability is defined as the probability of transmitting a codeword $c_m$ and detecting a different codeword $c_{m'}$.
  – The second type of error probability is the bit error probability, defined as the probability of receiving a transmitted information bit in error.

Block Error Probability
• To determine the block (word) error probability $P_e$, assume the all-zero codeword 0 is transmitted; an error then occurs if the receiver declares any codeword $c_m \ne 0$ as the transmitted codeword.
  – The probability of this event in general depends on the Hamming distance between 0 and $c_m$, which is equal to $w(c_m)$, in a way that depends on the modulation scheme employed for transmission of the codewords.
$$P_e \le \sum_{i=d_{min}}^{n} A_i\, P_{pairwise}(i)$$
  – the pairwise error probability $P_{pairwise}(i)$ is between two codewords with Hamming distance i.
  – From Chap. 6, example 6.8-1
$$P_{0 \to c_m} \le \prod_{i=1}^{n} \sum_{y_i \in Y} \sqrt{p(y_i \mid 0)\, p(y_i \mid c_{mi})}$$

Block Error Probability (cont)
• With approximations, this leads to the simpler, but looser, bound
$$P_e \le \left( 2^k - 1 \right) \Delta^{d_{min}}$$
  – where, for a position in which $c_{mi} = 1$,
$$\Delta = \sum_{y_i \in Y} \sqrt{p(y_i \mid 0)\, p(y_i \mid c_{mi})}$$
• This is not the error of most interest … the bit error probability is.

Bit Error Probability
• We again assume that the all-zero sequence is transmitted; then the probability that a specific codeword of weight i will be decoded at the detector is equal to $P_2(i)$, written $P_{pairwise}(i)$ below.
• The number of codewords of weight i that correspond to information sequences of weight j is denoted by $B_{ij}$. Therefore, when 0 is transmitted, the expected number of information bits received in error is bounded by
$$\bar{b} \le \sum_{j=0}^{k} j \sum_{i=d_{min}}^{n} B_{ij}\, P_{pairwise}(i)$$
  – with (the terms for $0 < i < d_{min}$ vanish because $B_{ij} = 0$ there)
$$P_b = \frac{\bar{b}}{k} \le \frac{1}{k} \sum_{j=0}^{k} j \sum_{i=0}^{n} B_{ij}\, P_{pairwise}(i)$$
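As a rough numerical illustration of the bounds above, the sketch below enumerates $A_i$ and $B_{ij}$ for the (6,3) code and evaluates the block and bit error bounds. It rests on stated assumptions: hard-decision decoding over a BSC with crossover probability p = 0.01, for which $\Delta = 2\sqrt{p(1-p)}$, and the pairwise error probability replaced by its bound $\Delta^i$. The variable names are hypothetical and the parity array P is read off the (6,3) codeword table.

```matlab
% Weight enumeration and union-type bounds for the (6,3) code on a BSC.
P = [1 1 0; 0 1 1; 1 0 1];           % parity array (assumed from the codeword table)
G = [P eye(3)];
[k, n] = size(G);

msgs  = dec2bin(0:2^k-1, k) - '0';   % all 2^k messages, one per row
codes = mod(msgs * G, 2);            % all codewords
wc = sum(codes, 2);                  % codeword weights i
wm = sum(msgs, 2);                   % message weights j

% B(j+1, i+1) = number of weight-i codewords produced by weight-j messages.
B = accumarray([wm+1, wc+1], 1, [k+1, n+1]);
A = sum(B, 1);                       % A(i+1) = number of codewords of weight i

p     = 0.01;                        % assumed BSC crossover probability
Delta = 2*sqrt(p*(1-p));             % Delta for a BSC
i     = 0:n;
dmin  = min(wc(wc > 0));

Pe_bound = sum(A(2:end) .* Delta.^i(2:end));   % sum over i >= 1 of A_i * Delta^i
Pe_loose = (2^k - 1) * Delta^dmin;             % looser bound (2^k - 1) * Delta^dmin
j        = (0:k)';
Pb_bound = sum(j .* (B * (Delta.^i)')) / k;    % (1/k) sum_j j sum_i B_ij Delta^i
```

The enumeration reproduces the weight distribution quoted earlier (A(0)=1, A(3)=4, A(4)=3). Because $\Delta^i$ is itself a loose bound, the numbers are pessimistic and mainly useful for comparing codes rather than predicting measured error rates.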