ECE 6640 Digital Communications

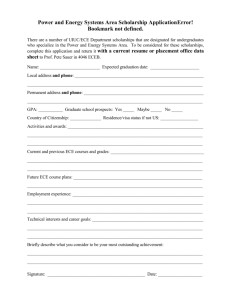


Dr. Bradley J. Bazuin, Assistant Professor
Department of Electrical and Computer Engineering
College of Engineering and Applied Sciences

Chapter 7: Linear Block Codes
7.1 Basic Definitions
7.2 General Properties of Linear Block Codes
7.3 Some Specific Linear Block Codes
7.4 Optimum Soft Decision Decoding of Linear Block Codes
7.5 Hard Decision Decoding of Linear Block Codes
7.6 Comparison of Performance between Hard Decision and Soft Decision Decoding
7.7 Bounds on Minimum Distance of Linear Block Codes
7.8 Modified Linear Block Codes
7.9 Cyclic Codes
7.10 Bose-Chaudhuri-Hocquenghem (BCH) Codes
7.11 Reed-Solomon Codes
7.12 Coding for Channels with Burst Errors
7.13 Combining Codes
7.14 Bibliographical Notes and References
Problems

Chapter Content
• Our focus in this chapter and Chapter 8 is on channel coding schemes with manageable decoding algorithms that are used to improve the performance of communication systems over noisy channels.
• This chapter is devoted to block codes whose construction is based on familiar algebraic structures such as groups, rings, and fields.
• In Chapter 8 we will study coding schemes that are best represented in terms of graphs and trellises.

Channel Codes
• Block Codes
  – In block codes, one of the $M = 2^k$ messages is mapped to a binary sequence of length n, called the codeword, where n > k. Each message is a binary sequence of length k, called the information sequence.
  – The codeword is usually transmitted over the communication channel by sending a sequence of n binary symbols, for instance by using BPSK or another symbol scheme.
• Convolutional Codes
  – Convolutional codes are described in terms of finite-state machines. In these codes, k information bits enter the encoder at each time instance i. This causes n binary symbols to be generated at the encoder output and changes the state of the encoder.
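The block-code mapping of $M = 2^k$ k-bit messages onto n-bit codewords can be made concrete with the simplest possible example. The Python sketch below builds a single-parity-check code, which appends one even-parity bit so that n = k + 1. This particular code and the function name `single_parity_check_code` are illustrative choices and are not taken from the slides. A single-parity-check code detects any single bit error but cannot correct one.

```python
from itertools import product

def single_parity_check_code(k):
    """Map each of the M = 2^k k-bit messages to an n = k + 1 bit codeword
    by appending one even-parity bit (a simple (k+1, k) block code)."""
    return {u: u + (sum(u) % 2,) for u in product((0, 1), repeat=k)}

code = single_parity_check_code(3)
print(len(code))        # 8 codewords (M = 2^3)
print(code[(1, 0, 1)])  # (1, 0, 1, 0)
# Only 8 of the 2^4 = 16 possible 4-bit words are codewords.
```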
Code Rate Considerations
• The code rate of a block or convolutional code is denoted by $R_c$ and is given by
$$R_c = \frac{k}{n}$$
• A codeword of length n is transmitted using an N-dimensional constellation of size M. M is assumed to be a power of 2, and $L = n/\log_2 M$ is assumed to be an integer representing the number of M-ary symbols transmitted per codeword. If the symbol duration is $T_s$, then the transmission time for k bits is $T = LT_s$ and the transmission rate is given by
$$R = \frac{k}{T} = \frac{k}{LT_s} = \frac{k}{n}\,\frac{\log_2 M}{T_s} = R_c\,\frac{\log_2 M}{T_s} \ \text{bits/sec}$$

Bandwidth Considerations
• The dimension of the space of the encoded and modulated signals is LN. Using the dimensionality theorem as stated in Equation 4.6–5, we conclude that the minimum required transmission bandwidth is given by
$$W = \frac{LN}{2T} = \frac{N}{2T_s} \ \text{Hz}$$
  – Using $T_s$ from the code rate consideration,
$$W = \frac{NR}{2R_c\log_2 M} \ \text{Hz}$$
  – The resulting spectral bit rate is
$$r = \frac{R}{W} = \frac{2\log_2 M}{N}\,R_c \ \text{bits/sec/Hz}$$

Channel Coding
• These equations indicate that, compared with an uncoded system that uses the same modulation scheme, the bit rate is changed by a factor of $R_c$ and the bandwidth is changed by a factor of $1/R_c$. In other words, there is a decrease in rate and an increase in bandwidth.
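The rate and bandwidth relations above can be checked numerically. The Python sketch below is a minimal example that assumes the slide's conventions: $L = n/\log_2 M$ must be an integer, and the bandwidth comes from the dimensionality theorem. The function name `coded_link_parameters` is illustrative and is not a toolbox API.

```python
from math import log2

def coded_link_parameters(k, n, M, N, Ts):
    """Return (Rc, R, W, r) for an (n, k) code sent on an N-dim, M-point constellation.

    Ts is the M-ary symbol duration in seconds; R is in bits/s, W in Hz, r in bits/s/Hz.
    """
    Rc = k / n                    # code rate
    L = n / log2(M)               # M-ary symbols per codeword
    if abs(L - round(L)) > 1e-9:
        raise ValueError("n / log2(M) must be an integer")
    T = L * Ts                    # transmission time for the k information bits
    R = k / T                     # equals Rc * log2(M) / Ts
    W = N / (2 * Ts)              # minimum bandwidth from the dimensionality theorem
    r = R / W                     # equals 2 * Rc * log2(M) / N
    return Rc, R, W, r

# (6,3) code over QPSK (M = 4, N = 2) with a 1-microsecond symbol time:
# Rc = 0.5, R is about 1e6 bits/s, W is about 1e6 Hz, r is about 1.0
print(coded_link_parameters(3, 6, 4, 2, 1e-6))
```

The (7,4) code with QPSK would raise `ValueError` because 7/2 symbols per codeword is not an integer, which matches the slide's assumption on L.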
Energy per Bit Consideration
• If the average energy of the constellation is denoted by $E_{avg}$, then the energy per codeword, E, is given by
$$E = L\,E_{avg} = \frac{n}{\log_2 M}\,E_{avg}$$
  – and $E_c$, the energy per component of the codeword, is given by
$$E_c = \frac{E}{n} = \frac{E_{avg}}{\log_2 M}$$
• The energy per transmitted data bit is denoted by $E_b$ and can be found from
$$E_b = \frac{E}{k} = \frac{E_c}{R_c} = \frac{E_{avg}}{R_c\log_2 M}$$

Summary for Modulation Schemes

| Modulation | N | W | r |
|---|---|---|---|
| BPSK | 2 | $R/R_c$ | $R_c$ |
| QPSK | 2 | $R/(2R_c)$ | $2R_c$ |
| M-PSK | 2 | $R/(R_c\log_2 M)$ | $R_c\log_2 M$ |
| BFSK | 2 | $R/R_c$ | $R_c$ |
| M-FSK | M | $MR/(2R_c\log_2 M)$ | $2R_c\log_2 M/M$ |

Linear Block Codes
• k bits to n bits
• $2^k$ codewords in a $2^n$ space
• codewords should be as far from each other (in Hamming distance) as possible
• the added dimensionality allows for bit error detection and bit error correction

LBC Generator Matrix
• Applying vector-matrix multiplication methods
• Translate a k-tuple data set to an n-tuple codeword:
$$\mathbf{c}_m = \mathbf{u}_m\mathbf{G} = \mathbf{u}_m\begin{bmatrix}\mathbf{g}_1\\ \mathbf{g}_2\\ \vdots\\ \mathbf{g}_k\end{bmatrix} = \sum_{i=1}^{k} u_{mi}\,\mathbf{g}_i$$
• Generator matrix structure:
$$\mathbf{G} = [\,\mathbf{I}_k \mid \mathbf{P}\,] = \begin{bmatrix} 1 & 0 & \cdots & 0 & p_{11} & p_{12} & \cdots & p_{1,(n-k)}\\ 0 & 1 & \cdots & 0 & p_{21} & p_{22} & \cdots & p_{2,(n-k)}\\ \vdots & \vdots & \ddots & \vdots & \vdots & \vdots & & \vdots\\ 0 & 0 & \cdots & 1 & p_{k1} & p_{k2} & \cdots & p_{k,(n-k)}\end{bmatrix}$$

LBC Generator Matrix (continued)
• The P matrix structure is based on generator polynomials discussed in section 7.1-1. We will come back to these …

Parity Check Matrix
• The parity check matrix allows a codeword to be checked: multiplying a valid codeword by $\mathbf{H}^T$ results in a zero vector.
$$\mathbf{H} = [\,\mathbf{P}^T \mid \mathbf{I}_{n-k}\,], \qquad \mathbf{c}_m\mathbf{H}^T = \mathbf{0}$$

MATLAB Generator and Parity Check Matrices
• MATLAB uses the Sklar generator and parity check matrices.
  – The I and P sections are swapped:
$$\mathbf{G} = [\,\mathbf{P} \mid \mathbf{I}_k\,], \qquad \mathbf{H} = [\,\mathbf{I}_{n-k} \mid \mathbf{P}^T\,]$$
• Function calls to create parmat and genmat are:

```matlab
[parmat,genmat] = hammgen(3)
rem(genmat * parmat', 2)
[parmat,genmat] = cyclgen(7,cyclpoly(7,4))
rem(genmat * parmat', 2)
```

Textbook Example 7.2-1
• See MATLAB Sec7_2_1.m

```matlab
% (7,4) linear block code of Textbook Example 7.2-1
n = 7; k = 4;   % Set codeword length and message length.
% Systematic form G = [I | P], H = [P' | I] (textbook ordering)
P = [ 1 0 1 ; ...
      1 1 1 ; ...
      1 1 0 ; ...
      0 1 1 ];
genmat = [eye(4) P];
parmat = [P' eye(3)];
msg = [0 0 0 0; 0 0 0 1; 0 0 1 0; 0 0 1 1; ...
       0 1 0 0; 0 1 0 1; 0 1 1 0; 0 1 1 1; ...
       1 0 0 0; 1 0 0 1; 1 0 1 0; 1 0 1 1; ...
       1 1 0 0; 1 1 0 1; 1 1 1 0; 1 1 1 1];   % Message is a binary matrix.
% Verify the genmat and parmat perform correctly (SU should be all zeros)
SU = rem(genmat*parmat',2)
% Produce standard array coset decoding table.
Ucoset = syndtable(parmat);
Ucode = rem(msg * genmat, 2)
% Add noise: each row gets 0 errors with prob. 0.7 or 1 error with prob. 0.3
noisycode = rem(Ucode + randerr(2^k,n,[0 1;.7 .3]),2);
% Syndrome decoding by table lookup (syndrome read MSB first)
SN = rem(noisycode * parmat',2);
SN_de = bi2de(SN,'left-msb');
corrvect = Ucoset(1+SN_de,:);
correctedcode = rem(corrvect+noisycode,2)
recvd_msg = correctedcode(:,1:k)
% Decode, keeping track of all detected errors.
[newmsg,err,ccode,cerr] = decode(noisycode,n,k,'linear',genmat,Ucoset)
```

Hamming Weight and Distance
• The Hamming weights of the codewords determine the code's performance.
• The Hamming weight w(c) is defined as the number of nonzero elements in c.
  – for binary, count the number of ones.
  – We want the P matrix to have as many ones as possible!
  – There is always one all-zeros codeword … don't count it.
• The Hamming distance between two codewords, d(c1,c2), is defined as the number of elements that differ.
  – For a linear code, the distance from the zero codeword to the minimum-weight nonzero codeword is dmin.

Example Codewords and Weights

| Message | Codeword (Ucode) | Weight |
|---|---|---|
| 0000 | 0000000 | 0 |
| 0001 | 0001011 | 3 |
| 0010 | 0010110 | 3 |
| 0011 | 0011101 | 4 |
| 0100 | 0100111 | 4 |
| 0101 | 0101100 | 3 |
| 0110 | 0110001 | 3 |
| 0111 | 0111010 | 4 |
| 1000 | 1000101 | 3 |
| 1001 | 1001110 | 4 |
| 1010 | 1010011 | 4 |
| 1011 | 1011000 | 3 |
| 1100 | 1100010 | 3 |
| 1101 | 1101001 | 4 |
| 1110 | 1110100 | 4 |
| 1111 | 1111111 | 7 |

• dmin_fn = gfweight(genmat) returns dmin_fn = 3

Minimum Distance
• The minimum Hamming distance is of interest
  – dmin = 3 for the (7,4) example
• Similar to the distances between symbols in a signal constellation, the Hamming distance between codewords determines how well a code can detect and correct bit errors.
  – Maximizing dmin for a set number of redundant bits is desired.
  – error-correction capability
  – error-detection capability

Hamming Distance Capability (coming in section 7.5)
• Error-correcting capability, t bits:
$$t = \left\lfloor\frac{d_{min}-1}{2}\right\rfloor$$
  – for (7,4) with dmin = 3: t = 1
  – Codes that correct all possible sequences of t or fewer errors may also be able to correct some patterns of t + 1 errors.
    • see the last coset of the (6,3) code: a 2-bit error pattern!
  – a t-error-correcting (n,k) linear code is capable of correcting $2^{n-k}$ error patterns (its coset leaders)!

Hamming Distance Capability (coming in section 7.5)
• Error-detection capability:
$$e = d_{min} - 1$$
  – a block code with a minimum distance of dmin guarantees that all error patterns of dmin − 1 or fewer errors can be detected.
  – for (7,4) with dmin = 3: e = 2 bits
  – The code may also be capable of detecting some error patterns of dmin or more bits.
  – An (n,k) code is capable of detecting $2^n - 2^k$ error patterns of length n.
    • there are $2^k - 1$ nonzero error patterns that turn one codeword into another and are thereby undetectable.
    • all other nonzero patterns produce a nonzero syndrome
    • therefore, excluding the all-zero pattern, $(2^n - 1) - (2^k - 1) = 2^n - 2^k$ patterns are detectable
    • (6,3): 64 − 8 = 56 detectable error patterns
    • (7,4): 128 − 16 = 112 detectable error patterns

Weight Distribution Polynomial
• In any linear block code there exists one codeword of weight 0, and the weights of the nonzero codewords lie between dmin and n.
• The weight distribution polynomial (WEP), or weight enumeration function (WEF), of a code specifies the number of codewords of each weight in the code:
$$A(Z) = \sum_{i=0}^{n} A_i Z^i = 1 + \sum_{i=d_{min}}^{n} A_i Z^i$$
$$A(0) = A_0 = 1, \qquad A(1) = \sum_{i=0}^{n} A_i = 2^k$$
• From the (7,4) example:
$$A(Z) = 1 + 7Z^3 + 7Z^4 + Z^7$$

Using the All-Zero Codeword
• Note that for a linear block code, the set of distances seen from any codeword to the other codewords is independent of the codeword from which these distances are seen.
  – Therefore, in linear block codes the error bound is independent of the transmitted codeword. Without loss of generality, we can always assume that the all-zero codeword 0 is transmitted.
• For this case, the squared Euclidean distance between the BPSK signal for the all-zero codeword and the signal $\mathbf{s}_m$ for codeword $\mathbf{c}_m$ of weight $w(\mathbf{c}_m)$ becomes
$$d_E^2(\mathbf{s}_m) = 4E_bR_c\,w(\mathbf{c}_m)$$

Distance Enumeration Function
• Based on knowing the weight distribution and Euclidean distances:
  – for BPSK
$$T(X) = \sum_{i=d_{min}}^{n} A_i X^{4R_cE_b i} = \left[A(Z) - 1\right]_{Z = X^{4R_cE_b}}$$
  – for orthogonal BFSK
$$T(X) = \sum_{i=d_{min}}^{n} A_i X^{2R_cE_b i} = \left[A(Z) - 1\right]_{Z = X^{2R_cE_b}}$$

Input-Output Weight Enumeration Function
• Provides information about the weight of the codewords as well as the weight of the corresponding information sequences:
$$B(Y,Z) = \sum_{i=0}^{n}\sum_{j=0}^{k} B_{ij}\,Y^jZ^i$$
  – where $B_{ij}$ is the number of codewords of weight i that correspond to information sequences of weight j
• Functional relations:
$$A(Z) = B(Y,Z)\big|_{Y=1}, \qquad B_{00} = 1$$

Conditional Weight Enumeration Function
• A third form of the weight enumeration function, called the conditional weight enumeration function (CWEF), is defined by
$$B_j(Z) = \sum_{i=0}^{n} B_{ij}Z^i$$
• It represents the weight enumeration function of all codewords corresponding to information sequences of weight j.
• And
$$B_j(Z) = \frac{1}{j!}\,\frac{\partial^j}{\partial Y^j}B(Y,Z)\Big|_{Y=0}$$

Example (7,4) LBC
• Weight distribution polynomial:
$$A(Z) = 1 + 7Z^3 + 7Z^4 + Z^7$$
• Codeword weights (input-output counts):
$$B_{00} = 1,\ B_{31} = 3,\ B_{32} = 3,\ B_{33} = 1,\ B_{41} = 1,\ B_{42} = 3,\ B_{43} = 3,\ B_{74} = 1$$
$$B(Y,Z) = 1 + 3YZ^3 + 3Y^2Z^3 + Y^3Z^3 + YZ^4 + 3Y^2Z^4 + 3Y^3Z^4 + Y^4Z^7$$
• And
$$B_0(Z) = 1,\quad B_1(Z) = 3Z^3 + Z^4,\quad B_2(Z) = 3Z^3 + 3Z^4,\quad B_3(Z) = Z^3 + 3Z^4,\quad B_4(Z) = Z^7$$

Hard Decision Decoding
• A hard decision is made as to whether each transmitted bit in a codeword is a 0 or a 1.
  – The resulting discrete-time channel (consisting of the modulator, the AWGN channel, and the demodulator/detector) constitutes a BSC with crossover probability p.
  – If coherent PSK is employed in transmitting and receiving the bits in each codeword, then
$$p = Q\left(\sqrt{\frac{2E_c}{N_0}}\right) = Q\left(\sqrt{2\gamma_bR_c}\right)$$
  – If coherent FSK is used to transmit the bits in each codeword, then
$$p = Q\left(\sqrt{\frac{E_c}{N_0}}\right) = Q\left(\sqrt{\gamma_bR_c}\right)$$
  – where $\gamma_b = E_b/N_0$ and $E_c = R_cE_b$.

Minimum-Distance Decoding (Maximum-Likelihood)
• The n bits from the detector corresponding to a received codeword are passed to the decoder. The decoder compares the received codeword with the M possible transmitted codewords and decides in favor of the one closest to it in Hamming distance (the number of bit positions in which two codewords differ).
• Using syndromes and standard arrays …

Syndrome Testing
• Describe the received n-tuple for the transmitted codeword $\mathbf{c}_m$ as
$$\mathbf{y} = \mathbf{c}_m + \mathbf{e}$$
  – where $\mathbf{e}$ is the error pattern introduced by the channel
• As one might expect, use the parity check matrix to generate the syndrome:
$$\mathbf{S} = \mathbf{y}\mathbf{H}^T = (\mathbf{c}_m + \mathbf{e})\mathbf{H}^T = \mathbf{c}_m\mathbf{H}^T + \mathbf{e}\mathbf{H}^T$$
• but from the parity check matrix, $\mathbf{c}_m\mathbf{H}^T = \mathbf{0}$, so
$$\mathbf{S} = \mathbf{e}\mathbf{H}^T$$
  – if there are no bit errors, the result is a zero vector!

Syndrome Testing (continued)
• If there are no bit errors, the syndrome is a zero vector.
• If there are errors, the requirement of a linear block code is to have a one-to-one mapping between correctable error patterns and nonzero syndromes.
• Error correction requires the identification of the corresponding syndrome for each of the possible errors.
  – generate a $2^{n-k} \times 2^k$ array containing all $2^n$ possible received n-tuples
  – this is called the standard array

Standard Array Format (n,k)
• A $2^{n-k} \times 2^k$ table
• The actual codewords are placed in the top row.
  – The 1st codeword is the all-zeros codeword. It also defines the 1st coset, which has zero errors.
• Each row is described as a coset, with a coset leader describing a particular error pattern.
  – for an (n,k) code, there will be $2^{n-k}$ coset leaders, one of which is the zero-error pattern.
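The syndrome-testing and standard-array procedure above can be sketched in Python for the (7,4) code of Textbook Example 7.2-1. The P matrix comes from that MATLAB example, and the function names are hypothetical. The sketch enumerates the $2^k$ codewords to recover the weight distribution $A(Z) = 1 + 7Z^3 + 7Z^4 + Z^7$ and the $B_{ij}$ counts. It then builds a syndrome table whose coset leaders are the minimum-weight error patterns and uses it to correct a single bit error. This brute-force approach is only practical for small codes and is not an efficient decoder.

```python
from itertools import product

# P matrix of Textbook Example 7.2-1; systematic G = [I_k | P], H = [P^T | I_(n-k)]
P = [[1, 0, 1],
     [1, 1, 1],
     [1, 1, 0],
     [0, 1, 1]]
k, r = len(P), len(P[0])        # r = n - k parity bits
n = k + r

def encode(u):
    """c = uG over GF(2): the k message bits followed by the parity bits uP."""
    parity = [sum(u[i] * P[i][j] for i in range(k)) % 2 for j in range(r)]
    return list(u) + parity

def syndrome(y):
    """S = yH^T over GF(2), with H = [P^T | I_(n-k)]."""
    return tuple((sum(y[i] * P[i][j] for i in range(k)) + y[k + j]) % 2
                 for j in range(r))

# Enumerate all 2^k codewords: weight distribution A_i and input-output counts B_ij
A = [0] * (n + 1)
B = {}                          # (codeword weight i, message weight j) -> B_ij
for u in product([0, 1], repeat=k):
    c = encode(u)
    A[sum(c)] += 1
    B[(sum(c), sum(u))] = B.get((sum(c), sum(u)), 0) + 1
d_min = min(i for i in range(1, n + 1) if A[i] > 0)   # linear code: min nonzero weight

# Standard-array decoding table: keep a minimum-weight error pattern per syndrome
leaders = {}
for e in sorted(product([0, 1], repeat=n), key=sum):
    leaders.setdefault(syndrome(e), list(e))

def decode(y):
    """Add the coset leader of y's syndrome and return the k message bits."""
    e = leaders[syndrome(y)]
    return [(a + b) % 2 for a, b in zip(y, e)][:k]

print(A)          # [1, 0, 0, 7, 7, 0, 0, 1]
print(d_min)      # 3
y = encode([1, 0, 1, 1])   # codeword 1011000
y[2] ^= 1                  # introduce a single bit error
print(decode(y))  # [1, 0, 1, 1]
```

Because the (7,4) Hamming code is perfect, the eight syndromes are used up exactly by the all-zero pattern and the seven single-bit patterns.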
Example 7.5-1 (5,2)
• Let us construct the standard array for the (5,2) systematic code with the generator matrix given in Example 7.5-1.
  – parmat = 1 0 0 1 1 1 1 0 0 0 1 0 0 0 1
  – dmin = 3
  – The standard array corrects all single-bit errors and two 2-bit error patterns.

Error Detection
• Since the minimum separation between a pair of codewords is dmin, an error pattern of weight dmin can transform one of the $2^k$ codewords into another codeword. When this happens, we have an undetected error.
• On the other hand, if the actual number of errors is less than dmin, the syndrome will have a nonzero weight.
• Clearly, the (n,k) block code is capable of detecting up to dmin − 1 errors.

Error Correction
• The error correction capability of a code also depends on the minimum distance. However, the number of correctable error patterns is limited by the number of possible syndromes or coset leaders in the standard array.
  – To determine the error correction capability of an (n,k) code, it is convenient to view the $2^k$ codewords as points in an n-dimensional space.
  – Suppose each codeword is the center of a sphere of radius t (in Hamming distance). The largest value that t may have without intersection (or tangency) of any pair of the $2^k$ spheres is
$$t = \left\lfloor\frac{d_{min}-1}{2}\right\rfloor$$

Code Capability
• In general, a code with minimum distance dmin can simultaneously detect $e_d$ errors and correct $e_c$ errors, where
$$e_d + e_c \le d_{min} - 1, \qquad e_c \le e_d$$

Block and Bit Error Probability
• Since the binary symmetric channel is memoryless, the bit errors occur independently.
• The probability of m errors in a block of n bits is
$$P(m,n) = \binom{n}{m}p^m(1-p)^{n-m}$$
• Therefore, the probability of a codeword error is upper-bounded by the expression
$$P_e \le \sum_{m=t+1}^{n}\binom{n}{m}p^m(1-p)^{n-m}$$
  – for high SNR and small p, it is approximated by the first term:
$$P_e \approx \binom{n}{t+1}p^{t+1}(1-p)^{n-t-1}$$

Block and Bit Error Probability (continued)
• To derive an approximate bound on the error probability of each binary symbol in a codeword, we note the following:
  – Suppose 0 is sent and a sequence of weight t + 1 is received. The decoder will decode it to a codeword at a distance of at most t from the received sequence, and hence at a distance of at most 2t + 1 from 0.
  – Since the minimum weight of the code is 2t + 1, the decoded codeword has to be of weight 2t + 1. This means that for each highly probable block error we have 2t + 1 bit errors in the codeword components.
  – Therefore,
$$P_{be} \approx \frac{2t+1}{n}\binom{n}{t+1}p^{t+1}(1-p)^{n-t-1}$$

Perfect Codes
• To describe the basic characteristics of a perfect code, suppose we place a sphere of radius t around each of the possible transmitted codewords.
• Each sphere around a codeword contains the set of all n-tuples at Hamming distance less than or equal to t from the codeword. The $2^k$ spheres cannot overlap and together contain at most $2^n$ n-tuples. Therefore,
$$2^{n-k} \ge 1 + \sum_{i=1}^{t}\binom{n}{i}$$
• When equality holds, the code is a perfect code.
  – Hamming codes
  – (23,12) Golay code

Coming Next
• A discussion of types of linear block codes
• A discussion of soft decoding …

Hard vs Soft Decision Decoding
• In hard decision decoding, the received codeword is compared with all possible codewords, and the codeword that gives the minimum Hamming distance is selected.
• In soft decision decoding, the received codeword is compared with all possible codewords, and the codeword that gives the minimum Euclidean distance is selected.
  – Thus soft decision decoding improves the decision-making process by supplying additional reliability information (calculated Euclidean distance or calculated log-likelihood ratio).

7.4 Optimum Soft Decision Decoding of LBC
• Performed when a binary transmission system is used
  – Use the direct bit sample values to determine a Euclidean distance from the appropriate binary values
• For each possible codeword, compute the correlation metric between the n received samples $r_j$ and the codeword bits mapped to ±1:
$$CM_m = C(\mathbf{r},\mathbf{c}_m) = \sum_{j=1}^{n}(2c_{mj}-1)\,r_j, \qquad m = 1,2,\ldots,M$$
• Select the codeword that maximizes the correlation (equivalently, the codeword at minimum Euclidean distance from the received samples).
• This system looks across all n bits simultaneously instead of using n hard-decision detected bits and correcting the bit errors, if possible.

Error Probability – Soft Decision
• We can use the general bounds on the block error probability from Section 7.2-4.
• The simple bound of Equation 7.2–43 under soft decision decoding reduces to:
  – For orthogonal BFSK
  – For BPSK

Comparing Hard and Soft Decision
• For the Golay (23,12) code comparison, soft decision outperforms hard decision, but at what computation and system costs?

Linear Block Codes
• Repetition codes
  – (3,1) or (n,1) codes that repeat the value 0 or 1 n times.
  – dmin = n; therefore, the first one with error correction is n = 3
• Hamming codes
• Golay codes (24,12) and (23,12)
• Reed-Muller codes
• Hadamard codes
• Bose-Chaudhuri-Hocquenghem (BCH) codes
  – powerful class of cyclic codes
  – generalization of Hamming codes that allows multiple error correction

Code Generation Algorithm
• See Wikipedia: http://en.wikipedia.org/wiki/Hamming_code
• In MATLAB, see the function hammgen
  – Produce parity-check and generator matrices for Hamming code.
  – H = hammgen(M) produces the parity-check matrix H for a given integer M, M >= 3. The code length of a Hamming code is N = 2^M − 1. The message length is K = 2^M − M − 1. The parity-check matrix is an M-by-N matrix.
  – [H, G, N, K] = hammgen(...) produces the parity-check matrix H as well as the generator matrix G, and provides the codeword length N and the message length K.

| m | k | n |
|---|---|---|
| 3 | 4 | 7 |
| 4 | 11 | 15 |
| 5 | 26 | 31 |
| 6 | 57 | 63 |
| 7 | 120 | 127 |
| 8 | 247 | 255 |
| 9 | 502 | 511 |
| 10 | 1013 | 1023 |

Hadamard Matrix Orthogonal Codes
$$\mathbf{H}_D = \begin{bmatrix}\mathbf{H}_{D-1} & \mathbf{H}_{D-1}\\ \mathbf{H}_{D-1} & \overline{\mathbf{H}}_{D-1}\end{bmatrix}$$
• Start with the data set for one bit and generate a Hadamard code for the data set (data 0 → 00, data 1 → 01):
$$\mathbf{H}_1 = \begin{bmatrix}0 & 0\\ 0 & 1\end{bmatrix}, \qquad \mathbf{H}_2 = \begin{bmatrix}\mathbf{H}_1 & \mathbf{H}_1\\ \mathbf{H}_1 & \overline{\mathbf{H}}_1\end{bmatrix} = \begin{bmatrix}0&0&0&0\\0&1&0&1\\0&0&1&1\\0&1&1&0\end{bmatrix}$$
• The codewords (rows) are orthogonal:
$$z_{ij} = \begin{cases}1 & \text{for } i = j\\ 0 & \text{for } i \ne j\end{cases}$$

Hadamard Matrix Orthogonal Codes (2)
$$\mathbf{H}_3 = \begin{bmatrix}\mathbf{H}_2 & \mathbf{H}_2\\ \mathbf{H}_2 & \overline{\mathbf{H}}_2\end{bmatrix}, \qquad \mathbf{H}_4 = \begin{bmatrix}\mathbf{H}_3 & \mathbf{H}_3\\ \mathbf{H}_3 & \overline{\mathbf{H}}_3\end{bmatrix}$$

| Data set | Codeword (row of $\mathbf{H}_3$) |
|---|---|
| 000 | 00000000 |
| 001 | 01010101 |
| 010 | 00110011 |
| 011 | 01100110 |
| 100 | 00001111 |
| 101 | 01011010 |
| 110 | 00111100 |
| 111 | 01101001 |

• See hadamard in MATLAB:

```matlab
H4 = hadamard(16)
H4xcor = H4'*H4   % equals H4*H4' = 16*eye(16)
```

Hadamard Matrix Orthogonal Codes (3)
• The Hadamard code set generates orthogonal codes for the bit representations, letting 0 = +1 V and 1 = −1 V:
$$z_{ij} = \begin{cases}1 & \text{for } i = j\\ 0 & \text{for } i \ne j\end{cases}$$
• For a D-bit pre-coded symbol, a $2^D$-bit codeword is generated from a $2^D \times 2^D$ matrix
  – Bit rate increases from D bits/sec to $2^D$ bits/sec!
• For equally likely, equal-energy orthogonal signals, the probability of codeword (symbol) error can be bounded as
$$P_E(M) \le (M-1)\,Q\left(\sqrt{\frac{E_s}{N_0}}\right), \qquad M = 2^D, \quad E_s = D\,E_b$$

Golay Codes
• See Wikipedia: https://en.wikipedia.org/wiki/Binary_Golay_code
• The extended binary Golay code (24,12) encodes 12 bits of data in a 24-bit word in such a way that any 3-bit errors can be corrected or any 7-bit errors can be detected.
• The perfect binary Golay code (23,12) has codewords of length 23 and is obtained from the extended binary Golay code by deleting one coordinate position.

BCH Codes
• Bose-Chaudhuri-Hocquenghem (BCH)
• From Wikipedia: http://en.wikipedia.org/wiki/BCH_code
  – One of the key features of BCH codes is that during code design, there is a precise control over the number of symbol errors correctable by the code. In particular, it is possible to design binary BCH codes that can correct multiple bit errors.
  – BCH codes are used in applications such as satellite communications,[2] compact disc players, DVDs, disk drives, solid-state drives[3] and two-dimensional bar codes.
• Table 6.4 provides generators of primitive BCH codes based on the desired (n,k) and t

BCH Code Performance (Figure 6.23)

Comparative Code Performance

Notes and figures are based on or taken from materials in the course textbook: Bernard Sklar, Digital Communications, Fundamentals and Applications, Prentice Hall PTR, Second Edition, 2001.
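The sphere-packing (Hamming) bound from the Perfect Codes slide and the hard-decision block error bound can both be evaluated directly for the Hamming and Golay codes above. The Python sketch below uses illustrative function names. It assumes a BSC with crossover probability p and a decoder that corrects up to t errors. It checks that the (7,4) Hamming code and the (23,12) Golay code meet the bound with equality (perfect codes), while the extended (24,12) Golay code does not.

```python
from math import comb

def sphere_size(n, t):
    """Number of binary n-tuples within Hamming distance t of a codeword."""
    return sum(comb(n, i) for i in range(t + 1))

def is_perfect(n, k, t):
    """Sphere-packing bound 2^(n-k) >= sphere_size(n, t); equality means a perfect code."""
    return 2 ** (n - k) == sphere_size(n, t)

def block_error_bound(n, t, p):
    """Upper bound on codeword error probability on a BSC for a t-error-correcting
    decoder: the probability that more than t of the n bits are in error."""
    return sum(comb(n, m) * p**m * (1 - p)**(n - m) for m in range(t + 1, n + 1))

codes = {"Hamming (7,4)": (7, 4, 1),
         "Golay (23,12)": (23, 12, 3),
         "extended Golay (24,12)": (24, 12, 3)}
for name, (n, k, t) in codes.items():
    print(name, 2 ** (n - k), sphere_size(n, t), is_perfect(n, k, t))
# Hamming (7,4) 8 8 True
# Golay (23,12) 2048 2048 True
# extended Golay (24,12) 4096 2325 False

print(block_error_bound(7, 1, 0.01))   # about 2.03e-3
```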
Cyclic Codes
• An important subclass of linear block codes
• Easily implemented using a feedback shift register
  – every cyclic shift of a codeword is another codeword
• The components of the codeword can be treated like a polynomial:
$$U(X) = u_0 + u_1X + u_2X^2 + \cdots + u_{n-1}X^{n-1}$$

Algebraic Structure of Cyclic Codes
• Based on $U(X) = u_0 + u_1X + u_2X^2 + \cdots + u_{n-1}X^{n-1}$
  – U(X) is an (n−1)-degree codeword polynomial with the property
$$\frac{X^iU(X)}{X^n+1} = q(X) + \frac{U^{(i)}(X)}{X^n+1}, \qquad\text{i.e.,}\qquad X^iU(X) = q(X)(X^n+1) + U^{(i)}(X)$$
  – where the final term $U^{(i)}(X)$ is the remainder of the division process. It is also a codeword (the i-th cyclic shift of U(X)).
  – The remainder can also be described in terms of modulo arithmetic as
$$U^{(i)}(X) = X^iU(X) \bmod (X^n+1)$$

Example
• Multiply the codeword by X and add $u_{n-1}$ twice. The two added terms sum to zero in binary arithmetic:
$$XU(X) = u_{n-1} + u_0X + u_1X^2 + \cdots + u_{n-2}X^{n-1} + u_{n-1}X^n + u_{n-1}$$
$$XU(X) = u_{n-1} + u_0X + u_1X^2 + \cdots + u_{n-2}X^{n-1} + u_{n-1}(X^n+1)$$
• Taking the result modulo $X^n + 1$ removes the final term, resulting in a new codeword:
$$U^{(1)}(X) = u_{n-1} + u_0X + u_1X^2 + \cdots + u_{n-2}X^{n-1}$$
• Repeat for the rest of the codewords …

Binary Cyclic Codes
• We can generate a cyclic code using a generator polynomial in much the same way that we generated a block code using a generator matrix.
  – The generator polynomial g(X) for an (n,k) cyclic code is unique and is of the form (with the requirement that $g_0 = 1$ and $g_p = 1$)
$$g(X) = g_0 + g_1X + g_2X^2 + \cdots + g_pX^p$$
  – Every codeword in the space is of the form
$$U(X) = m(X)\,g(X)$$
  – with the message polynomial
$$m(X) = m_0 + m_1X + m_2X^2 + \cdots + m_{n-p-1}X^{n-p-1}$$
  – such that $k - 1 = n - p - 1$, i.e., the number of parity bits is $p = n - k$

Binary Cyclic Codes (continued)
• U is said to be a valid codeword of the subspace S if, and only if, g(X) divides U(X) without a remainder:
$$U(X) = m(X)\,g(X), \qquad U^{(i)}(X) = m'(X)\,g(X) \ \text{for some message polynomial } m'(X)$$
• A generator polynomial g(X) of an (n,k) cyclic code is a factor of $X^n + 1$ (a result of the codewords being cyclic).
• Therefore, some example generators (factors of $X^7 + 1$) are:
  – $g(X) = X^3 + X + 1$ for the (7,4) code, with p = 3
  – $g(X) = X^4 + X^2 + X + 1$ for the (7,3) code, with p = 4
• More polynomials are in Table 7.9-1.

Encoders for Cyclic Codes
• The encoding operations for generating a cyclic code may be performed by a linear feedback shift register based on the use of either the generator polynomial or the parity polynomial.
• The division of the polynomial A(X) by the polynomial g(X) may be accomplished with a feedback shift-register divider circuit.

Encoder
• Using the polynomial divider, encoding can be accomplished with "switches" as shown …
  – The first k bits at the output of the encoder are simply the k information bits. After the k information bits are all clocked into the encoder, the positions of the two switches are reversed. At this time, the contents of the shift register are simply the n − k parity check bits.

Decoding
• The cyclic structure of these codes makes it possible to implement syndrome computation and the decoding process using shift registers, with considerably less complexity than for the general class of linear block codes.
  – A table lookup may be performed to identify the most probable error vector.
  – Note that if the code is used for error detection, a nonzero syndrome detects an error in transmission of the codeword.

Maximum-Length Shift Register Codes
• The following table can be used to generate binary sequences that repeat only after $2^m - 1$ clock cycles.
• As such, the m-bit words that result are unique, and an $(n,k) = (2^m - 1, m)$ code could be generated (the dual codes of the cyclic Hamming codes).

BCH Codes
• Bose-Chaudhuri-Hocquenghem (BCH)
• From Wikipedia: http://en.wikipedia.org/wiki/BCH_code
  – One of the key features of BCH codes is that during code design, there is a precise control over the number of symbol errors correctable by the code. In particular, it is possible to design binary BCH codes that can correct multiple bit errors.
  – BCH codes are used in applications such as satellite communications,[2] compact disc players, DVDs, disk drives, solid-state drives[3] and two-dimensional bar codes.
• Table 7.10-1 provides generators of primitive BCH codes based on the desired (n,k) and t

A Portion of the Table

BCH Code Performance (Figure 6.23)

MATLAB Block Code Examples
• ChCode_MPSK_BJB
  – BPSK, QPSK, or 8-PSK time symbols at a fixed 1 Mbps channel symbol bit rate (1 Msps for BPSK, ½ Msps for QPSK, and 1/3 Msps for 8-PSK)
  – Linear block channel encoding
    • (3,1) with t = 1, (6,3) with t = 1, (7,4) with t = 1, and (8,2) with t = 2
  – Comparison curve of the channel symbol bit error rate versus the corrected bit error rate for the data stream
• See the Word document for a range of results.

Alternate Lecture Slides on BCH
• http://www.signal.uu.se/Staff/sf/sf.html
• Sorour Falahati, PhD, Research Engineer
  – Uppsala Universitet, Sweden
  – Digital Communications I: Modulation and Coding Course – 2009 – Lecture 9

7.11 Reed-Solomon Codes
• Reed-Solomon codes are a special class of nonbinary BCH codes that were first introduced by Reed and Solomon.
• A good overview can be found at:
  – http://www.cs.cmu.edu/~guyb/realworld/reedsolomon/reed_solomon_codes.html
• MATLAB information
  – http://www.mathworks.com/help/comm/ug/error-detection-and-correction.html#bsxtjo1

Reed-Solomon Codes
• Nonbinary cyclic codes with symbols consisting of m-bit sequences
  – (n,k) codes of m-bit symbols exist for all n and k where
$$0 < k < n < 2^m + 2$$
  – Convenient example:
$$(n,k) = (2^m - 1,\ 2^m - 1 - 2t)$$
  – An "extended code" could use $n = 2^m$ and become a perfect-length hexadecimal or byte-length word.
• R-S codes achieve the largest possible code minimum distance for any linear code with the same encoder input and output block lengths!
$$d_{min} = n - k + 1, \qquad t = \left\lfloor\frac{d_{min}-1}{2}\right\rfloor = \left\lfloor\frac{n-k}{2}\right\rfloor$$

Comparative Advantage to Binary
• For a (7,3) binary code:
  – $2^7 = 128$ n-tuples
  – $2^3 = 8$ codewords (one per 3-bit message)
  – 8/128 = 1/16 of the n-tuples are codewords
• For a (7,3) R-S code with 3-bit symbols (t = 2):
  – $(2^3)^7 = 2^{21} = 2{,}097{,}152$ n-tuples
  – $(2^3)^3 = 2^9 = 512$ codewords (one per 3-symbol message)
  – $2^9/2^{21} = 1/2^{12} = 1/4{,}096$ of the n-tuples are codewords
• Significantly increased Hamming distances are possible!

Reed-Solomon Code Options
• m = 3
  – (7,5) 3-bit symbols, t = 1
  – (7,3) 3-bit symbols, t = 2
• m = 4
  – (15,13) 4-bit symbols, t = 1
  – (15,11) 4-bit symbols, t = 2
  – (15,9) 4-bit symbols, t = 3
  – (15,7) 4-bit symbols, t = 4
  – (15,5) 4-bit symbols, t = 5
• Byte-wide coding, m = 8
  – (255,223) 8-bit symbols, t = 16
  – (255,239) 8-bit symbols, t = 8
• t represents the number of correctable m-bit "symbol" errors
• Note: the symbols may be transmitted as M-ary elements (e.g., m = 3 with 8-PSK or m = 4 with 16-QAM)

R-S Error Probability
• Useful for burst-error correction
  – Numerous systems suffer from burst errors
• Symbol error probability:
$$P_E \approx \frac{1}{2^m - 1}\sum_{j=t+1}^{2^m-1} j\binom{2^m-1}{j}p^j(1-p)^{2^m-1-j}$$
• The bit error probability can be upper bounded by the symbol error probability for specific modulation types. For MFSK,
$$\frac{P_B}{P_E} = \frac{2^{m-1}}{2^m - 1}$$

Example 7.11-2

R-S and Finite Fields
• R-S codes use generator polynomials
  – Encoding may be done in a systematic form
  – Operations (addition, subtraction, multiplication, and division) must be defined for the m-bit symbol systems.
• Galois fields (GF) allow these operations to be readily defined

R-S Encoding/Decoding
• Done similarly to binary cyclic codes
  – GF math is performed for the multiplication and addition of the feedback polynomial
• U(X) = m(X) × g(X), with the parity p(X) computed
• Syndrome computation is performed
• Errors are detected and corrected, but with higher complexity. A binary error calls for flipping a bit, but what about an m-bit symbol?
  – r(X) = U(X) + e(X)
  – Must determine the error location and the error value …

Reed-Solomon Summary
• Widely used in data storage and communications protocols
• You may need to know more in the future (systems you work with may use it)

7.12 Coding for Channels with Burst Errors
• Most of the well-known codes that have been devised for increasing reliability in the transmission of information are effective when the errors caused by the channel are statistically independent.
• However, there are channels that exhibit bursty error characteristics.
• Such error clusters are not usually corrected by codes that are optimally designed for statistically independent errors.
• Some of the codes designed for random error correction, i.e., nonburst errors, have the capability of burst error correction.
• Reed-Solomon is a prime example!

Burst Errors
• Result in a series of bits or symbols being corrupted.
• Causes:
  – Signal fading (cell phone Rayleigh fading)
  – Lightning or other "impulse noise" (radar, switches, etc.)
  – Rapid transients
  – CD/DVD damage
• See Wikipedia for references: http://en.wikipedia.org/wiki/Burst_error

Burst Error Correction Capability
• The burst error correction capability of a code is defined as 1 less than the length of the shortest uncorrectable burst.
• It is relatively easy to show that a systematic (n,k) code, which has n − k parity check bits, can correct bursts of length $b \le \lfloor (n-k)/2 \rfloor$.
• For Reed-Solomon codes, this capability is potentially on the order of t × m bits. Any burst of up to (t − 1)m + 1 bits touches at most t symbols and is therefore always correctable.
• An effective method for dealing with burst error channels is to interleave the coded data so that the bursty channel is transformed into a channel with independent errors. This turns long bursts into multiple short bursts!

Interleaving
• Convolutional codes are suitable for memoryless channels with random error events.
• Some errors have a bursty nature:
  – Statistical dependence among successive error events (time correlation) due to the channel memory.
    • Examples are errors in multipath fading channels in wireless communications and errors due to switching noise, …
• "Interleaving" makes the channel look like a memoryless channel at the decoder.

Digital Communications I: Modulation and Coding Course, Period 3 – 2006, Sorour Falahati, Lecture 13

Interleaving (continued)
• Interleaving is done by spreading the coded symbols in time (interleaving) before transmission.
• The reverse is done at the receiver by deinterleaving the received sequence.
• "Interleaving" makes bursty errors look random. Hence, convolutional codes can be used.
• Types of interleaving:
  – Block interleaving
  – Convolutional or cross interleaving

Interleaving (continued)
• Consider a code with t = 1 and 3 coded bits per codeword.
• A burst error of length 3 cannot be corrected:
  – A1 A2 A3 B1 B2 B3 C1 C2 C3: one codeword receives 2 errors
• Let us use a 3 × 3 block interleaver:
  – Interleaver: A1 A2 A3 B1 B2 B3 C1 C2 C3 → A1 B1 C1 A2 B2 C2 A3 B3 C3
  – Deinterleaver: A1 B1 C1 A2 B2 C2 A3 B3 C3 → A1 A2 A3 B1 B2 B3 C1 C2 C3
  – The burst now causes 1 error in each of the three codewords, and each is correctable.

A Block Interleaver
• A block interleaver formats the encoded data in a rectangular array of m rows and n columns. Usually, each row of the array constitutes a codeword of length n. An interleaver of degree m consists of m rows (m codewords) as illustrated in Figure 7.12–2.
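The 3 × 3 example generalizes to the m-row block interleaver just described. The Python sketch below assumes each row holds one n-symbol codeword, and the function names and symbol labels are illustrative. The interleaver writes symbols row by row and reads them out column by column, and the deinterleaver reverses this. The sketch compares how a 3-symbol channel burst is distributed over the codewords with and without interleaving.

```python
def block_interleave(symbols, m, n):
    """Write m codewords of n symbols row by row; read the m x n array column by column."""
    if len(symbols) != m * n:
        raise ValueError("expected m * n symbols")
    return [symbols[row * n + col] for col in range(n) for row in range(m)]

def block_deinterleave(symbols, m, n):
    """Invert block_interleave: write column by column, read row by row."""
    if len(symbols) != m * n:
        raise ValueError("expected m * n symbols")
    out = [None] * (m * n)
    for idx, s in enumerate(symbols):
        col, row = divmod(idx, m)
        out[row * n + col] = s
    return out

def errors_per_codeword(received, hit, m, n):
    """Count how many corrupted symbols land in each n-symbol codeword."""
    return [sum(s in hit for s in received[r * n:(r + 1) * n]) for r in range(m)]

data = ["A1", "A2", "A3", "B1", "B2", "B3", "C1", "C2", "C3"]

# No interleaving: a burst over channel positions 2..4 hits A3, B1, B2
print(errors_per_codeword(data, set(data[2:5]), 3, 3))   # [1, 2, 0]

# With interleaving: the same channel positions now hold C1, A2, B2
tx = block_interleave(data, 3, 3)
print(tx)   # ['A1', 'B1', 'C1', 'A2', 'B2', 'C2', 'A3', 'B3', 'C3']
rx = block_deinterleave(tx, 3, 3)
print(errors_per_codeword(rx, set(tx[2:5]), 3, 3))       # [1, 1, 1]
```

In general, a burst of up to m consecutive channel symbols places at most one error in each codeword, so a t = 1 code can correct it.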
Convolutional Interleaving
• A simple banked switching and delay structure can be used, as proposed by Ramsey and Forney.
  – Interleave after encoding and prior to transmission
  – Deinterleave after reception but prior to decoding

Forney Reference
• G. Forney, Jr., “Burst-Correcting Codes for the Classic Bursty Channel,” IEEE Transactions on Communication Technology, vol. 19, no. 5, pp. 772–781, October 1971.

Convolutional Example
• Data fills the commutator registers
• Output sequence (in repeating blocks of 16):
  – 1 14 11 8
  – 5 2 15 12
  – 9 6 3 16
  – 13 10 7 4
  – 1 14 11 8
  – 5 2 15 12
  – 9 6 3 16
  – 13 10 7 4

7.13 Combining Codes
• Block codes with longer block lengths can achieve larger minimum distances and thus better error-correction performance. The problem, however, is that the decoding complexity of a block code generally increases with the block length, and this dependence is in general exponential. Therefore, improved performance through using block codes is achieved at the cost of increased decoding complexity.
• One approach to designing block codes with long block lengths and manageable complexity is to begin with two or more simple codes with short block lengths and combine them in a certain way to obtain codes with longer block lengths that have better distance properties.
• Some kind of suboptimal decoding can then be applied to the combined code, based on the decoding algorithms of the simple constituent codes.
  – Product Codes
  – Concatenated Codes

Product Codes
• A simple method of combining two or more codes is described in this section. Let us assume we have two systematic linear block codes; code C_i is an (n_i, k_i) code with minimum distance d_min,i for i = 1, 2. The product of these codes is an (n_1 n_2, k_1 k_2) linear block code whose bits are arranged in matrix form as shown in Figure 7.13–1.

Concatenated codes
• A concatenated code uses two levels of coding, an inner code and an outer code (higher rate).
  – Popular concatenated codes: convolutional codes with Viterbi decoding as the inner code and Reed-Solomon codes as the outer code
• The purpose is to reduce the overall complexity while still achieving the required error performance.
• Block diagram:
  – Transmitter: Input data → Outer encoder → Interleaver → Inner encoder → Modulate → Channel
  – Receiver: Channel → Demodulate → Inner decoder → Deinterleaver → Outer decoder → Output data

Practical example: Compact Disc
“Without error correcting codes, digital audio would not be technically feasible.”
• The channel in a CD playback system consists of a transmitting laser, a recorded disc, and a photodetector.
• Sources of errors are manufacturing defects, fingerprints, or scratches.
• Errors are bursty in nature.
• Error correction and concealment are done using a concatenated error control scheme called the cross-interleaved Reed-Solomon code (CIRC).

CD CIRC Specifications
• Maximum correctable burst length: 4000 bits (2.5 mm track length)
• Maximum interpolatable burst length: 12,000 bits (8 mm)
• Sample interpolation rate: one sample every 10 hours at P_B = 10^-4; 1000 samples/min at P_B = 10^-3
• Undetected error samples (clicks): less than one every 750 hours at P_B = 10^-3; negligible at P_B = 10^-4
• New discs are characterized by P_B = 10^-4

Compact disc – cont’d
• CIRC encoder and decoder:
  – Encoder: interleave → C2 encode → D* interleave → C1 encode → D interleave
  – Decoder: D deinterleave → C1 decode → D* deinterleave → C2 decode → deinterleave

CD Encoder Process
• 16-bit left audio and 16-bit right audio samples (24-byte frame, i.e., six left/right sample pairs)
• RS codes with 8-bit symbols, both shortened from RS(255, 251):
  – RS(255, 251) with 24 used symbols and 227 unused symbols: equivalent to RS(28, 24)
  – RS(255, 251) with 28 used symbols and 223 unused symbols: equivalent to RS(32, 28)
• Overall rate: (24/28) × (28/32) = 24/32 = 3/4

CD Decoder Process
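The shortened-code arithmetic on the CD Encoder Process slide, and the resulting overall rate, can be checked with a few lines of Python. This sketch covers parameter bookkeeping only and is not a Reed-Solomon encoder. The helper name `shorten_rs` is hypothetical. The code assumes shortening is done by fixing the unused information symbols of RS(255, 251) to zero, which keeps all n − k = 4 parity symbols.

```python
from fractions import Fraction


def shorten_rs(n_mother, k_mother, k_used):
    """Return (n, k, unused) for an RS code shortened by zeroing unused info symbols.

    Shortening keeps the n - k parity symbols and drops (k_mother - k_used)
    information symbols, so n = n_mother - (k_mother - k_used).
    """
    unused = k_mother - k_used
    return n_mother - unused, k_used, unused


# Both CIRC codes are derived from RS(255, 251) over 8-bit symbols.
outer_n, outer_k, outer_unused = shorten_rs(255, 251, 24)  # C2 (outer, encoded first)
inner_n, inner_k, inner_unused = shorten_rs(255, 251, 28)  # C1 (inner)

print(outer_n, outer_k, outer_unused)  # 28 24 227
print(inner_n, inner_k, inner_unused)  # 32 28 223

# Each shortened code keeps n - k = 4 parity symbols, so it can correct
# t = (n - k) // 2 = 2 symbol errors.
print((outer_n - outer_k) // 2, (inner_n - inner_k) // 2)  # 2 2

# The overall rate of the concatenation is the product of the two code rates.
rate = Fraction(outer_k, outer_n) * Fraction(inner_k, inner_n)
print(rate)  # 3/4
```

Using `Fraction` keeps the rate exact. The intermediate 28-symbol length cancels, leaving 24/32.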