The General Linear Model


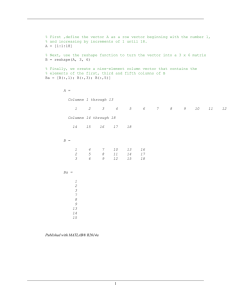

http://elsa.berkeley.edu/sst/regression.html

The garden variety linear model can be written as

$$Y = X\beta + U$$

A. Assumptions

1. The design matrix, n observations on each of k variables, is fixed in repeated samples of size n. This implies that $X$, of dimension $n \times k$, is not stochastic. Also, $n > k$.

2. The $n \times k$ design matrix is of full column rank. That is, the columns of the design matrix are linearly independent. The implication is that the columns of $X$ form a basis for a k-dimensional subspace of $\mathbb{R}^n$.

3.a. The n-dimensional disturbance vector $U$ consists of n i.i.d. random variables such that

$$E(U) = 0, \qquad E(UU') = \sigma^2 I_n$$

where $\sigma^2$ is an unknown parameter. Or,

b. The disturbance vector is an n-variate normal random variable.

The assumption that the design matrix be nonstochastic is unnecessarily stringent. We need only assume that the disturbances and the independent variables are independent of one another.

B. Statement of the Model

Let $Y$ be an $n \times 1$ vector of observations on a dependent variable. For example, the crime rate in each of a number of communities at a point in time, or in one community over a number of time periods.

Let $X$ be n observations on each of k independent variables, $n > k$. For example, distance of the community from the urban center, relative wealth of the community, and probability of apprehension.

While $Y$ is an n-dimensional vector, we have only k variables to explain it. This leaves us with two observations. First, we have too many equations, n, and too few unknowns, k. Second, we will need a rule for mapping the n-vector into the k-dimensional space spanned by the columns of $X$.

We postulate the following linear model

$$Y = X\beta + U$$

where $\beta$ is a $k \times 1$ parameter vector and $U$ is an $n \times 1$ disturbance vector. $\beta$ is not observable.

C. Least Squares Estimation of the Slope Coefficients

1. The Estimator

We wish to choose $\hat{\beta}$ to minimize the sum of squared deviations between the observed values of the dependent variable and the fitted values for our given data on $X$.
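The least squares criterion just described can be made concrete with a small sketch. The Python below is illustrative only: the toy data, the function name `sum_of_squares`, and the two candidate coefficient vectors are assumptions introduced here, not part of the original notes. It evaluates the sum of squared deviations between observed and fitted values for each candidate.

```python
# Hypothetical toy data: n = 4 observations on k = 2 regressors
# (a constant and one explanatory variable). Values are illustrative only.
X = [
    [1.0, 0.0],
    [1.0, 1.0],
    [1.0, 2.0],
    [1.0, 3.0],
]
Y = [1.0, 3.0, 5.0, 7.0]  # constructed as 1 + 2*x with no disturbance


def sum_of_squares(X, Y, b):
    """Return the sum over i of (Y_i - X_i b)^2 for a candidate coefficient list b."""
    total = 0.0
    for row, y in zip(X, Y):
        # Fitted value for this observation: inner product of the row with b.
        fitted = sum(x_ij * b_j for x_ij, b_j in zip(row, b))
        total += (y - fitted) ** 2
    return total


q_exact = sum_of_squares(X, Y, [1.0, 2.0])  # candidate that reproduces the toy data
q_other = sum_of_squares(X, Y, [0.0, 2.0])  # intercept off by one unit
print(q_exact, q_other)  # prints: 0.0 4.0
```

A smaller value means the candidate reproduces the observed Y more closely. Least squares selects the candidate with the smallest such value.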
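In scalar form, the quantity to be minimized is

$$\sum_{i=1}^{n} (Y_i - X_i\hat{\beta})^2$$

where $X_i$ denotes the ith realization of the k independent variables, that is, the ith row of $X$. In vector notation we wish to minimize, by our best guess $\hat{\beta}$ for the unknown parameter vector, a quadratic form which we will denote by $Q$:

$$Q = (Y - X\hat{\beta})'(Y - X\hat{\beta}) = Y'Y - 2\hat{\beta}'X'Y + \hat{\beta}'X'X\hat{\beta}$$

We proceed in the usual fashion by deriving the k first order conditions

$$\frac{\partial Q}{\partial \hat{\beta}} = -2X'Y + 2X'X\hat{\beta}$$

Set each of the equations to zero and solve for the unknown parameters:

$$X'X\hat{\beta} = X'Y \quad\Longrightarrow\quad \hat{\beta} = (X'X)^{-1}X'Y$$

Note that in solving the system of equations for the k unknowns it was critical that the columns of $X$ be linearly independent. Were they not independent, $X'X$ would be singular and it would not have been possible to construct the necessary inverse.

2. The Mean of the Estimator

$$E(\hat{\beta}) = E\left[(X'X)^{-1}X'Y\right] = E\left[(X'X)^{-1}X'(X\beta + U)\right] = \beta + (X'X)^{-1}X'E(U)$$

We should note several things: Expectation is a linear operator. The error term is assumed to have a mean of zero. $X'X$ cancels with its inverse. Initially we assumed that $X$ is nonstochastic. So

$$E(\hat{\beta}) = \beta$$

The least squares estimator is linear in $Y$, and by substitution it is linear in the error term. It is also unbiased.

3. The Variance of the Estimator

Our starting point is the definition of the variance of any random variable.

$$\operatorname{Var}(\hat{\beta}) = E\left[(\hat{\beta} - E(\hat{\beta}))(\hat{\beta} - E(\hat{\beta}))'\right]$$

Substituting in from the expression for the mean of the parameter vector, and using $\hat{\beta} - \beta = (X'X)^{-1}X'U$,

$$\operatorname{Var}(\hat{\beta}) = E\left[(\hat{\beta} - \beta)(\hat{\beta} - \beta)'\right] = E\left[(X'X)^{-1}X'UU'X(X'X)^{-1}\right]$$

Again, since the X are nonstochastic and expectation is a linear operator we can cut right to the heart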