FOREWORD

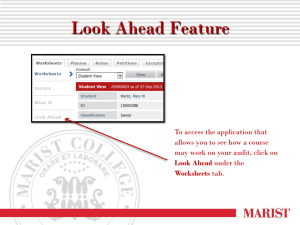
