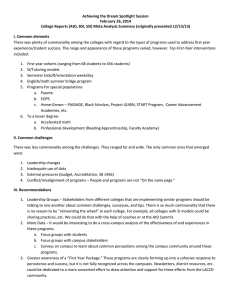

Progress in the First Five Years: An Evaluation of Achieving the Dream
