Kernel methods for comparing distributions, measuring dependence Le Song

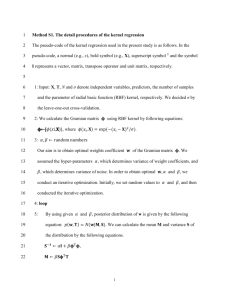


Machine Learning II: Advanced Topics, CSE 8803ML, Spring 2012

Principal component analysis

Given a set of $M$ centered observations $x_k \in \mathbb{R}^d$, PCA finds the direction that maximizes the variance. With $X = (x_1, x_2, \ldots, x_M)$,

$$w^* = \arg\max_{\|w\| \le 1} \frac{1}{M} \sum_k (w^\top x_k)^2 = \arg\max_{\|w\| \le 1} \frac{1}{M} w^\top X X^\top w .$$

With $C = \frac{1}{M} X X^\top$, $w^*$ can be found by solving the eigenvalue problem

$$C w = \lambda w .$$

Alternative expression for PCA

The principal component lies in the span of the data:

$$w = \sum_k \alpha_k x_k = X\alpha .$$

Plugging this in, we have

$$C w = \frac{1}{M} X X^\top X \alpha = \lambda X \alpha .$$

Furthermore, for each data point $x_k$, the following relation holds:

$$x_k^\top C w = \frac{1}{M} x_k^\top X X^\top X \alpha = \lambda\, x_k^\top X \alpha, \quad \forall k .$$

In matrix form,

$$\frac{1}{M} X^\top X X^\top X \alpha = \lambda X^\top X \alpha .$$

This only depends on the inner product matrix $X^\top X$.

Kernel PCA

Key idea: replace the inner product matrix by a kernel matrix.

PCA: $\frac{1}{M} X^\top X X^\top X \alpha = \lambda X^\top X \alpha$

Map $x_k \mapsto \phi(x_k)$, with $\Phi = (\phi(x_1), \ldots, \phi(x_M))$ and $K = \Phi^\top \Phi$. The nonlinear component is $w = \Phi\alpha$.

Kernel PCA: $\frac{1}{M} K K \alpha = \lambda K \alpha$, equivalent to $\frac{1}{M} K \alpha = \lambda \alpha$

First form an $M$ by $M$ kernel matrix $K$, and then perform an eigendecomposition of $K$.

Kernel PCA example

Gaussian RBF kernel $\exp\left(-\frac{\|x - x'\|^2}{2\sigma^2}\right)$ over a 2-dimensional space.

The eigenvector evaluated at a test point $x$ is a function:

$$w^\top \phi(x) = \sum_k \alpha_k\, k(x_k, x) .$$

Spectral clustering

• Form the kernel matrix $K$ with a Gaussian RBF kernel
• Treat the kernel matrix $K$ as the adjacency matrix of a graph (set the diagonal of $K$ to 0)
• Construct the graph Laplacian $L = D^{-1/2} K D^{-1/2}$, where $D = \mathrm{diag}(K \mathbf{1})$
• Compute the top $k$ eigenvectors $V = (v_1, v_2, \ldots, v_k)$ of $L$
• Use $V$ as the input to K-means for clustering

Canonical correlation analysis

Given paired observations of $x$ and $y$, estimate two basis vectors $w_x$ and $w_y$ so that the correlation of the projections onto these vectors is maximized.
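Returning to the kernel PCA recipe above (form the $M$ by $M$ kernel matrix $K$, then eigendecompose $\frac{1}{M} K$), the following is a minimal NumPy sketch, not the course's reference implementation. The function names `rbf_kernel` and `kernel_pca` are hypothetical. Two assumptions are made here that the slides do not spell out: observations are stored as rows rather than columns, and the kernel matrix is centered with $H = I - \frac{1}{M}\mathbf{1}\mathbf{1}^\top$ so that the "centered observations" requirement also holds in feature space.

```python
import numpy as np


def rbf_kernel(A, B, sigma=1.0):
    """Gaussian RBF kernel matrix with entries exp(-||a - b||^2 / (2 sigma^2))."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))


def kernel_pca(X, n_components=2, sigma=1.0):
    """Kernel PCA on X (M x d, one observation per row).

    Returns (alpha, lam, Z): coefficient vectors, eigenvalues of K/M,
    and the projections of the training points onto each component.
    """
    M = X.shape[0]
    K = rbf_kernel(X, X, sigma)
    # Assumption: center the features in feature space via H K H.
    H = np.eye(M) - np.ones((M, M)) / M
    Kc = H @ K @ H
    # Solve (1/M) K alpha = lambda alpha; eigh returns ascending eigenvalues.
    eigvals, eigvecs = np.linalg.eigh(Kc / M)
    order = np.argsort(eigvals)[::-1][:n_components]
    lam, alpha = eigvals[order], eigvecs[:, order]
    # Rescale so that w = Phi alpha has unit norm, i.e. alpha^T Kc alpha = 1.
    alpha = alpha / np.sqrt(M * lam)
    # w^T phi(x_j) = sum_k alpha_k k(x_k, x_j), evaluated at the training points.
    Z = Kc @ alpha
    return alpha, lam, Z


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(50, 2))
    alpha, lam, Z = kernel_pca(X, n_components=2, sigma=1.0)
    print(Z.shape)
```

The rescaling step assumes the selected eigenvalues are strictly positive, which holds for the leading components of an RBF kernel on distinct points.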
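CCA derivation II

Define the covariance matrix of $x, y$:

$$C = \begin{pmatrix} C_{xx} & C_{xy} \\ C_{yx} & C_{yy} \end{pmatrix} .$$

The optimization problem is equal to

$$\max_{w_x, w_y} \frac{w_x^\top C_{xy} w_y}{\sqrt{w_x^\top C_{xx} w_x \; w_y^\top C_{yy} w_y}} .$$

We can require the following normalization, and just maximize the numerator:

$$w_x^\top C_{xx} w_x = 1, \qquad w_y^\top C_{yy} w_y = 1 .$$

CCA as generalized eigenvalue problem

The optimality conditions say

$$C_{xy} w_y = \lambda C_{xx} w_x, \qquad C_{yx} w_x = \lambda C_{yy} w_y .$$

Put these conditions into matrix format:

$$\begin{pmatrix} 0 & C_{xy} \\ C_{yx} & 0 \end{pmatrix} \begin{pmatrix} w_x \\ w_y \end{pmatrix} = \lambda \begin{pmatrix} C_{xx} & 0 \\ 0 & C_{yy} \end{pmatrix} \begin{pmatrix} w_x \\ w_y \end{pmatrix} .$$

This is a generalized eigenvalue problem $A w = \lambda B w$.

CCA in inner product format

Similar to PCA, the directions of projection lie in the span of the data $X = (x_1, \ldots, x_m)$, $Y = (y_1, \ldots, y_m)$:

$$w_x = X\alpha, \qquad w_y = Y\beta ,$$

$$C_{xy} = \frac{1}{m} X Y^\top, \qquad C_{xx} = \frac{1}{m} X X^\top, \qquad C_{yy} = \frac{1}{m} Y Y^\top .$$

Earlier we have

$$\max_{w_x, w_y} \frac{w_x^\top C_{xy} w_y}{\sqrt{w_x^\top C_{xx} w_x \; w_y^\top C_{yy} w_y}} .$$

Plugging in $w_x = X\alpha$, $w_y = Y\beta$ (the factors of $\frac{1}{m}$ cancel), we have

$$\max_{\alpha, \beta} \frac{\alpha^\top X^\top X \, Y^\top Y \beta}{\sqrt{\alpha^\top X^\top X X^\top X \alpha \;\; \beta^\top Y^\top Y Y^\top Y \beta}} .$$

Data only appear in inner products.

Kernel CCA

Replace the inner product matrix by a kernel matrix:

$$\max_{\alpha, \beta} \frac{\alpha^\top K_x K_y \beta}{\sqrt{\alpha^\top K_x K_x \alpha \;\; \beta^\top K_y K_y \beta}} ,$$

where $K_x$ is the kernel matrix for data $X$, with entries $K_x(i, j) = k(x_i, x_j)$.

Solve the generalized eigenvalue problem

$$\begin{pmatrix} 0 & K_x K_y \\ K_y K_x & 0 \end{pmatrix} \begin{pmatrix} \alpha \\ \beta \end{pmatrix} = \lambda \begin{pmatrix} K_x K_x & 0 \\ 0 & K_y K_y \end{pmatrix} \begin{pmatrix} \alpha \\ \beta \end{pmatrix} .$$

Comparing two distributions

For two Gaussian distributions $P(X)$ and $Q(X)$ with unit variance, simply test $H_0: \mu_1 = \mu_2$.

For general distributions, test $H_0: P(X) = Q(X)$; we can also use the KL-divergence.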
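$$KL(P \,\|\, Q) = \int_X P(X) \log \frac{P(X)}{Q(X)} \, dX$$

Given a set of samples $x_1, \ldots, x_m \sim P(X)$ and $x'_1, \ldots, x'_n \sim Q(X)$, the mean can be estimated directly:

$$\mu_1 \approx \frac{1}{m} \sum_i x_i .$$

For the KL-divergence, we need to estimate the density function first:

$$\int_X P(X) \log \frac{P(X)}{Q(X)} \, dX \approx \frac{1}{m} \sum_i \log \frac{P(x_i)}{Q(x_i)} .$$

Embedding distributions into feature space

Summary statistics for distributions: mean, covariance, expected features $\mu_X = E_{X \sim P}[\phi(X)]$.

Pick a kernel, and generate a different summary statistic.

Pictorial view of embedding distribution

Transform the entire distribution to expected features.

[Figure: a distribution mapped through the feature map into feature space.]

Finite sample approximation of embedding

One-to-one mapping from $P(X)$ to $\mu_X$ for certain kernels (RBF kernel).

The sample average $\hat{\mu}_X = \frac{1}{m} \sum_i \phi(x_i)$ converges to the true mean at rate $O_p(m^{-1/2})$.

Embedding Distributions: Mean

The mean reduces the entire distribution to a single number (1D feature space). Representation power is very restricted.

Embedding Distributions: Mean + Variance

Mean and variance reduce the entire distribution to two numbers (2D feature space). A richer representation, but not enough.

Embedding with kernel features

Transform the distribution to an infinite dimensional vector. Rich representation: the feature space captures mean, variance, and higher order moments.

Estimating embedding distances

Given samples $x_1, \ldots, x_m \sim P(X)$ and $x'_1, \ldots, x'_{m'} \sim Q(X)$, the distance can be expressed as inner products:

$$\|\mu_X - \mu_{X'}\|^2 = \langle \mu_X, \mu_X \rangle - 2 \langle \mu_X, \mu_{X'} \rangle + \langle \mu_{X'}, \mu_{X'} \rangle .$$

Estimating embedding distance

Finite sample estimator: form a kernel matrix over both samples with 4 blocks, and average each block:

$$\|\hat{\mu}_X - \hat{\mu}_{X'}\|^2 = \frac{1}{m^2} \sum_{i,j} k(x_i, x_j) - \frac{2}{m m'} \sum_{i,j} k(x_i, x'_j) + \frac{1}{m'^2} \sum_{i,j} k(x'_i, x'_j) .$$

Optimization view of embedding distance

Optimization problem:

$$\|\mu_X - \mu_{X'}\|^2 = \sup_{\|w\| \le 1} \langle w, \mu_X - \mu_{X'} \rangle^2 = \sup_{\|w\| \le 1} \langle w, E_{X \sim P}[\phi(X)] - E_{X \sim Q}[\phi(X)] \rangle^2 .$$

Witness function:

$$w^* = \frac{E_{X \sim P}[\phi(X)] - E_{X \sim Q}[\phi(X)]}{\|E_{X \sim P}[\phi(X)] - E_{X \sim Q}[\phi(X)]\|} = \frac{\mu_X - \mu_{X'}}{\|\mu_X - \mu_{X'}\|} \approx \frac{\frac{1}{m} \sum_i \phi(x_i) - \frac{1}{m'} \sum_i \phi(x'_i)}{\left\|\frac{1}{m} \sum_i \phi(x_i) - \frac{1}{m'} \sum_i \phi(x'_i)\right\|} .$$

Plot the witness function values

$$w^*(x) = w^{*\top} \phi(x) \propto \frac{1}{m} \sum_i k(x_i, x) - \frac{1}{m'} \sum_i k(x'_i, x)$$

Example: Gaussian and Laplace distributions with the same mean and variance (using a Gaussian RBF kernel).

Application of kernel distance measure

Covariate shift correction

Training and test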
data are not from the same distribution. We want to reweight the training data points to match the distribution of the test data points:

$$\arg\min_{\alpha \ge 0,\ \|\alpha\|_1 = 1} \left\| \sum_i \alpha_i \phi(x_i) - \frac{1}{m'} \sum_i \phi(y_i) \right\|^2 .$$

Embedding Joint Distributions

Transform the entire joint distribution to expected features.

[Figure: the joint distribution maps to the cross covariance; to the features (1, X mean, Y mean, cov.); and to higher order features.]

Embedding Joint: Finite Sample

[Figure: in feature space, the embedding is formed from weights on the feature-mapped data points.] [Smola, Gretton, Song and Scholkopf. 2007]

Measure Dependence via Embeddings

Use the squared distance in feature space to measure dependence between X and Y.

Dependence measure useful for:
• Dimensionality reduction
• Clustering
• Matching
• …

[Smola, Gretton, Song and Scholkopf. 2007]

Estimating embedding distances

Given samples $(x_1, y_1), \ldots, (x_m, y_m) \sim P(X, Y)$, the dependence measure can be expressed as inner products:

$$\|\mu_{XY} - \mu_X \otimes \mu_Y\|^2 = \left\| E_{XY}[\phi(X) \otimes \psi(Y)] - E_X[\phi(X)] \otimes E_Y[\psi(Y)] \right\|^2$$

$$= \langle \mu_{XY}, \mu_{XY} \rangle - 2 \langle \mu_{XY}, \mu_X \otimes \mu_Y \rangle + \langle \mu_X \otimes \mu_Y, \mu_X \otimes \mu_Y \rangle .$$

Kernel matrix operation, with $H = I - \frac{1}{m} \mathbf{1}\mathbf{1}^\top$:

$$\mathrm{trace}(H K_x H K_y), \qquad K_x(i, j) = k(x_i, x_j), \quad K_y(i, j) = k(y_i, y_j) .$$

The X and Y data are ordered in the same way.

Optimization view of the dependence measure

Optimization problem:

$$\|\mu_{XY} - \mu_X \otimes \mu_Y\|^2 = \sup_{\|w\| \le 1} \langle w, \mu_{XY} - \mu_X \otimes \mu_Y \rangle^2, \qquad w^* \propto \mu_{XY} - \mu_X \otimes \mu_Y .$$

Witness function:

$$w^*(x, y) = w^{*\top} (\phi(x) \otimes \psi(y)) .$$

Example: a distribution with two stripes, compared against the uniform distribution over $[-1, 1] \times [-1, 1]$.

Application of dependence measure

• Independent component analysis: transform the time series such that the resulting signals are as independent as possible (minimize kernel dependence)
• Feature selection: choose a set of features such that its dependence with the labels is as large as possible (maximize kernel dependence)
• Clustering: generate labels for each data point such that the dependence between the labels and the data is maximized (maximize kernel dependence)
• Supervised dimensionality reduction: reduce the dimension of the data such that its dependence with side
information is maximized (maximize kernel dependence)

PCA vs. Supervised dimensionality reduction

[Figure: 20 news groups.]

Supervised dimensionality reduction

[Figure: 10 years of NIPS papers: Text + Coauthor networks.]

Visual Map of LabelMe Images

Imposing Structures to Image Collections

Lay out (sort/organize) images according to image features, maximizing their dependence with an external structure. Adjacent points on the grid are similar.

High dimensional image features: color feature, texture feature, SIFT feature, composition description.

Compare to Other Methods

Other layout algorithms do not have exact control of what structure to impose.

• Kernel Embedding Method [Quadrianto, Song and Smola 2009]
• Generative Topographic Map (GTM) [Bishop et al. 1998]
• Self-Organizing Map (SOM) [Kohonen 1990]
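As a recap of the two estimators described earlier, the embedding distance (averaging the four kernel blocks) and the dependence measure (the trace of centered kernel matrices), here is a minimal NumPy sketch. The function names `mmd2_biased` and `hsic_biased` are hypothetical, the Gaussian RBF kernel with a shared bandwidth `sigma` is an illustrative choice, and the $\frac{1}{m^2}$ normalization on the trace is an assumption: it is what makes the trace equal the plug-in estimate of $\|\mu_{XY} - \mu_X \otimes \mu_Y\|^2$. Both are the biased plug-in forms, not necessarily the estimators used in the lecture's experiments.

```python
import numpy as np


def rbf_kernel(A, B, sigma=1.0):
    """Gaussian RBF kernel matrix with entries exp(-||a - b||^2 / (2 sigma^2))."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))


def mmd2_biased(X, Xp, sigma=1.0):
    """Plug-in estimate of ||mu_X - mu_X'||^2 from samples X (m x d), Xp (m' x d).

    Each term is the average of one block of the pooled kernel matrix;
    the cross block appears twice, hence the factor 2.
    """
    return (rbf_kernel(X, X, sigma).mean()
            - 2.0 * rbf_kernel(X, Xp, sigma).mean()
            + rbf_kernel(Xp, Xp, sigma).mean())


def hsic_biased(X, Y, sigma=1.0):
    """Plug-in estimate of ||mu_XY - mu_X (x) mu_Y||^2 from paired samples.

    Rows of X and Y must be ordered in the same way (row i of X pairs with
    row i of Y). The 1/m^2 factor is an assumption of this sketch.
    """
    m = X.shape[0]
    H = np.eye(m) - np.ones((m, m)) / m  # centering matrix I - (1/m) 1 1^T
    Kx = rbf_kernel(X, X, sigma)
    Ky = rbf_kernel(Y, Y, sigma)
    return np.trace(H @ Kx @ H @ Ky) / m ** 2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 1))
    Xp = rng.normal(loc=3.0, size=(200, 1))   # shifted distribution
    Y = X + 0.1 * rng.normal(size=(200, 1))   # strongly dependent on X
    print("embedding distance:", mmd2_biased(X, Xp))
    print("dependence measure:", hsic_biased(X, Y))
```

In the applications listed above, a quantity like `hsic_biased` would be the objective being minimized (independent component analysis) or maximized (feature selection, clustering, supervised dimensionality reduction).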