Document 14242142

advertisement

Speeding up Relational Reinforement Learning

Through the Use of an Inremental First Order

Deision Tree Learner

Kurt Driessens, Jan Ramon and Hendrik Blokeel

Department of Computer Siene K.U.Leuven

Celestijnenlaan 200A, B-3001 Leuven, Belgium

fkurt.driessens,jan.ramon,hendrik.blokeelgs.kuleuven.a.be

Abstrat. Relational reinforement learning (RRL) is a learning tehnique that ombines standard reinforement learning with indutive logi

programming to enable the learning system to exploit strutural knowledge about the appliation domain.

This paper disusses an improvement of the original RRL. We introdue

a fully inremental rst order deision tree learning algorithm TG and

integrate this algorithm in the RRL system to form RRL-TG.

We demonstrate the performane gain on similar experiments to those

that were used to demonstrate the behaviour of the original RRL system.

1

Introdution

Relational reinforement learning is a learning tehnique that ombines reinforement learning with relational learning or indutive logi programming. Due to

the use of a more expressive representation language to represent states, ations

and Q-funtions, relational reinforement learning an be potentially applied

to a wider range of learning tasks than onventional reinforement learning. In

partiular, relational reinforement learning allows the use of strutural representations, the abstration from spei goals and the exploitation of results from

previous learning phases when addressing new (more omplex) situations.

The RRL onept was introdued by Dzeroski, De Raedt and Driessens in

[5℄. The next setions fous on a few details and the shortomings of the original

implementation and the improvements we suggest. They mainly onsist of a

fully inremental rst order deision tree algorithm that is based on the G-tree

algorithm of Chapman and Kaelbling [4℄.

We start with a disussion of the implementation of the original RRL system.

We then introdue the TG-algorithm and disuss its integration into RRL to

form RRL-TG. Then an overview of some preliminary experiments is given to

ompare the performane of the original RRL system to RRL-TG and we disuss

some of the harateristis of RRL-TG.

Initialise Q^ 0 to assign 0 to all (s; a) pairs

Initialise Examples to the empty set.

e := 0

while true

generate an episode that onsists of states s0 to si and ations a0 to ai 1

(where aj is the ation taken in state sj ) through the use of a standard

^e

Q-learning algorithm, using the urrent hypothesis for Q

for j=i-1 to 0 do

generate example x = (sj ; aj ; q^j ),

^ e (sj +1 ; a0 )

where q^j := rj + maxa0 Q

if an example (sj ; aj ; q

^old ) exists in Examples, replae it with x,

else add x to Examples

^ e using TILDE to produe Q^ e+1 using Examples

update Q

for j=i-1 to 0 do

for all ations ak possible in state sj do

^ e+1

if state ation pair (sj ; ak ) is optimal aording to Q

then generate example (sj ; ak ; ) where = 1

else generate example (sj ; ak ; ) where = 0

update P^e using TILDE to produe Pe^+1 using these examples (sj ; ak ; )

e := e + 1

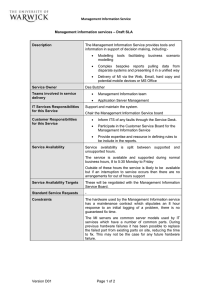

Fig. 1.

2

The RRL algorithm.

Relational Reinforement Learning

The RRL-algorithm onsists of two learning tasks. In a rst step, the lassial

Q-learning algorithm is extended by using a relational regression algorithm to

represent the Q-funtion with a logial regression tree, alled the Q-tree. In

a seond step, this Q-tree is used to generate a P-funtion. This P-funtion

desribes the optimality of a given ation in a given state, i.e., given a stateation pair, the P-funtion desribes whether this pair is a part of an optimal

poliy. The P-funtion is represented by a logial deision tree, alled the P-tree.

More information on logial deision trees (lassiation and regression) an be

found in [1, 2℄.

2.1 The Original RRL Implementation

Figure 1 presents the original RRL algorithm. The logial regression tree that

represents the Q-funtion in RRL is built starting from a knowledge base whih

holds orret examples of state, ation and Q-funtion value triplets. To generate

the examples for tree indution, RRL starts with running a normal episode

just like a standard reinforement learning algorithm [10, 9, 6℄ and stores the

states, ations and q-values enountered in a temporary table. At the end of

eah episode, this table is added to a knowledge base whih is then used for the

tree indution phase. In the next episode, the q-values predited by the generated

tree are used to alulate the q-values for the new examples, but the Q-tree is

generated again from srath.

The examples used to generate the P-tree are derived from the obtained Qtree. For eah state the RRL system enounters during an episode, it investigates

all possible ations and lassies those ations as optimal or not aording to the

q-values predited by the learned Q-tree. Eah of these lassiations together

with the aording states and ations are used as input for the P-tree building

algorithm.

Note that old examples are kept in the knowledge base at all times and never

deleted. To avoid having too muh old (and noisy) examples in the knowledge

base, if a state{ation pair is enountered more than one, the old example in

the knowledge base is replaed with a new one whih holds the updated q-value.

2.2 Problems with the original RRL

We identify four problems with the original RRL implementation that diminish

its performane. First, it needs to keep trak of an ever inreasing amount of

examples: for eah dierent state-ation pair ever enountered a Q-value is kept.

Seond, when a state-ation pair is enountered for the seond time, the new

Q-value needs to replae the old value, whih means that in the knowledge base

the old example needs to be looked up and replaed. Third, trees are built

from srath after eah episode. This step, as well as the example replaement

proedure, takes inreasingly more time as the set of examples grows. A nal

point is that leaves of a tree are supposed to identify lusters of equivalent stateation pairs, "equivalent" in the sense that they all have the same Q-value. When

updating the Q-value for one state-ation pair in a leaf, the Q-value of all pairs

in the leaf should automatially be updated; but this is not what is done in the

original implementation; an existing (state,ation,Q) example gets an updated

Q-value at the moment when exatly the same state-ation pair is enountered,

instead of a state-ation pair in the same leaf.

2.3 Possible Improvements

To solve these problems, a fully inremental indution algorithm is needed. Suh

an algorithm would relieve the need for the regeneration of the tree when new

data beomes available. However, not just any inremental algorithm an be

used. Q-values generated with reinforement learning are usually wrong at the

beginning and the algorithm needs to handle this appropriately.

Also an algorithm that doesn't require old examples to be kept for later referene, eliminates the use of old information to generalise from and thus eliminates

the need for replaement of examples in the knowledge base. In a fully inremental system, the problem of storing dierent q-values for similar but distint

state-ation pairs should also be solved.

In the following setion we introdue suh an inremental algorithm.

3

The TG Algorithm

3.1 The G-algorithm

We use a learning algorithm that is an extension of the G-algorithm [4℄. This

is a deision tree learning algorithm that updates its theory inrementally as

examples are added. An important feature is that examples an be disarded

after they are proessed. This avoids using a huge amount of memory to store

examples.

On a high level (f. Figure 2), the G-algorithm (as well as the new TGalgorithm) stores the urrent deision tree, and for eah leaf node statistis for

all tests that ould be used to split that leaf further. Eah time an example is

inserted, it is sorted down the deision tree aording to the tests in the internal

nodes, and in the leaf the statistis of the tests are updated.

reate an empty leaf

data available do

split data down to leafs

update statistis in leaf

if split needed then

grow two empty leafs

while

endwhile

Fig. 2.

The TG-algorithm.

The examples are generated by a simulator of the environment, aording to

some reinforement learning strategy. They are tuples (S tate; Ation; QV alue).

3.2 Extending to rst order logi

We extend the G algorithm in that we use a relational representation language

for desribing the examples and for the tests that an be used in the deision

tree. This has several advantages. First, it allows us to model examples that

an't be stored in a propositional way. Seond, it allows us to model the feature

spae. Even when it would be possible in theory to enumerate all features, as in

the ase of the bloks world with a limited number of bloks, a problem is only

tratable when a smaller number of features is used. The relational language

an be seen as a way to onstrut useful features. E.g. when there are no bloks

on some blok A, it is not useful to provide a feature to see whih bloks are

on A. Also, the use of a relational language allows us to struture the feature

spae as e.g. on(State,blok a,blok b) and on(State,blok ,blok d) are treated

in exatly the same way.

The onstrution of new tests happens by a renement operator. A more

detailed desription of this part of the system an be found in [1℄.

on(State,BlockB,BlockA)

yes

no

clear(State,BlockB)

yes

Qvalue = 0.5

no

Qvalue=0.4

Qvalue=0.3

on(State,BlockA,BlockB)

on(State,BlockC,BlockB)

...

clear(State,BlockA)

Fig. 3.

A logial deision tree

Figure 3 gives an example of a logial deision tree. The test in some node

should be read as the existentially quantied onjuntion of all literals in the

nodes in the path from the root of the tree to that node.

The statistis for eah leaf onsist of the number of examples on whih eah

possible test sueeds, the sum of their Q values and the sum of squared Q

values. Moreover, the Q value to predit in that leaf is stored. This value is

obtained from the statistis of the test used to split its parent when the leaf

was reated. Later, this value is updated as new examples are sorted in the leaf.

These statistis are suÆient to ompute whether some test is signiant, i.e.

the variane of the Q-values of the examples would be redued by splitting the

node using that partiular test. A node is split after some minimal number of

examples are olleted and some test beomes signiant with a high ondene.

In ontrast to the propositional system, keeping trak of the andidate-tests

(the renements of a query) is a non-trivial task. In the propositional ase the

set of andidate queries onsists of the set of all features minus the features that

are already tested higher in the tree. In the rst order ase, the set of andidate

queries onsists of all possible ways to extend a query. The longer a query is and

the more variables it ontains, the larger is the number of possible ways to bind

the variables and the larger is the set of andidate tests.

Sine a large number of suh andidate tests exist, we need to store them

as eÆiently as possible. To this aim we use the query paks mehanism introdued in [3℄. A query pak is a set of similar queries strutured into a tree;

ommon parts of the queries are represented only one in suh a struture. For

instane, a set of onjuntions f(p(X ); q (X )); (p(X ); r(X ))g is represented as a

term p(X ); (q (X ); r(X )). This an yield a signiant gain in pratie. (E.g., as-

suming a onstant branhing fator of b in the tree, storing a pak of n queries

of length l (n = bl ) takes O(nb=(b 1)) memory instead of O(nl).)

Also, exeuting a set of queries strutured in a pak on the examples requires onsiderably less time than exeuting them all separately. An empirial

omparison of the speeds with and without paks will be given in setion 1.

Even storing queries in paks requires muh memory. However, the paks

in the leaf nodes are very similar. Therefore, a further optimisation is to reuse

them. When a node is split, the pak for the new right leaf node is the same

as the original pak of the node. For the new left sub-node, we urrently only

reuse them if we add a test whih does not introdue new variables beause in

that ase the query pak in the left leaf node will be equal to the pak in the

original node (exept for the hosen test whih of ourse an't be taken again).

In further work, we will also reuse query paks in the more diÆult ase when a

test is added whih introdues new variables.

3.3 RRL-TG

To integrate the TG-algorithm into RRL we removed the alls to the TILDE

algorithm and use TG to adapt the Q-tree and P-tree when new experiene (in

the form of (S tate; Ation; QV alue) triplets) is available. Thereby, we solved the

problems mentioned in Setion 2.2.

{ The trees are no longer generated from srath after eah episode.

{ Beause TG only stores statistis about the examples in the tree and only

referenes these examples one (when they are inserted into the tree) the

need for remembering and therefore searhing and replaing examples is

relieved.

{ Sine TG begins eah new leaf with ompletely empty statistis, examples

have a limited life span and old (possibly noisy) q-value examples will be

deleted even if the exat same state-ation pair is not enountered twie.

Sine the bias used by this inremental algorithm is the same as with TILDE, the

same theories an be learned by TG. Both algorithms searh the same hypothesis

spae and although TG an be misled in the beginning due to its inremental

nature, in the limit the quality of approximations of the q-values will be the

same.

4

Experiments

In this setion we ompare the performane of the new RRL-TG with the original

RRL system. Further omparison with other systems is diÆult beause there

are no other implementations of RRL so far. Also, the number of rst order

regression tehniques is limited and a full omparison of rst order regression is

outside the sope of this paper.

In a rst step, we reran the experiments desribed in [5℄. The original RRL

was tested on the bloks world [8℄ with three dierent goals: unstaking all bloks,

staking all bloks and staking two spei bloks. Bloks an be on the oor

or an be staked on eah other. Eah state an be desribed by a set (list) of

fats, e.g., s1 = flear(a); on(a; b); on(b; ); on(; f loor)g. The available ations

are then move(x; y ) where x 6= y and x is a blok and y is a blok or the oor.

The unstaking goal is reahed when all bloks are on the oor, the staking goal

when only one blok is on the oor. The goal of staking two spei bloks (e.g.

a and b) is reahed when the fat on(a; b) is part of the state desription, note

however that RRL learns to stak any two spei bloks by generalising from

on(a; b) or on(; d) to on(X; Y ) where X and Y an be substituted for any two

bloks. In the rst type of experiments, a Q-funtion was learned for state-spaes

with 3, 4 and 5 bloks. In the seond type, a more general strategy for eah goal

was learned by using poliy learning on state-spaes with a varying number of

bloks.

Afterwards, we disuss some experiments to study the onvergene behaviour of the TG-algorithm and the sensitivity of the algorithm to some of its

parameters.

1

1

0.9

0.9

0.8

0.8

0.7

0.7

Average Reward

Average Reward

4.1 Fixed State Spaes

0.6

0.5

0.4

0.3

0.2

0

0

50

100

150

Number of Episodes

0.5

0.4

0.3

0.2

’stack 3’

’stack 4’

’stack 5’

0.1

0.6

’unstack 3’

’unstack 4’

’unstack 5’

0.1

0

200

0

50 100 150 200 250 300 350 400 450 500

Number of Episodes

1

0.9

Average Reward

0.8

0.7

0.6

0.5

0.4

0.3

0.2

’on(a,b) 3’

’on(a,b) 4’

’on(a,b) 5’

0.1

0

0

Fig. 4.

500 1000 1500 2000 2500 3000 3500 4000 4500 5000

Number of Episodes

The learning urves for xed numbers of bloks

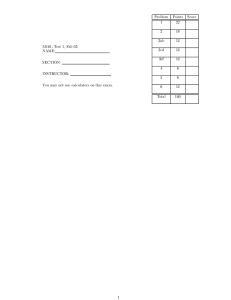

For the experiments with a xed number of bloks, the results are shown

in Figure 4. The generated poliies were tested on 10 000 randomly generated

examples. A reward of 1 was given if the goal-state was reahed in the minimal

number of steps. The graphs show the average reward per episode. This gives an

indiation of how well the Q-funtion generates the optimal poliy. Compared to

the original system, the number of episodes needed for the algorithm to onverge

to the orret poliy is muh larger. This an be explained by two harateristis

of the new system. Where the original system would automatially orret its

mistakes when generating the next tree by starting from srath, the new system

has to ompensate for the mistakes it makes near the root of the tree | due

to faulty Q-values generated at the beginning | by adding extra branhes to

the tree. To ompensate for this fat a parameter has been added to the TGalgorithm whih speies the number of examples that need to be led in a leaf,

before the leaf an be split. These two fators | the overhead for orreting

mistakes and the delay put on splitting leaves | ause the new RRL system to

onverge slower when looking at the number of episodes.

However, when omparing timing results the new system learly outperforms

the old one, even with the added number of neessary episodes. Table 1 ompares

the exeution times of RRL-TG with the timings of the RRL system given in

[5℄. For the original RRL system, running experiments in state spaes with more

than 5 bloks quikly beame impossible. Learning in a state-spae with 8 bloks,

RRL-TG took 6.6 minutes to learn for 1000 episodes, enough to allow it to

onverge for the staking goal.

Table 1.

Exeution time of the RRL algorithm on Sun Ultra 5/270 mahines.

Original RRL

Staking (30 episodes)

Unstaking (30 episodes)

On(a,b) (30 episodes)

RRL-TG

Staking (200 episodes)

without paks Unstaking (500 episodes)

On(a,b) (5000 episodes)

RRL-TG

Staking (200 episodes)

with paks

Unstaking (500 episodes)

On(a,b) (5000 episodes)

3 bloks

4 bloks

5 bloks

6.15 min

62.4 min

306 min

8.75 min not stated not stated

20 min not stated not stated

19.2 se

26.5 se

39.3 se

1.10 min

1.92 min

2.75 min

25.0 min

57 min

102 min

8.12 se

11.4 se

16.1 se

20.2 se

35.2 se

53.7 se

5.77 min

7.37 min

11.5 min

4.2 Varying State Spaes

In [5℄ the strategy used to ope with multiple state-spaes was to start learning

on the smallest and then expand the state-spae after a number of learning

episodes. This strategy worked for the original RRL system beause examples

were never erased from the knowledge base, so when hanging to a new statespae the examples from the previous one would still be used to generate the Qand P-trees.

However, if we apply the same strategy to RRL-TG, the TG-algorithm rst

generates a tree for the small state-spae and after hanging to a larger statespae, it expands the tree to t the new state spae, ompletely forgetting what

it has learned by adding new branhes to the tree, thereby making the tests in

the original tree insigniant. It would never generalise over the dierent statespaes. Instead, we oer a dierent state-spae to RRL-TG every episode. This

way, the examples the TG-algorithm uses to split leaves will ome from dierent

state-spaes and allow RRL-TG to generalise over them. In the experiments on

the bloks world, we varied the number of bloks between 3 and 5 while learning.

To make sure that the examples are spread throughout several state-spaes we

set the minimal number of examples needed to split a leaf to 2400, quite a bit

higher than for xed state-spaes. As a result, RRL-TG requires a higher number

of episodes to onverge.

At episode 15000, P-learning was started. Note that sine P-learning depends

on the presene of good Q-values, in the inremental tree learning setting it is

unwise to start building P-trees from the beginning, beause the Q-values at

that time are misleading, ausing suboptimal splits to be inserted into the P-tree

in the beginning. Due to the inremental nature of the learning proess, these

suboptimal splits are not removed afterwards, whih in the end leads to a more

omplex tree that is learned more slowly. By letting P-learning start only after

a reasonable number of episodes, this eet is redued, although not neessarily

entirely removed (as Q-learning ontinues in parallel with P-learning).

1

0.9

Average Reward

0.8

0.7

0.6

0.5

0.4

0.3

0.2

’stack Var’

’unstack Var’

’on(a,b) Var’

0.1

0

0

5000

10000

15000

20000

25000

30000

Number of Episodes

Fig. 5. The learning urves for varying numbers of bloks. P-learning is started at

episode 15 000.

Figure 5 shows the learning urves for the three dierent goals. The generated

poliies were tested on 10 000 randomly generated examples with the number of

bloks varying between 3 and 10. The rst part of the urves indiate how well

the poliy generated by the Q-tree performs. From episode 15000 onwards, when

P-learning is started, the test results are based on the P-tree instead of the Qtree; on the graphs this is visible as a sudden drop of performane bak to almost

zero, followed by a new learning urve (sine P-learning starts from srath). It

an be seen that P-learning onverges fast to a better performane level than Qlearning attained. After P-learning starts, the Q-tree still gets updated, but no

longer tested. We an see that although the Q-tree is not able to generalise over

unseen state-spaes, the P-tree | whih is derived from the Q-tree | usually

an.

The jump to an auray of 1 in the Q-learning phase for staking the bloks

is purely aidental, and disappears when the Q-tree starts to model the enountered state-spaes more aurately. The generated Q-tree at that point in

time is shown in Figure 6. Although this tree does not represent the orret

Q-funtion in any way, it does | by aident | lead to the orret poliy for

staking any number of bloks. Later on in the experiment, the Q-tree will be

muh larger and represent the Q-funtion muh loser, but it does not represent

an overall optimal poliy anymore.

state(S),ation_move(X,Y),numberofbloks(S,N)

height(S,Y,H),diff(N,H,D),D<2 ?

yes:

qvalue(1.0)

no:

height(S,B,C),height(S,Y,D), D < C ?

yes:

qvalue(0.149220955007456)

no:

qvalue(0.507386078412001)

Fig. 6.

The Q-tree for Staking after 4000 episodes

The algorithm did not onverge for the On(A; B ) goal but we did get better

performane than the original RRL. This is probably due to the fat that we

were able to learn more episodes.

4.3 The Minimal Sample Size

We deided to further explore the behaviour of the RRL-TG algorithm with

respet to the delay parameter whih speies the minimal sample size for TG

to split a leaf. To test the eet of the minimal sample size, we started RRL-TG

for a total of 10 000 episodes and started P-learning after 5000 episodes. We then

varied the minimal sample size from 50 to 2000 examples. We ran these tests

with the unstaking goal.

Figure 7 shows the learning urves for the dierent settings, Table 2 shows

the sizes of the resulting trees after 10 000 episodes ( with P-learning starting at

1

0.9

0.8

Average Reward

0.7

0.6

0.5

0.4

0.3

’50’

’400’

’1000’

’2000’

0.2

0.1

0

0

Fig. 7.

2000

4000

6000

Number of Episodes

8000

10000

The learning urves for varying minimal sample sizes

episode 5000. The algorithm does not onverge for a sample size of 50 examples.

Even after P-learning optimality is not reahed. The algorithm makes too many

mistakes by relying on too small a set of examples when hoosing a test. With

an inreasing sample size omes a slower onvergene to the optimal poliy. For

a sample size of 1000 examples, the dip in the learning urve during P-learning

is aused by a hange in the Q-funtion (whih is still being learned during the

seond phase). When looking at the sizes of the generated trees, it is obvious

that the generated poliies benet from a larger minimal sample size, at the ost

of slower onvergene. Although experiments with a smaller minimal sample size

have to orret for their early mistakes by building larger trees, they often still

sueed in generating an optimal poliy.

Table 2.

The generated tree sizes for varying minimal sample sizes

50 400 1000 2000

Q-tree 31 26 16

9

P-tree 9 10

8

8

5

Conluding Remarks

This paper desribed how we upgraded the G-tree algorithm of Chapman and

Kaelbling [4℄ to the new TG-algorithm and by doing so greatly improved the

speed of the RRL system presented in [5℄.

We studied the onvergene behaviour of RRL-TG with respet to the minimal sample size TG needs to split a leaf. Larger sample sizes mean slower

onvergene but smaller funtion representations.

We are planning to apply the RRL algorithm to the Tetris game to study

the behaviour of RRL in more omplex domains than the bloks world.

Future work will ertainly inlude investigating other representation possibilities for the Q-funtion. Work towards rst order neural networks and Bayesian

networks [7℄ seems to provide promising alternatives.

Further work on improving the TG algorithm will inlude the use of multiple

trees to represent the Q- and P-funtions and further attempts to derease the

amount of used memory. Also, some work should be done to automatially nd

the moment to start P-learning and the optimal minimal sample size. Both will

inuene the size of the generated poliy and the onvergene speed of TG-RRL.

Aknowledgements

The authors would like to thank Saso Dzeroski, Lu De Raedt and Maurie

Bruynooghe for their suggestions onerning this work. Jan Ramon is supported

by the Flemish Institute for the Promotion of Siene and Tehnologial Researh

in Industry (IWT). Hendrik Blokeel is a post-dotoral fellow of the Fund for

Sienti Researh of Flanders (FWO-Vlaanderen).

Referenes

1. H. Blokeel and L. De Raedt. Top-down indution of rst order logial deision

trees. Artiial Intelligene, 101(1-2):285{297, June 1998.

2. H. Blokeel, L. De Raedt, and J. Ramon. Top-down indution of lustering trees.

In Proeedings of the 15th International Conferene on Mahine Learning, pages

55{63, 1998. http://www.s.kuleuven.a.be/~ml/PS/ML98-56.ps.

3. H. Blokeel, B. Demoen, L. Dehaspe, G. Janssens, J. Ramon, and H. Vandeasteele.

Exeuting query paks in ILP. In J. Cussens and A. Frish, editors, Proeedings of

the 10th International Conferene in Indutive Logi Programming, volume 1866

of Leture Notes in Artiial Intelligene, pages 60{77, London, UK, July 2000.

Springer.

4. David Chapman and Leslie P. Kaelbling. Input generalization in delayed reinforement learning: An algorithm and performane omparisions. In Proeedings of the

International Joint Conferene on Artiial Intelligene, 1991.

5. S. Dzeroski, L. De Raedt, and K. Driessens. Relational reinforement learning.

Mahine Learning, 43:7{52, 2001.

6. L. Kaelbling, M. Littman, and A. Moore. Reinforement learning: A survey.

Journal of Artiial Intelligene Researh, 4:237{285, 1996.

7. K. Kersting and L. De Raedt. Bayesian logi programs. In Proeedings of the tenth

international onferene on indutive logi programming, work in progress trak,

2000.

8. P. Langley. Elements of Mahine Learning. Morgan Kaufmann, 1996.

9. T. Mithell. Mahine Learning. MGraw-Hill, 1997.

10. R. Sutton and A. Barto. Reinforement Learning: an introdution. The MIT Press,

Cambridge, MA, 1998.