The Infinite Hidden Markov Model (Part 1) Pfunk

advertisement

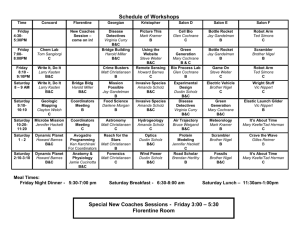

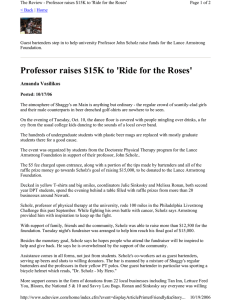

The Infinite Hidden Markov Model (Part 1) Pfunk November 11, 2011 J. Scholz (RIM@GT) 08/26/2011 1 Motivation: modeling sequential data • Laser ranges or observations (localization) • Phonemes (speech recognition) • Visual features (tracking) • Human Actions (learning from demonstration J. Scholz (RIM@GT) 08/26/2011 2 The classical HMM • Encodes markov assumption for state transitions • Each state has it’s own distribution over possible observations (“emissions”) J. Scholz (RIM@GT) 08/26/2011 3 Parameterizing an HMM Transition matrix (N x N) Emission matrix (N x M) (Both are conditional probability tables) J. Scholz (RIM@GT) 08/26/2011 4 Things people might want to do with an HMM • • Sample a hidden state trajectory • • • Find most likely sequence (Viterbi) • Learn the underlying CPTs (Baum-Welsch/EM) Filtering/Smoothing to find hidden state marginals (forward-backward) Evaluate likelihood of a hidden state trajectory Evaluate likelihood of an emission sequence (dynamic programming) J. Scholz (RIM@GT) 08/26/2011 5 Information Required? • • Sample a hidden state trajectory CPTs, Emissions Filtering/Smoothing to find hidden state marginals (forward-backward) CPTs, Emissions • • • Find most likely sequence (Viterbi) CPTs, Emissions • Learn the underlying CPTs (Baum-Welsch/EM) Evaluate likelihood of a hidden state trajectory CPTs, Emissions Evaluate likelihood of an emission sequence (dynamic programming) J. Scholz (RIM@GT) 08/26/2011 CPTs Emissions 6 Running Example • • • 10 States 6 Emission tokens Emission Sequence: 10 copies of “ABCDEFEDCB” Perfect Model (log-likelihood = 0) J. Scholz (RIM@GT) 08/26/2011 7 Things WE might want to do with an HMM • First figure out the number of states! • Then do the rest of that stuff J. Scholz (RIM@GT) 08/26/2011 8 Infinite Version Will let us get from here: • R.runHDP(50); J. Scholz (RIM@GT) 08/26/2011 9 Infinite Version To here: • R.runHDP(50); J. Scholz (RIM@GT) 08/26/2011 10 Learning the CPTs • Fixed-size versions can use EM approach • Won’t work for DP (why??) • Can’t compute analytical marginals over an infinite number of states • DP is a generative model, so we’ll use MCMC instead J. Scholz (RIM@GT) 08/26/2011 11 Gibbs Sampling the state sequence • Algorithm: • Iterate from t=1:end • Resample S(t) given markov blanket * • Update CPTs accordingly ** *How do we condition on the MB? ** Decrement old transition/emission, increment new J. Scholz (RIM@GT) 08/26/2011 12 Likelihoods in an HMM • Forward likelihoods are rows • Backward likelihoods are columns J. Scholz (RIM@GT) 08/26/2011 13 Adding the dirichlet proces • Replace the fixed size CPT with a DP version • each row is it’s own DP • problems? • Yes! Separate DP’s have no common support. Doesn’t work for a CPT J. Scholz (RIM@GT) 08/26/2011 14 Dirichlet Processes Dirichlet Processes A Dirichlet Process (DP) is aDefinition distribution over probability Definition chlet Processes measures. A Dirichlet Process (DP) is a distribution ov tion A Dirichlet Process (DP) is a distribution over probability A DP has two parameters: measures. Quick review of DP math measures. Base Process distribution H, which is like the mean of the DP. A Dirichlet (DP) is a distribution over probability A DP has two parameters: A DP has two parameters: • A DP has two parameters: measures. Strength parameter α, which an mean inverse-variance thethe DP. distribution H, which of is like mean Base distribution H, whichisislike likeBase the of the DP. A DP has two parameters: Strength parameter Base distribution H,α, which is like thean mean of the DP α, which Strength parameter which is like inverse-variance of the is DP.like an inv We write: • • Base distribution H, which is like the mean of the DP. We write: We write: Strength parameter α, which is likeα,an inverse-variance of the DP. of the DP Strength parameter which is like an inverse-variance We write: G ∼GDP(α, H) ∼ DP(α, H) G ∼ DP(α, H) We write: • forX:any partition (A1 , . . . , An ) of X: for any partition (A G, .∼.(A .DP(α, ,1 ,A. .n.)H) ofn )ifX: if for any partition ,A of 1 if for any partition (A , A. .n .) ,of X: n )) ∼ Dirichlet(αH(A 1 , . .1.), (G(A1 ),1.),. . ., .G(A Dirichlet(αH( (G(A G(A , αH(A )) n )) n∼ (G(A1 ), . . . , G(An )) ∼ Dirichlet(αH(A1 ), . . . , αH(An )) (G(A1 ), . . . , G(An )) ∼ Dirichlet(αH(A1 ), . . . , αH(An )) A4 A1 A1 A1 A3 A2 Yee Whye Teh (Gatsby) Yee Whye Teh (Gatsby) Whye Teh (Gatsby) J. Scholz (RIM@GT) A2 A5 6 3 A2 A6 A3A A A5 A1 A4 A4 A4 A6 A3 A2 A5 A5 Yee Whye Teh (Gatsby) DP and HDP Tutorial DP and HDP Tutorial DP and 08/26/2011 HDP Tutorial Mar 1, 2007 / CUED DP and HDP Tutorial Mar 1, 2007 / CUED 5 / 53 5 / 53 try it! >> Prelim 2.1 Mar 1, 2007 / CUED 155 / 5 A closer look • A DP has two parameters: • • • Base distribution H, which is like the mean of the DP Strength parameter α, which is like an inverse-variance of the DP We write: What is the form of H? ∼ G ∼ DP(α, H) if for any partition (A1 , . . . , An ) of X: (G(A1 ), . . . , G(A ∼n )) ∼ Dirichlet(αH(A1 ), . . . , αH(An )) A4 A1 A3 A2 J. Scholz (RIM@GT) A6 A5 08/26/2011 16 What is the form of H? • Can be any distribution defined over our event space (e.g. gaussian) • continuous or discrete: both legal • only condition is that it has to return a density for any partition A we give it J. Scholz (RIM@GT) 08/26/2011 17 Topics • Discrete N-D probability distributions (categorical, multinomial, dirichlet) • • Dirichlet Process Definition Dirichlet Process Metaphors • • • Polya Urn Chinese Restaurant Process Stick-breaking process J. Scholz (RIM@GT) 08/26/2011 18 So where is the Process in a DP? • 3 Metaphors: • Polya-urn • Involves drawing and replacing balls from an urn • Chinese Restaurant • Involves customers sitting at tables in proportion to their popularity • Stick-breaking • Involves breaking off pieces of a stick of unit length J. Scholz (RIM@GT) 08/26/2011 19 So where is the Process in a DP? • 3 Metaphors: • Polya-urn • Involves drawing and replacing balls from an urn • Chinese Restaurant • Involves customers sitting at tables in proportion to their popularity • Stick-breaking • J. Scholz (RIM@GT) Involves breaking off pieces of a stick of unit length 08/26/2011 20 The Polya-urn Scheme ya’s Urn Scheme Pòlya’s urn scheme produces a sequence θ1 , θ2 , . . . with the Polya urn scheme produces a sequence θ1, θ2, . . . with the following conditionals: • following conditionals: eq 1: θn |θ1:n−1 ∼ �n−1 δθi + αH n−1+α i=1 number of θi colored balls Imagine picking balls of different colors from an urn: Imagine picking balls of different colors from an urn: • Start with no balls in the urn. with probability ∝ α, draw θ ∼ H, and add a ball of Start with no balls in the urn. • that color into the urn. With probability α,pick drawa θball∼ at H,random and add from a ball of that With ∝ n −∝1, • probability n n color into the the urn, record θn tourn. be its color, return the ball into the urn and place a second ball of same color into urn. With probability ∝ n − 1, pick a ball at random from the urn, record θn to be its color, return the ball into the urn and place a second ball of same color into urn. • Yee Whye Teh (Gatsby) J. Scholz (RIM@GT) DP and HDP Tutorial 08/26/2011 Mar 1, 2007 / CUED 10 / 53 21 Polya sampling in practice • Equation 1 is of the form (p)f(Ω) + (1-p)g(Ω) • Implies that proportion p of density is associated with f, so we can split the task in half: • first flip a bern(p) coin. If heads, draw from f, if tails, draw from g • for polya urn, gives us either a sample from existing balls (f), or a new color (g)* *if g is a continuous density on Ω, then the probability of sampling an existing cluster from g is zero. (why?) J. Scholz (RIM@GT) 08/26/2011 22 ya’s Urn Scheme Analyzing Polya Urn One (infinitely long) “run” of our process ∼ ADP(α, is a random probability measure. draw GH) ∼ DP(α, H) is a random probability measure. A draw G ∼ DP(α,H) is a random probability measure • Treating G as a distribution, consider i.i.d. draws from as a distribution, consider i.i.d. draws from G:G: • Treating G as a distribution, consider i.i.d. draws from G: θi |G ∼ G • θi |G ∼ G One component drawn from G Marginalizing out G, marginally each H, while the conditional i ∼ Marginalizing out G, each θi ∼θH, while the conditional ng out G, marginally each θi ∼ H, while the conditional distributions are, distributions are: s are, �n−1 i=1 δθi + αH θn |θ1:n−1 ∼ �n−1 n − 1 + α δ + αH θn |θ1:n−1 ∼ • i=1 θi This is the Pòlya scheme. n −we 1 did + αin the Polya urn scheme* This isurn precisely what Pòlya urn * This is scheme. why people say the that the DP is the “De Finetti distribution underlying the Urn process. It’s what makes the θi exchangeable. (Since θi are i.i.d. ∼ G, their joint distribution is invariant to permutations) J. Scholz (RIM@GT) 08/26/2011 23 Problem for CPT • Each time one of the row DP’s draws a new state, it draws from H • However, each draw from H will be unique • Thus, each DP builds its own state representation J. Scholz (RIM@GT) 08/26/2011 24 Solution? • Need some way to allow each row DP to SHARE states, without limiting their number • Answer: another DP! • Each time a row DP tries to draw from it’s base distribution, we draw from a high-level DP (“Oracle”) • This oracle is shared across all row DPs J. Scholz (RIM@GT) 08/26/2011 25 Compared to infinite gaussian mixture model • Traded one form of complexity for another • In the iGMM, atoms were associated with Gaussian components (thus atoms had an associated mean and variance) • In the iHMM, atoms are not (necessarily) mixture components, but they need to be shared somehow J. Scholz (RIM@GT) 08/26/2011 26 Comparing the Graphical Models distribution over components π Zi GMM θ Xi distribution over values given components HMM Models using the i = 1, . . . , n iGMM modelling xi , while over parameters odel. G "i ei π Xi θ iHMM ! G0 ! % "0 Gj "0 $j xi (a) J. Scholz (RIM@GT) ∑ H H ! Si #ji zji xji xji nj J nj J (b) 08/26/2011 Figure 3: (a) A hierarchical Dirichlet process mixture model.27(b) A A little trick... • Original Paper used pure gibbs sampling approach with full generative model • Each step we decrement all DP data structures • What if we don’t want to mess around with the oracles? • Can re-draw the oracle counts after each sweep, and then redraw the oracle distribution from a dirichlet (problems?) J. Scholz (RIM@GT) 08/26/2011 28 Augmenting a dirichlet distribution online • In a DP, π is a distribution over all represented states, plus the probability of sampling a new state • New state probability was proportional to number of “black balls” • If we sample a new state, can augment π using the “stick-breaking construction” J. Scholz (RIM@GT) 08/26/2011 29 Original iHMM (Beal et al. 2002) nij nii + ! # nij + " + ! j self transition a) b) c) d) " # nij + " + ! #nij + " + ! j j existing transition oracle j=i njo $ # njo + $ # njo + $ existing state new state j j Figure 1: (left) State transition generative mechanism. (right a-d) Sampled state trajectories of length T = 250 (time along horizontal axis) from the HDP: we give examples of four modes of behaviour. (a) α = 0.1, β = 1000, γ = 100, explores many states with a sparse transition matrix. (b) 10 2500 e ! α = 0, β = 0.1, γ = 100, retraces multiple interacting trajectory segments. (c) α = 8, β = 2, γ = 2, switches between ea few different states. (d) α = 1, β = 1, γ = 10000, has strict left-to-right transition miq +long ! linger time.2000 dynamics#q with 2 miq #miq + !e q existing emission oracle 1500 Under the oracle, with probability proportional to γ an entirely new state10 is transitioned to. This is the only mechanism 1000 for visiting new states from the infinitely many available to e m " us. After each transition we set nij ← nij + 1 and, if we transitioned to the state j via the q o o oracle DP just described then o e o e 500in addition we set nj ← nj + 1. If we transitioned to a new mq + " mq + " o #qstate then the size # qof n and n will increase. 1 existing new 0 0 0.5 1 1.5 10over which the Self-transitions aresymbol special because their probability defines 2a time 2.5scale symbol 0 20 40 x 10 dynamics of the hidden state evolves. We assign a finite prior mass α to self transitions for each state; this is the third hyperparameter in our model. Therefore, when first visited (via 2: (left) State emission generative mechanism. (middle) Word occurence γ in the HDP), its self-transition count is initialised to α. 0 4 Figure 60 80 100 for entire Alice novel: each is assigned a unique integeris identity it appears. Wordinidentity The word full hidden state transition mechanism a two-levelasDP hierarchy shown decision (vertical) is plotted the position the text. (right) (Exp 1) under Evolution ofwith number of represented treeword form in Figure 1.(horizontal) Alongside areinshown typical state trajectories the prior J. Scholz against (RIM@GT) 08/26/2011 30 The Stick-Breaking Construction Stick-breaking Construction Stick-breaking Construction • But how do But draws ∼ draws DP(α,G H)∼look like? howGdo DP(α, H) look like? G is discreteGwith probability so: is discrete withone, probability one, so: mixing proportion But what do draws G ∼ DP(α,H) look like? • ∞ � G one, = so: πk δG θk∗ = G is discrete with probability k =1 ∞ � point mass πk δθk∗ k =1 The stick-breaking construction shows that G ∼ DP(α,H) if: • The stick-breaking construction shows thatshows G ∼ DP(α, The stick-breaking construction that GH) ∼ if: DP(α, H) if: πk = βk k� −1 l=1 (1 πk − = ββlk) k� −1 l=1 (1 − βl ) βkα) ∼ Beta(1, α) βk ∼ Beta(1, ! ∗ ∗ θk ∼ H θk ∼ H ! !(3) !(4) !(4) (5) (6) • !(6) !(2) !(3) !(1) !(2) !(1) !(5) WeGEM(α) write π∼ π (π . . distributed .) is distributed We write ∼ if πGEM(α) = if(ππ πif2(π , .= .1,.)π is21, ,distributed as above. 1, = Weπwrite π ∼ GEM(α) . π. .)2 , is as as above. above Yee Whye Teh (Gatsby) Yee Whye Teh (Gatsby) J. Scholz (RIM@GT) DP and HDP Tutorial DP and HDP Tutorial 08/26/2011 Mar 1, 2007 / CUED Mar 1, 2007 / CUED 15 / 53 31 15 The Stick-Breaking Construction Stick-breaking Construction • But how do draws G ∼ DP(α, H) look like? Why does this make G issense? discrete with probability one, so: • • ∞ � Draws from the beta(1,alpha) give G =a π δ distribution over the interval (0,1), which we The stick-breaking construction shows that G ∼ DP(α, H) if: can think of as where to break the stick k θk∗ k=1 k−1 � th sample The product scales the k ! l=1 ! β ∼ Beta(1, α) according to how much has been broken off ! k ! ∗ θk ∼ H ! already πk = βk (1 − βl ) !(1) (2) (3) (4) (5) (6) • We write π ∼ GEM(α) if π = (π1 , π2 , . . .) is distributed as above. In the limit we get another infinite partitioning of our interval [0,1], and therefore a (discrete) probability measure Yee Whye Teh (Gatsby) J. Scholz (RIM@GT) 08/26/2011 DP and HDP Tutorial Mar 1, 2007 / CUED 32 1