Physics-Based Reinforcement Learning for Mobile Manipulation

advertisement

Physics-Based Reinforcement

Learning for Mobile Manipulation

PhD Dissertation Defense

Jonathan Scholz

August 17, 2015

Committee:

Dr. Charles Isbell (IC, Georgia Institute of Technology)

Dr. Andrea Thomaz (IC, Georgia Institute of Technology)

Dr. Henrik Christensen (IC, Georgia Institute of Technology)

Dr. Magnus Egerstedt (ECE, Georgia Institute of Technology)

Dr. Michael Littman (CS, Brown University)

Thesis Vision

Robots operating in human environments

Learning behaviors from experience

Good Ideas

•

Self-guided

exploration

•

Shaping behavior

through feedback

Reinforcement Learning

Action at

Agent, State st

World

Reward rt

Next State st+1

➡

➡

➡

Pacman states: all positions of pacman, ghosts, food, & pellets

Pacman actions: {N,S,E,W}

Pacman rewards: -1 per step, +10 food, -500 die,+500 win,...

RL+Robotics: Prior work

16

•

•

•

Learning to Control a Low-Cost Manipulator us

Humanoid Walking

(Peters, Schaal, Vijayakumar 2003) Data-Efficient Reinforcement Learning

Acrobatic Helicopter

(Ng et al. 2003)

Marc Peter Deisenroth

Carl Edward Rasmussen

Dept. of Computer Science & Engineering

University of Washington

Seattle, WA, USA

Dept. of Engineering

University of Cambridge

Cambridge, UK

Dieter Fox

Dept. of Computer Science & Engine

University of Washington

Seattle, WA, USA

Fig. 3. Episodic natural actor-critic for learning dynamic movement primitives. (a)

Learning curves comparing the episodic Natural Actor Critic to episodic REINFORCE.

(b) Humanoid robot DB which was used for this task. Note that the variance of the

episodic Natural Actor Critic learning is significantly lower than the one of episodic

REINFORCE, with about 10 times faster convergence.

Abstract—Over the last years,

there has been substantial

Ball-in-Cup

progress in robust manipulation in unstructured environments.

long-term goal of our work is to get away from precise,

(Kolber 4.2

& Peters

2009) The

Example II: Optimizing Nonlinear

Motor

Primitives

for

but very

expensive

robotic

systems and to develop affordable,

Humanoid Motion Planningpotentially

imprecise, self-adaptive manipulator systems that can

interactively

perform

tasks such

as playing with children. In

While the previous example demonstrated

the feasibility

and performance

of

the Natural Actor Critic in a classicalthis

example

of

motor

control,

this

section

will

paper, we demonstrate how a low-cost off-the-shelf robotic

turn towards an application of optimizing nonlinear dynamic motor primitives

system

can learn closed-loop policies for a stacking task in only

for a humanoid robot. In [17, 16], a novel form of representing movement plans

a handful

of trials—from

scratch.

Our manipulator is inaccurate

(q d , q̇ d ) for the degrees of freedom (DOF)

of a robotic

system was suggested

in

and provides

no pose feedback. For learning a controller in the

terms of the time evolution of the nonlinear

dynamical systems

work space of a Kinect-style depth camera, we use a model-based

q̇d,k = h(qd,k , z k , gk , ⌧, ✓k )

(30)

reinforcement learning technique. Our learning method is data

where (qd,k , q̇d,k ) denote the desiredefficient,

position and

velocity model

of a joint,

z k and

the deals with several noise sources

reduces

bias,

internal state of the dynamic system,ingk atheprincipled

goal (or pointway

attractor)

state

of

during long-term

planning. We present a

each DOF, ⌧ the movement duration shared by all DOFs, and ✓k the open

way of

state-space

constraints into the learning

parameters of the function h. The original

workincorporating

in [17, 16] demonstrated

how

and analyze

thebylearning

the parameters ✓k can be learned to process

match a template

trajectory

means of gain by exploiting the sequential

supervised learning – this scenario is,structure

for instance,of

useful

as

the

first

step

of an

the stacking task.

Fig. 1. Low-cost robotic arm by Lynxmotion [1] performing a blo

task. Since the manipulator does not provide any pose feedback,

learns a controller directly in the task space using visual feedba

Kinect-style depth camera.

progress in

robust manipulation in unstructured environments. While existing techniques have the potential to solve various household

manipulation tasks, they typically rely on extremely expensive

robot hardware [12]. The long-term goal of our work is to

develop affordable, light-weight manipulator systems that can

interactively play with children. A key problem of cheap

a typical problem of model-based methods: P ILCO

a flexible probabilistic non-parametric Gaussian proc

dynamics model and takes model uncertainty con

into account during long-term planning. P ILCO lea

controllers from scratch, i.e., with random initializa

deep understanding of the system is required. In this p

show how obstacle information provided by the dept

can be incorporated into PILCO’s planning and lea

•

PILCO: Cart-Pole

(Diesenroth et al. 2011)

•

PILCO: Block-Stacking

(Diesenroth et al. 2011)

imitation learning system. Here we will add the ability of self-improvement of

the movement primitives in Eq.(30) by means of reinforcement learning,

which

I. I NTRODUCTION

is the crucial second step in imitation learning.

The system in Eq.(30) is a point-to-point

an episodic

Overmovement,

the lasti.e.,years,

theretaskhas been substantial

from the view of reinforcement learning – continuous (e.g., periodic) movement

3 71

0 7 A90 7

08

Not achieved

using RL methods

Limitations

Feedback

k

s

a

T

??

??

??

??

r

o

t

o

m

i

r

o

s

Sen

Decisions

Sensorimotor Level

What we see

What the robot sees

Pixel Intensities

2,073,618 Dimensional Time-Series!

Joint Currents

}

What the robot does

Physical Object Level

What we see

What the robot sees

Square Table Pose

Rectangular Table Pose

}

}

It is hard to imagine a truly intelligent agent that

does not conceive of the world in terms of objects

Net Applied Force-Torque (Square Table)

and their properties and relations to other objects.

- Leslie Kaelbling, 2001

9 Dimensional Time-Series

Reinforcement Learning

Method Comparison

Policy

Model

Horizon

Problem Space

Algorithm

Data

Ball in Cup

DMP

None

Short

Robot-Space

EM w/ Natural

Gradient

Autonomous

Humanoid Walking

RBF

None

Short

Robot-Space

LSTD w/ Natural

Gradient

Autonomous

Helicopter

Neural-Net

Locally Weighted

Regression

RBF

PILCO Cart-Pole

PILCO BlockStacking

Robotics

Cart Pushing

Domain General

Short

Robot-Space

Hill-Climbing w/

Monte-Carlo Eval.

LfD

Gaussian Process

Short

Robot-Space

CG/L-BFGS

Autonomous

Linear

Gaussian Process

Short

Mixed

CG/L-BFGS

Autonomous

Policy

Model

Horizon

Problem Space

Algorithm

Data

Long

Task-Space

A* Variant

N/A

Long

Task-Space

State Machine

N/A

Jacobian PD-Control, Geometric (Projected

Primitives

2D)

PR2 Towels

Scripted Grasp and

Manipulation Prim.

Implicit Cloth Physics

PR2 Socks

Scripted Grasp and

Manipulation Prim.

Implicit Cloth Physics

HRP-2 NAMO

ZMP Walking,

Jacobian PD Grasp

PR2 NAMO

HRP-2 MacGyver

Physics-Based

Long

Task-Space

State Machine

N/A

2D Rigid Body

Physics

Long

Task-Space

A* Variant

N/A

RRT Path Planner, PD

Control

2/3D Geometric

Simulation

Long

Mixed

Hierarchical

Backchaining

N/A

ZMP Walking,

Scripted Primitives

3D Physical

Simulation

Long

Mixed

A* Variant

N/A

Thesis statement

Physics-based Reinforcement Learning is a feasible and

efficient method for autonomous robot

manipulation, and enables adaptive behavior in

natural environments.

Reinfo

rceme

s

otic

nt Lea

Rob

rning

Thesis intuition

-3 3 3 0 7

09

0

Thesis Wish List

1. Whole-Body Manipulation

➡

Utilizes full robot capabilities

➡

Challenging domestic robotics

tasks

3. Stochastic Planning

➡

Handles model uncertainty

4. Model Learning

➡

Adapt to novel environments

5. Dynamic Constraints

➡

Capture rigid body behavior

2. Multi-Object Planning

A 97 3

1

Physics-based

Reinforcement Learning

3

2

Navigation Among Movable

Objects with Uncertain Dynamics

Online Learning for Navigation

Among Movable Objects

A 97 3

1

Physics-based

Reinforcement Learning

3

2

Navigation Among Movable

Objects with Uncertain Dynamics

Online Learning for Navigation

Among Movable Objects

Efficiency in Model-Based RL

Models let you

simulate experience

Online Learning Curves

Reward

Faster model

learning = faster

policy learning

Time

10

3

2

f (R6n+4 ) ! R1

3

f (x1 , . . . , fx , . . . ; x1 ) + ✏

3

2

3

05

+1

= 4 f (x1 , . . . .,.f.x , . . . ; x1 ) +5✏

.1

1

5

. . 5 = 4f (x1 , . . . , fx , . .. .. ;. ✓˙n ) + ✏

t+1

f (x1 , . . . , fx , . . . ; ✓˙n ) + ✏

State:

n

Object-Oriented MDPs

s = [ni=1 oi .attributes

s = [ni=1 oi .attributes

Diuk et al. (ICML 2007)

Attributes

Classes

Relations

x

Person

in(person, taxi)

y

Taxi

contactN(o1,o2)

inTaxi

Wall

contactS(o1,o2)

Destination

contactE(o1,o2)

Condition:

c = [R

k=1 rk (oi , oj ) 8i 6= j

c=

1

[R

k=1 rk (oi , oj )

8i 6= j

2

contactW(o1,o2)

s1≠s2≠s3 c1≠c2=c3

3

key idea

An Object-Oriented Representation for Effici

OO-MDP limitations

Discrete state-space

Deterministic boolean effects

go-right(s, c)

wall

no wall

OO-M

mode

No plant dynamics

erty o

object has

that w

velocity

tion.

throug

experiences

forces

When

s.x=s.x s.x=s.x + 1

tion

b

do-nothing(s,c)

Diuk et al. (ICML 2007)

lished

mines

tribute

s.x = ?

is defi

Figure 1. The taxi domain. (a) Original 5 × 5 Taxi problem. (b)

that ar

Extended 10 × 10 version, with a different wall distribution and 8

when

possible passenger locations and destinations.

i2N

EXTRA MATH FOR PROPOSAL

Model signature

(23)

P (s0 |s, a) = P (x01 , y10 , ✓10 , ẋ01 , ẏ10 , ✓˙10 , ..., x0n , yn0 , ✓n0 , ẋ0n ,(ICML

ẏn0 , ✓˙n0 |x1 ,2014)

y1 , ✓1 , ẋ1 , ẏ1 , ✓˙1 , .

(22)

Object-Oriented

• actions Regression

(20)

As a regression problem:

1) Define oriented collision predicates

(21)

contactθ=0(oi)

(22)

contactθ=20(oi)

a = [fx , ff(R

⌧, i]) | ! R6n

y ,6n+4

(24)

2) Use regression model

fx , fy , ⌧ 26n+4

R

for each

f (R condition

) ! R1

True

(25)

{

s’

2

i

3

2 2N

3

f (x1 , . . . , fx , . . . ; x1 ) + ✏

Model signature (26)

4 ... 5 = 4

5

...

f (x1 , . . . , fx , . . . ; ✓˙n ) + ✏

✓˙nt+1

(23) …

P (s0 |s, a) = P (x01 , y10 , ✓10 , ẋ01 , ẏ10 , ✓˙10 , ..., x0n , yn0 , ✓n0 , ẋ0n , ẏn0 , ✓˙n0 |x1 , y1 , ✓1 , ẋ1 , ẏ1 , ✓˙1

contactθ=180(oi) False

True

0

xt+1

1

As

a regression

problem:

(controlled

dynamical system)

is estimated online from avail-

a

s

where f (s, a; i ) denotes the predictor for the ith

able input-output measurements [15]. Adaptation is typically 6n+4

dimension parameterized

by i .2

6n

(24)

f (R Defining

) !X̃ R:= [s, a] for notational convenience,

done in two stages: (1) estimation of the plant parameters

Need 6n+4

ofcanthese

per

using a Parameter Adaptation Algorithm (PAA) (2) updating

predictors

be fit for

eachcondition

output dimension using

controller parameters based on the current plant parameter squares:

T

1

0

ˆ

estimates. PBR can be understood as a PAA generalized to 6n+4

1 i = (X̃ X̃) X̃si

(25)

f (R

) !R

support non-linear model estimation using Bayesian approxB.

Locally

Weighted State-Space Regression

imate inference. Alternative approaches to non-linear system

Locally-Weighted Regression is structured similarl

identification can be found in [?].

Finally, there are several results in object pushing with- introduces a query-dependent kernel whose role is to

out explicit physical knowledge [18], [24], however these notion of similarity that allows predictions to be bias

approaches are restricted to holonomic objects. We are inter- their nearby training points. The kernel function is us

⇤

j

C=[001100…01010]

ested in tasks

in human environments, which can frequently compute a positive distance wj = k(X̃ , X̃ ) betwee

query point X̃ ⇤ and each element X̃ j of the trainin

include objects with wheels and hinges.

used locallyThese weights are collected

into a diagonal matrix W

(240 dim, n=12)

used to produceweighted

predictions with

weighted least-squar

regression

III. OVERVIEW

Applied to entire scene

Existing work on data driven dynamics modeling for

robotics typically focuses on the low-level control problem

(e.g. [21]), and requires learning the dynamics of the robot

itself. Non-parametric regression is an appropriate choice

for these situations as model fidelity is critical and robot

mechanisms are generally complex and difficult to model.

However, the mobile manipulation tasks we consider here

are unique in that (a) tasks often involve many objects with

⇤

i

s0i

= ((X̃ T W X̃)

= X̃ ⇤T

1

X̃ T W yi 0

⇤

i

In contrast to the parametric approach in Eq. 3, the m

parameters ⇤ are re-computed for each query. As a

the regression coefficients are free to vary across the

space, allowing LWR to model nonlinear functions

least-squares.

OO-LWR limitations

Must discretize the collision space

Latex Math

θ5

θ4 θ3

Jonathan Scholz

θ6

θ⇤2

April

16,

2014

θ

7

θ8

) ! s0

θ9 θ10

θ1

|C| = n2P

θ12

C: number of conditions

n: number of objects

P: number of collision sectors

θ11

h) / P (h| )P ( )

Need exponential

number

0

0 of predicates!

= arg maxa P (s |s, a, )V (s )

3

2

0

s 1 a1 s 1

6 s2 a2 s02 7

6

7

h=6 .

..

.. 7

4 ..

.

. 5

sT

aT

s0T

Limits data

per condition

(1)

c1

c2

c3

c4

…

Dynamic physical inferences

Static physical inferences

Will it fall?

Which color is heavier?

Physical Understanding in Humans

speed, generality, a

speed, generality,

and

speed,

general

enough

forthe

theabil

pu

enough for theTo

purposes

ofth

e

enough

make for

this

pr

Tothe

make

th

To make this

proposal

con

specify

nature

the

na

specify the nature

of these

finespecify

grained

they

practice:

fineare.

grained

fine grainedneering

they

Heret

roundings

often

neering

practeh

neering practice:

People’s

relaxed

faultmuch

toler

roundings

oft

roundings often

have

generality

over

th

relaxed

fault

relaxed

fault

tolerances,

lea

Battaglia

et al.

2013

Fig. 1. Everyday scenes,

activities,

and art that evoke

strong

physical

speed,

generality,

and

the

ability

problems.

Our

ini

generality

ove

itions. (A) A cluttered workshop that

exhibits many

nuanced

physical pro

generality

over

the degree

eral-purpose

appro

enough

for

the

purposes

of

ever

ties. (B) A 3D object-based

representation

of

the

scene

in

A

that

can

sup

problems.

Ou

problems. Our

initial

IPE

m

Dynamics

Engine

physical inferences based on

simulation.

(C)

A precarious

stack of

dishesa

To

make

this

proposal

concre

eral-purpose

eral-purpose

approximation

t

approximate

rigidlike an accident waiting to happen. (D) A child exercises his physical reaso

specify Dynamics

the nature

of

these

app

Dynamics

En

Engine

(ODE)

(

Carlo

appr

by stacking blocks. (E) Jenga puts players’ physicalMonte

intuitions

to the

tes

approximate

mechanism

for

approximate

dyna

fineourgrained

theyarigid-body

are.

Here(Photo

thr

“Stone balancing” exploits

powerful

physical

expectations

throu

Monte Carlo

Monte

Carloprobabilities

approach

of bl

stone balance by Heiko neering

Brinkmann).

practice:

People’s

ever

sents

objects’

geo

a

mechanism

a

mechanism

for

represent

Fig. 1. Everyday scenes, activities, and art that evoke

strong physicaloften

inturoundings

have

much

tig

tributions

by

inert

probabilities

t

probabilities

through

these

p

itions. (A) A cluttered workshop

many

nuanced

physical

properFig.that

1. exhibits

Everyday

scenes,

activities,

and

art

that

evoke

strong

physic

relaxed

fault

tolerances,

leadin

thesents

conservation

planning,

action,

reasoning,

and

language

(Fig.

2A).

At

its coa

objects’

ties. (B) A 3D object-based representation

the scene

in A thatthat

can exhibits

support

sents

objects’

geometries

itions. (A) A of

cluttered

workshop

many

nuanced

physical

implicitly

via coar

Fig. 1.

Everyday

scenes,

art

that

evoke

strong

physical

intuan and

object-based

representation

ofover

a 3D

scene—analogous

top

generality

the

degree

of

scenes,

activities,

and

art activities,

that

evoke

strong

physical

intuphysical

inferences

based

on simulation.

(C)

A

precarious

stack

of

dishes

looks

tributions

by

i

ties. (B) A 3D object-based representation

of the

scene

in

A

that

can

s

tributions

by

inertial

tensors

Our

model

runs

t

geometric

models

underlying

computer-aided

design

progr

itions.

(A)

cluttered

workshop

that

exhibits

many

nuanced

physical

properred

workshop

that exhibits

nuanced

physical

properlike

anAaccident

waiting

tomany

happen.

(D)

A child

exercises

his

reasoning

problems.

Our

initial

IPE

mod

the

conservat

physical

inferences

based

onphysical

simulation.

(C)

A

precarious

stack

of

dishe

the

conservation

of

energy

from

the

observe

(Fig.

the

physical

governing

the scene’s dynam

ties. by

(B) stacking

Arepresentation

3D object-based

representation

ofphysical

the

scene

in A that

can

support

ct-based

the

scene

in1B)—and

A that

can

support

blocks. (E)ofJenga

puts

players’

intuitions

toforces

the

test.

(F)

like an accident waiting to happen. (D) A child exercises

his physical

implicitly

viarea

The Intuitive Physicist

•

Physics-engine with random variables

•

Works by sampling model beliefs and

simulating to generate predictions

...

Battaglia et al. 2013

Learning Kinematic and Dynamic Constraints

Learning

Kinematic and

Dynamic

Constraints

Whole-Body

Manipulation

Multi-Object

Planning

Stochastic

Planning

Model

Learning

Dynamic

Constraints

No

Yes

No

Yes

Yes

•

Domain: Planar object manipulation (arbitrary)

•

Objective: Learn compact model

•

Learning approach:

•

Stochastic physics engine model representation

•

MCMC estimator for unknown physical parameters

6 71

0 32 0

016

Model-Based RL Loop

PyMC

Idea:

Use physics-engine as model representation

Technical

contribution:

Formalize model learning problem

and develop inference method

6 71

0 32 -37 4 13 3

30 7 5

(ICML 2014)

f (s, a) ! s0

Physics API is

Learning Target

f (s, a; ) ⇡ s0

Place prior on API

parameters

8s, a

Estimate posterior

from data 0

L( |h) = P (s |s, a; )

body {

mass

inertia

joint.wheel

joint.hinge

| |

P ( |h) / P (h| )P ( )

P (s0 |s, a, )

⇡(s) = arg maxa P (s0 |s, a,

h

Model Parameters

Three classes of parameters

Rigid-body

parameters

Anisotropic friction

constraints

Distance

constraints

⇡(s)

Rigid body parameters

(m, r, µc )

Mass

For computing accelerations

We assume uniform density

ms

i

(wx , wy , w✓ , µx , µy )

Restitution

Friction

For computing perpendicular

contact forces

For computing tangential

contact forces

(ia , ib , ax , ay , bx , by )

Proportional to normalforce (Coulomb friction)

:= (m, r, µc , wx , wy , w✓ , µx , µy , ia , ib , ax , ay , bx , by )

heel

⌧

↵= =I

I

Anisotropic friction

1

⌧

(wx , wy , w✓ , µx , µy )

stance

Pose parameters

Friction Coefficients

(ia ,oniba, body

ax , ay , The

bx , orthogonal

by )

For placing constraint

friction components

l params

i

:= (m, r, µc , wx , wy , w✓ , µx , µy(-0.3,

, ia , ib0,

, ax0,, a0.1,

, by )

y , bx0.8)

"y

wx

wy

Object-frame

wθ

"x

wheel

12)

(wx , wy , w✓ , µx , µy )

13)

(ia , ib , ax , ay , bx , by )

Distance

constraint

distance

All params

14)

=

PositionB

Body indices

PositionA

:=

(m,

r,

µ

,

w

,

w

,

w

,

µ

,

µ

,

i

,

i

,

a

,

i

c

x

y

x y a b x ay , bx , by )

✓

Indicates two bodies to

anchor the constraint

Anchor offset on the

first body

bx

by

body a

ax

ay

body b

anchor a

l

anchor b

Anchor offset on the

second body

Inverted pendulum

)

B3

099

Random ⇡(s)

Paccept (

t|

239

t

✓

q( t )

1 ) = min 1,

q( t 1 )

013

◆

(s0 |s, a)

14 physical parameters per object

0)

i

:= (m, r, µc , wx , wy , w✓ , µx , µy , ia , ib , ax , ay , bx , by )

Scenes have

many objects

φ2

φ3

φ4

φ5

φ1

Φ

Gathering data

Touch or

Grasp Object

Apply

forces

Track

object

Unlocked Caster

Locked Caster

Data = State Trajectory & Applied forces

Compute equivalent

force in body-frame

0

L( |h) = P (s |s, a; )

Learning Parameters

| |

Bayesian Inference:

Φ is a generative model of h

P ( |h) / P (h| )P ( )

0

P

(s

|s,

a,

)

Posterior: will reflect robot’s updated beliefs

after observing h

0

0

⇡(s)

=

arg

max

P

(s

|s,

a,

)V

(s

)

a

Likelihood: should prefer accurate predictions

Prior:

should support only legal values2

s1

6 s2

6

h=6 .

y their

2⇡

w

J =4

respectivet=1

coefficients

2

ẏ 5 + 4 0 5 ⇥ R

˙

(✓)

✓˙

wx

wy (11)

535

536

µ

0

b (wheel)

(2)

body

velocityb Jtofor

body

frame (or

in principle

to 537

joint frame, if we

Eq.rotate

11 tells

us bthat

proposed

model

paf = the xlikelihood

ever include

rotation)0on aµyGaussian centered on the prerameters

are evaluated

538

b

1w

force

(impulse)

back

to

the

world

frame

dicted next state for a generative physics

J = R world

J parameter539

ized

by ˜ (i.e.,

with force

known

and– proposed

(3)

compute

friction

inbgeometry

body frame

just scaledynamthe components

540of the velocity

w

f noise,

= R( fthe

) coefficients

ics).

Due(2)toby

Gaussian

log-likelihood for is obfrom

their respective

541

the

forceby

accumulator

...

tained

summing squared

distances

542

between the observed

ntvalue

as single

expression:

0andb action:

and

the

predicted

state for

eachµstate

x

b trajectories

L2 penalty

on

sample

543

f

=

J 1

02

3 2 30 µ

✓ y

◆

ẋn

0

544

⇣

⌘

µx 0

w

2

x

1 @4 X

40 0to5the

ẏ 5 +

= Rrotate this force

R (impulse)

⇥ world

R ˜ frameA

(4)

back

545

ln0P (h|

(st ˙ f (st , at ; w)y

(12)

µy , ) =

˙

(✓)

✓

546

t=1

w

b

f = R( f )

547

Along with the prior defined in Table 1, this provides the

(5) add in to the force accumulator ...

548

necessary

components

for

a

Metropolis

sampler

for

Eq.

10.

) = ln (Pas

(h|single

)P ( expression:

))

Wheel q(

constraint

549

Learning Parameters

n ⇣

2X 3 2 3

⌘0

X

2

0

✓

ẋ P (p) 0

˜

=

s

f

(s

,

a

;

)

+

t

t

0

tµx generated

w

Posterior

samples

MCMC

5 + 4 0 5 ⇥ R wx

ẏ

f =t=1

R

R 1 @4by

p2

0 µy

wy

˙

˙

(

✓)

✓

✓

◆

MCMC Paccept ( t | t 1 ) = min 1, q( t )

q( t 1 )

q( ) =1 ln (P (h| )P ( ))

n ⇣

⌘2 X

X

0

=

st f (st , at ; ˜ ) +

P (p)

t=1

Paccept (

t| t

p2

✓

q( t )

1 ) = min 1,

q( t 1 )

1

◆

◆

1

A

{wx, wy, 0, 0.1, 0.8}

Online Performance

ICML 2014

−100

TRUE

PBRL

OOLWR

LWR

−300

Reward

Reward

0

Shopping Cart MDP

0

200

Apartment MDP

400

600

800 1000

−40

−80

TRUE

PBRL

OOLWR

−120

Reward

Reward

0

Step

0

200

400

600

Step

Step

800 1000

Learning on Steel Cart

ICRA 2014

Prior Prediction

Updated Beliefs

Gathers Data

Contributions

Formalized learning

from manipulation

data

Allows autonomous

learning on arbitrary

objects

Compact physicsbased model

Very data-efficient vs.

OO-LWR

Successfully

applied to real robot

One-shot learning on

tables and cart

Limitations

Not demonstrated

in real online task

Up Next!

A 97 3

1

Physics-based

Reinforcement Learning

3

2

Navigation Among Movable

Objects with Uncertain Dynamics

Online Learning for Navigation

Among Movable Objects

Navigation Among Movable Objects with Uncertain Dynamics

Objective:

•

Create collision-free path to goal

Challenge:

•

Which objects are relevant?

A Well-Studied Problem

M.#S%lman#and#J.#Kuffner.#Naviga&on)Among)Movable)Obstacles:)Real6&me)

Reasoning)in)Complex)Environments.#In#Journal#of#Humanoid#Robo%cs,#2004.#

M.#S%lman,#K.#Nishiwaki,#S.#Kagami,#and#J.#Kuffner.#Planning)and)execu&ng)

naviga&on)among)movable)obstacles.#IROS#2006.#

M.#S%lman#and#J.#Kuffner.#Planning)among)movable)obstacles)with)ar&ficial)

constraints.#In#WAFR,#2006.#

Jur#van#den#Berg,#Mike#S%lman,#James#Kuffner,#Ming#Lin,#Dinesh#Manocha.#Path#

Planning#Among#Movable#Obstacles:#A#Probabilis%cally#Complete#Approach.##

WAFR#2008.#

D.#Nieuwenhuisen,#A.#van#der#Stappen,#and#M.#Overmars.#An)effec&ve)framework)

for)path)planning)amidst)movable)obstacles.##WAFR,#2008.#

…

Curse of Dimensionality

BOOMDP

State space: configuration

330 of Based

Reinforcement

Learning

(PBRL), which trades flexrobot

and every

object

•

331

ibility for efficiency.

332

333 • 2.

Assuming

resolution n:

NAMO Stuff

334

335

Cr = {x, y, ✓} ! |Cr | = O(n3 )

n

336

Coi = {x, y, ✓} ! |Coi | = O(n3 )

337

Qk

338

=) C = Cr ⇥ i=0 Coi = O(n3(k+1) )

339

⇤

V

(s) = maxa2A Q(s, a)

340

341

⇡ ⇤ (s) = arg maxa2A Q(s, a)

342

• Search is exponential in the

343

References

344 number of objects

345

Bhat, K.S., Twigg, C.D., Hodgins, J.K., Khosla, P.K.,

346

Popović, Z., and Seitz, S.M. Estimating cloth simula347

tion parameters from video. In SIGGRAPH. Eurograph348

ics Association, 2003.

n

Model Uncertainty

•

Low-level uncertainty: how does this object move?

•

High-level uncertainty: can I use this object?

NAMO MDP

(WAFR 2012, ICRA 2013

•

Natural state abstraction: free-space regions

•

Corresponding action abstraction: clear opening to

neighboring free-space

331

332

333

334

335

336

337

338

339

340

341

342

343

344

345

346

347

348

349

350

351

352

353

354

355

356

357

358

359

ibility for efficiency.

R = Rewards

(5)

Transition Model def:

Stam, J. Stable 3fluids.

a

a

a

P11

P12

. . . P1n

a conference

a 7 on C

6 P anual

P

.

.

.

P

22

2n 7

6 21

T (s, a, s0 ) = P (s0 |s, a) = 6 .. techniques.

ACM

..

..

.. 7 Pres

4 .

. 5

.

.

P a1999.

Pa ... Pa

2

Solving a NAMO-MDP

LowLevel

HighLevel

2. NAMO Stuff

Cr = {x, y, ✓} ! |Cr | (6)

= O(n3 )

control: a survey. Cog

Coi = Dynamics

{x, y, ✓} ! |Coi | = O(n3 )

Planningn1 n2

Transition Model def (no actions):

Qk

2

=) C = Cr ⇥ i=0 Coi = O(n3(k+1) )

P11 P12 . . .

Physics

⇤

(7)

V (s) = maxa2A Q(s, a)

⇡ ⇤ (s) = arg maxa2A Q(s, a)

0

P1n

. . . P2n

..

..

.

.

. . . Pnn

nn

3

Monte-Carlo

Search 77

6 Tree

6 P21 P22

0

0

T (s, s ) = P (s |s) = 6

4

..

..

.

.

Pn1 Pn2

Bellman equation (no actions)

7

5

P

X

2 (s = j|s = i) =

3

(8)

V (s) = R(s) +

P (s0 |s)V (s0 )

p1,1 p1,2 . . . p1,j . . .

s0

6p2,1 p2,2 . . . p2,j . . .7

6

7 equation

Bellman

6 ..

..

..

"

#

..

.. 7

6 .

7

X

.

.7 .

.

.

6

(9)

V (s) = max R(s, a) +

P (s0 |s, a)V (s0 )

6 pi,1 pi,2 . . . pi,j . . .7

a

s0

4

5

..

..

.. Bellman

. .Matrix

..

Stochastic

Iteration

as update rule, forValue

t steps to go

(value iteration)

.

.

.

.

.

"

⇤

(10)

Vt+1

(s)

P

(f

=

j|f

=

i)

=

t+1

t

2

3

f1,1 f1,2 ... f1,j

6 f2,1 f2,2 ... f2,j 7

6

7

6 ..

7

..

..

4 .

5

.

.

fi,1 fi,2 ... fi,j

P

V (s) := s0 P⇡(s) (s, s0 ) R⇡(s) (s, s0 ) + V (s0 )

References

max R(s, a) +

a

1

F1

F3

X

s0

P (s0 |s, a)Vt⇤ (s0 )

F2

F4

#

Scalability

•

Algorithm typically

linear in |O|

•

Adjacency graph

directly controls the

complexity of ValueIteration

F5

F4

F6

F7

Only 8 States!

F3

F2

F1

F0

Highlighted Behavior

•

•

Robot adapts to stationary object:

•

Initially selects table

•

Updates model and switches

to couch

Limitations:

•

Manipulation planning in

joint-space (KD-RRT)

•

Model “learning”:

thresholded movable flag

Contributions

Value-based

Planning

Combines reward and

model uncertainty

Compact high-level

dynamics

Solves object relevance

problem

Limitations

Stationary Belief

Distributions

No online model

learning

Implemented in

Simulation

Doesn’t use realistic

control stack

A 97 3

1

Physics-based

Reinforcement Learning

3

2

Navigation Among Movable

Objects with Uncertain Dynamics

Online Learning for Navigation

Among Movable Objects

Platform

GoToFreeSpace(Oi,Fj)

GetGraspPoint

NavigateToPoint

Grasp

ClearObstacle

(vobj, k)

} System Control

Body Controller

(pbase) x k

(pobj) x k

Base Controller

Manipulation Controller

(vl,vr)

Wheels

} Motor Control

(vm) x 16

IMU

Vision

FT

Modules

} Sensorimotor

Sensory

Motor

Whole-Body Manipulation

Free Parameter: vo (3-dim)

vo

vo

Desired Object

Velocity

vm

vb

Manipulation Controller

Robot Body

Trajectory

vb

vm

Grasp Sampling

Free Parameter: gθ (1-dim)

Model-specific distribution

•

Sample grasp angle:

gθ~ P(θ|Φ)

•

Compute projected

boundary point

•

Reject if not reachable

Manipulation Policies

P(%|Φ)

Planner operates over vo and gθ

Linear Velocity

(vx,vy)

Static

None

Unconstrained ±vx and ±vy

Angular Velocity

(vθ)

None

N/A

±vθ at

object center

Uniform

Anisotropic

±vu along unconstrained axis

±vθ at

(u = argminx,y($x,$y).

constraint anchor

Fixed-Point

±vθ at

constraint anchor

None

Grasp Points

(gθ)

Faces perpendicular to

unconstrained axis.

Top and bottom faces

opposite to constraint

Example

N/A

NAMO with Static Constraint

NAMO with Non-Static Constraint

Learning Via Contact

Summary and Contributions

Physics Engine +

Reinforcement Learning + ϵ

=

Adaptive Manipulation

1. Physics-Based Reinforcement Learning

•

Data-efficient model learning for multi-body dynamics

2. NAMO-MDP

•

Fast decision-theoretic solution for NAMO with uncertain dynamics

3. Online Learning for NAMO

•

First NAMO planner which adapts to novel objects

•

First collision-learning result in mobile manipulation

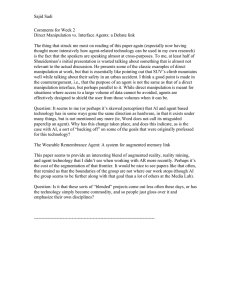

Publications

•

SCHOLZ, J. and STILMAN, M., “Combining motion planning and optimization for flexible robot

manipulation,” in Humanoids, 2010.

•

SCHOLZ, J., CHITTA, S., MARTHI, B., and LIKHACHEV, M., “Cart pushing with a mobile

manipulation system: Towards navigation with moveable objects,” in ICRA, 2011.

•

LEVIHN, M., SCHOLZ, J., and STILMAN, M., “Planning with movable obstacles in continuous

environments with uncertain dynamics,” in ICRA, 2013.

•

LEVIHN, M., SCHOLZ, J., and STILMAN, M., “Hierarchical decision theoretic planning for

navigation among movable obstacles,” in WAFR, 2012.

•

SCHOLZ, J., LEVIHN, M., ISBELL, C., “What Does Physics Bias: A Comparison of Model Priors

for Robot Manipulation,” in RLDM, 2013.

•

SCHOLZ, J., LEVIHN, M., ISBELL, C., and WINGATE, D., “A Physics-Based Model Prior for

Object-Oriented MDPs,” in ICML, 2014.

•

SCHOLZ, J., LEVIHN, M., ISBELL, C. L., CHRISTENSEN, H., and STILMAN, M., “Learning nonholonomic object models for mobile manipulation,” in ICRA, 2015.

Special thanks to my

committee, and Mike Stilman