Dirichlet Processes and Mixture Models

An interactive tutorial: Part 2

Pfunk Meeting 2

Fall ’11

*some content adapted from El-Arini 2008 & Teh 2007

Demo Code: http://www.cc.gatech.edu/~jscholz6/resources/code/DP_Tutorial/

J. Scholz (RIM@GT)

Friday, September 2, 2011

09/02/2011


Motivation: Modeling Observed Data (Typical Bayesian Approach)

• Say we observe x1,...,xn, with xi ~ F

• A conventional Bayesian would place a prior over F from some functional family, and use data to compute the posterior

• Parametric models suffer from over/under-fitting when there’s a mismatch between the complexity of the model and the data

• Traditional approaches (cross validation, model averaging) involve user-defined criteria that are error-prone in practice*

* e.g. thresholding likelihood functions and stuff

Topics

• Review of Dirichlet Process Concepts &

Metaphors from last week

• Running example: Density estimation with

mixture of gaussians

• Learning Gaussian Mixtures with EM

• Learning (infinite) Gaussian Mixtures with

MCMC (Gibbs Sampling)


Some Process Metaphors

• We talked about 3 related processes:

• Polya-urn

  • Involves drawing and replacing balls from an urn

• Chinese Restaurant

  • Involves customers sitting at tables in proportion to their popularity

• Stick-breaking

  • Involves breaking off pieces of a stick of unit length

Chinese Restaurant Process (inset from Yee Whye Teh, DP and HDP Tutorial, Mar 1, 2007 / CUED)

Generating from the CRP:

First customer sits at the first table.

Customer n sits at:

  Table k with probability $n_k / (\alpha + n - 1)$, where $n_k$ is the number of customers at table k.

  A new table K + 1 with probability $\alpha / (\alpha + n - 1)$.

Customers ⇔ integers, tables ⇔ clusters.

The CRP exhibits the clustering property of the DP.

[Figure: customers 1 to 9 seated around tables]

Stick-breaking construction (inset from Teh 2007, partially cropped on the slide)

What does G ∼ DP(α, H) look like? Draws are discrete with probability one, so:

$G = \sum_{k=1}^{\infty} \pi_k \delta_{\theta_k^*}$

The construction shows that G ∼ DP(α, H) if π = (π1, π2, . . .) is distributed as above (the definition itself is cropped out of the slide).

[Figure: stick pieces π(1) to π(6)]
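The CRP seating rule above can be sketched in a few lines. This is a minimal illustration, not the demo code linked from the title slide; the function name `sample_crp` and the choice of α = 1 are assumptions made here.

```python
import random

def sample_crp(n_customers, alpha, rng):
    """Seat customers one at a time; return each customer's table and the table sizes."""
    counts = []    # counts[k] = number of customers currently at table k
    seating = []   # seating[n] = table chosen by customer n (0-indexed)
    for _ in range(n_customers):
        # Existing table k has weight n_k, a new table has weight alpha.
        # The shared normalizer (alpha + n - 1) is handled by rng.choices.
        weights = counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(counts):
            counts.append(1)      # open a new table
        else:
            counts[k] += 1        # join an existing table
        seating.append(k)
    return seating, counts

rng = random.Random(0)
seating, counts = sample_crp(100, alpha=1.0, rng=rng)
print(len(counts), "tables with sizes", counts)
```

Rerunning with a larger alpha should tend to open more tables, which is the "rich get richer" clustering behavior in action.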

All these share the property of carving up our sample space into a discrete distribution
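To see the "carving up" concretely, here is a small sketch of stick-breaking. It assumes the standard construction in which each break takes a Beta(1, α) fraction of the remaining stick; that definition is cropped out of the slide, so treat it as an assumption, along with the name `stick_breaking` and the truncation at a fixed number of pieces.

```python
import random

def stick_breaking(alpha, n_sticks, rng):
    """Break a unit stick n_sticks times; return the pieces and the leftover length."""
    weights = []
    remaining = 1.0
    for _ in range(n_sticks):
        frac = rng.betavariate(1.0, alpha)   # assumed: Beta(1, alpha) break fraction
        weights.append(remaining * frac)     # piece broken off this round
        remaining *= 1.0 - frac              # what is left for later rounds
    return weights, remaining

rng = random.Random(0)
weights, leftover = stick_breaking(alpha=2.0, n_sticks=20, rng=rng)
print("largest pieces:", sorted(weights, reverse=True)[:5])
print("unassigned mass:", leftover)
```

The pieces play the role of the weights π_k; pairing each with an independent draw from H would give a truncated approximation to G.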

Characterizing the Process

• We talked about how to describe their dynamics, or “average behavior”

• All are infinite-dimensional generalizations of the Dirichlet distribution with params (αH)

• “Any finite partition (marginal) of these infinite processes will be Dirichlet-distributed”

Why “Dirichlet”?

Tying it all together

• Assuming a process exists with Dirichlet marginals, we can leverage the properties of Dirichlet-multinomial conjugacy for inference

• Recall: Can obtain the Dirichlet posterior given some observed data β by simply adding α and β (vectors)

If $X \sim \mathrm{Dir}(\alpha)$ and $\beta \mid X = (\beta_1, \ldots, \beta_K) \sim \mathrm{Mult}(X)$, then $X \mid \alpha, \beta \sim \mathrm{Dir}(\alpha + \beta)$.

* where $\beta_i$ is the number of occurrences of i in a sample of n points from the discrete distribution on 1, ..., K defined by X
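The "just add α and β" update is short enough to write out. A minimal sketch, assuming categories are labeled 0, ..., K-1 rather than 1, ..., K; the helper name `dirichlet_posterior` and the toy data are made up for illustration.

```python
from collections import Counter

def dirichlet_posterior(alpha, observations):
    """Return the posterior Dirichlet parameters alpha + beta (beta = category counts)."""
    counts = Counter(observations)
    return [a + counts.get(i, 0) for i, a in enumerate(alpha)]

alpha = [1.0, 1.0, 1.0]        # symmetric prior over K = 3 categories
observations = [0, 2, 2, 1, 2]  # a sample of n = 5 points
posterior = dirichlet_posterior(alpha, observations)
posterior_mean = [a / sum(posterior) for a in posterior]
print("posterior parameters:", posterior)
print("posterior mean of X:", posterior_mean)
```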

Why “Dirichlet”?

Tying it all together

• So, just like with the Dirichlet distribution, the DP is closed under Bayesian updating (multiplication)

• Can use this property to derive an expression for parameters given data:

$\theta_n \mid \theta_{1:n-1} \sim \frac{\sum_{i=1}^{n-1} \delta_{\theta_i} + \alpha H}{n - 1 + \alpha}$

This is the Polya-Urn (the urn scheme produces a sequence θ1, θ2, . . . with these conditionals; inset from Teh 2007)

A closer look at the DP posterior

$\theta_n \mid \theta_{1:n-1} \sim \frac{\sum_{i=1}^{n-1} \delta_{\theta_i} + \alpha H}{n - 1 + \alpha}$

• Weird equation: combines discrete (counts) with continuous (H)

• Equation is of the form (p)f(Ω) + (1-p)g(Ω)

• Implies that proportion p of density is associated with f, so we can split the task in half:

  • first flip a bern(p) coin. If heads, draw from f, if tails, draw from g

• for polya urn, gives us either a sample from existing balls (f), or a new color (g)

Polya’s urn scheme (inset from Teh 2007) produces a sequence θ1, θ2, . . . with the conditionals above. Imagine picking balls of different colors from an urn:

  Start with no balls in the urn.

  With probability ∝ α, draw θn ∼ H, and add a ball of that color into the urn.

  With probability ∝ n − 1, pick a ball at random from the urn, record θn to be its color, return the ball into the urn and place a second ball of same color into the urn.
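The coin-flip reading of the urn equation can be sketched directly. This is an illustration under assumptions: the base distribution H is taken to be a standard normal purely for the example, and `polya_urn` and `base_sampler` are hypothetical names.

```python
import random

def polya_urn(n_draws, alpha, base_sampler, rng):
    """Draw theta_1..theta_n by flipping a bern(p) coin at every step."""
    draws = []
    for n in range(1, n_draws + 1):
        # p is the share of mass sitting on balls already in the urn (the "f" part).
        p = (n - 1) / (n - 1 + alpha)
        if rng.random() < p:
            theta = rng.choice(draws)    # heads: repeat the color of an existing ball
        else:
            theta = base_sampler(rng)    # tails: draw a brand new color from H
        draws.append(theta)
    return draws

rng = random.Random(1)
draws = polya_urn(50, alpha=2.0, base_sampler=lambda r: r.gauss(0.0, 1.0), rng=rng)
print(len(set(draws)), "distinct colors among", len(draws), "draws")
```

Because H is continuous here, every tails flip gives a new color, so the number of distinct values counts how many times the "g" branch fired.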

DP Summary

• Distributions drawn from a DP are discrete, but cannot be described using finite parameters (hence “non-parametric”)

• Why “Dirichlet”? Because marginals and posteriors match the Dirichlet distribution

  • This is where the urn scheme comes from, and (more importantly) provides a way to do Gibbs sampling

• A more esoteric tutorial would explain that the DP is the “De Finetti distribution underlying the polya urn”, which is what makes the samples exchangeable (i.i.d. given the draw G) under a DP prior, and therefore permutable in a sampler, making our lives much easier

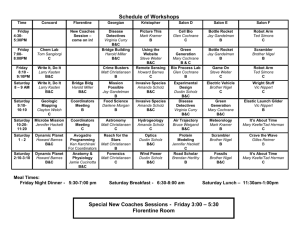

Topics

• Review of Dirichlet Process Concepts &

Metaphors

• Running example: Density estimation with

mixture of gaussians

• Learning Gaussian Mixtures with EM

• Learning (infinite) Gaussian Mixtures with

MCMC (Gibbs Sampling)


Running Example: Gaussian Mixtures

• We are given a bunch of data points and told it was generated by a mixture of gaussians

• Unfortunately, no one said how many gaussians produced the data

Running Example: Gaussian Mixtures

• Could it be this?

• Or perhaps this?

What to do?

• We can guess the number of components,

run Expectation Maximization (EM) for

Gaussian Mixture Models, look at the

results, and then try again...

• We can run hierarchical agglomerative

clustering, and cut the tree at a visually

appealing level...


• Really, we want to cluster the data in a

statistically principled manner, without

resorting to hacks...

>> for a preview, run demo 5.2


Real examples

• Brain Imaging: Model an unknown number

of spatial activation patterns in fMRI images

[Kim and Smyth, NIPS 2006]

• Topic Modeling: Model an unknown number

of topics across several corpora of

documents [Teh et al. 2006]

• Filtering and planning in unknown state spaces (iHMM [Beal et al. 2003], iPOMDP [Doshi et al. 2009])

Topics

• Review of Dirichlet Process Concepts &

Metaphors

• Running example: Density estimation with

mixture of gaussians

• Learning Gaussian Mixtures with EM

• Learning (infinite) Gaussian Mixtures with

MCMC (Gibbs Sampling)


Gaussian Mixture Modeling

• Gives a nice general way to fit densities to multi-modal data

• Defined as a weighted sum of gaussian densities:

$p(x \mid \theta) = \sum_{i=1}^{K} \pi_i \, G(x \mid \mu_i, \Sigma_i)$

* Where θ is short for all parameters in the mixture (π, μ, Σ), and G is short for a gaussian distribution with mean μ and (co)variance Σ

try it! >> Prelim 3.1
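As a quick sanity check on the mixture formula, here is a minimal 1D sketch: it evaluates p(x | θ) as a weighted sum of gaussian PDFs and confirms numerically that the result integrates to roughly one. The parameter values and function names are invented for the example.

```python
import math

def gaussian_pdf(x, mu, sigma2):
    """Density of a 1D gaussian with mean mu and variance sigma2."""
    return math.exp(-0.5 * (x - mu) ** 2 / sigma2) / math.sqrt(2.0 * math.pi * sigma2)

def gmm_pdf(x, weights, means, variances):
    """p(x | theta) = sum_i pi_i * G(x | mu_i, sigma_i^2)."""
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances))

weights, means, variances = [0.3, 0.7], [-2.0, 1.0], [0.5, 1.5]

# Crude Riemann sum over [-15, 15]; a valid density should integrate to about 1.
step = 0.01
area = sum(gmm_pdf(-15.0 + i * step, weights, means, variances) * step for i in range(3000))
print("approximate area under the mixture:", area)
```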

Uses for GMM

• Generic density estimation

  • Aims to fit parameters to bag of data as well as possible

  • E.g. Modeling stock or commodity prices as generated by hidden factors

• Clustering!

  • Aims to uncover the latent classes of the generating model, and where they live

  • E.g. Discovering goal regions to treat as subtasks for an RL agent

How to fit to data: EM

Given a dataset of the form: X = x1, x2, ..., xn

How do we infer πi, μi, σi ???

try it! >> Demo 5.1

EM Overview

• First let's associate a new latent variable z with each data point that indicates which component the data point came from

• Then for however many components we have, assign a mean and variance to the component:

[Graphical model with nodes π, Zi, Xi, θ]

  xi: ith data point

  π: overall mixing proportions

  zi: ith component indicator

  θ: component parameters

Too many unknowns!

EM Overview

• Chicken and Egg Dilemma:

  • If we knew the proper component assignments, it'd be trivial to work out μ and σ (just compute sample mean and variance, or do bayesian thing if we have prior beliefs)

  • If we knew μ and σ, it'd be trivial to work out the component assignments (just do max likelihood thing)

• Solution:

  • Iteratively switch between these 2 things and wait until the log-likelihood plateaus...



EM Algorithm

• Treat component assignments as latent variables, and switch between:

• Estimating indicators by conditioning on the component parameters (E step)

$\bar{z}_i \propto G(x_i \mid \mu, \Sigma)$

Just evaluate each gaussian PDF at xi and normalize

• Then go back and recompute the component parameters (μ and σ) given the current assignments (M step)

Just update component parameters by taking the weighted mean and variance

Background excerpt shown on the slide (equation numbers and the citation [5] belong to the excerpted paper):

The GMM likelihood, assuming independence between the training vectors X = {x1, . . . , xT}, is

$p(X \mid \lambda) = \prod_{t=1}^{T} p(x_t \mid \lambda)$. (4)

(The independence assumption is often incorrect but needed to make the problem tractable.)

This expression is a non-linear function of the parameters λ and direct maximization is not possible. However, ML parameter estimates can be obtained iteratively using a special case of the expectation-maximization (EM) algorithm [5]. The basic idea of the EM algorithm is, beginning with an initial model λ, to estimate a new model $\bar{\lambda}$. The new model then becomes the initial model for the next iteration and the process is repeated until some convergence threshold is reached. The initial model is typically derived by using some form of binary VQ estimation. On each EM iteration, the following re-estimation formulas are used which guarantee a monotonic increase in the model’s likelihood value:

Mixture weights: $\bar{w}_i = \frac{1}{T} \sum_{t=1}^{T} \Pr(i \mid x_t, \lambda)$. (5)

Means: $\bar{\mu}_i = \frac{\sum_{t=1}^{T} \Pr(i \mid x_t, \lambda)\, x_t}{\sum_{t=1}^{T} \Pr(i \mid x_t, \lambda)}$. (6)

Variances (diagonal covariance): $\bar{\sigma}_i^2 = \frac{\sum_{t=1}^{T} \Pr(i \mid x_t, \lambda)\, x_t^2}{\sum_{t=1}^{T} \Pr(i \mid x_t, \lambda)} - \bar{\mu}_i^2$, (7)

where $\sigma_i^2$, $x_t$, and $\mu_i$ refer to arbitrary elements of the vectors $\sigma_i^2$, $x_t$, and $\mu_i$, respectively.
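The E and M steps above fit in a short loop for 1D data. This is a rough sketch, not the Demo 5.1 code: it assumes random initialization from the data (rather than the VQ initialization the excerpt mentions), a fixed iteration count instead of a convergence threshold, and a small variance floor added here to avoid degenerate components.

```python
import math
import random

def gaussian_pdf(x, mu, var):
    return math.exp(-0.5 * (x - mu) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def em_gmm_1d(xs, k, n_iter, rng):
    """Fit a k-component 1D GMM; returns weights, means, variances, log-likelihood trace."""
    means = rng.sample(xs, k)       # assumed init: k random data points
    variances = [1.0] * k
    weights = [1.0 / k] * k
    log_liks = []
    for _ in range(n_iter):
        # E step: evaluate each weighted gaussian PDF at x and normalize.
        resp, log_lik = [], 0.0
        for x in xs:
            joint = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(joint)
            log_lik += math.log(total)
            resp.append([j / total for j in joint])
        log_liks.append(log_lik)
        # M step: weighted mean and variance, as in formulas (5) to (7).
        for i in range(k):
            r_i = sum(r[i] for r in resp)
            weights[i] = r_i / len(xs)
            means[i] = sum(r[i] * x for r, x in zip(resp, xs)) / r_i
            second_moment = sum(r[i] * x * x for r, x in zip(resp, xs)) / r_i
            variances[i] = max(second_moment - means[i] ** 2, 1e-3)  # floor is an added safeguard
    return weights, means, variances, log_liks

rng = random.Random(0)
xs = [rng.gauss(-3.0, 1.0) for _ in range(100)] + [rng.gauss(3.0, 1.0) for _ in range(100)]
weights, means, variances, log_liks = em_gmm_1d(xs, k=2, n_iter=50, rng=rng)
print("weights:", weights)
print("means:", means)
print("variances:", variances)
```

Plotting `log_liks` against the iteration number is a handy way to watch the plateau mentioned on the overview slide.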

EM Summary

• Local hill-climbing of (log) likelihood function

• Uses point-estimates of z, μ, and σ (ML)

• Searches space of: assignment (z) × μ × σ

Relation to DPMM?

• Why such detail?

  • Because it anticipates our approach to solving the “how many components?” problem

  • The Gibbs sampler we’ll use may look different from this hill climbing algorithm, but searches the same space (plus # of components k too)!

Topics

• Review of Dirichlet Process Concepts &

Metaphors

• Running example: Density estimation with

mixture of gaussians

• Learning Gaussian Mixtures with EM

• Learning (infinite) Gaussian Mixtures with

MCMC (Gibbs Sampling)


Questions you should be thinking

about at this point

• Is it even possible to reframe the density

problem such that this DP stuff is relevant?

• If so, what does inference look like?

• Will our answer be different?


1) Can we combine a GMM with DP?

• Yes! Just associate a component with each particle in the DP

• Generative approach:

  1. Generate components by running the DP

  2. Draw the parameters for each gaussian from H

• H now gives a 2D output: µ and σ²

try it! >> Prelim 3.2
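The two generative steps can be sketched by running a CRP and drawing (µ, σ²) from H whenever a new component appears. Everything specific here is an assumption for illustration: H is taken to be µ ∼ N(0, 5²) with σ² drawn as the reciprocal of a Gamma(3, 1) variate, and `sample_dpmm` is a hypothetical name, not the Prelim 3.2 code.

```python
import random

def sample_dpmm(n_points, alpha, rng):
    """Generate data from a DP mixture of 1D gaussians via the CRP representation."""
    counts, params, xs, zs = [], [], [], []
    for _ in range(n_points):
        # Step 1: pick a component with CRP weights (n_k for existing, alpha for new).
        k = rng.choices(range(len(counts) + 1), weights=counts + [alpha])[0]
        if k == len(counts):
            # Step 2: a new component gets its parameters from the assumed base distribution H.
            mu = rng.gauss(0.0, 5.0)
            sigma2 = 1.0 / rng.gammavariate(3.0, 1.0)
            params.append((mu, sigma2))
            counts.append(0)
        counts[k] += 1
        mu, sigma2 = params[k]
        xs.append(rng.gauss(mu, sigma2 ** 0.5))   # emit a data point from component k
        zs.append(k)
    return xs, zs, params

rng = random.Random(0)
xs, zs, params = sample_dpmm(200, alpha=1.0, rng=rng)
print(len(params), "components generated")
```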

The Dirichlet Process Mixture Model

• A DPMM is the same as a regular GMM, except we draw the component parameters from a DP

• This model lets us do joint inference over not only µ and σ², but of α itself (and therefore k)

>> Demo 5.2 (later)

Dirichlet Process Mixture Models (inset from Yee Whye Teh, DP and HDP Tutorial, Mar 1, 2007 / CUED)

We model a data set x1, . . . , xn using the following model:

$x_i \sim F(\theta_i)$ for i = 1, . . . , n

$\theta_i \sim G$

$G \sim \mathrm{DP}(\alpha, H)$

Each θi is a latent parameter modelling xi, while G is the unknown distribution over parameters modelled using a DP.

This is the basic DP mixture model.

[Graphical model with nodes H, α, G, θi, xi]


DPMM: controlling the density

• What happens when we play with our parameters?

  • alpha: bigger = more components

  • # iterations: more = more components

  • # samples: more = more filled out histograms

[Figure panels: alpha=1, n_iter=100, n_samp=35; alpha=1, n_iter=1000, n_samp=35; alpha=1, n_iter=1000, n_samp=350]

How do we use this?

• Use DP as prior on the components

• Critical part: condition on data

  • DP state is just a collection of components, so we can ask “how likely is our data given our parameters?”

  • This is a simple gaussian likelihood calculation

• Therefore we have conditional probabilities required for Gibbs sampling

Gibbs Sampling

• Gibbs theory says that when this chain mixes we effectively draw samples from the posterior

• Posterior over what?

  • Over anything we're sampling over, which is anything that we care about that we can derive a conditional expression for (z, μ, σ, α)

Gibbs Sampling, the quick and dirty version

•

Gibbs sampling: a simulation scheme for sampling

from complicated distributions that we can’t

sample from analytically

•

Basic idea: do a random walk over parameter

space by resampling one variable at a time from

its conditional distribution, given the rest

•

Over time, the simulation spends time in states

in proportion to their posterior probability

•

Implies we have to derive likelihood

expressions for each parameter
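A toy example may help before tackling the DPMM. The sketch below runs Gibbs sampling on a standard bivariate normal with correlation ρ, a target chosen here only because both conditionals are known in closed form (x | y ∼ N(ρy, 1 − ρ²), and symmetrically for y). It is not part of the tutorial's demos.

```python
import random

def gibbs_bivariate_normal(rho, n_samples, burn_in, rng):
    """Resample x and y in turn from their conditionals; keep samples after burn-in."""
    x, y = 0.0, 0.0
    sd = (1.0 - rho * rho) ** 0.5
    samples = []
    for t in range(burn_in + n_samples):
        x = rng.gauss(rho * y, sd)   # x | y
        y = rng.gauss(rho * x, sd)   # y | x
        if t >= burn_in:
            samples.append((x, y))
    return samples

rng = random.Random(0)
samples = gibbs_bivariate_normal(rho=0.8, n_samples=5000, burn_in=500, rng=rng)

# Empirical correlation of the chain; it should land near rho if the chain has mixed.
n = len(samples)
mean_x = sum(x for x, _ in samples) / n
mean_y = sum(y for _, y in samples) / n
cov = sum((x - mean_x) * (y - mean_y) for x, y in samples) / n
var_x = sum((x - mean_x) ** 2 for x, _ in samples) / n
var_y = sum((y - mean_y) ** 2 for _, y in samples) / n
corr = cov / (var_x * var_y) ** 0.5
print("empirical correlation:", corr)
```

The DPMM sampler follows the same pattern, just with more variables (z, μ, σ, α) taking turns.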



The need for priors

• Soon we’re going to try to compute µ, σ², and α from data, and we don’t want to overfit

• We’ll put priors on both µ and σ²:

  • What’s the prior distribution for the mean of a gaussian? Another gaussian! [N(µ0, σ0²)]

  • What’s the prior distribution for the variance of a gaussian? Inverse-Gamma(α, β), or equivalently Gamma(α, β) on the precision 1/σ²

  • Are they independent? No! (why?)

• What would happen without priors on μ and σ? ....It’d be jumpy!

2

osterior 4.

we1get

inverse

Gamma:

finition

/ σanother

is usually

called

the precision and is denoted by τ

� to put a �

�Now, we1want

prior on µ and σ 2 together. We could simply mu

β

+previous

(xitwo

− µ)

−(α+ n

−1

2

2 combination

2 mean

)

in

the

sections,

implicitly assuming µ and σ 2 are indepen

2

posterior mean isPusually

a

convex

of

the

prior

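A posterior expression for the mean

• Updating the mean:

Lemma 5. Assume $x \mid \mu \sim N(\mu, \sigma^2)$ and $\mu \sim N(\mu_0, \sigma_0^2)$. Then:

$$\mu \mid x \sim N\left(\frac{\sigma_0^2}{\sigma^2+\sigma_0^2}\,x + \frac{\sigma^2}{\sigma^2+\sigma_0^2}\,\mu_0,\ \left(\frac{1}{\sigma^2}+\frac{1}{\sigma_0^2}\right)^{-1}\right)$$

Notice it's a weighted combination of the observed value and the prior mean. The posterior precision is, in this case, the sum of the prior precision and the data precision:

$$\tau_{post} = \tau_{prior} + \tau_{data}$$

• Posterior for multiple measurements ($n \ge 1$):

We could adapt our previous derivation, but that would be tedious since we would have to use the multivariate version of Lemma 2. Instead we reduce the problem to the univariate case, with the sample mean $\bar{x} = (\sum_i x_i)/n$ (the MLE) as the new variable:

$$x_i \mid \mu \sim N(\mu, \sigma^2)\ \text{i.i.d.} \;\Rightarrow\; \bar{x} \mid \mu \sim N\left(\mu, \frac{\sigma^2}{n}\right) \qquad (10)$$

$$\begin{aligned}
P(x_1, x_2, \dots, x_n \mid \mu) &\propto_\mu \exp\left(-\frac{1}{2\sigma^2}\sum_i (x_i-\mu)^2\right) \\
&\propto_\mu \exp\left(-\frac{1}{2\sigma^2}\left(\sum_i x_i^2 - 2\mu\sum_i x_i + n\mu^2\right)\right) \\
&\propto_\mu \exp\left(-\frac{n}{2\sigma^2}\left(-2\mu\bar{x} + \mu^2\right)\right) \\
&\propto_\mu \exp\left(-\frac{n}{2\sigma^2}(\bar{x}-\mu)^2\right) \\
&\propto P(\bar{x} \mid \mu) \qquad (11)
\end{aligned}$$

• Updating the variance:

Lemma 7. If $x_i \mid \mu, \sigma^2 \sim N(\mu, \sigma^2)$ i.i.d. and $\sigma^2 \sim IG(\alpha, \beta)$, with $P(\sigma^2 \mid \alpha, \beta) \propto (\sigma^2)^{-\alpha-1}\exp(-\beta/\sigma^2)$, then the posterior has the same form:

$$P(\sigma^2 \mid x, \alpha, \beta) \propto (\sigma^2)^{-\alpha_{post}-1}\exp\left(-\frac{\beta_{post}}{\sigma^2}\right) \qquad (14)$$

$$\sigma^2 \mid x_1, x_2, \dots, x_n \sim IG\left(\alpha + \frac{n}{2},\ \beta + \frac{1}{2}\sum_i (x_i-\mu)^2\right)$$

If we parametrize in terms of precisions, the conjugate prior is a Gamma distribution:

$$\tau \mid \alpha, \beta \sim Ga(\alpha, \beta), \qquad P(\tau \mid \alpha, \beta) = \frac{\beta^\alpha}{\Gamma(\alpha)}\,\tau^{\alpha-1}\exp(-\tau\beta) \qquad (15)$$

$$P(\tau \mid x, \alpha, \beta) \propto \tau^{\alpha+\frac{n}{2}-1}\exp\left(-\tau\left(\beta + \frac{1}{2}\sum_i (x_i-\mu)^2\right)\right) \qquad (16)$$

Lemma 8. If $x_i \mid \mu, \tau \sim N(\mu, \tau)$ i.i.d. and $\tau \sim Ga(\alpha, \beta)$. Then:

$$\tau \mid x_1, x_2, \dots, x_n \sim Ga\left(\alpha + \frac{n}{2},\ \beta + \frac{1}{2}\sum_i (x_i-\mu)^2\right)$$

9. Should we prefer working with variances or precisions? We should prefer both:
• Variances add when we marginalize
• Precisions add when we condition

Independent priors on $\mu$ and $\sigma^2$ would not give a conjugate prior: according to the graphical model in Figure 1, $\mu$ and $\sigma^2$ are, in fact, dependent once we condition, and this should be expressed by a conjugate prior.

[Figure 1: graphical model with nodes $\alpha$, $\beta$, $\mu_0$, $\sigma_0^2$, $\mu$, $\sigma^2$, $x$]

We will use the following prior distribution which, as we will show, is conjugate (the second argument of $N$ is a precision here):

$$x_i \mid \mu, \tau \sim N(\mu, \tau)\ \text{i.i.d.}, \qquad \mu \mid \tau \sim N(\mu_0, n_0\tau), \qquad \tau \sim Ga(\alpha, \beta)$$

3.1 Posterior

First look at $\mu \mid x, \tau$. This is the simpler part, as we can reuse the mean update with precisions $n\tau$ and $n_0\tau$:

$$\mu \mid x, \tau \sim N\left(\frac{n\tau}{n\tau + n_0\tau}\,\bar{x} + \frac{n_0\tau}{n\tau + n_0\tau}\,\mu_0,\ n\tau + n_0\tau\right)$$

Next, look at $\tau \mid x$. We get this by expressing the joint density:

$$\begin{aligned}
P(\tau, \mu \mid x) &\propto P(\tau)\cdot P(\mu \mid \tau)\cdot P(x \mid \tau, \mu) \\
&\propto \tau^{\alpha-1}e^{-\beta\tau}\ \tau^{1/2}\exp\left(-\frac{n_0\tau}{2}(\mu-\mu_0)^2\right)\ \tau^{n/2}\exp\left(-\frac{\tau}{2}\sum_i (x_i-\mu)^2\right)
\end{aligned}$$

try it! >> Prelim 4.1-3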
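The mean and variance updates on this slide can be sketched in a few lines of plain Python. This is a minimal illustration, not the tutorial's demo code: the function names `update_mean`, `update_variance` and `sample_inverse_gamma` are hypothetical, and the multi-measurement mean update is obtained by substituting $\bar{x}$ and $\sigma^2/n$ (eq. 10) into the single-measurement lemma.

```python
import random

def update_mean(xs, sigma2, mu0, sigma0_2):
    """Posterior (mean, variance) of mu given data xs with known variance sigma2.

    Prior: mu ~ N(mu0, sigma0_2). Hypothetical helper for illustration.
    """
    n = len(xs)
    xbar = sum(xs) / n
    data_var = sigma2 / n                      # variance of the sample mean, eq. (10)
    w = sigma0_2 / (data_var + sigma0_2)       # weight placed on the data
    post_mean = w * xbar + (1.0 - w) * mu0     # weighted combination
    post_var = 1.0 / (1.0 / data_var + 1.0 / sigma0_2)  # precisions add
    return post_mean, post_var

def update_variance(xs, mu, alpha, beta):
    """Posterior (alpha, beta) of sigma^2 ~ IG given data xs with known mean mu."""
    n = len(xs)
    ss = sum((x - mu) ** 2 for x in xs)
    return alpha + n / 2.0, beta + ss / 2.0

def sample_inverse_gamma(alpha, beta, rng=random):
    """Draw sigma^2 ~ IG(alpha, beta) as the reciprocal of a Gamma(alpha, rate=beta) draw."""
    # random.gammavariate takes (shape, scale), so the scale is 1 / beta.
    return 1.0 / rng.gammavariate(alpha, 1.0 / beta)

post_mean, post_var = update_mean([2.0], sigma2=1.0, mu0=0.0, sigma0_2=1.0)
alpha_post, beta_post = update_variance([1.0, 3.0], mu=2.0, alpha=2.0, beta=1.0)
```

With equal prior and data variances and a single observation, the posterior mean lands halfway between the observation and the prior mean, which is the "weighted combination" the slide points out.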

What have we accomplished so far?

• Not too much, actually. We turned EM into a sampling algorithm to get a posterior on μ and σ, but we’re not taking advantage of the Dirichlet prior yet!

• To use the DP, we need to be resampling

α too!


A posterior expression for alpha

Background (Rasmussen, 2000): the mixing proportions, $\pi_j$, are given a symmetric Dirichlet (also known as multivariate beta) prior with concentration parameter $\alpha/k$:

$$p(\pi_1, \dots, \pi_k \mid \alpha) \sim \mathrm{Dirichlet}(\alpha/k, \dots, \alpha/k) = \frac{\Gamma(\alpha)}{\Gamma(\alpha/k)^k}\prod_{j=1}^{k}\pi_j^{\alpha/k-1} \qquad (10)$$

where the mixing proportions must be positive and sum to one. Given the mixing proportions, the prior for the occupation numbers, $n_j$, is multinomial and the joint distribution of the indicators becomes:

$$p(c_1, \dots, c_n \mid \pi_1, \dots, \pi_k) = \prod_{j=1}^{k}\pi_j^{n_j}, \qquad n_j = \sum_{i=1}^{n}\delta_{\mathrm{Kronecker}}(c_i, j) \qquad (11)$$

• We place a vague gamma prior on alpha (makes sense, since all we know is that it’s greater than zero). Specifically, a vague prior of inverse Gamma shape is put on the concentration parameter α:

$$p(\alpha^{-1}) \sim G(1, 1) \implies p(\alpha) \propto \alpha^{-3/2}\exp\left(-\frac{1}{2\alpha}\right) \qquad (14)$$

• We derive a likelihood expression for α. Using the standard Dirichlet integral, we may integrate out the mixing proportions and write the prior directly in terms of the indicators:

$$\begin{aligned}
p(c_1, \dots, c_n \mid \alpha) &= \int p(c_1, \dots, c_n \mid \pi_1, \dots, \pi_k)\,p(\pi_1, \dots, \pi_k)\,d\pi_1 \cdots d\pi_k \\
&= \frac{\Gamma(\alpha)}{\Gamma(\alpha/k)^k}\int\prod_{j=1}^{k}\pi_j^{n_j+\alpha/k-1}\,d\pi_j \\
&= \frac{\Gamma(\alpha)}{\Gamma(n+\alpha)}\prod_{j=1}^{k}\frac{\Gamma(n_j+\alpha/k)}{\Gamma(\alpha/k)} \qquad (12)
\end{aligned}$$

Ugh... thanks Rasmussen

In order to be able to use Gibbs sampling for the (discrete) indicators, $c_i$, we need the conditional prior for a single indicator given all the others; this is easily obtained from eq. (12) by keeping all but a single indicator fixed:

$$p(c_i = j \mid c_{-i}, \alpha) = \frac{n_{-i,j} + \alpha/k}{n - 1 + \alpha} \qquad (13)$$

where the subscript $-i$ indicates all indexes except $i$ and $n_{-i,j}$ is the number of observations, excluding $y_i$, that are associated with component $j$. The posteriors for the indicators are derived in the next section.

• Multiply the likelihood together with the prior from eq. (14) to obtain the posterior:

$$p(\alpha \mid k, n) \propto \frac{\alpha^{k-3/2}\exp\left(-\frac{1}{2\alpha}\right)\Gamma(\alpha)}{\Gamma(n+\alpha)} \qquad (15)$$

Says that alpha depends only on the number of components and data points, and not their distribution! That is, the posterior for α depends only on the number of observations, n, and the number of components, k, and not on how the observations are distributed among the components.

$p(\log(\alpha) \mid k, n)$ is log-concave, so we may efficiently generate samples from it. (Rasmussen uses the same argument for the hyperparameter β: $p(\log(\beta) \mid s_1, \dots, s_k, w)$ is not of standard form but is log-concave, so independent samples for $\log(\beta)$ can be generated with the Adaptive Rejection Sampling (ARS) technique [Gilks & Wild, 1992] and transformed to get values for β.)
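As a small illustration of the conditional prior in eq. (13), the sketch below computes $p(c_i = j \mid c_{-i}, \alpha)$ for every component of a finite mixture with k components. The function name `indicator_prior` and the toy assignment vector are assumptions made for this example only.

```python
from collections import Counter

def indicator_prior(c, i, k, alpha):
    """Return [p(c_i = j | c_-i, alpha) for j in range(k)], following eq. (13).

    c is the list of current indicators (values in 0..k-1); i is the index
    being resampled. Hypothetical helper for illustration.
    """
    n = len(c)
    # n_{-i,j}: occupation numbers with observation i left out
    counts = Counter(cj for idx, cj in enumerate(c) if idx != i)
    return [(counts[j] + alpha / k) / (n - 1 + alpha) for j in range(k)]

# Toy example: 4 points, 3 components, resampling the last indicator
probs = indicator_prior([0, 0, 1, 2], i=3, k=3, alpha=1.5)
```

Components that already hold more points get proportionally more prior mass, and the α/k term keeps empty components reachable. The probabilities sum to one because the counts sum to n − 1.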

What’s all this math actually saying?

• That to update α, all we need to know is the number of data points n and clusters k
• Larger values of k (for a fixed n) are associated with larger values of α; larger values of n (for a fixed k) pull α back down, since the same number of clusters is spread over more data
• Let’s see: (>> prelim 4.5)

[Figure: three panels titled n=40,k=10; n=40,k=25; n=60,k=25]
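To see how n and k move the posterior on α, one can evaluate the unnormalized log of eq. (15) on a grid. This is a rough sketch rather than the tutorial's `prelim 4.5` script: the function names, the grid range and its resolution are all assumptions chosen for illustration.

```python
import math

def log_posterior_alpha(alpha, n, k):
    """Unnormalized log p(alpha | k, n) from eq. (15)."""
    return ((k - 1.5) * math.log(alpha) - 1.0 / (2.0 * alpha)
            + math.lgamma(alpha) - math.lgamma(n + alpha))

def posterior_mode(n, k, grid):
    """Grid point with the highest posterior density (hypothetical helper)."""
    return max(grid, key=lambda a: log_posterior_alpha(a, n, k))

# Assumed grid: alpha from 0.05 to 200 in steps of 0.05
grid = [0.05 * i for i in range(1, 4001)]

# The three settings shown on the slide
modes = {(n, k): posterior_mode(n, k, grid)
         for n, k in [(40, 10), (40, 25), (60, 25)]}
```

Comparing the entries of `modes` shows the mode moving up when k grows at fixed n, and moving back down when n grows at fixed k.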

Putting it together: Gibbs for the DPMM

• Gibbs Sampling for the DPMM:
• Our main loop follows this same pattern:
1. for i=1→n: resample all indicators Zi
2. for j=1→k: resample all component parameters θj
3. resample α

Look familiar?? Just like EM, except we’re doing sampling, and we included alpha!

Gibbs for the DPMM

• Our specific approach to sampling is a block-Gibbs approach described by Neal (2000)
• First, our goal is to sample:
  • Z (an n×1 vector of assignments of data points to components*)
  • θ (a K×2 matrix of Gaussian means and variances)
  • α (the scalar DP concentration parameter)

* this implies k as well

** this is not actually the full version discussed by Rasmussen (2000). There he included normal and gamma priors on the hyperparameters u0, p0, a0, b0, which I chose to ignore for simplicity.
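The three-step loop (indicators, component parameters, α) can be sketched as follows. This is a simplified illustration under stated assumptions, not the tutorial's demo code: data are 1-D, the component variance `sigma2` is held fixed and known so θ reduces to the means, the means have a conjugate N(`mu0`, `sigma0_2`) prior, new clusters are opened with weight α times the prior predictive density, and α is drawn from a grid approximation to eq. (15). All names are hypothetical.

```python
import math
import random

def normal_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def sample_mean(xs, sigma2, mu0, sigma0_2, rng):
    # Conjugate update for a Gaussian mean with known variance: precisions add.
    prec = len(xs) / sigma2 + 1.0 / sigma0_2
    mean = (sum(xs) / sigma2 + mu0 / sigma0_2) / prec
    return rng.gauss(mean, math.sqrt(1.0 / prec))

def sample_alpha(n, k, rng, grid):
    # Grid approximation to the posterior in eq. (15).
    logp = [(k - 1.5) * math.log(a) - 0.5 / a
            + math.lgamma(a) - math.lgamma(n + a) for a in grid]
    top = max(logp)
    return rng.choices(grid, weights=[math.exp(lp - top) for lp in logp])[0]

def gibbs_sweep(x, z, mu, alpha, sigma2, mu0, sigma0_2, rng, grid):
    """One sweep. z[i] is a cluster label, mu maps label -> mean. Returns new alpha."""
    n = len(x)
    # 1. resample all indicators
    for i in range(n):
        old, z[i] = z[i], None
        if old not in z:                 # point i was alone: drop the empty cluster
            del mu[old]
        labels = list(mu)
        weights = [z.count(j) * normal_pdf(x[i], mu[j], sigma2) for j in labels]
        # weight for opening a new cluster: alpha times the prior predictive density
        weights.append(alpha * normal_pdf(x[i], mu0, sigma2 + sigma0_2))
        pick = rng.choices(range(len(weights)), weights=weights)[0]
        if pick == len(labels):
            new = max(mu, default=-1) + 1
            mu[new] = sample_mean([x[i]], sigma2, mu0, sigma0_2, rng)
            z[i] = new
        else:
            z[i] = labels[pick]
    # 2. resample all component parameters (means only in this sketch)
    for j in mu:
        members = [xi for xi, zi in zip(x, z) if zi == j]
        mu[j] = sample_mean(members, sigma2, mu0, sigma0_2, rng)
    # 3. resample alpha given n and the current number of clusters
    return sample_alpha(n, len(mu), rng, grid)

# Usage on synthetic data: two well-separated groups, everything starts in one cluster
rng = random.Random(0)
x = [rng.gauss(-4, 1) for _ in range(30)] + [rng.gauss(4, 1) for _ in range(30)]
z, mu, alpha = [0] * len(x), {0: 0.0}, 1.0
grid = [0.05 * i for i in range(1, 401)]
for _ in range(50):
    alpha = gibbs_sweep(x, z, mu, alpha, 1.0, 0.0, 25.0, rng, grid)
```

Fixing the variance keeps the sketch short. The version described on the slides also resamples each component's variance, which would add an inverse-Gamma draw in step 2.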

DPMM Results

• Draw samples 1 through n (s = 1...n)
• See for yourself: >> Demo 5.2 (finally!)

* Can visualize posterior on θ too, but didn’t bother here

DPMM + MCMC Summary

• Random walk algorithm (not hill climbing)

• Gives samples of posterior over (w, μ, σ, α)

• Searches space of: assignment (Z) × μ × σ × α

EM+GMM vs. DPMM

• What are advantages of EM?
  • fast
  • soft component-assignment
• What are advantages of DPMM?
  • Doesn’t depend critically on any user-set parameters
  • Not a hill-climbing method
• Use EM when you know K (and what a good answer looks like), use DPMM when finding K is interesting, or you simply don’t have a clue