CONFIGURABLE CONSISTENCY FOR WIDE-AREA CACHING


by

Sai R. Susarla

A dissertation submitted to the faculty of

The University of Utah

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy

in

Computer Science

School of Computing

The University of Utah

May 2007

Copyright © Sai R. Susarla 2007

All Rights Reserved

THE UNIVERSITY OF UTAH GRADUATE SCHOOL

SUPERVISORY COMMITTEE APPROVAL

of a dissertation submitted by

Sai R. Susarla

This dissertation has been read by each member of the following supervisory committee and by majority vote has been found to be satisfactory.

Chair:

John B. Carter

Wilson Hsieh

Jay Lepreau

Gary Lindstrom

Edward R. Zayas

THE UNIVERSITY OF UTAH GRADUATE SCHOOL

FINAL READING APPROVAL

To the Graduate Council of the University of Utah:

I have read the dissertation of

Sai R. Susarla

in its final form and have found that (1) its format, citations, and bibliographic style are consistent and acceptable; (2) its illustrative materials including figures, tables, and charts are in place; and (3) the final manuscript is satisfactory to the Supervisory Committee and is ready for submission to The Graduate School.

Date

John B. Carter

Chair: Supervisory Committee

Approved for the Major Department

Martin Berzins

Chair/Director

Approved for the Graduate Council

David S. Chapman

Dean of The Graduate School

ABSTRACT

Data caching is a well-understood technique for improving the performance and availability of wide-area distributed applications. The complexity of caching algorithms motivates the need for reusable middleware support to manage caching. To support diverse data sharing needs effectively, a caching middleware must provide a flexible consistency solution that (i) allows applications to express a broad variety of consistency needs, (ii) enforces consistency efficiently among WAN replicas satisfying those needs, and (iii) employs application-independent mechanisms that facilitate reuse. Existing replication solutions either target specific sharing needs and lack flexibility, or leave a significant consistency management burden on the application programmer. As a result, they cannot effectively offload the complexity of caching from a broad set of applications.

In this dissertation, we show that a small set of customizable data coherence mechanisms can support wide-area replication effectively for distributed services with very diverse consistency requirements. Specifically, we present a novel flexible consistency framework called configurable consistency that enables a single middleware to effectively support three important classes of applications, namely, file sharing, shared database and directory services, and real-time collaboration. Instead of providing a few prepackaged consistency policies, our framework splits consistency management into design choices along five orthogonal aspects, namely, concurrency control, replica synchronization, failure handling, update visibility, and view isolation. Based on a detailed classification of application needs, the design choices can be combined to simultaneously enforce diverse consistency requirements for data access. We have designed and prototyped a middleware file store called Swarm that provides network-efficient wide-area peer caching with configurable consistency.

To demonstrate the practical effectiveness of the configurable consistency framework, we built four wide-area network services that store data with distinct consistency needs in Swarm. They leverage its caching mechanisms by employing different sets of configurable consistency choices. The services are: (1) a wide-area file system, (2) a proxy-caching service for enterprise objects, (3) a database augmented with transparent read-write caching support, and (4) a real-time multicast service. Though existing middleware systems can individually support some of these services, none of them provides a consistency solution flexible enough to support all of the services efficiently. When these services employ caching with Swarm, they deliver more than 60% of the performance of custom-tuned implementations in terms of end-to-end latency, throughput, and network economy. Also, Swarm-based wide-area peer caching improves service performance by 300 to 500% relative to client-server caching and RPCs.


This dissertation is an offering to

The Divine Mother of All Knowledge, Gaayatri.

CONTENTS

ABSTRACT

LIST OF FIGURES

LIST OF TABLES

GLOSSARY OF TERMS USED

ACKNOWLEDGMENTS

CHAPTERS

1. INTRODUCTION

1.1 Consistency Needs of Distributed Applications

1.1.1 Requirements of a Caching Middleware

1.1.2 Existing Work

1.2 Configurable Consistency

1.2.1 Configurable Consistency Options

1.2.2 Discussion

1.2.3 Limitations of the Framework

1.2.4 Swarm

1.3 Evaluation

1.4 Thesis

1.5 Roadmap

2. CONFIGURABLE CONSISTENCY: RATIONALE

2.1 Background

2.1.1 Programming Distributed Applications

2.1.2 Consistency

2.2 Replication Needs of Applications: A Survey

2.3 Representative Applications

2.3.1 File Sharing

2.3.2 Proxy-based Auction Service

2.3.3 Resource Directory Service

2.3.4 Chat Service

2.4 Application Characteristics

2.5 Consistency Requirements

2.5.1 Update Stability

2.5.2 View Isolation

2.5.3 Concurrency Control

2.5.4 Replica Synchronization

2.5.5 Update Dependencies

2.5.6 Failure-Handling

2.6 Discussion

2.7 Limitations of Existing Consistency Solutions

2.8 Summary

3. CONFIGURABLE CONSISTENCY FRAMEWORK

3.1 Framework Requirements

3.2 Data Access Interface

3.3 Configurable Consistency Options

3.3.1 Concurrency Control

3.3.2 Replica Synchronization

3.3.2.1 Timeliness Guarantee

3.3.2.2 Strength of Timeliness Guarantee

3.3.3 Update Ordering Constraints

3.3.3.1 Ordering Independent Updates

3.3.3.2 Semantic Dependencies

3.3.4 Failure Handling

3.3.5 Visibility and Isolation

3.4 Example Usage

3.5 Relationship to Other Consistency Models

3.5.1 Memory Consistency Models

3.5.2 Session-oriented Consistency Models

3.5.3 Session Guarantees for Mobile Data Access

3.5.4 Transactions

3.5.5 Flexible Consistency Schemes

3.6 Discussion

3.6.1 Ease of Use

3.6.2 Orthogonality

3.6.3 Handling Conflicting Consistency Semantics

3.7 Limitations of the Framework

3.7.1 Conflict Matrices for Abstract Data Types

3.7.2 Application-defined Logical Views on Data

3.8 Summary

4. IMPLEMENTING CONFIGURABLE CONSISTENCY

4.1 Design Goals

4.1.1 Scope

4.2 Swarm Overview

4.2.1 Swarm Interface

4.2.2 Application Plugin Interface

4.3 Using Swarm to Build Distributed Services

4.3.1 Designing Applications to Use Swarm

4.3.2 Application Design Examples

4.3.2.1 Distributed File Service

4.3.2.2 Music File Sharing

4.3.2.3 Wide-area Enterprise Service Proxies

4.4 Architectural Overview of Swarm

4.5 File Naming and Location Tracking

4.6 Replication

4.6.1 Creating Replicas

4.6.2 Custodians for Failure-resilience

4.6.3 Retiring Replicas

4.6.4 Node Membership Management

4.6.5 Network Economy

4.6.6 Failure Resilience

4.7 Consistency Management

4.7.1 Overview

4.7.1.1 Privilege Vector

4.7.1.2 Handling Data Access

4.7.1.3 Enforcing a PV’s Consistency Guarantee

4.7.1.4 Handling Updates

4.7.1.5 Leases

4.7.1.6 Contention-aware Caching

4.7.2 Core Consistency Protocol

4.7.3 Pull Algorithm

4.7.4 Replica Divergence Control

4.7.4.1 Hard time bound (HT)

4.7.4.2 Soft time bound

4.7.4.3 Mod bound

4.7.5 Refinements

4.7.5.1 Deadlock Avoidance

4.7.5.2 Parallelism for RD Mode Sessions

4.7.6 Leases for Failure Resilience

4.7.7 Contention-aware Caching

4.8 Update Propagation

4.8.1 Enforcing Semantic Dependencies

4.8.2 Handling Concurrent Updates

4.8.3 Enforcing Global Order

4.8.4 Version Vectors

4.8.5 Relative Versions

4.9 Failure Resilience

4.9.1 Node Churn

4.10 Implementation

4.11 Discussion

4.11.1 Security

4.11.2 Disaster Recovery

4.12 Summary

5. EVALUATION

5.1 Experimental Environment

5.2 Swarmfs: A Flexible Wide-area File System

5.2.1 Evaluation Overview

5.2.2 Personal Workload

5.2.3 Sequential File Sharing over WAN (Roaming)

5.2.4 Simultaneous WAN Access (Shared RCS)

5.2.5 Evaluation

5.2.6 Summary

5.3 SwarmProxy: Wide-area Service Proxy

5.3.1 Evaluation Overview

5.3.2 Workload

5.3.3 Experiments

5.3.4 Results

5.4 SwarmDB: Replicated BerkeleyDB

5.4.1 Evaluation Overview

5.4.2 Diverse Consistency Semantics

5.4.3 Failure-resilience and Network Economy at Scale

5.4.4 Evaluation

5.5 SwarmCast: Real-time Multicast Streaming

5.5.1 Results Summary

5.5.2 Implementation

5.5.3 Experimental Setup

5.5.4 Evaluation Metrics

5.5.5 Results

5.6 Discussion

6. RELATED WORK

6.1 Domain-specific Consistency Solutions

6.2 Flexible Consistency

6.3 Wide-area Replication Algorithms

6.3.1 Replica Networking

6.3.2 Update Propagation

6.3.3 Consistency Maintenance

6.3.4 Failure Resilience

6.4 Reusable Middleware

7. FUTURE WORK AND CONCLUSIONS

7.1 Future Work

7.1.1 Improving the Framework’s Ease of Use

7.1.2 Security and Authentication

7.1.3 Applicability to Object-based Middleware

7.2 Summary

REFERENCES


LIST OF FIGURES

2.1 A distributed application with five components A1..A5 employing the function-shipping model. Each component holds a fragment of overall state and operates on other fragments via messages to their holder.

2.2 A distributed application employing the data-shipping model. A1..A5 interact only via caching needed state locally. A1 holds fragments 1 and 2 of overall state. A2 caches fragments 1 and 3. A3 holds 3 and caches 5.

2.3 A distributed application employing a hybrid model. A1, A3 and A5 are in data-shipping mode. A2 is in client mode, and explicitly communicates with A1 and A3. A4 is in hybrid mode. It caches 3, holds 4 and contacts A5 for 5.

3.1 Pseudo-code for a query operation on a replicated database. The cc options are explained in Section 3.3.

3.2 Pseudo-code for an update operation on a replicated database.

3.3 Atomic sessions example: moving file fname from one directory to another. Updates u1 and u2 are applied atomically, everywhere.

3.4 Causal sessions example. Updates u1 and u2 happen concurrently and are independent. Clients 3 and 4 receive u1 before their sessions start. Hence u3 and u4 causally depend on u1, but are independent of each other. Though u2 and u4 are independent, they are tagged as totally ordered, and hence must be applied in the same order everywhere. Data item B’s causal dependency at client 3 on the prior update to A has to be explicitly specified, while that of A at client 4 is implicitly inferred.

3.5 Update Visibility Options. Depending on the visibility setting of its ongoing WR session, replica 1 responds to the pull request from replica 2 by supplying the version (v0, v2 or v3) indicated by the curved arrows. When the WR session employs manual visibility, local version v3 has not yet been made visible and therefore is not supplied to replica 2.

3.6 Isolation Options. When replica 2 receives updates u1 and u2 from replica 1, the RD session’s isolation setting determines whether the updates are applied (i) immediately (solid curve), (ii) only when the session issues the next manual pull request (dashed curve), or (iii) only after the session ends (dotted curve).

4.1 A centralized enterprise service. Clients in remote campuses access the service via RPCs.

4.2 An enterprise application employing a Swarm-based proxy server. Clients in campus 2 access the local proxy server, while those in campus 3 invoke either server.

4.3 Control flow in an enterprise service replicated using Swarm.

4.4 Structure of a Swarm server and client process.

4.5 File replication in a Swarm network. Files F1 and F2 are replicated at Swarm servers N1..N6. Permanent copies are shown in a darker shade. F1 has two custodians, N4 and N5, while F2 has only one, namely, N5. Replica hierarchies are shown for F1 and F2 rooted at N4 and N5, respectively. Arrows indicate parent links.

4.6 Replica Hierarchy Construction in Swarm. (a) Nodes N1 and N3 cache file F2 from its home N5. (b) N2 and N4 cache it from N5; N1 reconnects to the closer replica N3. (c) Both N2 and N4 reconnect to N3 as it is closer than N5. (d) Finally, N2 reconnects to N1 as it is closer than N3.

4.7 Consistency Privilege Vector.

4.8 Pseudo-code for opening and closing a session.

4.9 Consistency management actions in response to client access to file F2 of Figure 4.5(d). Each replica is labelled with its currentPV, and ‘-’ denotes ∞.

4.10 Basic Consistency Management Algorithms.

4.11 Basic Pull Algorithm for Configurable Consistency.

4.12 Computation of relative PVs during pull operations. The PVs labelling the edges show the PVin of a replica obtained from each of its neighbors, and ‘-’ denotes ∞. For instance, in (b), after node N3 finishes pulling from N5, its N5.PVin = [wr, ∞, ∞, [10,4]], and N5.PVout = [rd, ∞, ∞, ∞]. Its currentPV becomes PVmin([wr, ∞, ∞, [10,4]], [rd, ∞, ∞, ∞], [wrlk]), which is [rd, ∞, ∞, ∞].

4.13 Basic Push Algorithm for Configurable Consistency.

4.14 Replication State for Adaptive Caching. Only salient fields are presented for readability.

4.15 Adaptive Caching Algorithms.

4.16 Master Election in Adaptive Caching. The algorithms presented here are simplified for readability and do not handle race conditions such as simultaneous master elections.

4.17 Adaptive Caching of file F2 of Figure 4.5 with hysteresis set to (low=3, high=5). In each figure, the replica in master mode is shown darkly shaded, peers are lightly shaded, and slaves are unshaded.

5.1 Network topology emulated by Emulab’s delayed LAN configuration. The figure shows each node having a 1Mbps link to the Internet routing core, and a 40ms round-trip delay to/from all other nodes.

5.2 Andrew-Tcl Performance on 100Mbps LAN.

5.3 Andrew-Tcl Details on 100Mbps LAN.

5.4 Andrew-Tcl results over 1Mbps, 40ms RTT link.

5.5 Network Topology for the Swarmfs Experiments described in Sections 5.2.3 and 5.2.4.

5.6 The replica hierarchy observed for a Swarmfs file in the network of Figure 5.5.

5.7 Roaming File Access: Swarmfs pulls source files from nearby replicas. Strong-mode Coda correctly compiles all files, but exhibits poor performance. Weak-mode Coda performs well, but generates incorrect results on the three nodes (T2, F1, F2) farthest from server U1.

5.8 Latency to fetch and modify a file sequentially at WAN nodes. Strong-mode Coda writes the modified file synchronously to the server.

5.9 Repository module access patterns during various phases of the RCS experiment. Graphs in Figures 5.10 and 5.11 show the measured file checkout latencies on Swarmfs-based RCS at Univ. (U) and Turkey (T) sites relative to client-server RCS.

5.10 RCS on Swarmfs: Checkout Latencies near home server on the “University” (U) LAN.

5.11 RCS on Swarmfs: Checkout Latencies at “Turkey” (T) site, far away from home server.

5.12 Network Architecture of the SwarmProxy service.

5.13 SwarmProxy aggregate throughput (ops/sec) with adaptive replication.

5.14 SwarmProxy aggregate throughput (ops/sec) with aggressive caching. The Y-axis in this graph is set to a smaller scale than in Figure 5.13 to show more detail.

5.15 SwarmProxy latencies for local objects at 40% locality.

5.16 SwarmProxy latencies for non-local objects at 40% locality.

5.17 SwarmDB throughput observed at each replica for reads (lookups and cursor-based scans). ‘Swarm local’ is much worse than ‘local’ because SwarmDB opens a session on the local server (incurring an inter-process RPC) for every read in our benchmark.

5.18 SwarmDB throughput observed at each replica for writes (insertions, deletions and updates). Writes under master-slave replication perform slightly worse than RPC due to the update propagation overhead to slaves.

5.19 Emulated network topology for the large-scale file sharing experiment. Each oval denotes a ‘campus LAN’ with ten user nodes, each running a SwarmDB client and Swarm server. The labels denote the bandwidth (bits/sec) and one-way delay of network links (from node to hub).


5.20 New file access latencies in a Swarm-based peer file sharing network of

240 nodes under node churn. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

5.21 The average incoming bandwidth consumed at a router’s WAN link by file

downloads. Swarm with proximity-aware replica networking consumes

much less WAN link bandwidth than with random networking. . . . . . . . . . 173

5.22 Data dissemination bandwidth of a single Swarm vs. hand-coded relay

for various number of subscribers across a 100Mbps switched LAN. With

a few subscribers, Swarm-based producer is CPU-bound, which limits its

throughput. But with many subscribers, Swarm’s pipelined I/O delivers

90% efficiency. Our hand-coded relay requires a significant redesign to

achieve comparable efficiency. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

5.23 End-to-end packet latency of a single relay with various numbers of subscribers across 100Mbps LAN, shown to a log-log scale.. Swarm relay

induces an order of magnitude longer delay as it is CPU-bound in our

unoptimized implementation. The delay is due to packets getting queued

in the network link to the Swarm server. . . . . . . . . . . . . . . . . . . . . . . . . . . 178

5.24 A SwarmCast producer’s sustained send throughput (KBytes/sec) scales

linearly as more Swarm servers are used for multicast (shown to log-log

scale), regardless of their fanout. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

5.25 Adding Swarm relays linearly scales multicast throughput on a 100Mbps

switched LAN. The graph shows the throughput normalized to a single

ideal relay that delivers the full bandwidth of a 100Mbps link. A network

of 20 Swarm servers deliver 80% of the throughput of 20 ideal relays. . . . 180

5.26 Swarm’s multicast efficiency and throughput scaling. The root Swarm server is able to process packets from the producer at only 60% of the ideal rate due to its unoptimized, compute-bound implementation. However, its efficient hierarchical propagation mechanism disseminates them at higher efficiency. The fanout of the Swarm network has only a minor impact on throughput.

5.27 End-to-end propagation latency via a Swarm relay network. Swarm servers are compute-bound for packet processing and cause packet queuing delays. However, when multiple servers are deployed, individual servers are less loaded, which drastically reduces the overall packet latency. With a deeper relay tree (obtained with a relay fanout of 2), average packet latency increases marginally due to the extra hops through relays.

5.28 Packet delay contributed by various levels of the Swarm multicast tree (shown on a log-log scale). The two slanting lines indicate that the root relay and its first-level descendants are operating at their maximum capacity and are clearly compute-bound. Packet queuing at those relays contributes the most to the overall packet latency.

6.1 The spectrum of available consistency management solutions. We categorize them based on the variety of application consistency semantics they cover and the effort required to employ them in application design.


LIST OF TABLES

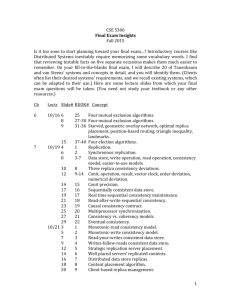

1.1 Consistency options provided by the Configurable Consistency (CC) framework.

1.2 Configurable Consistency (CC) options for popular consistency flavors.

2.1 Characteristics of several classes of representative wide-area applications.

2.2 Consistency needs of several representative wide-area applications.

3.1 Concurrency matrix for Configurable Consistency.

3.2 Expressing the needs of representative applications using Configurable Consistency.

3.3 Expressing various consistency semantics using Configurable Consistency options.

3.4 Expressing other flexible models using Configurable Consistency options.

4.1 Swarm API.

4.2 Swarm interface to application plugins.

5.1 Consistency semantics employed for replicated BerkeleyDB (SwarmDB) and the CC options used to achieve them.

GLOSSARY OF TERMS USED

AFS Andrew File System, a client-server distributed file system developed at CMU

Andrew a file system benchmark created at CMU

CMU Carnegie Mellon University

CVS Concurrent Versions System, a more sophisticated version control system based on RCS

Caching Creating a temporary copy of remotely located data to speed up future retrievals nearby

Coda An extension of the AFS file system that operates in weakly connected network environments

LAN Local-area Network

NFS Network File System developed by Sun Microsystems

NOP null/no operation

NTP Network Time Protocol used to synchronize wall clock time among computers.

RCS Revision Control System, a public-domain file version control software

Replication Keeping multiple copies of a data item for any reason, such as performance or redundancy

TTCP A sockets-based Unix program to measure the network bandwidth between two machines

VFS Virtual File System: an interface developed for an operating system kernel to interact with file system-specific logic in a portable way

WAN Wide-area Network

ACKNOWLEDGMENTS

At the outset, I owe the successful pursuit of my Ph.D. degree to two great people: my advisor, John Carter, and my mentor, Jay Lepreau. I really admire John’s immeasurable patience and benevolence in putting up with a ghost student like me who practically went away in the middle of my Ph.D. for four long years to work full-time (at Novell). I thank John for giving me complete freedom to explore territory that was new to both of us, while gently nudging me towards practicality with his wisdom. Thanks to John’s able guidance, I could experience the thrill of systems research for which I joined graduate school: conceiving and building a really ‘cool’, practically valuable system of significant complexity all by myself, from scratch. Finally, I owe most of my writing skills to John. However, all the errors you may find in this thesis are mine.

I would like to thank Jay Lepreau for providing generous financial as well as moral support throughout my graduate study at Utah. Jay Lepreau and Bryan Ford taught me my first steps in research. I thank Jay for allowing me to make extensive use of his incredible tool, the Emulab network testbed, without which my work would have been impossible. I thank the members of the Flux research group at Utah, especially Mike Hibler, Eric Eide, Robert Ricci, and Kirk Webb, for patiently listening to my technical problems and ideas, and for providing critical feedback that greatly helped my work. I really enjoyed the fun atmosphere in the Flux group at Utah.

I feel fortunate to have been guided by Wilson Hsieh, a star researcher. From him, I learned a great deal about how to clearly identify and articulate the novel aspects of my work that formed my thesis. The critical feedback I received from Wilson, Gary Lindstrom, and Ed Zayas shaped my understanding of doctoral research. The positive energy, enthusiasm, and joy that Ed Zayas radiates are contagious. I cherish every moment I spent with him at Novell and later at Network Appliance.

My graduate study has been a long journey during which I met many great lifelong friends, especially Anand, Gangu, Vamsi, and Sagar. Each is unique, inspiring, pleasant, and heart-warming in his own way. I am grateful to my family members, including my parents, grandparents, aunts and uncles (especially Aunt Ratna), and my wife Sarada, without whose self-giving love and encouragement I would not have achieved anything.

Finally, I offer my utmost gratitude to the Veda Maataa, Gaayatrii (the Divine Mother of Knowledge), who nurtured me every moment in the form of all these people. She provided what I needed just when I needed it, and guides me towards the goal of Life: Eternal Existence (Sat), Infinite Knowledge and Power (Chit), and Inalienable Bliss (Ananda).


CHAPTER 1

INTRODUCTION

Modern Internet-based services increasingly operate over diverse networks and cater to geographically widespread users. Caching of service state and user data at multiple locations is well understood as a technique to scale service capacity, to hide network variability from users, and to provide a responsive and highly available service. Caching of mutable data raises the issue of consistency management, which ensures that all replica contents converge to a common final value in spite of updates. A dynamic wide area environment poses several unique challenges to caching algorithms, such as diverse network characteristics, diverse resource constraints, and machine failures. The complexity of meeting these challenges motivates the need for a reusable solution to support data caching and consistency in new distributed services. In this dissertation, we address the following question: can a single middleware system provide cached data access effectively in diverse distributed services with a wide variety of sharing needs?

To identify the core desirable features of a caching middleware, we have surveyed the data sharing needs of a wide variety of distributed applications, ranging from personal file access (with little data sharing) to widespread real-time collaboration (with fine-grain synchronization). We found that although replicable data is prevalent in many applications, their data characteristics (e.g., the unit of data access, its mutability, and the frequency of read/write sharing) and consistency requirements vary widely. Supporting this diversity efficiently requires greater customizability of consistency mechanisms than existing solutions provide. Also, applications operate in diverse network environments ranging from well-connected corporate servers to intermittently connected mobile devices. The ability to promiscuously replicate data¹ and synchronize with any available replica, called pervasive replication [62], greatly enhances application availability and performance in such environments. Finally, we observed that certain core design choices recur in the consistency management of diverse applications, although different applications need to make different sets of choices. This commonality in the consistency enforcement options allows the development of a flexible consistency framework and its implementation in a caching middleware to support diverse sharing needs.

¹ The term replication has been used in previous literature [62, 78, 22] to refer both to keeping transient copies/replicas of data to improve access latency and availability (also called caching or second-class replication [38]) and to maintaining multiple redundant copies of data to protect against permanent loss of some of them (called first-class replication). In this dissertation, we use the unqualified term replication to refer to caching, unless stated otherwise.

Based on the above observations, this dissertation presents a novel flexible consistency management framework called configurable consistency that supports efficient caching for diverse distributed applications running in non-uniform network environments (including WANs). The configurable consistency framework can express a broader mix of consistency semantics than existing models (ranging from strong to eventual consistency) by combining a small number of orthogonal design choices. The framework’s choices allow an application to make different tradeoffs between consistency, availability, and performance over time. Its interface also allows different users to impose different consistency requirements on the same data simultaneously. Thus, the framework is highly customizable and adaptable to varying user requirements. Configurable consistency can be enforced efficiently among data replicas spread across non-uniform networks. If a data service or middleware adopts the configurable consistency protocol to synchronize peer replicas, it can efficiently support the read/write data sharing needs of a variety of applications. For clustered services, read-write caching with configurable consistency helps incrementally scale service capacity to handle client load. For wide area services, it also improves end-to-end service latency and throughput, and reduces WAN usage.

To support our flexibility and efficiency claims, we present the design of a middleware data store called Swarm² that provides pervasive wide area caching and supports diverse application needs by implementing configurable consistency. To demonstrate the flexibility of configurable consistency, we present four network services that store data with distinct consistency needs in Swarm and leverage its caching support with different configurable consistency choices. Though existing systems support some of these services, none of them has a consistency solution flexible enough to support all of the services efficiently. Under worst-case workloads, services using Swarm middleware for caching perform within 20% of equivalent hand-optimized implementations in terms of end-to-end latency, throughput, and network utilization. However, relative to traditional client-server implementations without caching, Swarm-based caching improves service performance by at least 500% on realistic workloads. Thus, layering these services on top of Swarm middleware incurs only a low worst-case penalty but benefits them significantly in the common case.

² Swarm stands for Scalable Wide Area Replication Middleware.

Swarm accomplishes this by providing the following features: (i) a failure-resilient, proximity-aware replica management mechanism that organizes data replicas into an overlay hierarchy for scalable synchronization and adjusts it based on observed network characteristics and node accessibility; (ii) an implementation of the configurable consistency framework that lets applications customize the consistency semantics of shared data to match their diverse sharing and performance needs; and (iii) a contention-aware replication control mechanism that limits replica synchronization overhead by monitoring the contention among replica sites and adjusting the degree of replication accordingly. For P2P file sharing with close-to-open consistency semantics, proximity-aware replica management reduces data access latency and WAN bandwidth consumption to roughly one-fifth of those incurred with random replica networking. With configurable consistency, database applications can operate with diverse consistency requirements without redesign. Relaxing consistency improves their throughput by an order of magnitude over strong consistency and read-only replication. For enterprise services employing WAN proxies, contention-aware replication control outperforms both aggressive caching and RPCs (i.e., no replication) at all levels of contention while still providing strong consistency.

In Section 1.1, we present three important classes of distributed applications that we target for replication support. We discuss the diversity of their sharing needs, the commonality of their consistency requirements, and the existing ways in which those requirements are satisfied, to motivate our thesis. In Section 1.2, we outline the configurable consistency framework and present an overview of Swarm. Finally, in Section 1.3, we outline the four specific representative applications we built using Swarm and our evaluation of their efficiency relative to alternative implementations.

1.1 Consistency Needs of Distributed Applications

Previous research has revealed that distributed applications vary widely in their consistency requirements [77]. It is also well known that consistency, scalable performance, and high availability in the wide area are often conflicting goals [23]. Different applications need to make different tradeoffs based on application and network characteristics. To understand the diversity in replication needs, we studied three important and broad classes of distributed services: (i) file access, (ii) directory and database services, and (iii) real-time collaborative groupware. Though efficient custom replication solutions exist for many individual applications in all these categories, our aim is to determine whether a more generic middleware solution that efficiently supports a wide variety of applications is feasible.

File systems are used by myriad applications to store and share persistent data, but

applications differ in the way files are accessed. Personal files are rarely write-shared.

Software and multimedia are widely read-shared. Log files are concurrently appended.

Shared calendars and address books are concurrently updated, but their results can often

be merged automatically. Concurrent updates to version control files produce conflicts

that are hard to resolve and must be prevented. Eventual consistency (i.e., propagating

updates lazily) provides adequate semantics and high availability in the normal case

where files are rarely write-shared. But during periods of close collaboration (e.g., an approaching deadline), users need tighter synchronization guarantees such as close-to-open

(to view latest updates) or strong consistency (to prevent update conflicts) to facilitate

productive fine-grained document sharing. Hence users need to make different tradeoffs

between consistency and availability for files at different times. Currently, the only

recourse for users during close collaboration over the wide area is to avoid distributed file

systems and resort to manual synchronization at a central server (via ssh/rsync or email).


A directory service locates resources such as users, devices, and employee records based on their attributes. The consistency needs of a directory service depend on the applications using it and on the resources being indexed. A music file index used for peer-to-peer music search, such as KaZaa, might require only a very weak (e.g., eventual) consistency guarantee, but the index must scale to a large, floating population of (thousands of) users who update it frequently. Some updates to an employee directory may need to take effect “immediately” at all replicas (e.g., revoking a user’s access privileges to sensitive data), while other updates can be performed with relaxed consistency. This requires support for multiple simultaneous accesses to the same data with different consistency requirements.

Enterprise data services (such as auctions, e-commerce, and inventory management) involve multi-user access to structured data. Their responsiveness to geographically dispersed users can be improved by deploying wide area proxies that cache enterprise objects (e.g., sales and customer records). For instance, the proxy at a call center could cache many client records locally, thereby speeding up response. However, enterprise services often need to enforce strong consistency and integrity constraints in spite of wide area operation. When proxies are added, node and network failures must not degrade service availability. Also, since the popularity of objects may vary widely, caching decisions must be made based on the available locality. Widely caching data with poor locality leads to significant coherence traffic and hurts, rather than improves, performance. These applications typically have semantic dependencies among updates, such as atomicity and causality, that must be preserved to ensure data integrity.

Real-time collaboration involves producer-consumer style interaction among multiple users or application components in real time. The key requirement is to deliver data from producers to consumers with minimal latency while utilizing network bandwidth efficiently. Example applications include data logging for analysis, chat (i.e., many-to-many data multicast), stock and game updates, and multimedia streaming to wide area subscribers. In the traditional organization of these applications, producers send their data to a central server that disseminates it to interested consumers. Replicating the central server’s task among a multicast network of servers eases the load on the central server and thus has the potential to improve scalability. Such applications differ in the staleness of data tolerated by consumers.

1.1.1 Requirements of a Caching Middleware

In general, applications widely differ in several respects including their data access

locality, the frequency and extent of read and write sharing among replicas, the typical

replication factor, semantic interdependencies among updates, the likelihood of conflicts

among concurrent updates, and their amenability to automatic conflict resolution. Operating these applications in the wide area introduces several additional challenges. In

a wide area environment, network links typically have non-uniform delay and varying

bandwidth due to congestion and cross-traffic. Both nodes and links may become intermittently unavailable. To support diverse application requirements in such a dynamic

environment, we believe a caching solution must have the following features:

• Customizable Consistency: Applications require a high degree of customizability of consistency mechanisms to achieve the right tradeoff between consistency

semantics, availability, and performance. The same application might need to

operate with different consistency semantics based on its changing resources and

connectivity (e.g., file sharing).

• Pervasive Replication: Application components must be able to freely cache data

and synchronize with any available replica to provide high availability in the wide

area. Rigid communication topologies such as client-server or static hierarchies

prevent efficient utilization of network resources and restrict availability.

• Network Economy: The caching and consistency mechanisms must use network

capacity efficiently and hide variable delays to provide predictable response to

users.

• Failure Resilience: For practical deployment in the wide area, a caching solution

must continue to operate correctly in spite of the failure of some nodes and network

links, i.e., it must not violate the consistency guarantees given to applications.


1.1.2 Existing Work

Previous research efforts have proposed a number of efficient techniques to support

pervasive wide area replication [62, 56, 18] as well as consistency mechanisms that suit

distinct data sharing patterns [38, 74, 2, 52, 41]. However, as we explain below, existing

systems lack one or more of the listed features that we feel are essential to support the

data sharing needs of the diverse application classes mentioned above.

Many systems [38, 62, 52, 41, 46, 61] handle the diversity in replication needs by devising packaged consistency policies tailored to applications with specific data and network characteristics. For example, the Coda file system [38] provides two modes of operation with distinct consistency policies, namely, close-to-open and eventual consistency, based on the connectivity of its clients to servers. Fluid replication [37] provides three flavors of consistency on a per-file-access basis to support sharing of read-only files, rarely write-shared personal files, and files requiring serializability. These approaches adequately serve specific access patterns but cannot provide slightly different semantics without a system redesign. For instance, they cannot enforce different policies for file reads and writes, or control replica synchronization frequency, both of which our evaluation shows can significantly improve application performance.

Several research efforts [39, 77] have recognized the need to allow tuning of consistency to specific application needs, and have developed consistency interfaces that

provide options to customize consistency. TACT defines a continuous consistency model

that provides continuous control over the degree of divergence of replica contents [77].

TACT’s model provides three powerful orthogonal metrics in which to express the replica

divergence requirements of applications: numerical error, order error, and staleness.

However, to express important consistency constraints such as atomicity, causality and

isolation, applications such as file and database services need a session abstraction for

grouping multiple data accesses into a unit, which the TACT model lacks. Also, TACT

only supports strict enforcement of divergence bounds by blocking writers. Enforcing

strict bounds is overkill for chat and directory services, and reduces their throughput and

availability for update operations in the presence of failures.


Oceanstore [60] provides an Internet-scale persistent data store for use by arbitrary distributed applications and comes closest to our vision of a reusable data replication middleware. However, it takes the extreme approach of requiring applications to both define and enforce a consistency model. It provides only a semantic update model that treats updates as procedures guarded by predicates. An application or a consistency library must design the right set of predicates to associate with updates to achieve the desired consistency. This approach leaves unanswered our question about the feasibility of a flexible consistency solution for the application classes mentioned above. Hence, although Oceanstore can theoretically support a wide variety of applications, programmers incur the burden of implementing consistency management. Several other systems [12, 75] adopt a similar approach to flexible consistency.

In summary, the lack of a sufficiently flexible consistency solution remains a key

hurdle to building a replication middleware that supports the wide area data sharing

needs of diverse applications. The difficulty lies in developing a consistency interface

that allows a broad variety of consistency semantics to be expressed, can be enforced

efficiently by a small set of application-independent mechanisms in the wide area, and is

customizable enough to enable a large set of applications to make the right tradeoffs

based on their environment. This dissertation proposes such a consistency interface

and shows how it can be implemented along with the other three features in a single

middleware to support the diverse application classes mentioned above.

In addition to the features mentioned in Section 1.1.1, for practical deployment, a

full-fledged data management middleware must also address several important issues

including security and authentication, fault-tolerance for reliability against permanent

loss of replicas, and long-term archival storage and retrieval for disaster recovery. For the

purpose of this thesis, however, we limit our scope to flexible consistency management

for pervasive replication, as it is an important enabler for reusable data management

middleware.


1.2 Configurable Consistency

To aid us in designing a more flexible consistency interface, we surveyed a number of

applications in the three classes mentioned in the previous section, looking for common

traits in their diverse consistency needs. From the survey, described in Chapter 2, we

found that a variety of consistency needs can be expressed in terms of a small number

of design choices for enforcing consistency. Those choices can be classified into five

mostly orthogonal dimensions:

• concurrency - the degree to which conflicting (read/write) accesses can be tolerated,

• replica synchronization - the degree to which replica divergence can be tolerated,

including the types of interdependencies among updates that must be preserved

when synchronizing replicas,

• failure handling - how data access should be handled when some replicas are

unreachable or have poor connectivity,

• update visibility - the granularity at which the updates issued at a replica should be

made visible globally,

• view isolation - the duration for which the data accesses at a replica should be

isolated from remote updates.

There are multiple reasonable options along each of these dimensions that create a multidimensional space for expressing consistency requirements of applications. Based on this

classification, we developed the novel configurable consistency framework that provides

the options listed in Table 1.1. When these options are combined in various ways, they

yield a rich collection of consistency semantics for reads and updates to shared data,

covering the needs of a broad mix of applications.

For instance, this approach lets a proxy-based auction service employ strong consistency for updates across all replicas, while enabling peer proxies to answer queries with different levels of accuracy by relaxing consistency for reads to limit synchronization cost. When an operation warrants incurring higher latency (e.g., placing a bid), a user can still obtain an accurate answer by specifying a stronger consistency requirement for the query.

Table 1.1. Consistency options provided by the Configurable Consistency (CC) framework. Consistency semantics are expressed for an access session by choosing one of the alternative options in each row, which are mutually exclusive. Options in bold italics indicate reasonable defaults that suit many applications. In our discussion, when we leave an option unspecified, we assume its default value.

Dimension                 Aspect            Available Consistency Options
Concurrency Control       Access mode       concurrent (RD, WR) | excl (RDLK, WRLK)
Replica Synchronization   Timeliness        manual | time (staleness = 0..∞ secs) | mod (unseen writes = 0..∞)
                          Strength          hard | soft
                          Semantic Deps.    none | causal | atomic | causal+atomic
                          Update ordering   none | total | serial
Failure Handling                            optimistic (ignore replicas w/ RTT ≥ 0..∞) | pessimistic
Update Visibility                           session | per-update | manual
View Isolation                              session | per-update | manual

The configurable consistency framework assumes that applications access (i.e., read or write) their data in sessions, and that consistency can be enforced at session boundaries as well as before and after each read or write access within a session. The framework’s definition of reads and writes is general and includes queries and updates of arbitrary complexity. In this framework, an application expresses its consistency requirements for each session as a vector of consistency options (one from each row of Table 1.1) covering several aspects of consistency management. Each row of the table lists the mutually exclusive options available to control the aspect of consistency named in its first column. The options shown in bold italics are reasonable defaults that together enforce the close-to-open consistency semantics provided by AFS [28] for coherent read-write file sharing. An application can select a different set of options for subsequent sessions on the same data item to meet dynamically varying consistency requirements. Also, different application instances can select different sets of options on the same data item simultaneously. In that case, the framework guarantees that all sessions achieve their desired semantics by providing mechanisms that serialize sessions with conflicting requirements. Thus, the framework provides applications a significant amount of customizability in consistency management.
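To make the notion of a per-session option vector concrete, the following Python sketch models one session's choices as an immutable record with one field per row of Table 1.1 and rejects values outside each row's option set. The class and field names are hypothetical (they are not Swarm's API, which Chapter 4 describes). Because the bold italics that mark the defaults in Table 1.1 did not survive, the default values here are assumptions chosen to resemble close-to-open semantics. Following the later remark that staleness can be bounded by time, by unseen updates, or both, the two timeliness bounds are kept as separate optional fields.

```python
from dataclasses import dataclass, replace
from typing import Optional

# Allowed values per row of Table 1.1 (visibility and isolation share one set).
ACCESS_MODES = {"RD", "WR", "RDLK", "WRLK"}
STRENGTHS = {"hard", "soft"}
SEMANTIC_DEPS = {"none", "causal", "atomic", "causal+atomic"}
ORDERINGS = {"none", "total", "serial"}
FAILURE_HANDLING = {"optimistic", "pessimistic"}
VIS_ISO = {"session", "per-update", "manual"}


@dataclass(frozen=True)
class ConsistencyOptions:
    """Hypothetical per-session consistency vector; defaults are assumptions."""
    access_mode: str = "RD"
    max_staleness_secs: Optional[float] = 0.0  # None = no time bound
    max_unseen_writes: Optional[int] = None    # None = no bound on unseen writes
    strength: str = "hard"
    semantic_deps: str = "none"
    ordering: str = "none"
    failure_handling: str = "pessimistic"
    rtt_cutoff_ms: float = float("inf")        # only consulted when optimistic
    visibility: str = "session"
    isolation: str = "session"

    def __post_init__(self):
        # Options within a row are mutually exclusive: each field holds exactly one.
        for name, allowed in [
            ("access_mode", ACCESS_MODES),
            ("strength", STRENGTHS),
            ("semantic_deps", SEMANTIC_DEPS),
            ("ordering", ORDERINGS),
            ("failure_handling", FAILURE_HANDLING),
            ("visibility", VIS_ISO),
            ("isolation", VIS_ISO),
        ]:
            value = getattr(self, name)
            if value not in allowed:
                raise ValueError(f"{name}={value!r} not in {sorted(allowed)}")


# Auction example from the text: exclusive writes for bids, relaxed reads for
# browsing. The 30-second staleness bound is an illustrative value only.
bid = ConsistencyOptions(access_mode="WRLK")
browse = replace(ConsistencyOptions(), max_staleness_secs=30.0, strength="soft")
```

Deriving the relaxed query vector from the default with `dataclasses.replace` mirrors how an application could choose different options for later sessions on the same item; `replace` re-runs validation, so an invalid combination is still rejected.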

1.2.1 Configurable Consistency Options

We now briefly describe the options supported by the configurable consistency framework, which are explained in detail in Chapter 3.

The framework provides two flavors of access mode to control the parallelism among reads and writes. The concurrent modes (RD, WR) allow arbitrary interleaving of accesses across replicas, while the exclusive modes (RDLK, WRLK) provide traditional concurrent-read-exclusive-write semantics globally [48].
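The exclusive modes follow the classic concurrent-read-exclusive-write rule: any number of RDLK sessions may overlap, but a WRLK session excludes all others. The sketch below assumes a single table that sees every exclusive session on one data item, and it shows only that rule. How RD and WR sessions interact with the locks is governed by the framework's concurrency matrix (Table 3.1) and is deliberately not modeled. `ExclusiveLockTable` is a hypothetical name.

```python
from collections import Counter


class ExclusiveLockTable:
    """Hypothetical single-item view of RDLK/WRLK sessions (CREW semantics).

    RD/WR sessions are not modeled; their interaction with locks is defined
    by the framework's concurrency matrix, not by this sketch.
    """

    def __init__(self):
        self.active = Counter()  # mode -> number of sessions currently holding it

    def can_grant(self, mode):
        if mode == "RDLK":
            return self.active["WRLK"] == 0        # readers share, but exclude a writer
        if mode == "WRLK":
            return sum(self.active.values()) == 0  # a writer excludes everyone
        raise ValueError(f"not an exclusive mode: {mode!r}")

    def acquire(self, mode):
        if not self.can_grant(mode):
            return False  # a real caller would wait or retry
        self.active[mode] += 1
        return True

    def release(self, mode):
        if self.active[mode] == 0:
            raise ValueError(f"no {mode} session to release")
        self.active[mode] -= 1
```

In a replicated setting the same rule must hold across all replicas rather than within one table, which is what "globally" means in the text.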

Divergence of replica contents (called timeliness in Table 1.1) can be controlled by limiting staleness in terms of time, the number of unseen remote updates, or both. The divergence bounds can be hard, i.e., strictly enforced by stalling writes if necessary (similar to TACT’s model [77]), or soft, i.e., enforced in a best-effort fashion without stalling any accesses.
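A minimal sketch of how a replica might check the two timeliness bounds and react under hard versus soft strength follows. It assumes the replica knows when it last synchronized and how many remote writes it has not yet seen. `ReplicaState`, `within_bounds`, and `admit_write` are hypothetical names, and the returned strings merely label the action a real implementation would take.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReplicaState:
    last_sync: float     # time (seconds) of the last synchronization with peers
    unseen_writes: int   # remote writes known to exist but not yet applied locally


def within_bounds(state: ReplicaState, now: float,
                  max_staleness_secs: Optional[float] = None,
                  max_unseen_writes: Optional[int] = None) -> bool:
    """True if the local copy meets every bound that is set (None means unbounded)."""
    if max_staleness_secs is not None and now - state.last_sync > max_staleness_secs:
        return False
    if max_unseen_writes is not None and state.unseen_writes > max_unseen_writes:
        return False
    return True


def admit_write(state: ReplicaState, now: float, strength: str, **bounds) -> str:
    """Label the action taken for a write under a hard or soft divergence bound."""
    if within_bounds(state, now, **bounds):
        return "proceed"
    if strength == "hard":
        return "stall-until-synced"              # strictly enforce the bound
    if strength == "soft":
        return "proceed-and-sync-in-background"  # best effort, never stall
    raise ValueError(f"unknown strength: {strength!r}")
```

Setting both bounds at once expresses the "or both" case: the copy must then satisfy each bound independently.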

Two types of semantic dependencies can be expressed among multiple writes (to

the same or different data items), namely, causality and atomicity. When updates are

issued independently at multiple replicas, our framework allows them to be applied (1)

with no particular constraint on their ordering at various replicas (called ‘none’), (2)

in some arbitrary but common order everywhere (called ‘total’), or (3) sequentially via

serialization (called ‘serial’).
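One common way to obtain the ‘total’ ordering, an arbitrary but common order at every replica, is to stamp each update with a Lamport logical clock and break ties by replica ID. The text does not say that Swarm uses this technique; the sketch only shows why any two replicas that hold the same set of updates compute the same order. A real system must also determine when no earlier-stamped update can still arrive before applying updates, which this sketch omits. All names are hypothetical.

```python
from dataclasses import dataclass


class LamportClock:
    """Logical clock: tick on local events, jump past any timestamp received."""

    def __init__(self):
        self.time = 0

    def tick(self):
        self.time += 1
        return self.time

    def observe(self, remote_time):
        self.time = max(self.time, remote_time) + 1
        return self.time


@dataclass(frozen=True)
class Update:
    lamport: int      # clock value when the update was issued
    replica_id: str   # issuing replica; breaks ties between equal clock values
    payload: str


def total_order(updates):
    """Order a set of updates identically at every replica holding that set."""
    ordered = sorted(updates, key=lambda u: (u.lamport, u.replica_id))
    return [u.payload for u in ordered]


# Two replicas issue updates; B sees A's update before issuing its second one.
a, b = LamportClock(), LamportClock()
u1 = Update(a.tick(), "A", "x=1")
u2 = Update(b.tick(), "B", "x=2")
b.observe(u1.lamport)
u3 = Update(b.tick(), "B", "x=3")
```

Because the sort key depends only on the updates themselves, arrival order at each replica does not matter, which is exactly the ‘total’ guarantee; ‘serial’ would instead require updates to be issued one at a time.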

When not all replicas are equally well-connected or available, different consistency options can be imposed dynamically on different subsets of replicas based on their relative connectivity. For this, the framework allows the options to be qualified with a cutoff value for a link quality metric such as network latency. In that case, consistency options are enforced only relative to replicas reachable via network links of higher quality (e.g., lower latency) than the cutoff. With this option, application instances using a replica can optimistically make progress with available data even when some replicas are unreachable due to node or network failures.
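The effect of a latency cutoff can be sketched as a filter over measured link latencies: only replicas reached via links faster than the cutoff count when enforcing consistency. The helper names below are hypothetical, and treating an unreachable replica as having infinite latency is our assumption. The second function also illustrates how such a cutoff composes with exclusive write mode: a WRLK session is granted as long as no conflicting holder lies inside the enforcement scope.

```python
from __future__ import annotations

import math


def enforcement_scope(latency_ms: dict[str, float], cutoff_ms: float) -> set[str]:
    """Replicas whose links are better (lower latency) than the cutoff.

    Consistency options are enforced only relative to these replicas;
    an unreachable replica is given an infinite latency.
    """
    return {r for r, lat in latency_ms.items() if lat < cutoff_ms}


def may_grant_exclusive_write(latency_ms: dict[str, float], cutoff_ms: float,
                              conflicting_holders: set[str]) -> bool:
    """Grant WRLK if no replica inside the scope holds a conflicting session."""
    return enforcement_scope(latency_ms, cutoff_ms).isdisjoint(conflicting_holders)


links = {"r1": 20.0, "r2": 45.0, "r3": math.inf}     # r3 is currently unreachable
print(sorted(enforcement_scope(links, cutoff_ms=50.0)))  # ['r1', 'r2']
print(may_grant_exclusive_write(links, 50.0, {"r3"}))    # True: the holder is outside the partition
```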


Finally, the framework provides control over how long a session is kept isolated from the updates of remote sessions, as well as when its updates are made ready to be visible to remote sessions. A session can be made to remain isolated entirely from remote updates (‘session’, ensuring a snapshot view of data), to apply remote updates on local copies immediately (‘per-update’, useful for log monitoring), or to apply them only when explicitly requested via an API (‘manual’). Similarly, a session’s updates can be propagated as soon as they are issued (‘per-update’, useful for chat), when the session ends (‘session’, useful for file updates), or only upon explicit request (‘manual’).
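The isolation and visibility settings can be modeled as two queues attached to a session: remote updates held back from the session's view, and local updates not yet published. The minimal sketch below assumes that in-memory lists stand in for replicas and propagation; the class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    isolation: str = "session"    # 'session', 'per-update' or 'manual'
    visibility: str = "session"   # 'session', 'per-update' or 'manual'
    view: list = field(default_factory=list)       # data as this session sees it
    held_back: list = field(default_factory=list)  # remote updates not yet applied
    unsent: list = field(default_factory=list)     # local updates not yet published
    published: list = field(default_factory=list)  # stands in for propagation to remote replicas

    def on_remote_update(self, u):
        if self.isolation == "per-update":
            self.view.append(u)          # apply immediately (e.g., log monitoring)
        else:
            self.held_back.append(u)     # 'session': keep a snapshot; 'manual': wait for refresh()

    def refresh(self):
        if self.isolation == "manual":
            self.view.extend(self.held_back)
            self.held_back.clear()

    def write(self, u):
        self.view.append(u)
        if self.visibility == "per-update":
            self.published.append(u)     # propagate as soon as issued (e.g., chat)
        else:
            self.unsent.append(u)

    def flush(self):
        if self.visibility == "manual":
            self._publish()

    def close(self):
        if self.visibility == "session":
            self._publish()              # propagate when the session ends (e.g., file updates)

    def _publish(self):
        self.published.extend(self.unsent)
        self.unsent.clear()
```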

Table 1.2 shows how several popular consistency flavors can be expressed in terms of configurable consistency options. In the proxy-based auction example described above, strong consistency can be enforced for updates by employing exclusive write mode (WRLK) sessions to ensure data integrity, while queries employ concurrent read mode (RD) sessions with relaxed timeliness settings for high query throughput. A replicated chat service needs to employ per-update visibility and isolation to force user messages to be eagerly propagated among chat servers in real time. On the other hand, updates to source files and documents are not stable until a write session ends. Hence they need to employ session visibility and isolation to ensure consistent contents. We discuss the expressive power of the framework in the context of existing consistency models in detail in Section 3.5.
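The examples above can be written down as option vectors. The sketch below is a hypothetical Python encoding: its defaults follow the options vector in the caption of Table 1.2, and each example configuration overrides only the options it changes. The 5-second staleness bound for auction queries is an arbitrary illustrative value.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CCOptions:
    # Defaults follow the options vector in the caption of Table 1.2.
    mode: str = "RD"              # RD/WR by default (whichever the session needs); RDLK/WRLK for exclusive access
    time: float = 0.0             # staleness bound in seconds
    mod: float = math.inf         # bound on unseen remote updates
    hard: bool = True             # hard vs soft divergence bounds
    semantic_deps: tuple = ()     # e.g. ("causal", "atomic")
    ordering: str = "total"       # 'none', 'total' or 'serial'
    optimistic: bool = False      # failure handling: pessimistic by default
    visibility: str = "session"   # 'session', 'per-update' or 'manual'
    isolation: str = "session"


DEFAULTS = CCOptions()

# The examples from the text; the 5-second query staleness is illustrative only.
AUCTION_UPDATE = replace(DEFAULTS, mode="WRLK")
AUCTION_QUERY = replace(DEFAULTS, mode="RD", time=5.0, hard=False)
CHAT_MESSAGE = replace(DEFAULTS, mode="WR", hard=False,
                       visibility="per-update", isolation="per-update")
SOURCE_FILE_EDIT = replace(DEFAULTS, mode="WR")   # session visibility/isolation are already the defaults
```

Because the vector is immutable, each configuration is a small, self-describing value that can be passed along when a session is opened.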

1.2.2 Discussion

At first glance, providing a large number of options (as our framework does) rather than a small set of hardwired protocols might appear to impose an extra design burden on application programmers. Programmers need to determine how the selection of a particular option along one dimension (e.g., optimistic failure handling) affects the semantics provided by the options chosen along other dimensions (e.g., exclusive write mode, i.e., WRLK). However, thanks to the orthogonality and composability of our framework’s options, their semantics are roughly additive; each option only restricts the applicability of the semantics of other options and does not alter them in unpredictable ways. For example, employing optimistic failure handling and WRLK mode together for a data access session guarantees exclusive write access for that session only within the replica’s partition (i.e., among replicas well connected to that replica). Thus, by adopting our framework, programmers do not face a combinatorial increase in the complexity of the semantics they must understand.

Table 1.2. Configurable Consistency (CC) options for popular consistency flavors. For options left unspecified, we assume a value from the options vector: [RD/WR, time=0, mod=∞, hard, no semantic deps, total order, pessimistic, session-grain visibility & isolation].

Consistency semantics | CC options | Sample applications | Existing support | Demo apps
locking rd, wr (strong consistency) | RDLK/WRLK | DB, objects, file locking | Fluid Replication [16], DBMS, Objectstore [41] | SwarmProxy, RCS, SwarmDB
master-slave wr | WR, serial | shared queue | read-only repl. (mySQL) | -
close-to-open rd, wr | time=0, hard | collaborative file sharing | AFS, Coda [38] | Swarmfs
bounded inconsistency | time=x, mod=y, hard | airline reservation, online shopping | TACT [77] | SwarmDB
MVCC rd | RD, time=0, soft, causal+atomic | inventory queries | Objectstore [41], Oracle | SwarmDB
eventual / optimistic close-to-rd | time=0, soft, optimistic, per-update isolation | personal file access | Pangaea [62], Ficus, Coda, Fluid, NFS | Swarmfs
optimistic wr-to-rd | time=x, mod=y, soft, optimistic | Directory, stock quotes | Active Directory [46] | SwarmDB
append consistency | WR, time=0, soft, none/total/serial, per-update | logging, chat, streaming, games | WebFS [74], GoogleFS | SwarmCast

To ease application design, we anticipate that middleware systems built on our framework will bundle popular combinations of options as defaults (e.g., ‘Unix file semantics’, ‘Coda semantics’, or ‘best effort streaming’) for object access, while allowing individual application components to refine their consistency semantics when required. In those circumstances, programmers can customize individual options along some dimensions while retaining the other options from the default set.

Although the options are largely orthogonal, a few of them imply others. For example, the exclusive access modes imply session-grain visibility and isolation, a hard most-current timeliness guarantee, and serial update ordering. Likewise, serial update ordering implies that updates are also totally ordered.
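Bundled defaults, per-component refinement, and the implications just described can be sketched together. In this hypothetical Python sketch, the bundle names are invented for illustration and their option values are taken from rows of Table 1.2. `normalize` forces the options implied by the exclusive modes, and `totally_ordered` treats serial ordering as a special case of total ordering.

```python
import math

# Defaults from the options vector in the caption of Table 1.2.
DEFAULTS = {"mode": "RD", "time": 0.0, "mod": math.inf, "hard": True,
            "ordering": "total", "optimistic": False,
            "visibility": "session", "isolation": "session"}

# Hypothetical bundle names; the option values come from rows of Table 1.2.
BUNDLES = {
    "close-to-open": {**DEFAULTS, "time": 0.0, "hard": True},
    "append": {**DEFAULTS, "mode": "WR", "hard": False,
               "visibility": "per-update", "isolation": "per-update"},
}


def normalize(opts: dict) -> dict:
    """Make the implications between options explicit."""
    if opts["mode"] in ("RDLK", "WRLK"):
        # Exclusive modes imply session-grain visibility and isolation,
        # a hard most-current timeliness bound, and serial update ordering.
        opts = {**opts, "visibility": "session", "isolation": "session",
                "time": 0.0, "hard": True, "ordering": "serial"}
    return opts


def totally_ordered(opts: dict) -> bool:
    return opts["ordering"] in ("total", "serial")   # serial ordering is also a total order


def session_options(bundle: str, **overrides) -> dict:
    """Start from a bundled default and refine only the listed options."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise KeyError(f"unknown options: {sorted(unknown)}")
    return normalize({**BUNDLES[bundle], **overrides})
```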

1.2.3 Limitations of the Framework

Although we have designed our framework to support the consistency semantics needed by a wide variety of distributed services, we have chosen to leave out semantics that are difficult to support in a scalable manner across the wide area or that require consistency protocols with application-specific knowledge. As a consequence, our framework has two specific limitations that may restrict application-level parallelism in some scenarios.

First, it cannot support application-specific conflict matrices that specify the parallelism possible among application-level operations [5]. Instead, applications must map their operations onto the read and write modes provided by our framework, which may restrict parallelism. For instance, a shared editing application cannot specify that structural changes to a document (e.g., adding or removing sections) can safely proceed in parallel with changes to individual sections, even though their results can be safely merged at the application level. Enforcing such application-level concurrency constraints requires the consistency protocol to track the application operations in progress at each replica site, which is difficult to do efficiently in an application-independent manner.

Second, our consistency framework allows replica divergence constraints to be imposed on data but not on application-defined views of data, unlike TACT’s conit concept [77]. For instance, a user sharing a replicated bulletin board might be more interested in tracking updates to specific discussion threads, or messages posted by her friends, than other updates. To support such requirements, views would have to be defined on the bulletin board dynamically (e.g., all messages with subject ‘movie’), and updates would have to be tracked by their effect on those views instead of on the whole board. Our framework cannot express such views precisely. It is difficult to manage such dynamic views efficiently across a large number of replicas, because doing so requires frequent bookkeeping communication among replicas that may offset the parallelism gained by replication. An alternative solution is to split the bulletin board into multiple consistency units (threads) and manage consistency at a finer grain when this is required. Thus, our framework provides alternative ways to express both of these requirements, which we believe are likely to be more efficient due to their simplicity and reduced bookkeeping.
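Splitting the board into per-thread consistency units lets a user bound divergence only for the threads she cares about. The sketch below assumes that each thread is a separate unit with its own bound on unseen remote posts (the ‘mod’ option); `ThreadedBoard` and its methods are hypothetical names.

```python
import math
from collections import defaultdict


class ThreadedBoard:
    """A bulletin board split into one consistency unit per thread."""

    def __init__(self):
        self.unseen = defaultdict(int)   # thread id -> remote posts not yet pulled
        self.mod_bound = {}              # thread id -> max unseen posts tolerated

    def watch(self, thread: str, mod: int) -> None:
        self.mod_bound[thread] = mod

    def remote_post(self, thread: str) -> None:
        self.unseen[thread] += 1

    def threads_to_refresh(self) -> list:
        # Unwatched threads default to an unbounded divergence and never force a refresh.
        return sorted(t for t, n in self.unseen.items()
                      if n > self.mod_bound.get(t, math.inf))

    def refresh(self, thread: str) -> None:
        self.unseen[thread] = 0
```

Activity in unwatched threads never triggers refreshes, so the bookkeeping stays proportional to the units a user actually bounds.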

1.2.4 Swarm

To demonstrate the practicality of the configurable consistency framework, we have designed and prototyped the Swarm middleware file store. Swarm is organized as a collection of peer servers that provide coherent file access at variable granularity behind a traditional session-oriented file interface. Applications store their shared state in Swarm files and operate on their state via nearby Swarm servers. Applications designed this way relieve their writers of the burden of implementing their own data location, caching, and consistency mechanisms. Swarm allows applications to access an entire file or individual file blocks within sessions. When opening a session, applications can specify the desired consistency semantics via configurable consistency options, which Swarm enforces for the duration of that session. Swarm files can be updated by overwriting previous contents (physical updates) or by invoking a semantic update procedure that Swarm later applies to all replicas (with the help of application plugins).
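The difference between physical and semantic updates can be sketched with a directory stored in a file. In this illustration (hypothetical names, not Swarm's actual interface), a physical update overwrites a block, whereas a semantic update is an operation that each replica re-executes through an application-supplied plugin.

```python
from __future__ import annotations

from typing import Callable, Optional

# Hypothetical plugin signature: mutate the structured state by applying one operation.
Plugin = Callable[[dict, tuple], None]


class FileReplica:
    """One replica of a Swarm-like file (an illustration, not Swarm's API)."""

    def __init__(self, plugin: Optional[Plugin] = None):
        self.blocks = {}        # physical contents, keyed by block number
        self.state = {}         # structured contents maintained by the plugin
        self.plugin = plugin

    def apply_physical(self, block: int, data: bytes) -> None:
        # Physical update: the new bytes simply overwrite the old ones.
        self.blocks[block] = data

    def apply_semantic(self, op: tuple) -> None:
        # Semantic update: every replica re-executes the operation via the plugin.
        if self.plugin is None:
            raise ValueError("semantic updates need an application plugin")
        self.plugin(self.state, op)


def directory_plugin(state: dict, op: tuple) -> None:
    """Example plugin for a directory: ('add', name, target) or ('remove', name)."""
    if op[0] == "add":
        _, name, target = op
        state[name] = target
    elif op[0] == "remove":
        state.pop(op[1], None)


# Propagating the same operations (not the resulting bytes) keeps replicas identical.
ops = [("add", "a.txt", 17), ("add", "b.txt", 42), ("remove", "a.txt")]
r1, r2 = FileReplica(directory_plugin), FileReplica(directory_plugin)
for op in ops:
    r1.apply_semantic(op)
    r2.apply_semantic(op)
print(r1.state == r2.state == {"b.txt": 42})   # True
```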

Swarm supports three distinct paradigms of shared data access: (i) whole-file access with physical updates to support unstructured variable-length data such as files, (ii) page-grained access to support persistent objects and other heap-based data structures, and (iii) whole-file access with semantic updates to support structured data such as databases and directories.

Swarm builds failure-resilient overlay replica hierarchies dynamically per file to manage large numbers (thousands) of file replicas across non-uniform networks. By default, Swarm aggressively caches shared data on demand, but dynamically restricts caching when it observes high contention. We refer to this technique as contention-aware replication control. Swarm’s caching mechanism dynamically monitors network quality between clients and replicas and reorganizes the replica hierarchy to minimize the use of slow links, thereby reducing latency and saving WAN bandwidth. We refer to this feature of Swarm as proximity-aware replica management. Finally, Swarm’s replica hierarchy mechanism is resilient to intermittent node and network failures, which are common in dynamic environments. Swarm’s familiar file interface lets applications be programmed against a location-transparent persistent data abstraction and obtain replication automatically. Applications can meet diverse consistency requirements by employing different sets of configurable consistency options. The design and implementation of Swarm and configurable consistency are described in detail in Chapter 4.
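Proximity-aware replica management and contention-aware replication control can be sketched as two small policies. Both policies below are assumptions for illustration, not Swarm's actual algorithms: a replica attaches to the lowest-latency parent and re-parents only when another replica is clearly closer (the 0.8 factor is arbitrary), and a node keeps a local copy only while remote writes remain at most half of the traffic it observes (the 0.5 threshold is arbitrary). The function names are hypothetical.

```python
from __future__ import annotations


def choose_parent(latency_ms: dict[str, float]) -> str:
    """Attach to the replica with the lowest measured latency."""
    return min(latency_ms, key=latency_ms.get)


def should_reparent(current: str, latency_ms: dict[str, float], gain: float = 0.8) -> bool:
    # Move only if another replica is clearly closer; the margin avoids
    # flapping between parents whose latencies are nearly equal.
    best = choose_parent(latency_ms)
    return best != current and latency_ms[best] < gain * latency_ms[current]


def should_cache_locally(local_accesses: int, remote_writes: int,
                         max_write_share: float = 0.5) -> bool:
    """Keep a local replica only while remote writes are not the dominant traffic."""
    total = local_accesses + remote_writes
    return total == 0 or remote_writes / total <= max_write_share
```

Under high contention, forwarding accesses to an existing replica avoids shipping every remote write to a copy that is rarely read, which is the trade-off contention-aware replication control targets.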

1.3 Evaluation

To support our claim that configurable consistency can meet the data sharing needs of