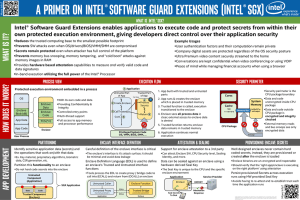

Shielding applications from an untrusted cloud with Haven

Andrew Baumann, Marcus Peinado

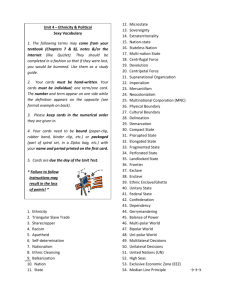
