RELATIVIZATION IN RANDOMNESS

advertisement

RELATIVIZATION IN RANDOMNESS

JOHANNA N.Y. FRANKLIN

Abstract. We examine the role of relativization in randomness through the lenses of van Lambalgen’s Theorem and lowness for randomness. Van Lambalgen’s Theorem states that the join of two

sequences is random if and only if each of the original sequences is random relative to the other; a

sequence is said to be low for randomness if it is not computationally strong enough to derandomize

any random sequence. The way in which one relativizes a randomness notion can affect the truth

of van Lambalgen’s Theorem or the class of sequences that are low for that randomness notion,

making these topics ideal approaches for examining different relativizations and thus for gaining

insight into the randomness notions we relativize.

1. Introduction

Relativization is one of the cornerstones of computability theory: it allows us to think about

sets of natural numbers not only as individual objects but in relation to each other. In this paper,

I will explore the ways in which we make use of the concept of relativization in the study of

algorithmic randomness. We begin with a basic introduction to relativization as it appears in

classical computability theory. In Section 2, we consider van Lambalgen’s Theorem, and in Section

3, we consider lowness for randomness. Finally, in Section 4, we summarize these results and

suggest some further areas for exploration.

1.1. Notation. Our notation will generally follow [13] and [45]. We will use lowercase Roman

letters such as n and m to represent natural numbers, lowercase Greek letters such as σ and τ

to represent binary strings of finite length, and uppercase Roman letters such as A and B to

represent subsets of the natural numbers. Often, we will associate a subset of the natural numbers

A with its characteristic function χA , which can be represented as the infinite binary sequence

χA (0)χA (1)χA (2) . . .. This means that we can discuss subsets of ω and infinite binary sequences

interchangeably. If we wish to consider the initial segment of length n of a binary sequence A, we

will write An, and if we want to discuss the length of a finite binary string σ, we will write |σ|.

When written without qualifiers, a “sequence” may be taken to be infinite and a “string” may be

taken to be finite.

We will work in the Cantor space, which is the probability space of infinite binary sequences. We

say that the basic open set defined by a finite binary string σ is [σ]: the set of all infinite binary

sequences extending σ. When we discuss measure in the Cantor space, we will always use Lebesgue

measure, or the standard “coin-flip” measure, and symbolize this with µ: the Lebesgue measure of

1

a basic open set [σ] is 2|σ|

, or the fraction of infinite binary sequences that begin with σ.

Date: May 31, 2015.

1

2

FRANKLIN

1.2. Relativization in computability theory. Before we consider relativization in the context of

algorithmic randomness, let us discuss it first in its most basic form: relativization of sets of natural

numbers. (For a basic reference, see [45] or Chapter 2 of [13].) The first functions we consider in

computability theory are the primitive recursive functions: those formed by the closure of the

constant function 0, the successor function, and the projection functions under the operations of

composition and primitive recursion. However, this does not allow us to consider partial functions in

general or functions associated with algorithms that involve unbounded searches. In order to do so,

we close the class of primitive recursive functions under unbounded search using the minimization

operator µ to get the class of partial recursive functions. This class can also be defined via the

λ-calculus, Turing machines, or register machines and is generally called the partial computable

functions [45]. Since all of the different approaches to computability that people have developed

thus far have resulted in the same class of functions, we adopt the Church-Turing thesis, which

states that if a function is partial recursive if and only if an informal description of an algorithm

that will compute this function can be given [6, 49].

This universality makes it reasonable to use these functions as the basic tools of computability

theory. If such a function ϕ is total, we call it simply a computable function, and a set that is the

domain of a partial computable function (or, alternately, the range of a computable function or

the empty set) is called a computably enumerable (c.e.) set. We note that since there is a natural

computable bijection between the natural numbers and the set of finite binary strings, we may

sometimes talk about a c.e. set of finite binary strings without loss of generality.

When we discuss relativized computation, though, we need one more thing: the use of an oracle.

Here, we allow the partial computable function to use an initial segment of a given set of natural

numbers A in its computation.1 Instead of saying, for instance, that ϕ(n) = m, we say that

Φ(n, σ) = m, meaning that given an input n and a finite binary string σ, Φ outputs m. Since only

a finite initial segment of a sequence A can be used in any particular computation, two infinite

sequences A and B may have the same initial segment σ and thus result in the same answer for

the functional Φ with input n but not necessarily result in the same answer for the functional Φ for

other inputs because longer initial segments may be required for those computations on which they

may not agree. Since we generally use an infinite sequence A as an oracle, we will write ΦA (n) = m

to indicate that there is an initial segment σ of A such that Φ(n, σ) = m.

This allows us to consider the relationship between two sets A and B. We say that A is Turing

reducible to B if there is a functional Φ such that ΦB (n) = χA (n) for all natural numbers n; that

is, if some algorithm can, given a natural number n and an appropriately long initial segment of

B, determine whether n is in A. We express this relationship as A ≤T B. For instance, any set

A is Turing reducible to its complement Ac : If we wish to find out whether n is in A, we look at

Ac (n + 1), which is Ac (0) . . . Ac (n). If Ac (n) = 0, n must be in A; if Ac (n) = 1, n cannot be

in A. In fact, for any set A, A ≤T Ac and Ac ≤T A. Two mutually reducible sets like these are

Turing equivalent and are said to be in the same Turing degree. Two sets in the same Turing degree

have the same computational strength since given knowledge of the first, we can know everything

about the second and vice versa. We can also compare the computational strength of two sets in

different Turing degrees: we can say that B is stronger computationally than A if A <T B (that

is, if knowledge of B allows us to compute A but not vice versa), or we can say that B and A are

incomparable if neither can compute the other (B|T A).

1We call a partial computable function that is permitted to use an oracle like this a functional.

RELATIVIZATION IN RANDOMNESS

3

Turing reducibility is actually quite weak. For instance, there is no limitation on the length of

the initial segment of B required to compute χA (n). We only know that such an initial segment

must exist. Furthermore, we do not know whether, for any given functional Φ, there is an oracle

A and an input n for which ΦA (n) does not converge. We now define two stronger reducibilities

to address these concerns, beginning with the first. If there is a functional Φ and a computable

function f such that Φ(n, B f (n)) = χA (n) for every natural number n, we say that A is weak

truth-table reducible to B and write A ≤wtt B. In this case, the only restriction on the computation

is that an initial segment of B with a length bounded computably in n must be enough to compute

χA (n). For instance, we can say that A is weak truth-table reducible to Ac for any A: we can see

from the example above that f (n) = n + 1 is an appropriate computable function.

Note that for Turing reductions and weak truth-table reductions, ΦX (n) may diverge for any X.

This leads us to an even stronger reducibility: truth-table reduction. We say that A is truth-table

reducible to B if it is weakly truth-table reducible to B via a functional Φ that converges not only

for B on every input, but for every possible oracle on every input.

We can talk about the weak truth-table degrees and the truth-table degrees just as we talked

about the Turing degrees before. It is clear that

A ≤tt B =⇒ A ≤wtt B =⇒ A ≤T B.

These different reducibilities will come into play when we discuss van Lambalgen’s Theorem in

Section 2. However, first we must discuss relativization specifically in the context of randomness.

1.3. Basic randomness concepts. Just as sets that are not themselves computable can be computed by stronger sets, random sequences can be “derandomized” if certain other sequences are

used as oracles. For instance, no sequence is random relative to itself. Furthermore, if A ≤T B,

then A is not random relative to B: B can be used to compute A and thus to predict it perfectly.

To treat this topic in proper detail, we begin by recalling the definitions of Martin-Löf randomness,

computable randomness, and Schnorr randomness, the three main types of randomness we will use

as case studies in this paper. We will present two types of definitions, one based on the idea that a

random sequence should not be predictable and the other based on the idea that a random sequence

should have no rare measure-theoretic properties. We first turn our attention to the predictability

characterization and define the type of function we will use to formalize this concept.

Definition 1.1. A martingale m is a function m : 2<ω → R≥0 such that for every finite binary

string σ,

m(σ a 0) + m(σ a 1)

m(σ) =

.

2

In other words, a martingale is a fair betting function: the amount you lose if you are wrong is

equal to the amount you win if you are right. We say that a martingale is c.e. if the values m(σ) are

uniformly left-c.e. real numbers (that is, computably approximable from below) and computable if

the values m(σ) are uniformly computable real numbers (that is, computably approximable from

both above and below).

Now we can discuss the “winning conditions” for a martingale m on a sequence: we say that m

succeeds on a sequence A if

lim sup m(An) = ∞.

n

4

FRANKLIN

This tells us that for any real number r, we can find an n such that m(An) ≥ r, so m succeeds on

A if the amount m can win by betting on A is unbounded.

Now we can define the three randomness notions mentioned above. Recall that an order function

is computable, nondecreasing, and unbounded.

Definition 1.2. Let A be an infinite binary sequence.

(1) A is Martin-Löf random if no c.e. martingale succeeds on it.

(2) A is computably random if no computable martingale succeeds on it.

(3) A is Schnorr random if there is no computable martingale m such that for some order

function h, m(An) ≥ h(n) for infinitely many n.

In short, Martin-Löf and computable randomness are distinguished by the type of martingale that

will not succeed on such a sequence, and a Schnorr random sequence is one on which a computable

martingale may succeed but not very quickly. We call the classes of Martin-Löf, computable, and

Schnorr random sequences ML, Comp, and Schnorr, respectively. We note that Schnorr randomness

is a strictly weaker notion than computable randomness and that computable randomness is a

strictly weaker notion than Martin-Löf randomness (so Schnorr ⊃ Comp ⊃ ML).

The other characterization that we will mention at this point is measure-theoretic.

Definition 1.3. A Martin-Löf test is a sequence of uniformly c.e. sets hVi i where each Vi is a set

of finite binary strings and µ([Vi ]) ≤ 21i for each i. An infinite binary sequence A is Martin-Löf

random if and only if for every Martin-Löf test hVi i, A 6∈ ∩i [Vi ].

In other words, a sequence is Martin-Löf random if it has no properties that can be “captured”

by a Martin-Löf test.

While the test definition of computable randomness is too complicated to give here [9], Schnorr

randomness can be defined similarly. A Schnorr test is a Martin-Löf test with an additional

requirement: instead of saying that µ([Vi ]) ≤ 21i for each i, we require that µ([Vi ]) = 21i for each

i. This leads to a more effective notion, since this means that we can tell whether σ is in Vi for a

1

of 21i . If σ has been enumerated

given i by enumerating Vi until the measure of [Vi ] is within 2|σ|

into Vi at this point, clearly it is in Vi ; otherwise, there is not enough space for it to go in later and

σ will never enter Vi . As for Martin-Löf randomness, a sequence A is Schnorr random if and only

if for every Schnorr test hVi i, A 6∈ ∩i [Vi ].

One difference between Martin-Löf randomness and computable and Schnorr randomness is the

presence or absence of a universal martingale or test. We note without ceremony that there is a

universal Martin-Löf test: a test hUi i such that for every Martin-Löf test hVi i, ∩i [Vi ] ⊆ ∩i [Ui ].

In short, if we are trying to determine whether a sequence is Martin-Löf random, we need only

check to see whether it is contained in the intersection of the universal test. However, there is no

universal test for Schnorr randomness. In fact, given any Schnorr test, we can find a computable

sequence that it does not capture. Since no computable sequence is Schnorr random, universality

fails in a very strong way indeed. The same situation holds for martingales: there is a universal

martingale for Martin-Löf randomness but not for computable or Schnorr randomness.

When we relativize a martingale to a sequence B, we permit the use of B as an oracle in

the calculation of the martingale m. Instead of m being c.e. or computable, it would be c.e. or

computable in B. For instance, we could build a martingale computable from B that succeeds on

B by betting its entire capital on the correct bit of B at each place.

RELATIVIZATION IN RANDOMNESS

5

When we relativize a test for randomness to a sequence B, we simply permit the use of B as

an oracle in the enumeration of the test components Vi . For instance, if we want to build a test

that captures B itself, we can use B as an oracle and define ViB to be {B(0) . . . B(i − 1)}. This

means that each ViB will consist of an initial segment of B of length i, and clearly we will have

B ∈ ∩i [ViB ]. We could also build a test that captures the sequences that were computable from B,

for instance. We can write the class of sequences that are Martin-Löf (or Schnorr) random relative

to B as MLB (or SchnorrB ). Note that for Martin-Löf randomness, defining MLB is as simple as

calculating hUiB i, but it is more complicated for Schnorr randomness because we must consider

each Schnorr test individually.

This gives us yet another way of comparing sequences. Turing reducibility allows us to compare

their computational strength; relative randomness allows us to compare their randomness content.

In fact, several reducibilities have been defined based on the idea of relative randomness (see Chapter

9 of [13]). However, for the moment, we will confine ourselves to a discussion of the consequences

of a sequence being Martin-Löf random, computably random, or Schnorr relative to another. Our

main topics of discussion will involve the following points:

• If two sequences are combined in a computable way, when does this result in a random

sequence?

• Which sequences are so information-poor that they are incapable of derandomizing any

random sequence?

The first of these questions will be addressed in Section 2 and the second in Section 3. At some

points, I may simply write “random.” If I do so, this means that what I am writing is true regardless

of which of these three randomness notions is under consideration.

2. van Lambalgen’s Theorem

We begin by considering what it means to combine two sequences. Our plan is to do so in such

a way that the resulting sequence will computably encode both of the original sequences. We say

that the join of two sets A and B is the set

A ⊕ B = {2n | n ∈ A} ∪ {2n + 1 | n ∈ B}.

This set codes A in its even bits and B in its odd bits. However, there is no reason we have to

partition the bits of the join based on their parity: we could choose any computable subset F of ω

and let the nth F -place be a 1 if n ∈ A and 0 otherwise and the nth F c -place be a 1 if n ∈ B and

0 otherwise. In cases where we do not use the traditional odd-even join and instead decompose ω

into F and F c for some other F , we will write A ⊕F B. By the Church-Turing thesis, we can see

that A ≤T A ⊕ B, B ≤T A ⊕ B, and A ⊕ B ≡T A ⊕F B for every computable set F .

Now we recall the first question presented above: If two sequences are combined in a computable

way, when does this result in a random sequence? We can see immediately that it need not always

do so. It is clearly necessary that both sequences be random themselves. However, that alone is

not enough to guarantee the randomness of their combination. Even if A is a random sequence,

A⊕A won’t be random because half of the bits will be predictable from the bits immediately before

them. What if we take A ⊕ B for two different random sequences A and B? This may still not

be random. For instance, suppose that A ≤T B. Since B can compute A, it may be possible to

predict the bits of A in A ⊕ B from the bits of B that have already appeared, which will result in

the same problem as before.

6

FRANKLIN

This question was answered by van Lambalgen for Martin-Löf randomness in 1990 [50]. Intuitively, the answer to this question for Martin-Löf randomness is precisely what it should be. For

A ⊕ B to be Martin-Löf random, not only do both A and B have to be Martin-Löf random, they

must be random relative to each other. We state this result formally here:

van Lambalgen’s Theorem. [50] The following statements are equivalent.

(1) A is Martin-Löf random relative to B and B is Martin-Löf random relative to A.

(2) A ⊕ B is Martin-Löf random.

The claim that (1) implies (2) is known as the “hard” direction and the claim that (2) implies

(1) is known as the “easy” direction.

To prove the “easy” direction, we simply assume that B is not Martin-Löf random relative to

A and that thus B ∈ ∩i UiA and use this to produce a Solovay test2 that contains A ⊕ B. This

contradicts the assumption that A ⊕ B is Martin-Löf random.

One of the standard proofs of the “hard direction” is by Nies [10]. We assume for a contradiction

that A ⊕ B is not Martin-Löf random and, from a Martin-Löf test containing A ⊕ B, produce a

sequence of sets hSi i. We then construct a Martin-Löf test based on these Si s. If this test contains

A, then we have a contradiction because A cannot even be Martin-Löf random unrelativized; if it

does not, we can use this information about A to construct an A-Martin-Löf test that contains B.

van Lambalgen’s Theorem has a very interesting porism. The proof shows us that A ⊕ B is

Martin-Löf random precisely when A is Martin-Löf random and B is Martin-Löf random relative

to A. However, the roles of A and B in this proof are interchangeable, so we can draw the following

conclusion:

Porism 2.1. A is Martin-Löf random relative to B if and only if B is Martin-Löf random relative

to A.

The argument is rather straightforward. If A is Martin-Löf random and B is Martin-Löf random

relative to A, then A ⊕ B is Martin-Löf random. Since A ⊕ B is Martin-Löf random, B ⊕ A must

be Martin-Löf random, and therefore B must be Martin-Löf random and A must be Martin-Löf

random relative to B.

In short, if two sequences are Martin-Löf random and the first is random relative to the second,

the second must also be random relative to the first. This sort of symmetry is quite surprising and

very pleasing.

However, we should consider van Lambalgen’s Theorem in the context of not only Martin-Löf

randomness but also computable and Schnorr randomness. For a long time, it was assumed that the

“hard” direction of van Lambalgen’s Theorem was also true for computable and Schnorr randomness

“with essentially the same proof” (p. 276 of [13]). However, no formal proof of either statement was

given until 2011, when Franklin and Stephan proved that the “hard” direction holds for Schnorr

randomness.

Theorem 2.2. [20] If A is Schnorr random and B is Schnorr random relative to A, then A ⊕ B

must be Schnorr random.

Their proof, like Nies’s for the Martin-Löf case, involves supposing that A is Schnorr random, B

is A-Schnorr random, and A ⊕ B is not Schnorr random for a contradiction and then considering

2A Solovay test gives us another measure-theoretic characterization of Martin-Löf randomness [46].

moment, simply note that anything contained in a Solovay test is not Martin-Löf random.

For the

RELATIVIZATION IN RANDOMNESS

7

two separate cases. However, unlike Nies’s proof, they use martingales instead of the test characterization. They use a computable martingale that succeeds on A ⊕ B in the sense of Schnorr to

construct a set S of lengths of strings on which this martingale has values above a certain level. If

there are infinitely many lengths in S with a certain technical property, then A can be shown to be

not Schnorr random; if there are finitely many, then B can be shown to be not A-Schnorr random.

Miyabe and Rute have an alternate proof of this result in [39] that uses integral tests.3 They

begin with a Schnorr integral test witnessing that A ⊕ B is not Schnorr random and use this to

construct a Schnorr integral test relative to A witnessing that B is not Schnorr random relative to

A.

No such proof is known for computable randomness, so the following question is still open:

Question 2.3. If A is computably random and B is computably random relative to A, must A ⊕ B

be computably random?

On the other hand, it has long been known that the “easy” direction of van Lambalgen’s Theorem

does not hold for Schnorr or computable randomness; namely, that there is a Schnorr (computably)

random sequence A ⊕ B such that at least one of A and B is not Schnorr (computably) random

relative to the other. While the first result to this effect appears in [35], the following argument

appearing in [41], due to Kjos-Hanssen, is simpler.

A high Turing degree is a Turing degree with an element that wtt-computes a function that

dominates every computable function, and a minimal Turing degree is one with no degree strictly

between itself and 0. The Cooper Jump Inversion Theorem states that a minimal high Turing

degree exists [7], and every high Turing degree contains a computably random sequence A ⊕ B [42].

Since A and B are Turing computable from A ⊕ B and A ⊕ B belongs to a minimal degree, A and

B must either be in the same Turing degree as A ⊕ B or computable. Since no random sequence

is computable, they must both be in the same Turing degree as A ⊕ B. However, this means that

A ≡T B, so A and B are mutually computable and therefore neither of them is computably random

relative to the other.

Since every high degree contains a Schnorr random sequence that is not computably random

[42], the above argument holds for Schnorr randomness as well.

Additional results have been obtained in this area. Yu proved that this direction of van Lambalgen’s Theorem fails for computably random sequences bounded below 00 by a c.e. set [51], and

Franklin and Stephan proved that every high degree contains a computably random sequence for

which van Lambalgen’s Theorem fails for both computable and Schnorr randomness [20].

Franklin and Stephan’s proof in particular sheds light on the reason that a Schnorr or computably

random sequence does not necessarily decompose into two mutually Schnorr or computably random

sequences. They begin with an arbitrary high degree and a set A in it that wtt-computes a function

that dominates all computable functions. As a preliminary, they use this dominating function

mentioned above to construct a martingale m that subsumes all computable martingales; that is,

if any computable martingale succeeds on a sequence, m will too. They then construct a sequence

B that is contained in that high degree on which m does not succeed. This sequence will therefore

be computably random.

3An integral test is a lower semicomputable function on 2ω whose integral is ≤ 1 (in the case of an Martin-Löf

integral test) or equal to 1 (in the case of a Schnorr integral test) [33, 37].

8

FRANKLIN

To ensure that van Lambalgen’s Theorem fails for B, they construct it in finite initial segments,

alternating two kinds of steps in the construction. In the first kind of step, they extend the current

finite segment of B by another that has two properties:

(1) m does not increase very much on this new part of B and

(2) this new part of B codes an initial segment of the set A.

The first condition ensures that B is not computably or Schnorr random itself; the second

ensures that A ≤T B. The rest of the construction makes it clear that B ≤T A: a martingale

that is computable from A determines B, and all other aspects of the construction are themselves

computable.

In the second kind of step, the current finite initial segment σ of B is extended by a single bit

that can be determined from σ. The locations of these bits can be calculated computably, which

determines the way the sequence is determined for the join: the locations of these bits form the

computable set F . If B is separated into two pieces, one comprised of the bits at locations in F

and one comprised of the bits at locations in F c , we get two infinite binary sequences. We call the

sequence formed by the F -bits X and the sequence formed by the F c -bits Y . Clearly, X ⊕F Y = B.

However, X ≤T Y , so X is not computably random relative to Y . In fact, a slight additional

argument can be used to show that X is not even Schnorr random relative to Y (this argument is

necessary because X can still be Schnorr random relative to Y if X is computable from Y as long

as the computation of X from Y is very slow).

Now we can develop our intuition for the ways in which this direction of van Lambalgen’s

Theorem can fail for computable or Schnorr randomness. Suppose that we have a computably

random sequence B that can be written as X ⊕ Y , where X is not computably random relative to

Y . This means that there must be a computable martingale m0 relative to Y that succeeds on X.

How, then, could that martingale not be converted to a computable martingale that would succeed

on X ⊕ Y ? There are two possible ways. The first is the simplest: it may not be possible to define

such a martingale on X ⊕ Z for every sequence Z. If that happens, then the result will not be a

computable martingale. The second is inherent in the argument above: perhaps m0 would have to

see too many bits of Y before betting on a bit of X. In Franklin and Stephan’s proof, their choice

of F guarantees that there is a computable bound on the amount of Y that must be seen before a

given bit of X is determined. If no such bound exists, a computable martingale cannot be found.

This situation is reminiscent of the difference between Turing reducibility and truth-table reducibility: the conditions for success mirror the additional requirements for a Turing reduction to

be a truth-table reduction. This leads us to consider a new question. If we consider a different

reducibility, can we reformulate van Lambalgen’s Theorem in such a way that this direction would

hold for computable randomness or for Schnorr randomness? It turns out that the answer is yes,

that van Lambalgen’s Theorem is indeed sensitive to the reducibility used in the relativization of

these randomness notions.

It had been clear for some time that computable and Schnorr randomness behaved much more like

each other than like Martin-Löf randomness. As previously mentioned, there is a universal MartinLöf test, but no universal test for computable or Schnorr randomness. Martin-Löf randomness

can be characterized simply in terms of predictability, measure, or Kolmogorov complexity (to be

discussed in Section 3); the definitions are more complicated for the other two. The ways these

randomness notions behave with respect to van Lambalgen’s Theorem only confirm this difference.

RELATIVIZATION IN RANDOMNESS

9

It is intuitive to see why this should be so. Martin-Löf randomness is based in c.e. notions.

The martingales are c.e. in the predictability formulation, and the test components need only be

approximable from below. Computable randomness and Schnorr randomness, on the other hand,

are based in computable notions. The martingales involved must be computable, and the test

components must be approximable from both above and below.

This leads us to draw an analogy between these randomness notions and the different reducibilities discussed in Section 1. Martin-Löf randomness seems to be analogous to Turing reducibility:

partial computable functions need not be defined on all inputs. Computable and Schnorr randomness, on the other hand, seem to be analogous to tt-reducibility, in which every reduction is total for

every oracle. These analogies have led several people to consider the relationship between Schnorr

randomness and tt-reducibility.

Franklin and Stephan were the first to do this. In a paper that will be discussed further in

Section 3, they showed that two ways of being far from Schnorr random that seemed very different

when Turing reducibility was considered actually coincide when tt-reducibility is used instead [19].

To relativize Schnorr randomness to a sequence A, instead of requiring that the martingale m be

Turing computable from A, they used the stronger condition that m ≤tt A. While they could have

chosen to relativize the bound function as well, they argued that the end result is the same and

opted for the simpler definition.

Miyabe argued in [36] that the fact that van Lambalgen’s Theorem does not hold for Schnorr

randomness suggests that the standard relativization for Schnorr randomness is not the most appropriate one. In [36] and [39], Miyabe (and then Miyabe and Rute) introduced variants on Schnorr

randomness and computable randomness in which the relativizations were based on truth-table

reductions rather than Turing reductions. Miyabe called the Schnorr randomness version “truthtable Schnorr randomness” in [36], and he and Rute changed the terminology to “uniformly relative

Schnorr randomness” in [39], where they prove that this is equivalent to Franklin and Stephan’s

notion in [19].

In Section 4 of [36], Miyabe claimed a proof that van Lambalgen’s Theorem holds for uniformly

relative Schnorr randomness that closely parallels the standard proof for Martin-Löf randomness

mentioned previously. However, an unwarranted assumption was made in this proof: it had been

assumed that, given a Schnorr test relativized to A, hViA i, the measure of ViA could be computed

uniformly from A, a code for Vi , and the measure of Vi . This is corrected in [39] with a new proof

using integral tests. Furthermore, the particular assumption that had been made sheds light on

the reason that van Lambalgen’s Theorem does not hold for Schnorr randomness with the standard

relativization: this type of computation may not allow us to distinguish between two sets with very

different measures provided that the difference in measure results from a small “gap” [39].

In addition, as mentioned above, they provide a remarkably short and elegant proof of the

“hard” direction of van Lambalgen’s Theorem for standard Schnorr randomness and then show the

following result for uniformly relative Schnorr randomness.

Theorem 2.4 (Theorem 4.7, [39]). A ⊕ B is Schnorr random if and only if A is Schnorr random

and B is Schnorr random uniformly relative to A.

The proof of the “easy” direction is given in [36]; the proof of the “hard” direction relies on

a technical lemma. We assume that A is Schnorr random and A ⊕ B is not and take a Schnorr

integral test t witnessing that A⊕B is not Schnorr random. We use this test to construct a uniform

10

FRANKLIN

Schnorr integral test tA witnessing that B is not Schnorr random uniformly relative to A. This test

is striking in its simplicity: for each X, we set tX (Y ) = t(X ⊕ Y ).

Miyabe and Rute also address the situation for computable randomness. While they do not

prove a theorem precisely parallel to Theorem 2.4, they are able to show a slightly weaker theorem

using a relatively traditional martingale approach:

Theorem 2.5 (Theorem 5.3, [39]). A ⊕ B is computably random if and only if A is computably

random uniformly relative to B and B is computably random uniformly relative to A.

To show the “hard” direction, we take a martingale m witnessing that A ⊕ B is not computably

A

random and use it as in the proof of Theorem 2.4 to construct two martingales mB

1 and m2 that

mimic the behavior of m on X ⊕ B and A ⊕ X, respectively. Then we show that either mB

1

succeeds on A or mA

succeeds

on

B.

The

“easy”

direction

is

proven

in

[36]

using

the

test

definition

2

of computable randomness and showing that if A ⊕ B is computably random, then B must be

computably random uniformly relative to A.

The authors noted that this theorem has an interesting corollary that shows immediately that

computable randomness differs from uniformly relative computable randomness. As previously

mentioned, several people have shown that there are two computably random sequences whose join

is computably random that are not computably random relative to the other. However, Theorem

2.5 shows that the two computably random sequences in question must still be computably random

uniformly relative to each other.

Miyabe and Rute also note that uniform relativization and the standard relativization coincide

for Kolmogorov-Loveland randomness and Martin-Löf randomness [39]. This, when combined with

the facts presented above, suggests to them that uniform relativization is the proper relativization

to consider for all randomness notions: for some randomness notions, uniform relativization does

not affect the results obtained, and for Schnorr randomness and computable randomness, theorems

that ought to be true become true in the context of uniform relativization. Later, Kihara and

Miyabe would show that van Lambalgen’s Theorem holds for Kurtz randomness when uniformly

relativized but not under the standard relativization, lending more support to this statement [26].4

3. Lowness for randomness

Now we consider the second question asked at the end of Section 1: Which sequences are so

information-poor that they are incapable of derandomizing any random sequence? Our instinct is

to say that that this will depend on the type of randomness we are considering. We will begin by

discussing this type of computational weakness, called lowness, within the context of other areas

of computability theory and then show how it developed within randomness. In addition to the

references to the original papers given below, a more thorough survey of lowness for randomness

notions can be found in [16].

Lowness is one of the simplest contexts in which we can discuss relativization. We begin with a

relativizable class C and say that a set is low for C if the class generated using the set as an oracle

is precisely the same as the class generated using no oracle at all, in other words, if C A = C, and we

denote the sets that are low for C by Low(C). The term “lowness” was first introduced in 1972 [44]

and applied to the class of Turing functionals. In other words, a set A is low if the Turing jump

of A was equivalent to the Turing jump of 0. Over the past four decades, this notion has been

4A sequence A is Kurtz random if it is contained in every conull Σ0 subclass of 2ω [31].

1

RELATIVIZATION IN RANDOMNESS

11

generalized to other areas such as computational learning theory [43] and computable structure

theory [18] but has been most thoroughly developed in the context of algorithmic randomness. For

instance, randomness is the only context in which lowness for pairs of relativizable classes has ever

been discussed.

The concept of lowness for pairs, first introduced in [29], is perhaps best illustrated by an

example. We already know that every Martin-Löf random sequence is Schnorr random, that is,

that ML ⊂ Schnorr. If a sequence A is low for Schnorr, we know that SchnorrA = Schnorr and

thus that ML ⊂ SchnorrA . However, it may be possible to find a sequence B that is not low for

Schnorr such that ML ⊆ SchnorrB . Any sequence B for which ML ⊆ SchnorrB is said to be in

Low(ML, Schnorr).

We generalize this example to the more general concept of lowness for pairs: we say that A is in

Low(C, D) if DA still contains C. We note that Low(C, C) is the same as Low(C) and that it makes

no sense to consider Low(C, D) unless C ⊆ D: if C is not a subset of D, then it can certainly never

b ⊆ D, we will have

be a subset of DA . We can also see that, given a chain of classes C ⊆ Cb ⊆ D

b

b

Low(C, D) ⊆ Low(C, D), a fact that will often be useful in this section.

Which sequences, then, can belong to Low(C) when C is a class of random sequences? We begin

by observing that for any A, C A will necessarily be a subclass of C: if A is computationally strong

enough to remove even one element of C, then we will have C A ⊂ C. For instance, if we take A to

be a Martin-Löf random sequence, it cannot be low for the class ML because A belongs to ML but

not to MLA .

We will begin by discussing the class Low(ML). Thereafter, we will discuss lowness for pairs

of notions grouped by the last entry in the pair Low(−, C), as the proofs and results tend to be

similar when the last entry in the pair is held constant. Finally, we will discuss some recent work

by Kihara and Miyabe in which they investigate lowness for pairs of randomness notions under

uniform relativization.

Just as Martin-Löf randomness is generally considered to be the gold standard for randomness

notions, Low(ML) is the most robust lowness class. When a sequence is low for a class C, it is, in

the sense of relativization, very far from being in C. However, there are other ways of being “far

from random,” and in the context of Martin-Löf randomness, all of these ways coincide with being

low. Several of them are based on a third characterization of randomness based in the idea that a

random sequence should be difficult to describe. Kolmogorov complexity provides us with a way

to calculate this difficulty of description for a finite binary string.

Definition 3.1. A prefix-free machine is a function M : 2<ω → 2<ω such that the domain of M is

prefix free. We say that the prefix-free Kolmogorov complexity of a string σ relative to a prefix-free

machine M is

KM (σ) = min{|τ | | M (τ ) = σ}.

A computable measure machine is a prefix-free machine whose domain has a computable measure

[12].

We note without ceremony that there is a universal prefix-free machine U ; if we use such a

machine in a Kolmogorov complexity calculation, we simply write K instead of KU . Levin [32]

and Chaitin [5] defined a sequence A to be random if there is a constant c such that for every n,

K(An) ≥ n − c. This is equivalent to Martin-Löf randomness [5], and it is this notion in which

the following properties are based. (There is a similar characterization of Schnorr randomness

using prefix-free Kolmogorov complexity that requires the use of computable measure machines.)

12

FRANKLIN

The properties in the first pair are truly lowness properties; the properties in the second pair are

based on the notion that a sequence is far from random if it is no more difficult to describe than a

computable sequence.

Definition 3.2. Let A be a sequence.

(1) A is low for K if there is some c such that for all σ, K(σ) ≤ K A (σ) + c (unpublished, from

Muchnik).

(2) A is low for computable measure machines if for every A-computable measure machine M ,

there is a computable measure machine M 0 and a constant csuch that for all σ, KM 0 (σ) ≤

KM (σ) + c [8].

Definition 3.3. Let A be a sequence.

(1) A is K-trivial if there is some c such that for all n, K(An) ≤ K(0n ) + c [4].

(2) A is Schnorr trivial if for every computable measure machine M , there is a computable

measure machine M 0 and a constant c such that for all n, KM 0 (An) ≤ KM (0n ) + c [9].

In each of these definitions, the first item corresponds to a notion for Martin-Löf randomness

and the second to a notion for Schnorr randomness. Neither lowness for a type of machine nor

triviality has a natural analogue for computable randomness since computable randomness has no

known natural definition in terms of Kolmogorov complexity. The following two properties also

indicate being “far from random.” In each definition, we can substitute “Schnorr” or “computable”

for “Martin-Löf” to obtain the corresponding definitions for the other two types of randomness.

Definition 3.4. Let A be a sequence.

• A is low for Martin-Löf tests if for every Martin-Löf test relative to A hViA i, there is an

unrelativized Martin-Löf test hTi i such that ∩i ViA ⊆ ∩i Ti .

• A is a base for Martin-Löf randomness if there is a sequence B ≥T A such that B is

Martin-Löf random relative to A.

The question is now how these ways of being far from random relate to lowness for randomness.

If they coincide for a given relativization, it suggests that the chosen relativization is a sensible one.

It is straightforward to see that lowness for Martin-Löf tests is equivalent to lowness for MartinLöf randomness since there is a universal Martin-Löf test. Being low for K, being K-trivial, and

being a base for Martin-Löf randomness are also equivalent to lowness for Martin-Löf randomness,

but more work is required to show these facts. Nies proved the equivalence of lowness for K and

lowness for Martin-Löf randomness in [40]. This paper also contains a proof by Nies and Hirschfeldt

that K-triviality and lowness for K are equivalent. We will begin by discussing these two results;

afterwards, we will discuss Hirschfeldt, Nies, and Stephan’s proof of the equivalence of K-triviality

and being a base for Martin-Löf randomness from [24].

It is relatively straightforward to see that lowness for K implies lowness for Martin-Löf randomness: since ML can be defined in terms of Kolmogorov complexity K, MLA can be defined in

terms of K A , so every sequence that is low for K is low for Martin-Löf randomness. The proof that

lowness for Martin-Löf randomness is equivalent to lowness for K is most easily done by considering

two reducibilities: ≤LK and ≤LR .

Definition 3.5. Let A and B be sequences. We say that A ≤LK B if for all σ and some c,

K B (σ) ≤ K A (σ) + c and that A ≤LR B if MLB ⊆ MLA .

RELATIVIZATION IN RANDOMNESS

13

Both of these reducibilities let us compare the derandomization abilities of two sequences: in

each case, A is reducible to B if B can derandomize at least as much as A in the relevant context.

Since Martin-Löf randomness can be defined in terms of Kolmogorov complexity, we can see that

A ≤LK B implies A ≤LR B. Kjos-Hanssen, J. Miller, and Solomon showed that LR-reducibility

implies LK-reducibility, so we have the reverse direction as well [28].

We now turn our attention to K-triviality. Showing that lowness for K implies K-triviality is

relatively straightforward [40]: given a constant c0 witnessing A’s lowness for K and a universal

prefix-free machine U , we simply build a universal prefix-free machine M such that for every X

and σ, M X (σ) = X|U (σ)| whenever this is defined. If a sequence A is low for K, this allows us

to first pass from information about the complexity the unrelativized U gives an initial segment of

A to the complexity the A-relativized M gives it to the complexity of a string of 0s of the same

length for only the cost of a constant.

Showing that K-triviality implies lowness for K, though, is far more difficult and makes use of

the “golden run” method.

Theorem 3.6. [40] Every sequence that is K-trivial is low for K.

The proof of this result is rather complicated, so we will simply give an idea of the ingredients

of the proof and how they fit together.

We begin with a computable approximation to our K-trivial A. Our primary tools are two

Kraft-Chaitin sets L and W .5 L will allow us to use A’s K-triviality in our proof, and W will

witness A’s lowness for K.

The construction involves a tree of “runs” of procedures. At each branching point, we try to

ensure that W generates a prefix-free machine that will witness A’s lowness for K. The complications arise when A’s approximation changes. We cannot simply compensate for this change every

time; if we do, L will be too large. Therefore, we refuse to allow an axiom to enter L until it is

“approved” at a certain level of the tree.

Our tree will have 2k − 2 levels at each stage for a k calculated in advance from the index for

the machine used and a constant witnessing A’s K-triviality. The root procedure is Pk , which

calls several procedures of type Qk−1 , each of which calls Pk−1 , and so on. Each procedure has a

certain measure as its goal, and the run of each procedure enumerates a set. The only way this

enumeration will halt is if it reaches its goal or if it is cancelled by a higher-level run. Pk ’s goal is

the enumeration of a measure 1 set Ck . If this is never achieved, there is a run of another procedure,

called the “golden run,” which never returns, although all its subprocedures either return or are

cancelled. In general, if the relevant approximation to A changes quickly, Pi can add an axiom to

its Kraft-Chaitin set immediately and cancel one of the Qi s; if that Qi returns a set D, Pi will wait

for the appropriate approximation to A to change and, if it does, add D to its own Kraft-Chaitin

set.

We establish a “garbage quota” for L to account for changes in the approximation to A that

occur after we have committed some measure to an earlier version. When runs are cancelled, they

contribute to this garbage quota for L. By careful balancing of the measure goals and the garbage

quotas, we ensure that L will be a Kraft-Chaitin set. Furthermore, we can see that there is a golden

run of some procedure by assuming that every run of every procedure is either returned or cancelled

and obtaining a contradiction. We then use this golden run to enumerate the Kraft-Chaitin set W

5A Kraft-Chaitin set is a subset of ω × 2<ω with bounded measure that allows us to build a prefix-free machine

with a certain targeted property. The elements of a Kraft-Chaitin set are called “axioms.”

14

FRANKLIN

witnessing that A is low for K. Our previous balancing of measure goals and garbage quotas will

ensure that the measure of W is small enough, and the way in which we choose axioms to include

will ensure that K(τ ) is no bigger than K A (τ ) plus some constant.

At this point, we have shown that lowness for Martin-Löf randomness, lowness for Martin-Löf

tests, lowness for K, and K-triviality are all equivalent. We now turn our attention to the last

property: being a base. Kučera observed that the Kučera-Gács Theorem [22, 30] can be applied

to show that if A is low for Martin-Löf randomness, then it is a base for Martin-Löf randomness.

Hirschfeldt, Nies, and Stephan completed the proof of this equivalence:

Theorem 3.7. [24] If a sequence is a base for Martin-Löf randomness, then it is K-trivial.

Here, we fix a sequence B ≥T A that is A-Martin-Löf random and enumerate a Kraft-Chaitin set

Ln for each n ∈ ω in such a way that one of them will witness A’s K-triviality. An A-Martin-Löf test

is built from uniformly Σ01 classes with certain technical properties, and the Ln are defined based

on these Σ01 classes. Therefore, all the mentioned characterizations of being far from Martin-Löf

random coincide using the standard relativization.

We now turn our attention to classes of the form Low(−, Comp). Nies proved the following

results in [40]. Interestingly, the characterization of Low(ML, Comp) is necessary for his proof of

the characterization of Low(Comp).

Theorem 3.8. [40] A sequence A is in Low(ML, Comp) if and only if A is low for K.

We note that since ML ⊆ ML ⊆ ML ⊆ Comp, Low(ML) ⊆ Low(ML, ML) ⊆ Low(ML, Comp).

Therefore, we simply need to show that any element of Low(ML, Comp) is low for K.

This proof uses the martingale characterization of computable randomness: we construct a

martingale functional L such that A is low for K if LA only succeeds on sequences that are not

Martin-Löf random.6 Rather than build L directly, we set L to be the sum of the martingale

functionals Ln that we actually construct.

As before, we build a Kraft-Chaitin set W witnessing that such an A must be low for K. We use

an auxiliary set R, a c.e. open subset of the Cantor space with measure less than one containing

all sequences that are not Martin-Löf random. This set R is used to ensure that W is truly a

Kraft-Chaitin set and has measure no greater than 1: we wait to see if a certain set enters R before

committing a certain axiom to W . As we carry out this procedure to construct our W , we build

Ln by choosing certain strings that may be initial segments of non-Martin-Löf random sequences

and ensuring that all sequences of a certain type have a high value on those strings. If this happens

infinitely often, then L will succeed on these sequences.

We now turn our attention to Low(Comp). Since ML ⊆ Comp ⊆ Comp ⊆ Comp, Low(Comp) ⊆

Low(Comp, Comp) ⊆ Low(ML, Comp). A result of Bedregal and Nies states that every element of

Low(Comp) is hyperimmune free [2]. Since every sequence that is low for K is ∆02 , every element

of Low(Comp) must be ∆02 , and since the only hyperimmune-free ∆02 sequences are the computable

sequences, Low(Comp) is simply the computable sequences.

Although we cannot discuss any analogue to K-triviality or lowness for K for computable randomness, the bases for computable randomness have been partially characterized with the following

two results. Recall that a sequence is DNC if it computes a total function f such that f (e) is never

equal to Φe (e) for any e and that a Turing degree is PA if it can compute a complete extension of

Peano Arithmetic.

6A martingale functional is a Turing functional that is a martingale for every oracle.

RELATIVIZATION IN RANDOMNESS

15

Theorem 3.9. [24] Every non-DNC ∆02 sequence is a base for computable randomness, but no

sequence in a PA degree is.

Corollary 3.10. [24] An n-c.e. sequence is a base for computable randomness if and only if it is

Turing incomplete.

To show that a non-DNC ∆02 sequence is a base for computable randomness, we take such a

sequence A and construct a generic sequence G such that (A ⊕ G)0 ≡T A00 . This sequence is used

as an oracle to build a Z ≥T A that is A-computably random. The other half of this theorem is

proven by noting that a sequence A with PA degree will compute a sequence B with PA degree

such that A is PA relative to B. We then show that if there is a sequence computing A that is

A-computably random, we can conclude that B is both K-trivial and not K-trivial and derive a

contradiction.

The proof of the corollary uses a theorem in [25] that states that a Turing incomplete n-c.e.

sequence is not DNC and is thus a base for computable randomness. Furthermore, such a sequence

that is Turing complete will necessarily have PA degree and thus cannot be a base.

We now turn our attention to lowness for Schnorr randomness. While the study of van Lambalgen’s Theorem for Schnorr randomness suggested the importance of choosing a nonstandard

relativization, the study of lowness for Schnorr randomness emphasizes that Miyabe’s relativization is far more appropriate than the standard one.

We can begin by observing that lowness for Schnorr tests clearly implies lowness for Schnorr

randomness; however, the converse is not obvious since there is no universal Schnorr test. In order

to characterize the Low(−, Schnorr) classes, we need a new concept: traceability.

Definition 3.11. A sequence A is c.e. traceable if there is an order function p such that for all

f ≤T A, there is a computable function r such that the following conditions hold for all n:

• f (n) ∈ Wr(n) and

• |Wr(n) | ≤ p(n).

A sequence is computably traceable if we can replace Wr(n) with Dr(n) in the above definition, where

Dk is the k th canonical finite set.

We note that all computably traceable sequences are hyperimmune free and that we may think

of a computably traceable sequence as uniformly hyperimmune free.

This notion allowed Terwijn and Zambella to characterize the sequences that were low for Schnorr

tests.

Theorem 3.12. [48] A sequence is computably traceable if and only if it is low for Schnorr tests.

Later, Kjos-Hanssen, Nies, and Stephan showed that the computably traceable sequences were

precisely those that were low for Schnorr randomness. They began by showing that the c.e. traceable

sequences were precisely those in Low(ML, Schnorr).

Theorem 3.13. [29] A sequence is c.e. traceable if and only if it is in Low(ML, Schnorr).

To prove this, we assume that if a sequence A is c.e. traceable, we can take a Schnorr test relative

to A and use a trace for A to approximate the components of this Schnorr test in a computable

way. This approximations allow us to construct a Martin-Löf test whose intersection contains the

intersection of the relativized Schnorr test.

16

FRANKLIN

Conversely, if A is in Low(ML, Schnorr), we need to construct a c.e. trace for an arbitrary f ≤T A.

We code this f into a Schnorr test relative to A and recognize that the intersection of this A-Schnorr

test is contained in each element of the universal Martin-Löf test. We can use one element of this

unrelativized universal Martin-Löf test to build a c.e. trace for f . When we build this trace, we

must ensure that we don’t include too many options for each value f (n) while ensuring at the same

time that we include the correct value. This is possible due to our method of encoding the initial

segments of f into the A-Schnorr test.

Finally, we use this result to find the other classes Low(−, Schnorr).

Theorem 3.14. [29] Let A be a sequence. The following are equivalent:

(1) A is computably traceable.

(2) A is in Low(Schnorr).

(3) A is in Low(Comp, Schnorr).

Terwijn and Zambella had already shown that (1) implies (2), and it can be seen from the

relationship between Comp and Schnorr that (2) implies (3). Therefore, the only thing to do is to

show that (3) implies (1). This follows from the same paper of Bedregal and Nies mentioned earlier

[2]: they showed that every sequence in Low(Comp, Schnorr) must be hyperimmune free, and since

every hyperimmune free c.e. traceable sequence is actually computably traceable, we are done.

We now consider lowness for computable measure machines, which is analogous to lowness for

K. This is also equivalent to computable traceability:

Theorem 3.15. [8] A sequence A is low for computable measure machines if and only if it is

computably traceable.

Since lowness for computable measure machines implies lowness for Schnorr randomness, it is

easy to prove the forward direction. To prove the other direction, we assume that A is a computably

traceable sequence and choose a computable measure machine; we then use the computable traceability of A to construct a sequence of small finite sets with a certain technical property. We then

build a Kraft-Chaitin set from this sequence that allows us to construct a computable measure

machine witnessing A’s lowness for computable measure machines.

Bases for Schnorr randomness and Schnorr triviality have also been the subject of study. At first

glance, the Schnorr trivial sequences appear to be very different from the sequences that are low

for Schnorr randomness. Downey, Griffiths, and LaForte proved that there is a Turing complete

Schnorr trivial sequence [9], and Franklin proved that, in fact, every high Turing degree contains

a Schnorr trivial sequence [15]. The Schnorr trivial Turing degrees do not form an ideal since they

are not closed downward, as the K-trivial Turing degrees do [40]. However, Franklin showed the

following relationship between Schnorr triviality and lowness for Schnorr randommness:

Theorem 3.16. [14] A sequence is low for Schnorr randomness if and only if it is Schnorr trivial

and hyperimmune free.

To see this, we begin by assuming that A is low for Schnorr randomness and fixing an arbitrary

computable measure machine M . We use an enumeration of this machine and the computable

traceability of A to construct another computable measure machine M 0 witnessing the Schnorr

triviality of A with respect to M . To prove the other direction, we assume that A is hyperimmune

free but not Schnorr low. We then take a function g ≤T A that witnesses that A is not computably

traceable and, since A is hyperimmune free, observe that the use function for this g relative to

RELATIVIZATION IN RANDOMNESS

17

all possible A is a computable function. Now, for every computable measure machine Mi , we can

define a computable trace that contains all strings of a certain length with low complexity. Since

A is not Schnorr low, there is an n for which g A (n) is not in this trace, and thus A will not be

Schnorr trivial with respect to this Mi .

This result caused Franklin to suggest that Turing reducibility was not the right framework for

Schnorr randomness and that a more natural framework might be the truth-table degrees [14].

As mentioned in Section 2, she and Stephan later showed that Schnorr triviality and lowness for

Schnorr randomness actually coincide when one considers truth-table reducibility. They use the

relativization below that is equivalent to Miyabe’s in [19]:

Definition 3.17. A is tt-low for Schnorr randomness if for every Schnorr random set B, there is no

martingale m ≤tt A and no computable function g such that for infinitely many n, m(Bg(n)) ≥ n.

This allows them to prove the following theorem that is far more parallel to the results for

Martin-Löf randomness mentioned earlier.

Theorem 3.18. [19] Let A be a sequence. The following are equivalent:

(1) A is Schnorr trivial.

(2) A is tt-low for Schnorr randomness.

(3) The tt-degree of A is computably traceable (here, we trace the functions f that are tt-reducible

to A instead of all f ≤T A).

The proof of this result involves a characterization of Schnorr triviality using computable probability distributions. This characterization is used to show that any function tt-computable from

a Schnorr trivial sequence can be computably traced given an arbitrary bound function (in other

words, that (1) implies (3)). We then translate this trace into information about martingales with

the help of a technical lemma and show that A is tt-low for Schnorr randomness (so (3) implies

(2)). At this point, we can do a highly calculational proof, once again using computable probability

distributions, to show that (2) implies (1).

An additional characterization of Schnorr triviality found in [19] allows us to prove a corollary

that serves as another indication that the truth-table degrees are the natural habitat of Schnorr

randomness:

Corollary 3.19. [19] The join of any two Schnorr trivial sequences is also Schnorr trivial, so the

Schnorr trivial sequences form an ideal in the truth-table degrees.

We conclude our discussion of lowness for Schnorr randomness with a discussion of bases. The

bases for Schnorr randomness are precisely the sequences that can’t compute 00 [21], which is

clearly a different class from those that are low for Schnorr randomness. Franklin and Stephan also

considered a truth table version of bases: one in which A is a tt-base for Schnorr randomness if

and only if there is a B ≥tt A such that B is A-Schnorr random. While they showed that this is

not equivalent to Schnorr triviality, as hoped, they do show in [19] that it is sufficient for Schnorr

triviality.

Miyabe, once again, took this study a step farther by defining bases for uniform Schnorr tests

and uniformly computable martingales:

Definition 3.20. [38] Let A ∈ 2ω , and let O be the class of open sets in 2ω .

18

FRANKLIN

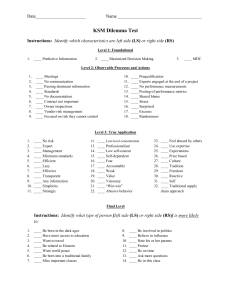

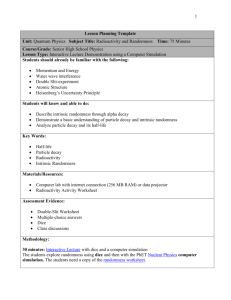

ML

Comp

Schnorr

ML K-trivial

K-trivial

c.e. traceable

Comp

computable computably traceable

Schnorr

computably traceable

Table 1. Characteristics of lowness classes: standard relativization

(1) A uniform Schnorr test is a computable function f : 2ω × ω → O such that hX, ii 7→

µ(f (X, i)) is computable and µ(f (X, i)) ≤ 21i for all n. A sequence hUiA i is a Schnorr test

uniformly relative to A if UiA = f (A, i) for all i and some uniform Schnorr test f .

(2) A uniform martingale test is a computable function m : 2ω × 2<ω → R>0 such that mZ =

m(Z, −) is a martingale for every sequence Z.

Definition 3.21. [38] Let A be a sequence.

(1) A is a base for uniform Schnorr tests if for every computable martingale m uniformly

relative to A, there is a B ≥tt A that is Schnorr random uniformly relative to A for m.

(2) A is a base for uniformly computable martingales if for every computable martingale m

uniformly relative to A, there is a B ≥tt A that is computably random uniformly relative

to A for m.

Miyabe then goes on to prove that these notions are equivalent to Schnorr triviality:

Theorem 3.22. [38] Let A be a sequence. The following are equivalent:

(1) A is Schnorr trivial.

(2) A is a base for uniformly computable martingales.

(3) A is a base for uniform Schnorr tests.

It is clear that (2) implies (3), and the proof that (3) implies (1) is inherent in Franklin and

Stephan’s work [19]. To prove that (1) implies (2), we construct our set B using the Space Lemma

[34] and the fact that A must be computably tt-traceable.

A summary of these lowness classes can be found in Table 1. Lowness for other randomness notions such as Kurtz randomness, weak 2-randomness, and difference randomness have been studied

as well [3, 11, 17, 23, 28, 47], but we will not consider these in this survey since they do not cast

light on the role of relativization in the same way that a comparison of lowness for Martin-Löf

randomness and Schnorr randomness, in particular, does.

Kihara and Miyabe have also considered an idea they call LowF for pairs of randomness notions:

LowF (C, D) is the set of sequences A such that C is contained in the class of sequences that are

in D uniformly relative to A [27]. They characterize LowF (C, D) for several pairs of randomness

notions where C and D range over ML, Schnorr, and Kurtz randomness in terms of various forms

of traceability and anticomplexity [27]. Their proofs rely heavily on previous characterizations of

Low(C, D) and technical means of uniformizing the proofs of these characterizations. An example

of their results is given below:

Theorem 3.23. [27]

(1) A is in LowF (ML, Schnorr) if and only if A is c.e. tt-traceable.

(2) A is in LowF (Schnorr) if and only if A is computably tt-traceable.

RELATIVIZATION IN RANDOMNESS

19

The first of these results is proven using a covering property of Bienvenu and Miller [3] and

several technical lemmas; the second is inherent in Franklin and Stephan [19].

We can see that these results are parallel to the results with the standard relativization: the only

difference is that here, we use tt-traceability instead of Turing traceability.

All the natural ways in which a sequence can be far from Martin-Löf random coincide, and Miyabe

and Rute demonstrated that uniform relativization coincides with the standard relativization in

that setting. When we consider Schnorr randomness under the standard relativization, the lowness

notions (lowness for Schnorr randomness, lowness for Schnorr tests, and lowness for computable

measure machines) all coincide, but triviality and being a base coincide neither with the lowness

notions nor with each other. However, under uniform relativization, all these notions coincide.

This lends incredible strength to the argument that uniform relativization is the most reasonable

approach to relativizing definitions related to Schnorr randomness and possibly any randomness

notion.

4. Conclusion

As we have seen, the truth of van Lambalgen’s Theorem and the class of sequences that are low

for a certain type of randomness are highly dependent on the relativization used. Based on what

“ought” to be true (van Lambalgen’s Theorem should be true; lowness and triviality should coincide

when both notions are defined), we can determine which type of relativization is most appropriate

for which randomness notion. It is apparent that the standard Turing-based relativization is the

most appropriate for Martin-Löf randomness, while a relativization based in truth-table reducibility

is the most appropriate for Schnorr randomness.

We have said very little about the role that choosing a relativization plays with computable

randomness. This is a much more difficult notion to work with than Martin-Löf or Schnorr randomness since its characterization in terms of martingales is the only simple one it has. While

Miyabe and Rute have shown that uniformly relative computable randomness makes van Lambalgen’s Theorem hold when the standard relativization does not, it is unclear whether a somewhat

weaker reducibility would also work. Since we have so few useful characterizations of ways in which

a sequence can be far from computable randomness, it is difficult to study relativization in this

context. The difficulty of characterizing bases using the standard Turing reduction suggests, once

again, that this is not the correct reducibility to use; however, it does not help us identify a more

useful relativization.

There are other components of the study of randomness in which relativization is also important,

such as highness: given two classes C and D such that D ⊆ C, a sequence A is high for the pair

(C, D) if C A ⊆ D, that is, if A is computationally strong enough to shrink C enough to fit inside of

D. This concept was introduced in [21] and pursued in [1], but only the standard relativizations

have ever been considered, and there are still several open questions remaining. We suggest that

highness for pairs be more strongly investigated using the standard relativization and that a notion

HighF (C, D) parallel to Kihara and Miyabe’s LowF (C, D) be investigated in parallel. Considering

the differences in these classes may be very instructive and may provide another avenue to consider

the role of relativization in randomness.

References

[1] George Barmpalias, Joseph S. Miller, and André Nies. Randomness notions and partial relativization. Israel J.

Math., 191(2):791–816, 2012.

20

FRANKLIN

[2] Benjamı́n René Callejas Bedregal and André Nies. Lowness properties of reals and hyper-immunity. Electronic

Notes in Theoretical Computer Science, 84:73–79, 2003.

[3] Laurent Bienvenu and Joseph S. Miller. Randomness and lowness notions via open covers. Ann. Pure Appl.

Logic, 163(5):506–518, 2012.

[4] G. J. Chaitin. Algorithmic information theory. IBM J. Res. Develop., 21(4):350–359, 1977.

[5] Gregory J. Chaitin. A theory of program size formally identical to information theory. J. Assoc. Comput. Mach.,

22:329–340, 1975.

[6] Alonzo Church. An Unsolvable Problem of Elementary Number Theory. Amer. J. Math., 58(2):345–363, 1936.

[7] S. B. Cooper. Minimal degrees and the jump operator. J. Symbolic Logic, 38:249–271, 1973.

[8] Rod Downey, Noam Greenberg, Nenad Mihailović, and André Nies. Lowness for computable machines. In Computational prospects of infinity. Part II. Presented talks, volume 15 of Lect. Notes Ser. Inst. Math. Sci. Natl.

Univ. Singap., pages 79–86. World Sci. Publ., Hackensack, NJ, 2008.

[9] Rod Downey, Evan Griffiths, and Geoffrey LaForte. On Schnorr and computable randomness, martingales, and

machines. Math. Log. Q., 50(6):613–627, 2004.

[10] Rod Downey, Denis R. Hirschfeldt, André Nies, and Sebastiaan A. Terwijn. Calibrating randomness. Bull.

Symbolic Logic, 12(3):411–491, 2006.

[11] Rod Downey, André Nies, Rebecca Weber, and Liang Yu. Lowness and Π02 nullsets. J. Symbolic Logic, 71(3):1044–

1052, 2006.

[12] Rodney G. Downey and Evan J. Griffiths. Schnorr randomness. J. Symbolic Logic, 69(2):533–554, 2004.

[13] Rodney G. Downey and Denis R. Hirschfeldt. Algorithmic Randomness and Complexity. Springer, 2010.

[14] Johanna N.Y. Franklin. Hyperimmune-free degrees and Schnorr triviality. J. Symbolic Logic, 73(3):999–1008,

2008.

[15] Johanna N.Y. Franklin. Schnorr trivial reals: A construction. Arch. Math. Logic, 46(7–8):665–678, 2008.

[16] Johanna N.Y. Franklin. Lowness and highness properties for randomness notions. In T. Arai et al., editor,

Proceedings of the 10th Asian Logic Conference, pages 124–151. World Scientific, 2010.

[17] Johanna N.Y. Franklin and Keng Meng Ng. Difference randomness. Proc. Amer. Math. Soc., 139(1):345–360,

2011.

[18] Johanna N.Y. Franklin and Reed Solomon. Degrees that are low for isomorphism. Computability, 3(2):73–89,

2014.

[19] Johanna N.Y. Franklin and Frank Stephan. Schnorr trivial sets and truth-table reducibility. J. Symbolic Logic,

75(2):501–521, 2010.

[20] Johanna N.Y. Franklin and Frank Stephan. Van Lambalgen’s Theorem and high degrees. Notre Dame J. Formal

Logic, 52(2):173–185, 2011.

[21] Johanna N.Y. Franklin, Frank Stephan, and Liang Yu. Relativizations of randomness and genericity notions.

Bull. Lond. Math. Soc., 43(4):721–733, 2011.

[22] Péter Gács. Every sequence is reducible to a random one. Inform. and Control, 70(2-3):186–192, 1986.

[23] Noam Greenberg and Joseph S. Miller. Lowness for Kurtz randomness. J. Symbolic Logic, 74(2):665–678, 2009.

[24] Denis R. Hirschfeldt, André Nies, and Frank Stephan. Using random sets as oracles. J. Lond. Math. Soc. (2),

75(3):610–622, 2007.

[25] C. G. Jockusch, Jr., M. Lerman, R. I. Soare, and R. M. Solovay. Recursively enumerable sets modulo iterated

jumps and extensions of Arslanov’s completeness criterion. J. Symbolic Logic, 54(4):1288–1323, 1989.

[26] Takayuki Kihara and Kenshi Miyabe. Uniform Kurtz randomness. J. Logic Comput., 24(4):863–882, 2014.

[27] Takayuki Kihara and Kenshi Miyabe. Unified characterizations of lowness properties via Kolmogorov complexity.

Arch. Math. Logic, 54(3-4):329–358, 2015.

[28] Bjørn Kjos-Hanssen, Joseph S. Miller, and Reed Solomon. Lowness notions, measure and domination. J. Lond.

Math. Soc. (2), 85(3):869–888, 2012.

[29] Bjørn Kjos-Hanssen, André Nies, and Frank Stephan. Lowness for the class of Schnorr random reals. SIAM J.

Comput., 35(3):647–657, 2005.

[30] Antonı́n Kučera. Measure, Π01 -classes and complete extensions of PA. In Recursion theory week (Oberwolfach,

1984), volume 1141 of Lecture Notes in Math., pages 245–259. Springer, Berlin, 1985.

[31] Stuart Alan Kurtz. Randomness and genericity in the degrees of unsolvability. PhD thesis, University of Illinois,

1981.

RELATIVIZATION IN RANDOMNESS

21

[32] L. A. Levin. Laws on the conservation (zero increase) of information, and questions on the foundations of

probability theory. Problemy Peredači Informacii, 10(3):30–35, 1974.

[33] L. A. Levin. Uniform tests for randomness. Dokl. Akad. Nauk SSSR, 227(1):33–35, 1976. Russian.

[34] Wolfgang Merkle and Nenad Mihailović. On the construction of effectively random sets. J. Symbolic Logic,

69(3):862–878, 2004.

[35] Wolfgang Merkle, Joseph S. Miller, André Nies, Jan Reimann, and Frank Stephan. Kolmogorov-Loveland randomness and stochasticity. Ann. Pure Appl. Logic, 138(1-3):183–210, 2006.

[36] Kenshi Miyabe. Truth-table Schnorr randomness and truth-table reducible randomness. MLQ Math. Log. Q.,

57(3):323–338, 2011.

[37] Kenshi Miyabe. L1 -computability, layerwise computability and Solovay reducibility. Computability, 2(1):15–29,

2013.

[38] Kenshi Miyabe. Schnorr Triviality and Its Equivalent Notions. Theory Comput. Syst., 56(3):465–486, 2015.

[39] Kenshi Miyabe and Jason Rute. van Lambalgen’s theorem for uniformly relative Schnorr and computable randomness. In R. Downey et al., editor, Proceedings of the Twelfth Asian Logic Conference, pages 251–270. World

Scientific, 2013.

[40] André Nies. Lowness properties and randomness. Adv. Math., 197(1):274–305, 2005.

[41] André Nies. Computability and Randomness. Clarendon Press, Oxford, 2009.

[42] André Nies, Frank Stephan, and Sebastiaan A. Terwijn. Randomness, relativization and Turing degrees. J.

Symbolic Logic, 70(2):515–535, 2005.

[43] Theodore A. Slaman and Robert M. Solovay. When oracles do not help. In Fourth Annual Workshop on Computational Learning Theory, pages 379–383. Morgan Kaufman., Los Altos, CA, 1991.

[44] Robert Soare. The Friedberg-Muchnik theorem re-examined. Canadian J. of Math., 24:1070–1078, 1972.

[45] Robert I. Soare. Recursively Enumerable Sets and Degrees. Perspectives in Mathematical Logic. Springer-Verlag,

1987.

[46] Robert M. Solovay. Draft of a paper (or series of papers) on Chaitin’s work. Unpublished manuscript, May 1975.

[47] Frank Stephan and Liang Yu. Lowness for weakly 1-generic and Kurtz-random. In Theory and applications of

models of computation, volume 3959 of Lecture Notes in Comput. Sci., pages 756–764. Springer, Berlin, 2006.

[48] Sebastiaan A. Terwijn and Domenico Zambella. Computational randomness and lowness. J. Symbolic Logic,

66(3):1199–1205, 2001.

[49] Alan Turing. Intelligent Machinery. In B. Meltzer and D. Michie, editors, Machine Intelligence 5. Edinburgh

University Press, Edinburgh, 1969.

[50] Michiel van Lambalgen. The axiomatization of randomness. J. Symbolic Logic, 55(3):1143–1167, 1990.

[51] Liang Yu. When van Lambalgen’s theorem fails. Proc. Amer. Math. Soc., 135(3):861–864, 2007.

Department of Mathematics, Room 306, Roosevelt Hall, Hofstra University, Hempstead, NY 115490114, USA

E-mail address: johanna.n.franklin@hofstra.edu