: A Scalable Multicore Code for RNA Secondary GTfold Structure Prediction

advertisement

GTfold: A Scalable Multicore Code for RNA Secondary

Structure Prediction

Amrita Mathuriya

College of Computing

Georgia Institute of Technology

David A. Bader

College of Computing

Georgia Institute of Technology

Christine E. Heitsch

School of Mathematics

Georgia Institute of Technology

Stephen C. Harvey

School of Biology

Georgia Institute of Technology

August 23, 2008

Abstract

The prediction of the correct secondary structures of large RNAs is one of the

unsolved challenges of computational molecular biology. Among the major obstacles

is the fact that accurate calculations scale as O(n4 ), so the computational requirements become prohibitive as the length increases. Existing folding programs implement heuristics and approximations to overcome these limitations. We present a new

parallel multicore and scalable program called GTfold, which is one to two orders of

magnitude faster than the de facto standard programs and achieves comparable accuracy of prediction. Development of GTfold opens up a new path for the algorithmic

improvements and application of an improved thermodynamic model to increase the

prediction accuracy.

In this paper we analyze the algorithm’s concurrency and describe the parallelism

for a shared memory environment such as a symmetric multiprocessor or multicore

chip. In a remarkable demonstration, GTfold now optimally folds 11 picornaviral RNA

sequences ranging from 7100 to 8200 nucleotides in 8 minutes, compared with the two

months it took in a previous study. We are seeing a paradigm shift to multicore chips

and parallelism must be explicitly addressed to continue gaining performance with each

new generation of systems. We also show that the exact algorithms like internal loop

speedup can be implemented with our method in an affordable amount of time. GTfold

is freely available as open source from our website.

1

Introduction

RNA molecules perform a variety of different biological functions including the role of “small”

RNAs (with tens or a few hundred of nucleotides) in gene splicing, editing, and regulation.

1

At the other end of the size spectrum, the genomes of numerous viruses are lengthy singlestranded RNA sequences with many thousands of nucleotides. These single-stranded RNA

sequences base pair to form molecular structures, and the secondary structure of viruses like

dengue [3], ebola [16], and HIV [17] is known to have functional significance. Thus, disrupting

functionally significant base pairings in RNA viral genomes is one potential method for

treating or preventing the many RNA-related diseases.

A G

A

A

A

G

A

U

G

A

A

A

U

G

A

G

A UG

AU C

A

AAU

G

C G

C G

C G

C AG

A

GG C

AU U

C GG

C

A

G

C

U

U

A

A

C

U

A

AG A

AU

AA A

C C

A AG

U

G A U

A 4050

U G

U

C A AG C G

U AA

U

A

C

AA U

A U CG

U

AC G

G C

A

GG

UA G

G

U

GA

C

A

A

UG

U

UU

GA CC UA U

G

UGG U

A

A

A

AC

A

UG UCU

G

AG GCC

A

U

U

A

CG G

U

A

C

A

AU

C

G U A A

AG

U

C

A

UG

C

C

G

C

AA

G

G

U

C

UA G

UA

A

AC

AG

U

G C

A U

A

A

A

A

A

C G

A

CG G GA C G

A

C

CU 4000

C A A AG

A

A G

AU

G A

A

U A AUGU

A

G

U U U AG

C

C

A

A

UU A

AC

C

C

G

U

A

GG

C

U

A

G

C

C

G

A

U

C

A

U

U

A

A

A

U

U

C

G

G

G

A

A

G

U

A

3800

U U

C

5250

U

GU

G

A

G

C

C

A

U

G

C

U

A

C

GG C

C

G AAC

A A

U

GG

UA

A C

A

A AG

A

A

U

U

C G

U

U UA

G

A

AU

U

UA

4450

A

UU

U

AG

A G

U

A

C

A

A A U

U G

GA

G C

U A

U U U A AC

G A A

GA

C

G

G

G

AA G A

GA

G AA

A

G

G

U

A A

GUG A

A

CA

G

A

U

C

G

A

A

U

G

A

G

U

A

A

C

C U

AA

U

GG

U

C

A

A

G

U

A

U

U A

G

A

U

AA

A A

G

G

U

GA A A

G

G C

UG

G

U

A

C C

A

U

G

GG G U

A

U

C A CU

GA A

UG

G

G

AU

AAC

4250

U G

CU

CAA

U

GU

AG

UC

UG

AA

U AA

AU4400

4200

U

UG

C

GAC

A

A

UG

U

G

C

CA

C

C

AAC

U

G

U

UGA

G

U

A

C

4350

U

A

A

G

A

C

C

G

C

A U

C

U

UC 3850

AG CA

GU CC

AG A

U

GC

G

A

A

A

A

A

A U

G

A

C A

U

GA

A

A

C

U

A

UU U

AG

C

GG

C

C AGC

GA

AC

U

C

A

A

A

A

U

UU

A

U A

A

U

A

C

U

G

A

UA

U

A

G

A G

C

G

A

A

U

A

G

U C

U

A A

A C U

U

A

C

G

G

C

C G U

A

C

A A

A

G U

U U

A

U U

U A C

U

U G

A U

C A

A C

U

U

G U

A G

A

A

A

A

G

A

G

A

C

G C

A

A

U

C

AG A

AA

A U

U

U U A

GA CU

A

C

GG 4550

4750

C

A

U

A

A

G

AA UU

UA AU

A

G

A

C GA

CA

G

G C

U

G

G

G

G

A

G

U

G

2400

A

A A

U A

C A

C

U C G U

A

A G C

C A A

G U U

A C G

G

A

A

U A

U

A

A

AG

G

A

G

G

A

AU

A

A

AA U A

GA

U

U

G

C

A C

U

U

A

C

A

AA

GGA

C

C

G

A

G

A

U

A

G 3550A

C

U

A

C C

U

G

C

A

G

C A

A

A

C

G G

U

G U

C

A

U

3600

C

C

G G

C

G G

G

C U

C C

AG

C C

G G

U

2050

G

C G

A U

A

AA

C

G

A

U

U

A

A

U

A

A

U

CAC

C

A

A

A

U

A

U

4900

A

C

G

A

G

G

U A

A

G

A

U A

U

G

C

A

A

A

C

A

U A

U

A C

G

G C

A

U U G U

G

A G

G

U

U A

U

G

G

A A

AA A G

U

C

A

A G

U

U G

A

A

U C

C C

A A GG

U A A A

G

C

G

A

A

A

G

U

UAAAG C

AAAC

AC

AU

AC

AG

C

A

A

G

U

U

G

C

G

G

G

G

U

A

G A

A

AG

G

A

C

U

GA

A

U U

U

6150

A

U

A

A

A

U

A

G

A

A

A

G

A

G

U

A

G

C

U

A

G

U

G

A

A

U

A

U

C

G

A

U

U

G

A

U

C

GC

C

A

U

G

A G

A

A

U

A

G

C

U

A 6250

GG CA A GA

A

U

AC G

C

A

A

C

A

G

A

U

A

G

U

C

A

GG A

G

C

G

A

AA

A

G

A

U

C

G

AC

CA G

G 6300

G

A

GA

G

U

U

U

C

G

G

C

G

C

C

U

A

A G AC

AC

G

A

U

U

G

A

5600

G

C G

C G

A

U

A

A

G

A

G G

U U

AA

A A

G

A

U A

U

A

AU AA A

U

UU UGA

A

AG

6200 A

AU

U

CA

A

5650

A

A

A

U A

A U

A

A

C

C

AA

A

C

G

A

U

A

A

C

A

A

C AA

GG

A

U

U

C

C

G

A

C

AG C

G

G

AU

G

A

C U

U

G

A

G

A

U

A

G

U

G

U A

C G

C G

A

C

A

G

A

C C

G

G

G

C

A

A

U

U A

U G

A

A

A

CA

2000

G A G

A

U

A

A

A

G C

U

AA

U A

G C

U

U

A

A

A

U

G

A A

C A

U A

C G

G

C

A

U

G

A

G

C

A

A C

A

G U

A

C

U

A

A

A

A

A

U

U

U

U

A

C

A C

U

G

A

C

C

G

A

G

C

A

U

G AU

A

A

A

U

U

G

C

U

G

A

G

G

C

C

U

U

A

A

A6100 C A

U

U

U

U

G U

A

A

AU

U

U

C

AAC G

U

AU

U

U

GA

A

C

G

A

G

G

A

G

U

C

A

A

G U

A

G

A

A

G

A

A

G

C

A C

G

G

U C

A

A

G

U C A A

6050

U CG G

U

A G

C

G

UC C

C

CA AG A

GG GU

G

A

C

G

AC

C

G

A

C

C

A

A

A

A 5550

A

5000

A

A

GG

AA

A

AC

G U

U

G

U

A

A U

A U UA C

G U

G

U

U G

A

G

G UC

A

A

G GC

C

AA U

U

A

A

G

A

C

A

2100

2150

U AA U

G A

U

A

G

C

A

U

A

A A

G C

G C

A

U A

U A

A

A

A

A

G

G

A

A

4650

C

G

U A C A G

A

C

U

U

C

A

G C

G C

G

C G

C G

A

G

A

A

U

C

G

G

G

C

G

C

U

A

U

A

C

G

A

U

A UC

U

G

C

U

C G

A A A

G

G

C

A

U

5700

A

A

A

U

G C

A U

A

A

U

A

C

G

C

A

G

CC GG

U

GGC

CGA

A

CA

U

A

U

U

U

A

A

A

A A

AG

G A

A G

C U

C

A

U

U C A

C

C A

G

A

G

A

2200

A

A

A

G

G

U

G

C C

A

A

A

G

G

G G

C C

G C

C A

A

A G

G G

A A

G

C G

A A A A

C C

G U

G A C

U U

G A G A

C A

G G

A

C

U

G U

A

U

A A

G

U C

C U

G U

U U

A G

A

G A

G A A

A G

A

C

CAG

G

A

A

A

A U

GA

A U

G U A

GU CG

UA AU

A

A U

U A

4600

G

2250

G

G

A G A

CA C

C

C U

G GU

G U

A AU U

A

CG GU

G GU U

A AA

U CA

C A A GA U

C UA A

U

C CA G

C U U

GA

A U

A U

U

C A G

C GG

G

A

G

G

G U

U CA G

G CU G

G CC G

A U

A 4850

U A C

UU A A

4700

C

G

A

U

G U

A

A C

U

A

U

A

A U

U A

U

G

G U

A

G

A C A2350

A

A AU

C

G A

A

C G C

G

AC A U A G

A A G G

A

A

U

G

A

C

C

G U C

U

C G

G A

U

G

U C

U C C

G G G GA A G G G G

G C

A U U

C

G C

A

A

C CA U

C U C C

UU C C U U

A

U U C

C

A

A

AA

A

U

A

C

A

U A G C

G

A

U

A

A UG U

C G

C G

A

A

2300

C G

C G

AA

A

GU

C

G

G G

C C

A

U U

A

G

C A

A

A

U

C

G

A

A

U

6000 G G

U CG U

5750

G

GG

C

C

A U

G

UG

U

U

UC C A

CAC A

UG

U

U

G

AUC

A

A C UUG AG

C

A

AA

C

G

CC

G

C

U

GG

U

A A

U

A

UU

AG

C

GG

CC

UG

C

A

C

U

AG

A

G

C

U A

G C

A

A

G

G

A

C

G

G

A

CA

G

A

C G

G C

A

G

U

G

A

G

U

A

5050

C G

U A

U

A

G

U

A

G

C

U

A U

C G

A

C

A

C A

A

U

U

G

U G A

C

G

U AC

A A

A

AC

C

G

A U A

U

C

G A U

G

U A G

U C A C

A

A

A

A

U A

A U

A

A

A

A

U

G

A

U C

A

A

U

A

A

A

G

A

U

CA

G

A

A

A

A

G

A

A

A

G

GC

AA

A A C

3650

C

A

GUU

A

U

G

A

U

GAG

A

A

A

A

C

A

C

A

U

A

G C

A

G U

AG G

A C

A A CU

GU

UU

C

U U

GA C A

C A

A A A A

A

C G

C

A

A C

3250

A

G G G

A

C

G A

G

A GGA GU C

3450

A

A

AG

U

A

G

C A

CA

A

G

C

GA A

C U

U

CU G U

3400

U

A A C A

C U U G C

A G U A

C U

G U G

GU

U

G

U

C

A

A

U

G

G

A

UA

U

U G A C

C

C

G

GA GC A A

C

AG

A A

A

A

G CG U

A

G

U

UU

CG

U

UG

3350

G A A

U

A

C A GC

G

A G

C

A A

C U

A

U A

U

A

A A

C C A

A G

C C

U U

C

GU

U

A

A G

G

U C

U U

A

A

G

U

C

G G

A

A U

A

A

A G

2450

U

U C

A

G

C

A

C U

G

A

U U A

C

C U G G

G

A G

A G A

G G

GU A

G

A U

G G

A C

A

A U U U

A

GA A GC

G

A

U A

U U AG

U G

AA

A

A

A A

A

G

A

A A C A

C

U GA

A

A

A A

U A

AC

3300

G

G U GC

A C

U A

U C

U GA

A

C A

U

U CC

A G

A

C

G U

A A

G

A G

A

A

3500

C U

G G

G

C A

A C

A A

G

C G

A

A

G G A

U

G

A

U U

GA

C A

A

G

G

U

A A

U

U

A

A

UG

AU C

GC

A

C

U

G

G

G U

G

C

A

U

A

U

G

C

U A

A

C

G

U

A

U

A

U

A

A

A

U

A

U

A

A

AG

G

U

G

A GC

AC

G

A

U

A

G

UG

C

GU

GG

UC

UA

AU

GG

CU

A

UU 5850

GA

GG

A

C

G

AC

C

U

G

U

U

U

G

A

U

A

A

U

A

G

A

A U

AG A

G

C

A

5500

U A

A

A

U A

A

A

G

A

A

G

U

G C G

A

A

U

G C U

A

U A U

5450 G

A U C

G

U A

A

A

AU

U

G

G

C

G

G CA

U

U

A UU

U AG C

A

U G

U

A U

A

C GG

C

AAA A

G A UU

A

U

G

G

C

G

G

U

A

A A

G

U A

4800

AA

A

GA

A

AG

C

G

C

U A

G U

G C

A U

C G

G C

G U

C

A

C

C

A

A U G

4150

AU

U A

C C

A

U A C

G

G U

2900

A

A U

A

C

C U

G

A

U

A G

A A

A

AU

A

A G G

U

U

A

U A

U

A

U

G

C

C

A

U

3150

G

C

C

A

G

U

A

A A A

U G

A A G

A

3000

U

G

A

A

A

U

C

G

G U A

U

A

C

U

A

U

A

U G

G A

A

U

U

GU

A U AA

G G G

A AA

A U A U

A AA

U

AA 2750

U

GC

A U

AA U

C

A G

U C A

A AA

U

G G

U

AAA2800

A

U

U UA

U A

A

A

U

C

A

G A A

A

U A

U

GU

C

U

G A

G

U A

C CC U

A

C G

C

G G

G

U

A

A

UA G

G G G A

A

G GG U

A

A

U

U

A

G

G AA

G C

C AC

U

C

AC

G

G G

A

A G G U G C

A

A A

C

A U

G

G A

C C

A C

A

C

A A U A

A A

A

A

C G A

U

C

C U A

G G

A

C

A U

C A

G

U

A C

U C

AA

G

U

A

A

A

A

U

U

AG

A

U

A U A U GA

G U G A AG

C

A

A G A

A

G G A A

A A G G

A

U U G A U C

CA G

U AC A U U U U A 3050

U U

C A A

A

U UC

UA G U

C

C

U A G AA A

A

A

A

C

G

A C

A A A UA G

U

G

A

G C U 2850A

C A ACU

U

A

A

U

UC

A

A

2700

A

AU G G U

U C

U A A

C UA

3200

A

U G

U

G UG C C C

C

G

C

A

G

A

A

A

G

U G

U

A

G

G

C

A

G C C A U

U

U A A

G

U

U C C A A G G G

G A

A

AC

G

A

U

U

A

C

G G U A C

U U

A

A 2600

C

G

A C

U

A G

A

A

U

A

A A U

G

U A

U G UG U G A

C

U

G C

U

A

C A

U U

C

A

3100

A

C

A

A

A

C

U 2650

U U A

A

G A

A

A A A GA U C C U A U

A C AG A C C

U

2550

U AA G C C

A

U G

U G A

G G

G

A

A C U G U

G

G

A U

A G

CG

A

U U

C

A

A

A C A G G

C G

A

A

C

A

A

U

A A A A U

A U G A U U

A

U

G

G

A

U

U G A C A U

A

G G

A

U AU C A

A

G A

U

A

C

G

U

U

U

A

A

A A

2500

C

C U G U G U

A

G C G

C

A

U

A

C

A

G A U A C C

G

U

A

C

A

A

A

C

U

U G

A

A

C

A

G

5100

A

C

U

G C

AU

GA

A

U

G

A

AG

U

U

C

U AA AU

G

C

A GUA

A

A

AC

A

A

A

U

A

UUG

C

A

C

G

C G

G U

A

A

AG

G

A

A

G C

U A

G

UA

A

G

CA

A

C

A

A

G

U A

A

AG G

U

GG

CC

AU

UG

G

AUU

U

A

GAA

A

A

C

C

U A

U A

A

C

C

G

A

3700

A

C G

C

U

C

A

U

GU

U

U

A U

G C

U A

U

C

G

C

G

AU

G U U

A U

G U

A

G

G

C

A

A

C

A U

C G

G U

G

U

AU

G

G

UAA

A

C

AAA AAA

A

U

A

U

4500

C

GG

CA

GU

U

AC

G4300

G

CA

GC

G

AU

U

A

AU

GC

A

A

A

G

A

U

A

A

G C

G

A

G

C G

A U

G

AG

A

GC

A

A

A

G

A

C

A

C

C

G

C

G A

U

U

G

A

U

A

C

A

U

U

A

A

UC

UA

G

C

G

C

G

C

G

C

U

A

G

U

A C U

U

A

UUC

G

U

A U

A

A

GC

A

U

A GC

A C AU

G

U

U

G

U

G

A

C

A

A

A

UG

U

GC C

CU

G

CA

U

U

U

C

AG

G

U

A

CG

A

C

AU

UU

G

C

GA

G

G

U 5950

G

U C

C

A

A

U

AA

A

A

5800

U A

A U

A

A

U

AA A C

A

G

U

U

A

A AA

U

U AU

C

A G

G

GG

A

U

C

C

A

C

A

A

G C

A U

U A

U A

A U

5400

G

G

A U

G C

A

G

U

A G C

A

G

G

U

5900

C

G

U C A

A

4100

G

C

C

A

G

A

A

U

A

3750

U A

G

A

A

C

5300

5150

A

C

G

U A

A

AA

A

5350

A

U U

A

AG C

A AU

UA

A A AU

A G

UA

C

G

CC

U

GC G GA

A U

AG

C G

A

U A

G

G G

C

C

G

A

G

G C

C

A U

AA5200

U

U

U

U A

C

UG

A

UG

A

U

A

A A

U A

U C G

G C

A A U A

A UA

A

U

U

A CU G

C

A

U GG C

A

G U

C CG A

G CU

A UG

U UA

A

A

ACGUGC

CGG

AA

C

A

U

A

G

GUC

A

G

A

UU

G

C

C

G

A

C G

A

C GC

A

ACU

A A

C

C G

G

CU

U

GA

G

U

AG

U

A

A

G

G

U

A G G

3900

2950

U A

U A

A

U

A

3950

A

U A

A U

C G

U A

U

GA

G

C

A

U

U

U

A

1950

A

A

A

C

U

U

4950

A

C

U

U

A

G

A

A

G

A

U

G

A

U

A

C

G

G

C

GA

C

G

CA G

G

G

G

A

A

G

G

A

C

A A

G

C G U

G

C G U U

G

AA

G C

G C

C

U

A

1900

A

U

U

C

U

C

A

G

A

A

C

G

G

G

C

C

G

A

A

G

G A

G

A

C

G

G

C

A

U

A

U

GC

G

A

U

C

G

C

G

U

G

A

U

GG

C

U

G

G

A

U

U

A

GU

C

G

U

A

G

C

A

U

A

G

G

A

U

U

A

C

UG

A

A

A

G

A

A

GA

UCA

U

C

G

U

A

A

U

C

G

U

G

G

1850

A

C

G

6350

A

A A

A

A

C U

A C

A

A G

A

AG A U

G

U

G U

C

U U

A

U

C

G U

UU

C

C

U

G G

GA

C A

U

CC

AC

A A

G U

C A

G

G

C A

G G G

U U

G

A

A U UA

C A

G U

G

C G

A

G U

A

UG

C U

G C

C A

G

G A

CU

A

G G

A U

A C

G U

G

A C

U U

9050

C U

9100

C C

C G

A

A U

A U

UU

G U

6400

G G

A A

G U

C G

A

A

G G

CU C C A C

A

A

G G

C A A

A C

U

A A

A

U

G

A

U A

C C

U

C C

U U

G A

A

G

A C

U

C G

A

C

G G

C U

U G

U G

C U

C G

A

A

G

A

G A

A G

C G

G G

G C

A

A

C

G

C

G CA

G

U

A

G C C

U U C CG

U G 1800

CA G

A A A

G

A

A

A U G AC

A

CG U

G A A A

C

C A

G

U AA

C

U G AU

UU

C U

C

A

U

G

G A

U

A

A

G1700

U

C A

A

A

GA

U

A

A A A

A

AA

U

G

G

G

A

A G

U

C

U

A

U

C

U

U

G

U

A

A

G

A

C

C

A

U

A

G

G

AG G

A

G

1750

C

A

C U A

A A

G A

U U

U

U

AGA

C U A

G

U

C A

U

A

C

U

G

C

U

U

C

C

A

A

C

U

A

1550

U

G

U

A

G

C

A

G

A

UA

C

G U

AG

C

C U U

C

UC C G

A G G

A C G

AU U

U

C

A

U

A

CA

G G

C

GA A U 1600

U

G

C A

A

A

A

U

A

U

C

U

G

A

A

G

C

G C

A A

G

GA

1500

U

U G

C

G

A

1450

A A G A G

A

C

A

G

A 1350

U

U

A

A

C

C

A

G

U

A U

C

A

A

C

G

U

A

A

C

A

G

G

A

U

U

A

U

U GC

A

G

G

GU

U

A

A

A

G

A C 1400

A C

A

A

G

U

G

A

U A

C G

G C

AG U

C

G

A

C

U

G

G G

C

U

C

C

A G

A

CG

C A

CC G

C

AAA

G

A

A

G

A U

U G

A

G

G C

A U

C

A

C

U

C

A

G

C A A A

U G

C

A

C

U C

A

G

C

U

C A

G

C

U

G

G

C C A

C

6550

G

U

C A

U

A A

A

A

U

G

G G

A

A

C

C

U

A

6500

C

U

A

A

C

G

C

A G

C

A

U

U C

C

C

A

A

A

A

G

A

A

A C

A

U A

A

G

U

G

G U

A

A

G

A U

A

A

U U

G U

U

U G

U

U

C G

A

G U

U G

U A

G A U

A

U

A

A C

GA A AA

A

U

U

G

A

C A

G

G

U

U

U G

A

U G

A

A

A

C

AC

C

A

G

G

A

G

A C A C

U

G

U

G

U

A

A

A G

C

A A A

U G

C G A C 8950

A

C

U

A

U

G

6600

C

A C

C

A

U

G

A

G

A

A

A

C C

G

U

G

A U

G U

G G

A

U A

A A C

C A

A

A

A

A

A

G

A

G

A

U

GA

A

G

A

G

U

C

G

A

G

G

A

C

C

6750

A

G

A

A

C

A

C

U

AC

9200

A

G G

A

U

G

U

U

A

U

C

C

U

U

C

C

G

G

A

A

C

C

A

A

8250

G

U

A

A

A

U

AG

G

GG

C

A

U

U

8800 U

A

U

G

G A

G C U

C

U G

U

G A A

G G U

A

G

C

C

G

U

G

A

U

U

G

U

A

A

G C U G

G U U U

UA

U G C G

A A

A

A

C

G

G A C C

A U U C

G

A

A

A

A A A A

U

A

A A U

G

C G C U

A

A

U

A A G A

G

C

A

A

U U A

8200

A

G

G

A

A G

A

U

U

A

A U U

A

UA

AUA

A GA

A

U C

U

G

A A

C

A

G G A

C C U

CG

C

U

A

U

G

A C A

A G

C

C

A

U

A A U G

G

C

A U

A

C A

A C

U A

G U A

U G

A U

A

A C

C A

7000

C C

A

U

U

C A

A

U U AAC

A

G G

G G C

G U A G

U A A

U U C

U A

G

C A

C A U

A A

A

G U

GU G G

C

AC

U

C

A AG

G

U U C GG

CC A A

U

A UG GAC A

8550

C

C

U

A G

G

UA

A

C U

G

U

A

A C A G

C

A

G

U

GA

C

C

A

A

G

G U A

G A U U A

U

8450

G

C A

A

U

C

C

C

U

A

U

G

C U U

C

A

U

C A

G

G

A

G

U

U

C A UU

C

C G A A CC

GG U G AU

GU G G

C

G A UG

G U

8500

A

G

C

U

U

G

A

U

A

A

G

A

A

U

G

6950

U

C

G A A

U

A G

G

A

A

C

G

A G

G

A

C

AG

A

A

U

G

A

A

C

G

A

G

A

G

G

C

6900

U A U U

G C C

A A U U

CAU

U

G

C C

U U U

A C A

G G U

U A A G

U G C

G

U G U

C C

A C G

G G

U

U G A

U

U G A

A A

G

U

G

U

A

G

C

G

C

A

A

A G

A

G

A

G

A

G

U

A

U

A

C

U

A

U

C

G U

A

G

U

C GA

G

A

A

G

C

G

A

A

U

C

G

C

C

A

A

C

A

A

U

G

A

G

A

A

G

G

U

G

G

C

C

G

U

G

U

A

U

A

G

U

A

A G

A

C

U U G

C G

A

A

C

AA

C

A

AU

C U A

C C A

6850

U

G

G C U

G A

U

G

U

U G G U

U

G

A

A C

U

A

U U G U

C G G

A

U

A

C

A C C U

A

A

C A G U

U

A A U A U

C A

U

U G G

U

A

G U C G

A GU

A

A

U

G U

U

A

U

U

U

G

8300

A

G

U

A

A

U

A

G

U

U

U

C

G

U

U

A

A

G

A

U

G

G

G

U

A

A

C

G U A 8350

U

C

A

U

A A

C

G

C

G

U

C GA

C

C

U

U

C

G

8600

A

U

C

A

U

U

AC

G

AC

G

UG

U

A8750

UC C

AG A

8700

A

AU

U

GU AC A

G

UG G

A

AC

C

GA G

U

U

AG A

C C

UC U

G AU

A

G U

A

G

U

U

C A

A

U

G

G

G

C

G

U

G

G G

A

U A

G

U

C

U

G AC

C

8850

U

G

AG

G A

U

CA

G

AC

G

U

AU A G

G G GC

U

G

A

U

G C

G

A

A

C

AUG

A

GA

A

C

U

C

G C

C

U

U

AU A

G

UC GG

C

GC

U AC

A

A U A

C C

G

C U AA

G A G

C

A

9250

G

C

G

C

A

U

A

6700

A

G

A

AG

U

A

C G

U AA A A

A

G AA

G

G

A

A

A

A

C

G C

A

U

G U U

G A A

A

A U

G G GC GA C

AA

G A U

GA U A C U U

U U

A A G

A G

A

U

G G

G A U U

A U

U U

CA

C U

A

A

U

G U

A

A

A

A

G

A

A

C

U

U

C

U

U

C

G

C

A 6800

U

A A A

A

G

U

A

U

A

U A

A

C C

A A

G U

U A

U

G G

A

U U

A U

G A U

A A U

G

A

G U A

C

U

U

U U A

A

U

C

C

A

G

A

C

A

C

C

8400

G

U

C

A

G

C C

C

C G A

A

G

A

C

U

C

G

U

G

C

G

C

C

A

G

G

A

A

G U

U A

A C

U

C C

U

U

C A C

U G

G

G G

U C

U A

AA

G U

U G

A

U

C

6650

A G

A C

A

C

G

G C A

U

A G U

A U G A

8900

A

C

U

A

A

C U G

A

A

A

U C

G

A

G

A U

U

G

A

U U G

G

U

G A

GC

A A

C U

AA

A

G C G

G A G

A G

AU

U

G

U

A

U U

G

A

G

G

G G

A

A G

C

C G

C

A

C

C C

U C

G

U

A U

U

A

A

G

A

U

A

C

C

U

8650

G A

U

G

A

U

G

C

C

G

U

A

U

G

G

C

C

A

G

U

A

C

G

A

A

U

A

G

C

U

G

G

U

U

U

A

G

C

A

A

G

C

CC

G

A GA G

U

G

C

A

U

A

U

A

G

U

A

AG

GU

AG

C

UA

CCA

G

C

CA

A

G

UC

GC

UG

A

AC CA A

G

AA U AU

C

U C AG

G

UU

A

C UC A

A

U

C

U A C

AG

U

C

U

G

9150

A

C

A

A

A

A

A

C

A A C C A

A

U

A

A

U

A

G

C

U

G

A A

A A U

7150

G

C

G

A

C

AA

A

7050

U A

U

A

U G A

C

G

7100

G U

G

U G

C

C U

A

A U C

G

C

A A

C A

G U

C G

A C AC

C U

U U

U

A A

A

G A

U C

A U

C

G

A

U U G

A C

G A A

C U

A

C

U A

C

U U U

G

A

A

A

G

A A G A

U

G A

C A

C

U

A

A A A

A

G

U U

G A U A

A

A

A A A

U C U

G G

A U U

G U C

G

A C

A A

C

A

G GA

A

C U

A G A

A

G C

A

C A G

G G

A

U C

G

A U A

A G

C A

8150

7200

A A U

CG

U

G A

G A

A

A

7300

A

G A

U

C

U U

A

A

U

U A U

A

G

A

AG G GU U A

C AA A

A

A A

C C A

A

A

U

U U A

C

U

G

A

G G G

G

C

A AU G

U U A

C

A

U

A

G C

G G

G

AA A

A U G

G

A

C A G

A

A

U C U

C

G

A C A

A A

A A G

G

A

A

C

C U A

G

G

U C

A C A G

A

7250

A

G U

G

G

A

A A U G G

A

G

A

U

A

U G A U U

A

G

7350

C A C U U U

C A AA U AA U

A

A

A 8100

G

G

A

A AG C

A

A

U

A

U

G

C

G

U U

A

A

7400

A

A U

A

U A

C

U

U A

U

A

A

A

G

U U U A

A

G

A U

U

G A

U G

A

C U AU U

AC

A A

A

A

A GU

U

A G C A G U C C U

A

A U A

G

C C C

A A U

U

A G C

G UA GA U GU G U

G A

A A

A

C A

C

U

A

A

A

A

G G

G

U A U GU AA G

A

C

U G

U AA

U A

G A U A

A A

G G A G GA GA G

A

A

A

A G AG

A

A

G

G

A

U C G U C G G G A

U

U U

U

C

G CG

U U U U U C U A

G U

A

U

C

G GA C A

U A

C

C

A

U

A

AC

G U U

UG

C UG

A

C C A

7650

G A

U U C

G

G G U

A C

A

A C A

C A C A

U

C

A

G

U

U G U U U A

CA

A C CU CU U C C C C CU

C

U

G A

UG

A A A A G G A U G

C A

U

7700

7750

U

A

U A

G U

U U

A U A U C U 7600 A G A

A C

A

A A

G G

G G G

A G G

G

U

A

G G A

G U G U

C A C U

U C

G

A

A C A A A U

C

G

A

A AA A

A U

U A

G U

G

A C

C C U

C

U C

C C

A A

U G

U

A C G

C

G A G

A G

G

A A A U

C

U

A

A

G U G A

G

A G

A A

C

G

G

U GUAA

GA

A G U A

C

A

C G

G G

A

U

AG

A GA

C

GU

C

A G

U

7550

7450

U

A

A

A

A

U

C

A

U

A U A

C

G U C

A

A G

A

A A

U

A A A GAG

C A

A

U

G

AA

CA

G G

U AC

C

C

G

AA

A

U

GA

GUG

G

A C

AAUAAG

G

C A

C

7500

A

G GA

C G

G

A

U

G

U U

A

A

U

A

G G G

G

A AG

U G

C C CG

C

7800

C

U

C U C U

U

G UA

U G U G U

A

A

C A A

A

A

A

8050

U

G C

G

C C

G

G AUU

U U

G U C U

G

G A

G

U

G

A

G

U

C G

A U C

U C

C A

G G G

U U

A

U A G

A

A

G C

A

A

8000

G C

U G

A

U

G G

A G

C

G

G

A

G U C

C

U

A

A

A

C

G

U A

G

A

A

7900

G C

G

G G

C A

A U

C

U

A

A C

C

C

G

G C

U A

7950

C

G U U

G

G

U G G G

A U

G

ACA A

G

U A

A

A UU

G U

U G A

C

U C

C CA G C

G G

U U

UC

U C

G

C

A

C

C CA

G

G GC C

U

AGAG

A AG

AG CC

G UG G

C

GA U

A GA A U UC C

A

A CU A

U GG

C

C G

G CU A

G

U G

U AA

C U

A A C

U

G C GA U

G

U

7850

A G

G U

U

A

U

U

AAA

C G

A

A U

C G

G C

G C

G C

G

G

G C

A

U

G

G

U

G

G AU

A A A

G

A

C

C

A

1200

1250

C G

U G

G

U

C G

U G

U

A

A

A 9500

C

U

U

C G U

G

A

G C

G C

A

G C

C G

G

G C

A U

C G

A

A

G C

G C

A C

U C

GG

G

C

A

G

G

C

A

U A A

A U

C G

G C

A U

G

A

A

U

C

C

G

G

G

G

A U

C G

U G

G C

A

A

C

C A

C

G

C

C

U

A

U

G

U

UU

C

A

A

U

U

UA

U

C

G

A

U

A

C

G

U

A

C

C

U

G

AG

C

A

U

A

G

A

C

U

U

C

A

A

A

C

A

U

A

G

G

A

9450

U A

G C

1300

A

U

U A

A

C

G

G A

U

C

G A

A

U

A

C

U A

G

A

U

A

U

C 6450

A

G

A G

G U

A C

U C

A

C G

U G

U

C

A

G

G

CA

G

C UAA U

C

GAG

U

C

U A

C

A

A

G

U

G

G C

C

G

A U

U G

C

G

A U

U

G

G C

A

A 9350

AA

A

C

G

G

CG

C

CA

C

U

A

A

A

G U

G 9400

C G UC

UA

G

G

C

C

G C

C

A

G

U

A U

A C

C G

A U

GC

U AU

G U G

A U

A

A

G

U

U

G

A U C G

A

C

A

AG

U

A

A

A

A

A

A

G

U A

G UG

A U A

C G

C

U

A

C

A

A

C

U

A U

ACC G

AGA

A

U

A

G

A

C

C

GG

G

AC

G

G

G

C

C

A

C

G C

C

U

A

G C

C

A U

A

G

C

U U

G

G C

C G

U A

A U

G

A

U U

U U

G U

G C

G A

A C

A C

G

A

9000

U

CA CA

U

G 9300

A

C

C

G

A

A

C

G

A

C

G

G

C

U

A U

A

C

U

G

C

A

G

U

C G

C

G

A

A A

U

A

G G

A

UC

AA

G

A U

G U

A

G

A

U

C

U

A

U

G

G

U

A

A

A

U

G

A U

U A

A

U

G

A

U

A

U A

G

A

A

C U

A

G

AA

G

A

1650

A

A

U

A

G

A

A

U

A

A

G

A U

C G

G

C G

U G

A U

C G

G

U

U C

A

A

C

G

A

G GC

AAUA

UA G

C

U A

G C

U G

C G

G C

C G

C G

A U

C G

G C

U A

A

G C

A U

A U

C

G U

A U

U

C G

U G

A U

C G

G C

A U

A

GA

A

A

U

G

A

A

A

A

C

A

A

G

C

A U

U A

U A

U A

C

G

G C

A U

A U

G C

G C

G

A U

A

U

C G

C G

A A

A U

A

U

A

G

G C

A

G

G

A

G U

A

A

C

U

A

G

G

U

AU

G

UA

AA U

AG

C

U G

C

G C

U

G

A 1000

G

A

C

U

A

A

A

A

G

G

A

A

A

G

GC

AG

A

U

U G

C

A U

U A

C G

C G

A

A

U

G

U

U

U

900

A

G

A

C

U

C

A

G

G

C

A U

U

G C

A U

U G

A U

U G

A U

C G

A U

A

A

C

U

A

A G

C

U

G G

G

C G

G C

G C

G C

U G

C

C

A

C

A

U AU

C

U

C G

U

G

U

U

A

G

A

U

A

G

G

U A

C G

A

G

G

A

C

A

G

A

C

U

C C C

U

A

U

C

A

G C

G C

9700

A

G

A U

U

CC

A

A

C

A

U

U A

C G

G U

A

950

G

U

A

A

G

G

A

A

A

C

A

A

C

U G

C G

U

GG

GA U

C G

G

G

U

CA

A

UA

G

UA UA

AU

G

A GC

U

U

U

A

U

C

AG G

G

UC

U

C CA A

U

1050

AG G

C

U

A

G

AC

UC

C

A

A AA

A

G

U

C C

A

U

C

A

G

G C

A U

9650

A

A

C

A

C

G

U

G

U

G C

G U

A U

A U

C

U

A

9550

G

G C

C

A

U A

C G

A

A U

C G

A

G C

G

A

AG

U

A

G

G

A

A

A

1100

A

C G

G U

A

A U

A

A

G

C

A U

G C

UA

GA

A

A

C

A

G

C G

C G

A

A A

U C A U 9600

C

G

C G

G C

C G

C G

A U

G U

A

G

C

C G

A GC A U

AA

C

A

1150

A

G

C

A

U

A

A

U

A

U A A

G

A

A

A

C

G

A

C

U

A

A

G

A

UA

A G

U

G

A

A

850

G

G U

G C

G

U

G

A

A

9750

C

GU

GC

C

A

G

G

G

U

A

G

G

AG

G

A

G

A C

G C

UA

U

A U

C

G U

A U

G

A

U A

C G

G U

G C

GA

A

G

G

A U

C G

G

U

AU

U

G

G

A

U A

A

UC

C

U

C

G

GA

AG

G

A

C

700

G

G

U U

CU

A

G

C

A

A

A

U

G U

G C

U A

G C

A U

G C

G C

G G C

CU

A

G

G

G

G

U

A

CUGGA

GG C C

G CC G

U

A

AG

G

A

C G

G

C

C G

A

G

A

G

G

G

A

A

U

U

350 A

GAC

UUU A

G C

AG UC

C

G UG GC C

G UG AC U

A U A GU

G C

G C C G

A U

G C

U A

C G

G

G CC G

U G

A

U

A U U 400

C G

G

U

C G C A

C

U G

C

U C

U

G

U

G

A

C

G

G

G

C

A A

C

G

G G U

U

U

U

CCA

A

A

A

C

AA

C

A

U

GG

G

C A

UCCGGG

U GAA CU

U

G

G

GA

C

U

A

C AC

G UG

AG

A C GA

AG C A

A

U U UA

C A

A U

50

C

U A

G C

GA C

U

C

G UC

U AG C

C GA U

A

G C

G

G

U

A

A U

U U

G

C G

A 200

U

A U

A U C G

C G

G

A

G C

A

C A

A

G A

G

A

GA

AU

C U A

A

A

C

C

G G

C U A

UU 150

G C G C U

A

UA AU

CA

C

U

C

C GU

A

G UG

U A

U

G

CG C

A

A C

G

G C

C

A

C

U

GCU

G

A

U

A

C

U

C

G

A

UCG

AG

UU

UA

U

C

U

C

G

C

G A U

C

550

A

C

G

C

G

C

GU

C

AG

C G

AA A

UU U

CA

GU

C

GC A U

UG

CU G

GA C

GU G 600

UG U

UC

G C

G

500

G

C

AU

750

C

U

A G

A

U

G

C

G

GA

CU

C A U

GA

G

C

C A U

A

G

U U A

CU

C

G

C

G

G G G

U

A

U

C

U C G C

U G

C

G

C

A

U

U

A

G

C G

U

U C

U

G

AU

C

G

A G A

U A

U

U

C

GC

U

G

AG

C

C

A

G

U

A

U

G A

C C

G

U G

A

C C

GG

CG

AU A

AA U

CA

G

AA

AU

G

C

G

U

U

A

U

G

UA

650

C

U

C

U

G

U A A

5’

C G

C G

C G

G C

A U

G

C

G

U

G C

A

C

450

U

A

A

A

A

U

U

U

G

C

C

A

GA

U

A

A

A

A

G

G

U A

C

G

ACG

CU

A

G

GG

C

CC

GG

U

CU

G

CG

GG

C

G

A

G

C

G

G

C UA

AG G

C U A G A

C U

U G

A

UU

U

U

A

A

A

A

800

G

A

C

C

C

G C

C G

A

A

GA

AU

C C

C

U G

A

C

A

C

C

A

G

G

100

C

U G

G

C G

U G

AU U

G

U

C G

G

G CG C A

A

A

C

CA

CG

G

U A U

AU

G

G

C C G A

G C A

U A

U A C

AU GG C

A U

C G

300

A

G

AA UU

UG AC

C U AC

GC G

A A

A

C

U

U A

A

250

G U

A U

U A

C G

A

C G

G U

G

A

C G

C G

A

G

C G

A

G

U

U U

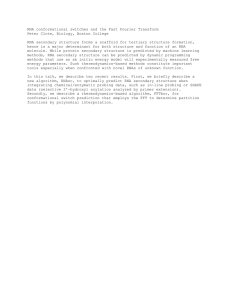

Figure 1: The optimal secondary structure of an HIV-1 virus with 9,781 nucleotides predicted

using GTfold in 84 seconds using 16 dual core CPUs. The minimum free energy of the

structure is -2,879.20 Kcal/mole.

Viral sequences range in length from about 1,000 to over 1,000,000 nucleotides in the

recently discovered virophage. Length of the viral sequences poses significant computational

challenges for the current computer programs. Free energy minimization excluding pseudoknots is a conventional approach for predicting secondary structures. The mfold [20, 11] and

RNAfold [9] programs are the standard programs used by the molecular biology community

for the last several decades. Recently, other folding programs such as simfold [1] have been

developed. These programs predict structures with good accuracy for the RNA molecules

2

having fewer than 1,000 nucleotides. However, for longer RNA molecules, prediction accuracy is very low.

According to the thermodynamic hypothesis, the structure having the minimum free

energy (MFE) is predicted as the secondary structure of the molecule. The free energy of

a secondary structure is the independent sum of the free energies of distinct substructures

called loops. The optimization is performed using the dynamic programming algorithm given

by Zuker and Stiegler in 1981 [21] which is similar to the algorithm for sequence alignment

but far more complex. The algorithm explores all the possibilities when computing the MFE

structure. There are heuristics and approximations which have been applied to satisfy the

computational requirements in the existing folding programs.

One potential approach to improve the accuracy of the predicted secondary structures

is to implement advanced thermodynamic details and exact algorithms. However, while the

incorporation of these improvements can significantly increase the accuracy of the prediction,

it also drastically increases running time and storage needs for the execution. We use shared

memory parallelism to overcome the computational challenges of the problem.

We have designed and implemented a new parallel and scalable program called GTfold

for predicting secondary structures of RNA sequences. Our program runs one to two orders

of magnitude faster than the current sequential programs for viral sequences on an IBM P5

570, 16 core dual CPU symmetric multiprocessor system and achieves comparable accuracy

in the prediction. We have parallelized the dynamic programming algorithm at a coarsegrain and the individual functions which calculate the free energy of various loops at a

fine-grain. We demonstrate that GTfold executes exact algorithms in an affordable amount

of time for large RNA sequences. Our implementation includes an exact and optimized

algorithm in place of the usually adopted heuristic option for internal loop calculations, the

most significant part of the whole computation. GTfold takes just minutes (instead of 9

hours) to predict the structure of a Homo sapiens 23S ribosomal RNA sequence with 5,184

nucleotides. Development of GTfold opens up the path for applying essential improvements

in the prediction programs to increase the accuracy of the predicted structures.

The algorithm has complicated data dependencies among various elements, including

five different 2D arrays. The energy of the subsequences of equal length can be computed

independently of each other without violating the dependencies pattern introduced by the

dynamic programming with a set of five tables. Our approach calculates the optimal energy

of the equal length sequences in parallel starting from the smallest to the largest subsequences and finally the optimal free energy of the full sequence. We also describe the nature

of individual functions for calculating the energy of various loops and strategies for parallelization.

2

Related Work

Several approaches exist for RNA secondary structure prediction. While this paper focuses on

free energy minimization, another approach [15] predicts secondary structures by computing

the structures of smaller subsequences and using them to rebuild the full structure. Though

3

this latter approach runs fast, it is an approximation, and can miss the candidates that do

not follow the usual behavior. Also, the success of these kinds of approaches is dependent

upon the ability of rebuilding methods to identify motifs correctly by consistently combining

the substructures into a full structure.

Several distributed memory implementations of RNA secondary structure prediction have

been developed; however, they may not be portable to current parallel computers (e.g.,

[12, 5]) or do not store the tables necessary for finding suboptimal structures (e.g., see

[9]). For instance, Hofacker et al. [9] partition the triangular portion of 2D arrays into

equal sectors that are calculated by different processors in order to minimize the space

requirements and data is reorganized after computing each diagonal. Traceback for the

suboptimal secondary structures is not possible because it requires the filled tables. In [8],

the authors observe that to fold the HIV virus, memory of 1 to 2GB is required, dictating

the use distributed memory supercomputers; yet in our work, we demonstrate that this can

now be solved efficiently on most personal computers. In our work, for the first time, we

give scientists the ability to solve very large folding problems on their desktop by leveraging

multicore computing.

Zhou and Lowenthal [19] also studied a parallel, out-of-core distributed memory algorithm

for the RNA secondary structure prediction problem including pseudoknots. However, their

approach does not implement the full structure prediction but rather studies a synthetic

data transformation that improves just one of the dependencies found in the full dynamic

programming algorithm.

3

RNA Secondary Structure

RNA molecules are made up of A, C, G, and U, nucleotides which can pair up according to

the rules in {(A,U), (U,A), (G,C), (C,G), (G,U), (U,G)}. Nested base pairings result into

2D structures called secondary structures. There are 3D interactions among the elements of

the secondary structures which result into 3D structures called tertiary structures. Pairings

among bases form various kinds of loops, which can be classified based on the number of

branches present in them. Nearest neighbor thermodynamic model (NNTM) provides a set

of functions and sequence dependent parameters to calculate the energy of various kinds of

loops. The free energy of a secondary structure is calculated by adding up the energy of all

loops and stacking present in the structure.

Figure 2 shows an MFE secondary structure predicted by GTfold of a sequence with 79

nucleotides. Various loops annotated in the figure are named as hairpin loops, internal loops,

multiloops, stacks, bulges and external loops. Loops formed by two consecutive base pairs

are called stacks. Loops having one enclosed base pair and one closing base pair are called

internal or interior loops. Internal loops with length of one side as zero are called bulges.

Loops with two or more enclosed base pairs and one closing base pair are called multiloops

or multibranched loops. The open loop which is not closed by any base pair is called an

external or exterior loop.

4

Hairpin Loop

30

A A

A

C

A G G

C C

G G

C C

G G

C

20

A

A

Internal Loop

A A

A

A

C

A A

G

C

40

G

G

Multiloop

Hairpin Loop

C

C

10

G

G

A

C

G

A A

50

A

C

A

A

A

A

G CA

C G

G C

C G

5’

G C

C G

A

A A

A

C G C G C G

A

G C G C G C

A

A

A

A

A

70

Stack

External Loop

Figure 2: A sample RNA secondary structure with 79 nucleotides.

5

60

4

Thermodynamic Prediction Algorithm

Prediction of secondary structures with the free energy minimization is an optimization

problem like the Smith-Waterman local alignment algorithm. There is a well-defined scoring function which can be optimized via dynamic programming, and structures achieving

the optimum can be found through traceback. However, while sequence alignment can be

performed with one table and a relatively simple processing order, RNA secondary structure

prediction requires five tables with complex dependencies. Each class of loop has a different

energy function which is dependent upon the sequence and parameters. For the internal

loops and multiloops with one or more branches, all enclosed base pairs need to be searched

which makes the loop optimal for the closing base pair.

The algorithm can be defined with recursive minimization formulas. Simplified recursion

formulas are reproduced here from [10] for convenience. Pseudocode of our algorithm that

implements thermodynamically equipped recursion formulas is presented in Appendix A.

Consider an RNA sequence of length N, free energy W (N), and index values i and j which

vary over the sequence such that 1 ≤ i < j ≤ N. The optimal free energy of a subsequence

from 1 to j is given with the following formula:

W (j) = min{W (j − 1), min {V (i, j) + W (i − 1)}}

1≤i<j

(1)

In Eq. (1), V (i, j) is the optimal energy of the subsequence from i to j, if it forms a base

pair (i, j). It is defined by the following equation.

⎧

eH(i, j),

⎪

⎪

⎨

eS(i, j) + V (i + 1, j − 1),

(2)

V (i, j) = min

V BI(i, j),

⎪

⎪

⎩

V M(i, j)

Eq. (2) considers loops that a base pair (i, j) can close. The eH(i, j) function returns the

energy of a hairpin loop closed by base pair (i, j). Function eS(i, j) returns the energy of a

stack formed by base pairs (i, j) and (i + 1, j − 1). V BI(i, j) and V M(i, j) are the optimal

free energies of the subsequence from i to j in the case when the (i, j) base pair closes an

internal loop or a multiloop, respectively.

V BI(i, j) =

min

{eL(i, j, i , j ) + V (i , j )}

i<i <j <j

(3)

where, i − i + j − j − 2 > 0.

The formulation of the multiloop energy function has linear dependence upon the number

of single stranded bases present in the multiloop. The standard is to introduce a 2D array

W M to facilitate the calculation of V M array. Eq. (4) and (5) shows calculations of W M(i, j)

and V M(i, j) respectively.

⎧

V (i, j) + b,

⎪

⎪

⎨

W M(i, j − 1) + c,

W M(i, j) = min

(4)

W M(i + 1, j) + c,

⎪

⎪

⎩

mini<k≤j {W M(i, k − 1) + W M(k, j)}

6

V M(i, j) =

min

{W M(i + 1, h − 1) + W M(h, j − 1) + a}

i+1<h≤j−1

(5)

These minimization formulas can be implemented recursively as well as iteratively. We

implement an iterative formulation of the algorithm in GTfold, described later in this paper.

The implementation uses various 1D and 2D arrays corresponding to W (j) and V (i, j),

V BI(i, j), V M(i, j), W M(i, j) values. Also we use calcW (j), calcV (i, j), calcV BI(i, j),

calcV M(i, j) and calcW M(i, j) functions to calculate the values of W (j), V (i, j), V BI(i, j),

V M(i, j) and W M(i, j) array elements.

4.1

Parallelism

The dynamic programming algorithm is computationally intensive both in terms of running

time and storage. Its space requirements are of O(n2 ) as it uses four 2D arrays named V (i, j),

V BI(i, j), V M(i, j) and W M(i, j) that are filled up during the algorithm’s execution. The

main issue is running time rather than memory requirements. For instance, GTfold has a

memory footprints of less than 2GB (common in most desktop PCs) even for sequences with

10,000 nucleotides.

The filled up arrays are traced in the backwards direction to determine the secondary

structures. The traceback for a single structure takes far less time than filling up these

arrays. Time complexity of the dynamic programming algorithm is O(n3) with the currently

adopted thermodynamic model. The two indices i and j are varied over the entire sequence,

and every type of loop for every possible base pair (i, j) is calculated. This results in the

asymptotic time complexity of O(n2 )× maximum time complexity of any type of loop for a

base pair (i, j).

Computations of internal loops and multiloops are the most expensive parts of the algorithm. We can see from Eq. (3) that, in the calculation of V BI(i, j), all possible internal

loops with the closing base pair (i, j) are considered by varying indices i and j over the

subsequence from i+1 to j −1 such that i < j . This results in the overall time complexity of

O(n4 ). To avoid large running time, a commonly used heuristic is to limit the size of internal

loops to a threshold k usually set as 30. This significantly reduces running time from O(n4 )

to O(k 2 n2 ). The heuristic is adopted in most of the standard RNA folding programs.

Lyngsø et al. [10] suggest that the limit is a little bit small for predictions at higher

temperatures and give an optimized and exact algorithm for internal loop calculations which

has the time complexity of O(n3 ) with the same O(n2) space. The algorithm searches for

all possible internal loops closed by base pair (i, j). Practically, this algorithm is far slower