Experiences with Recombinant Computing: Exploring Ad Hoc Interoperability in Evolving Digital Networks

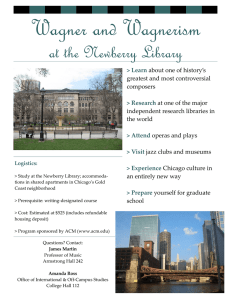
