The Robust Estimation of Multiple Motions: Parametric and Piecewise-Smooth Flow Fields M

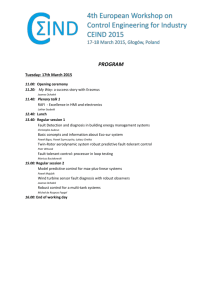
