Speed-area optimized FPGA implementation for Full Search Block Matching

advertisement

Speed-area optimized FPGA implementation for Full Search Block Matching

Santosh Ghosh and Avishek Saha

Department of Computer Science and Engineering, IIT Kharagpur, WB, India, 721302

{santosh, avishek}@cse.iitkgp.ernet.in

Abstract

ture for FSBM was proposed in [3]. Both [13] and [1]

proposed low-power architectures based on removal of unnecessary computations. Finally, a novel low-power parallel tree FSBM architecture was proposed in [6], which

exploited the spatial data correlations within parallel candidate block searches for data sharing and thus effectively

reduces data access bandwidth and power consumption. [7]

proposed an FPGA architecture to implement parallel computation of FSBM. Systolic array and novel OnLine Arithmetic (OLA) based designs for FSBM were proposed in [8]

and [9], respectively. Customizable low-power FPGA cores

were proposed by [10]. [11] evaluated the performance of

FSBM hardware architectures [4] implemented on Xilinx

FPGA. The results show that, real-time motion estimation

for CIF (352 × 288) sequences can be achieved with 2-D

systolic arrays and moderate capacity (250 k gates) FPGA

chip. An adder-tree based 16 × 1 SAD FPGA hardware was

implemented by [17].

This paper presents an FPGA based hardware design

for Full Search Block Matching (FSBM) based Motion Estimation (ME) in video compression. The significantly

higher resolution of HDTV based applications is achieved

by using FSBM based ME. The proposed architecture uses

a modification of the Sum-of-Absolute-Differences (SAD)

computation in FSBM such that the total number of additions/subtraction operations is drastically reduced. This

successfully optimizes the conflicting design requirements

of high throughput and small silicon area. Comparison results demonstrate the superior performance of our architecture. Finally, the design of a reconfigurable block matching

hardware has been discussed.

1 Introuction

The aforementioned FSBM architectures can be divided

into two categories, namely, FPGA [7, 8, 9, 10, 11, 17] and

ASIC [4, 15, 18, 2, 3, 20, 5, 19, 13, 1, 6]. This work uses

FPGA technology to implement a high-performance ME

hardware with due consideration to (a) processing speed

and (b) silicon area. Almost all aforementioned VLSI architectures optimize any one of these parameters. The novelty

of the proposed architecture lies in its combined optimization of the aforementioned conflicting design requirements.

The proposed hardware uses an initially-split pipeline to reduce processing cycles for each MB and thus increases the

throughput. In addition, this design requires less number of

adders and only one Absolute Difference (AD) PE, which

drastically reduces the silicon area when compared to other

existing designs. The pixels of the search regions have been

organized in memory banks such that two sets of 128-bit

(16 8-bit pixels) data can be accessed in each clock cycle.

Rapid growth in High-Definition (HD) digital video applications has lead to an increased interest in portable HDquality encoder design. HD-compatible MPEG2 MP@HL

encoder uses Full Search Block Matching Algorithm (FSBMA) based Motion Estimation (ME). The ME module accounts for more than 80% of the computational complexity of a typical video encoder. Moreover, the power consumption of an FSBM-based encoder is prohibitively high,

particularly for portable implementations. Hence, efficient

ME processor cores need to be designed to realize portable

HDTV video encoders.

Parameterizable FSBM ASIC design to solve the input

bandwidth problem by using on-chip line buffers was proposed in [15]. [18] proposed a family of modular VLSI architectures which allow sequential inputs but perform parallel processing with 100 percent efficiency. A systolic mapping procedure to derive FSBM architectures was proposed

in [4]. The designs of ([2], [20]) and [5] focused on the

reduction of pin counts by sharing memory units and 2dimensional data reuse, respectively. [19] improved the

memory bandwidth by using an overlapped data flow of

search area which increased the processing element (PE)

utilization. A low-latency high-throughput tree architec-

1-4244-1258-7/07/$25.00 ©2007 IEEE

Section 2 gives an overview of FSBM-based motion estimation. Section 3 presents a brief discussion on SAD

modifications and describes the proposed FSBM hardware.

The implementation and comparative results have been presented in Section 4. Section 5 presents a reconfigurable address generator. Finally, Section 6 concludes this paper.

13

2 FSBM-based Motion Estimation

The detailed proof of the above derivation can be found

in [12]. Again, it can be posited that, if,

Motion-compensated video compression models the

pixel motion within the current picture as a translation of

those within a previous picture. The motion vector is obtained by minimizing a cost function measuring the mismatch between the current MB in current frame and the

candidate block in reference frame. SAD, the most popular

cost function, between the pixels of the current MB x(i, j)

and the search region y(i, j) can be expressed as,

N

−1

−1

N

−1 N

−1 N

x(i, j) −

y(i + u, j + v) ≥ SADmin

SAD(u, v) =

−1

N

−1 N

|x(i, j) − y(i + u, j + v)|

i=0 j=0

then,

SAD(u, v) ≥ SADmin

(1)

where, (u, v) is the displacement between these two blocks.

Thus, each search requires N 2 absolute differences and

(N 2 − 1) additions. The FSBMA exhaustively evaluates

all possible search locations and hence is optimal in terms

of reconstructed video quality and compression ratio. High

computational requirements, regular processing scheme and

simple control structures make the hardware implementation of FSBM a preferred choice.

3.2

(3)

Pipelined SAD Operator

The SAD hardware for FSBMA has been divided into

eight independent sequential steps. It computes the initial

full SAD for the first Search Location (SL) and derives the

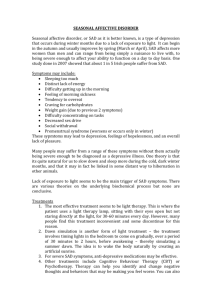

SAD sums for subsequent SLs. Fig. 1 shows the data path

of the proposed SAD operator for N = 16.

Stages 1 to 4 of the proposed design have been split to

facilitate parallel processing. Each half-stage (from Stage 1

to Stage 4) computes the sum of 16 pixel values per clock

cycle. These partial sums are accumulated in SR and M B

registers of Stage 6. Initially, the SR and MB registers

of Stage 6 are initialized to 0. For the first SAD calculation, Stage 5 just passes the intermediate addition result

of Stage 4 to Stage 6. This can be achieved by setting the

S0 control signal of Stage 6 to 0. Thus, the SAD sum of

the candidate MB and the first SL can be computed in 6 (for

the six stages of the pipeline) + 15 (to add 16 values) = 21

cycles. Thereafter, for every subsequent SL, the right and

the left half-stages add the pixel intensities of the old and

new rows/coloumns, respectively. At this point, Stage 5

is activated by enabling the S0 control signal. This stage

differentiates the resultant sum of the two half-stages and

accumulates the result in SR register of Stage 6. Stage 7

computes the AD between the older MB sum and the newly

obtained SL sum. Finally, Stage 8 compares the new SAD

with the existing SADmin and stores the minimum SAD

sum obtained so far. Thus, at each clock cycle, the proposed pipelined architecture computes one new SAD value

and stores the minimum SAD. Hence, with a search region

size of p = 16, this hardware can search the best match for

an MB in only [(2p + 1)2 − 1] + 23 clocks = 1111 clock

cycles.

Table 1: Execution profile of a typical video encoder

SAD

ME/

DCT/

Q/IQ

VLC/ Others

MC

IDCT

VLD

72.28% 16.85% 6.17% 2.35% 1.45% 0.32%

The execution profile of a standard video encoder obtained using the GNU gprof tool has been shown in Table 2.

The table shows that motion estimation is the most computationally expensive module in a typical video encoder. In

addition, SAD computations take the maximum time due to

complex nature of absolute operation and subsequent multitude of additions.

3 Proposed FSBM Architecture

In this section we delineate our proposed speed-area optimized FSBM architecture. The first subsection briefly explains the SAD modification and the MB searching technique. The subsequent subsections describe the proposed

hardware and the memory organization.

SAD modification

This section presents a modification to SAD computation. The SAD expression in Eq. 1 can be re-written as,

N −1 N −1

−1

N

−1 N

SAD(u, v) ≥ x(i, j) −

y(i + u, j + v)

i=0 j=0

(by Eq. 2)

where SADmin denotes the current minimum SAD

value. Thus, if Eq. 3 is satisfied, then the SAD computation at the (u, v)th location may be skipped.

In addition, if X(u, v) be the sum of pixel intensities at

the (u, v)th MB location, then this sum can be derived from

X(u − 1, v) by subtracting and adding the intensity sum of

columns at specific positions. Based on this fact, [12] proposes a search strategy to efficiently derive and compute the

MB sums at successive locations. The MB search technique

used in our proposed design adopts this particular approach.

i=0 j=0

3.1

i=0 j=0

i=0 j=0

(2)

14

Pipeline Stages

+

+

+

+

+

+

+

+

+

+

+

+

+

(1)

(2)

(3)

+

+

+

+

+

+

_

0 1

SR

+

+

+

+

+

+

+

(4)

+

+

+

+

(5)

S0

+

AD

(6)

MB

+

(7)

SAD

a< b

1 0

(8)

Figure 1: Data path of different pipeline stages of the proposed SAD unit

3.3

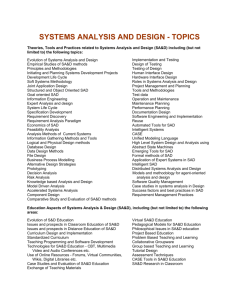

Memory Organization

left or right the oldest column of the pervious search location is subtracted from one new column in the new search

location. This implies that, at every clock, we need to access

two 128-bit (16 × 8) data from the memory. These 128-bit

data are basically represented as a part of one column in

the search region (Fig.3), e.g., [P1,1 , P2,1 , P3,1 , ..., P16,1 ] is

one such 128-bit data, which belongs to the column 1 of the

search region. It is observed that the one of the columns

from column number 17 to 32 are accessed concurrently

with another column from rest of the columns, i.e., 1 to 16

and 33 to 48, in the pre-defined search region. Therefore,

the pixels have been organized in two different memory

banks, as shown in Fig. 2. The data in these memory banks

are organized in column major format so that the whole column can be accessed by a single memory access. The memory controller generates the right address at every clocks for

both the memory banks. The selected 384 bits (48 pixels

of a single column of Fig.3) of each bank are then multiplexed and the correct 16 pixels are passed onto the SAD

processing unit.

Our design adopts the MB scanning technique proposed

in [12]. The pixels in p = 16 search region are represented

by Pi,j where 0 ≤ i ≤ 48 and 0 ≤ j ≤ 48 (shown in

Fig. 3)). This search region has (2p + 1)2 = 332 = 1089

search locations.

1

row number

1

2

3

2

3

column number

….

….

….. …. 48

P1,1 P1,2 ………

….

….

….. …. P1,48

P2,1 P2,2 ………

….

….

….. …. P2,48

.

.

.

.

.

.

48

P48,1 P48,2 ………

….

….

When the search location is moved down from the previous position, then we need to access two set of row pixels. This is not possible by the previously organized memory banks in one clock. It is easily observed Fig. 3 that

either the first 16 pixels or the last 16 pixels of a single

row have to be accessed for this purpose. It is also to

be observed that, for the even row number, the first 16

…. …. P48,48

Figure 3: Position of Pixels in the search region

by

Initially,

sum of the first search location is computed

16 the

16

j=1

i=1 Pi,j equation. Thereafter, to move towards

15

1

1

2

3

.

.

2

3

row number

….

….

3

….

….

….. …. 16

….. …. 48

1

P 1,33 P 1,34 …..…

….

….

….. …. P 1,48

P 2,1 P 2,2 ………

….

….

….. …. P2,16

P 3,33 P 3,34 …..…

….

….

….. …. P 3,48

P1,1 P2,1 …..…... P16,1

….

P32,1

…. P48,1

2

P1,2 P2,2 …..…... P16,2

….

P32,2

…. P48,2

3

P1,3 P2,3 …..…... P16,3

….

P32,3

…. P48,3

RB1

2

3

16 P1,16 P2,16 …..…. P16,16

33 P1,33 P2,33 …..…...P16,33

….

….

P32,16

…. P48,16

P32,33

…. P48,33

….

….

….. …. P16,16

33 P33,33 P33,34 …...

….

….

….. …. P33,48

P 32,17

…. P48,17

18 P1,18 P2,18 ….... P16,18

….

P 32,18

…. P48,18

19 P1,19 P2,19 ….... P16,19

….

P 32,19

…. P48,19

….

P 32,32

…. P48,32

.

.

.

48 P1,48 P2,48 …..…...P16,48

….

P32,48

…. P48,48

(a)

48 P48,1 P48,2 ………

….

….

(b)

…. …. P48,16

(c)

1 2 3

….

….

17 P17,33 P17,34 …...

….

18 P18,1 P18,2 ……

….

19 P19,33 P19,34 …..

.

32 P32,1 P32,2 …….

….

RB2

.

….. …. 48

….

32 P1,32 P2,32 ….... P16,32

16 P16,1 P16,2 …….

.

row number

….

….

17 P1,17 P2,17 ….... P16,17

.

RB3

column number

1

2

column number

1

….

….. …. 16

….

….. …. P17,48

….

….. …. P18,16

….

….. …. P19,48

….

….. …. P32,16

(d)

Figure 2: Organization of pixels in [(a),(c)] column major/[(b),(d)] row major format that are added or subtracted during the

shift of search in left or right/down locations, respectively. (c) and (d) represent the corresponding 2nd column/row memory

banks that are independent of the 1st column/row memory banks shown in (a) and (b), respectively

(Pi,1 , Pi,2 , ... , Pi,16 when i is even) and for the odd row

the last 16 (Pi,33 , Pi,34 , ... , Pi,48 when i is odd) pixels are

accessed to handle the downward movement of the search

location. Hence, we have stored the required row values in

another two memory banks. One is 32 × 128 − bit, to store

32 such row pixel sets and the another one is 16 × 128 − bit,

to store 16 such row pixel sets. Thus, the design needs only

768 bytes of overhead memory. The organization of this

memory banks and the stored pixels are shown in Fig. 2.

In order to reduce the total number of memory accesses in FSBM-based architecture, data reuse can be performed [14] at four different levels. Our on-chip memory

bank organization technique adopts the data reuse defined

as Level A and Level B. Level A describes the locality of

data within the candidate block strip where the search locations are moving within the block strip. Level B describes

the locality among the candidate block strips, as vertically

adjacent candidate block strips are overlapped. In our design this memory organization primarily based on the usage

of Look Up Tables (LUT) in the FPGA implementation.

sized on a Xilinx Virtex IV 4vlx100ff1513 FPGA. The synthesis results show that design requires 333 CLB Slices, 416

DFFs/Latches and a total of 278 input/output pins. The area

of the implementation is 380 look-up tables (LUTs) and the

highest achievable frequency is 221.322 MHz.

The pipelined design takes 23 clock cycles to produce

the first SAD value. Thereafter, one SAD value is generated

in every cycle. A search range of p = 16 has (2p + 1)2 =

1089 search locations. So for a search range of p = 16, the

number of cycles required by our hardware to find the best

matching block is, 23 (for the first search location) + (10891) (for the remaining search locations) = 1111 cycles.

Our FPGA implementation works at a maximum frequency of 221.322MHz (4.52 ns clock cycle). Hence, the

FPGA implementation can process a MB (16x16) in 5.022

usec (1111 clock cycles per MB * 4.52 ns per clock cycle = 5.022 usec) and a 720p HDTV (1280x720) frame in

18.078 msec (3600 MBs per frame * 5.022 usecs per MB

= 18.078 msec). At this speed, the proposed hardware can

process 55.33 720p HDTV frames per second. This is a

big improvement over other approaches, where the frames

processed per second is much lower. This is evident from

Table 2. The high speed and throughput of our design is

mainly because of the modified SAD operation and the split

pipeline design of the proposed architecture.

4 Performance Analysis

This section presents the implementations results of the

proposed hardware. Subsequently, it compares the obtained

results with other exiting FPGA based designs.

4.1

4.2

Implementation Results

Performance Comparison

This subsection compares the hardware features and

performance of the proposed design with existing FPGA

architectures. No comparison has been made with available

ASIC solutions.

The proposed design has been implemented in Verilog HDL and verified with RTL simulations using Mentor

Graphics ModelSim SE. The Verilog RTL has been synthe-

16

Table 2: Comparison of hardware features and performance with N=16 and p=16

Feature-based comparison

Performance

Design

cycles

Freq

CLB Input AD

Adders

Comp HDTV ThroughT

/MB

(MHz)

Slices Ports PEs

720p

put (T)

Area

(fps)

(MBs/sec)

Loukil et al. [7]

8482

103.8

1654

48

33

33 8-bit

17

3.4

12237.7

7.4

(Altera Stratix)

Mohammad et al. [8] 25344

191.0

300

2

33

33 8-bit

34

2.09

7536.3

25.1

(Xilinx Virtex II)

Olivares et al. [9]

27481

366.8

2296

2

256

510 1-bit

1

3.71

13347.4

5.8

(Xilinx Spartan)

Roma et al. [10]

2800

76.1

29430

3

256

15 8-bit

1

7.55

27178.6

0.92

(Xilinx XCV3200E)

Ryszko et al. [11]

1584

30.0

948

16

256

16 8-bit

1

5.26

18939.4

11.9

(Xilinx XC40250)

Wong et al. [17]

45738

197.0

1699

32

16

243 8-bit

1

1.2

4307.1

2.5

(Altera Flex20KE)

Our

1111 221.322

333

256

1

16 8-bit, 8

1

55.33

199209.7 598.2

(Xilinx Virtex IV)

9-bit, 4 10bit, 3 11-bit

& 2 16-bit

stantially high throughput/area value of 598.2.

Table 4.1 compares the hardware features of the proposed and existing FPGA solutions for a macroblock (MB)

of size 16 × 16 and a search range of p = 16. As can

be seen, our design consumes less cycles per MB, has the

highest maximum operating frequency. The splitting of the

initial stage of the pipeline facilitates this high speed. The

area required in terms of CLB slices and the hardware complexity in terms of AD PEs (Absolute Difference Processing

Elements), adders and comparators are much lesser for the

proposed architecture. Modification of the SAD operation

contributes to the high speed and less area and hardware

complexity. The use of memory banks has led to higher

on-chip bandwidth. However, this has also led to the only

drawback of our design, which is the high number of input/output pins.

5 Reconfigurable Block Matching Hardware

Apart from using the full pattern, block matching can

also be performed by using N-queen decimation patterns. It

has been shown [16] that the N-queen patterns have similar

PSNR drop but yield much faster encoding performance as

compared to the full pattern, particularly for N = 4 and

N = 8. This section presents a reconfigurable hardware design to find the minimum SAD value by selecting any one of

the full-search, 8-queen or 4-queen decimation techniques.

To the best of our knowledge no similar hardware design

exists in literature.

For both 4-queen and 8-queen decimation techniques,

the pixels being processed for two consecutive SAD-based

block matching are mutually independent. This fact can be

utilized to further enhance the performance of the SAD operator discussed in section 3. Only the memory organization and the address generation at each clock will differ for

the three decimation patterns. It has been observed that the

reconfigurable address generator and SAD operator require

only 40% and 2% extra hardware cost, respectively, as compared to the already proposed full pixel architecture.

The reconfigurable address generator uses a common

datapath. Two consecutive addresses are represented by

their respective bit value differences. For each decimation technique, the bit value is toggled following some predefined patterns. Bit toggling of the 8-bit address lines are

A performance comparison of the various architectures

has been also shown in Table 4.1. In order to compare

the speed-area optimized performance of different architectures, the new performance criteria of throughput/area has

been used. Higher the throughput/area parameter of a design, more is the speed-area optimization of the architecture. The architectures have been compared in terms of

(a) number of HDTV 720p (1280x720) frames that can be

processed per second, (b) throughput or MBs processed per

second, (c) throughput/area, and (d) the I/O bandwidth. As

can be seen, the proposed design has a very high throughput and can process the maximum number of HDTV 720p

frames per second (fps). Moreover, the superior speed-area

optimization in the proposed design is exhibited by its sub-

17

controlled by their respective enable signals which are being generated by one special controller logic. This state machine based controller generates the respective enable signals depending on 2-bit decimation mode select input signals. The pipelined datapath shown in Fig. 1 can also be reconfigured according to the user specified decimation mode.

In case of 8-queen on 16 × 16 block size, 32 pixel values are

added at every clock by both halves of the pipe stages from

one to five. The resultant value is directly used to perform

absolute difference with the MB to calculate current SAD

value. The same datapath of the pipelined SAD operator

also performs the SAD calculation for 4-queen decimation.

This technique requires 64 pixels for each SAD value for

16 × 16 block size. So, the pipeline is reconfigured in a way

such that its both halves from stage one to five and stage

six are used to perform the addition of these 64 pixel values. Subsequently, it performs sum of absolute differences

to get the new SAD.

[6] S. Lin, P. Tseng, and L. Chen. Low-power parallel tree architecture for full search block-matching motion estimation.

In Proc. of Intl. Symp. Circ. and Sys., volume 2, pages 313–

316, May 2004.

[7] H. Loukil, F. Ghozzi, A. Samet, M. Ben Ayed, and N. Masmoudi. Hardware implementation of block matching algorithm with fpga technology. In Proc. Intl. Conf. on Microelectronics, pages 542–546, Dec 2004.

[8] M. Mohammadzadeh, M. Eshghi, and M. Azadfar. Parameterizable implementation of full search block matching algorithm using fpga for real-time applications. In Proc. 5th

IEEE Intl. Caracas Conf. on Dev., Circ. and Sys., Dominican

Republic, pages 200–203, Nov 2004.

[9] J. Olivares, J. Hormigo, J. Villalba, I. Benavides, and E. Zapata. Sad computation based on online arithmetic for motion

estimation. Jrnl. Microproc. and Microsys., 30:250–258, Jan

2006.

[10] N. Roma, T. Dias, and L. Sousa. Customisable core-based

architectures for real-time motion estimation on fpgas. In

Proc. of 3rd Intl. Conf. on Field Prog. Logic and Appl., pages

745–754, Sep 2003.

[11] A. Ryszko and K. Wiatr. An assesment of fpga suitability

for implementation of real-time motion estimation. In Proc.

IEEE Euromicro Symp. on DSD, pages 364–367, 2001.

[12] A. Saha and S. Ghosh. A speed-area optimization of full

search block matching with applications in high-definition

tvs (hdtv). In To appear in LNCS Proc. of High Performance

Computing (HiPC), Dec 2007.

[13] L. Sousa and N. Roma. Low-power array architectures for

motion estimation. In IEEE 3rd Workshop on Mult. Sig.

Proc., pages 679–684, 1999.

[14] J. Tuan and C. Jen. An architecture of full-search block

matching for minimum memory bandwidth requirement. In

Proceedings of the IEEE GLSVLSI, pages 152–156, Feb

1998.

[15] L. Vos and M. Stegherr. Parameterizable vlsi architectures

for the full- search block- matching algorithm. IEEE Circ.

and Sys., 36(10):1309–1316, Oct 1989.

[16] C. Wang, S. Yang, C. Liu, and T. Chiang. A hierarchical nqueen decimation lattice and hardware architecture formotion estimation. IEEE Transactions on CSVT, 14(4):429–

440, April 2004.

[17] S. Wong, V. S., and S. Cotofona. A sum of absolute differences implementation in fpga hardware. In Proc. 28th

Euromicro Conf., pages 183–188, Sep 2002.

[18] K. Yang, M. Sun, and L. Wu. A family of vlsi designs for

the motion compensation block-matching algorithm. IEEE

Circ. and Sys., 36(10):1317–1325, Oct 1989.

[19] Y. Yeh and C. Lee. Cost-effective vlsi architectures and

buffer. size optimization for full-search block matching algorithms. IEEE Tran. VLSI Sys., 7(3):345–358, Sep. 1999.

[20] H. Yeo and Y. Hu. A novel modular systolic array architecture for full-search blockmatching motion estimation. In

Proc. Intl. Conf. on Acou., Speech, and Sig. Proc., volume 5,

pages 3303–3306, 1995.

6 Conclusions

This paper has presented a FPGA based design for Full

Search Block Matching Algorithm. The novelty of this

design lies in its modified SAD calculation and in splitpipelined design for parallel processing in the initial stages

of the hardware. The macroblock search scan has also been

suitably altered to facilitate the derivation of SAD sums

from previously computed results. Compared to existing

FPGA architectures, the proposed design exhibits superior

performance in terms of high throughput and low hardware

complexity. The high frame processing rate of 55.33 fps

makes this design particularly useful in both frame and field

processing of HDTV based applications. The paper finally

hints out the reconfigurable block matching hardware that

could be useful to general purpose real time video processing unit.

References

[1] V. Do and K. Yun. A low-power vlsi architecture for fullsearch block-matching. IEEE Tran. Circ. and Sys. Video

Tech., 8(4):393–398, Aug 1998.

[2] C. Hsieh and T. Lin. Vlsi architecture for block-matching

motion estimation algorithm. IEEE Tran. Circ. and Sys.

Video Tech., 2(2):169–175, June 1992.

[3] Y. Jehng, L. Chen, and T. Chiueh. Efficient and simple vlsi

tree architecture for motion estimation algorithms. IEEE

Tran. Sig. Pro., 41(2):889–899, Feb 1993.

[4] T. Komarek and P. Pirsch. Array archtectures for block

matching algorithms. IEEE Circ. and Sys., 36(10):1301–

1308, Oct 1989.

[5] Y. Lai and L. Chen. A data-interlacing architecture with

two-dimensional data-reuse for full-search block-matching

algorithm. IEEE Tran. Circ. and Sys. Video Tech., 8(2):124–

127, April 1998.

18