www.ijecs.in International Journal Of Engineering And Computer Science ISSN:2319-7242

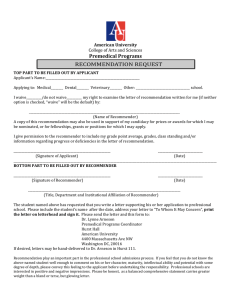

Volume 4 Issue 2 February 2015, Page No. 10351-10355

Survey on Evaluation of Recommender Systems

Shraddha Shinde1, Mrs. M. A. Potey2

1 Savitribai Phule Pune University, Department of Computer Engg. DYP Akurdi, India. shinde.shraddha97@gmail.com

2 Savitribai Phule Pune University, Department of Computer Engg. DYP Akurdi, India. mapotey@gmail.com

Abstract: Recommender Systems (RSs) are found in many modern applications that expose the user to huge collections of items. They help users decide on appropriate items and ease the task of finding preferred items in the collection. Recommender systems are gaining popularity in both the commercial and research communities, where many algorithms have been used for providing recommendations, and many evaluation metrics are used for comparing these recommendation algorithms. The literature on recommender system evaluation offers a large variety of evaluation metrics, from which the most appropriate one has to be chosen. Error-based metrics like MAE and RMSE and ranking-based metrics like Precision and Recall are discussed in this paper. These evaluation metrics are used to evaluate the performance of recommender systems.

Keywords: Recommender Systems, Evaluation, Metrics.

1. Introduction

Recommender Systems (RSs) can be found in many modern applications that expose the user to huge collections of items. They typically provide users with a list of recommended items they might prefer, or supply guesses of how much the user might prefer each item. Recommender systems help users decide on appropriate items and ease the task of finding preferred items in the collection. They are designed to suggest to users the items that best fit their needs and preferences. Recommender systems typically produce a list of recommendations in one of two ways: through collaborative filtering or through content-based filtering.
Among recommender system techniques, the two most popular categories are content-based filtering and collaborative filtering; their combination is called the hybrid approach. Knowledge-based recommendation is the third category of Recommender Systems. These recommender systems deal with two types of entities, users and items. Collaborative filtering approaches build a model from a user's past behavior as well as similar decisions made by other users, and then use that model to predict items (or ratings for items) that the user may have an interest in. Content-based filtering approaches utilize a series of discrete characteristics of an item in order to recommend additional items with similar properties. Hybrid RSs combine both content-based and collaborative approaches.

Gediminas Adomavicius et al. [1] have presented an overview of the field of recommender systems and described the current generation of recommendation methods, which are usually classified into three main categories: content-based, collaborative, and hybrid recommendation approaches. They have also described various limitations of current recommendation methods and discussed possible extensions that can improve recommendation capabilities and make recommender systems applicable to an even broader range of applications. These extensions include, among others, improved understanding of users and items, incorporation of contextual information into the recommendation process, support for multi-criteria ratings, and provision of more flexible and less intrusive types of recommendations.

Recommender systems are gaining popularity in both the commercial and research communities, where many algorithms have been used for providing recommendations. These algorithms typically perform differently in various domains and tasks. Therefore, it is important from the research perspective, as well as from a practical view, to be able to decide on an algorithm that matches the domain and the task of interest.
The standard way to make such decisions is by comparing a number of algorithms offline using some evaluation metric. Many evaluation metrics have been suggested for comparing recommendation algorithms, and most researchers who suggest new recommendation algorithms also compare the performance of the new algorithm to a set of existing approaches. Such evaluations are typically performed by applying some evaluation metric that provides a ranking of the candidate algorithms (usually using numeric scores). Many evaluation metrics have been used to rank recommendation algorithms, some measuring similar features, but some measuring drastically different quantities. For example, methods such as the Root Mean Squared Error (RMSE) measure the distance between predicted preferences and true preferences over items, while the Recall method computes the portion of favored items that were suggested.

Shraddha Shinde, IJECS Volume 4 Issue 2 February, 2015 Page No.10351-10355 Page 10351

1.1 Evaluation Metrics

Evaluation metrics for recommender systems can be divided into four major classes: 1) predictive accuracy metrics, 2) classification accuracy metrics, 3) rank accuracy metrics and 4) non-accuracy metrics.

Predictive accuracy or rating prediction metrics are very popular for the evaluation of non-binary ratings. They are most appropriate for usage scenarios in which an accurate prediction of the ratings for all items is of high importance. The most important representatives of this class are mean absolute error (MAE), mean squared error (MSE), root mean squared error (RMSE) and normalized mean absolute error (NMAE). MSE and RMSE use the squared deviations and thus emphasize larger errors in comparison to the MAE metric. MAE and RMSE describe the error in the same units as the computed values, while MSE yields squared units.
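
The error metrics named above can be illustrated with a minimal Python sketch. It assumes that predicted and true ratings are available as two equal-length lists; the function names, the sample ratings and the 1 to 5 rating scale are illustrative assumptions and are not taken from the surveyed papers. NMAE is computed here as MAE divided by the width of the rating scale.

```python
import math


def mae(predicted, actual):
    """Mean absolute error: average magnitude of the rating errors."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def mse(predicted, actual):
    """Mean squared error: squaring emphasizes large errors (squared units)."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def rmse(predicted, actual):
    """Root mean squared error: back in the units of the ratings."""
    return math.sqrt(mse(predicted, actual))


def nmae(predicted, actual, r_min, r_max):
    """MAE normalized by the width of the rating scale [r_min, r_max]."""
    return mae(predicted, actual) / (r_max - r_min)


# Hypothetical ratings on an assumed 1-5 scale.
predicted = [4.0, 3.0, 5.0, 2.0]
actual = [5.0, 3.0, 4.0, 4.0]

print(mae(predicted, actual))             # 1.0
print(mse(predicted, actual))             # 1.5
print(round(rmse(predicted, actual), 4))  # 1.2247
print(nmae(predicted, actual, 1.0, 5.0))  # 0.25
```

In this example the single error of size 2 contributes four of the six squared-error units, which is why RMSE (about 1.22) exceeds MAE (1.0).
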
NMAE normalizes the MAE metric to the range of the respective rating scale in order to make results comparable among recommenders with varying rating scales. These predictive accuracy error metrics are frequently used for the evaluation of recommender systems since they are easy to compute and understand. They are well studied and are also applied in many contexts other than recommender systems. However, they do not necessarily correspond directly to the most popular usage scenarios for recommender systems.

Classification accuracy metrics try to assess the successful decision making capacity (SDMC) of recommendation algorithms. They measure the number of correct and incorrect classifications of items as relevant or irrelevant that are made by the recommender system, and are therefore useful for user tasks such as finding good items. SDMC metrics ignore the exact rating or ranking of items, as only the correct or incorrect classification is measured. This type of metric is particularly suitable for applications in e-commerce that try to convince users to make certain decisions such as purchasing products or services. The common basic information retrieval (IR) metrics like recall and precision are calculated from the number of items that are either relevant or irrelevant and either contained in the recommendation set of a user or not.

A rank accuracy or ranking prediction metric measures the ability of a recommender to estimate the correct order of items with respect to the user's preference, which is called the measurement of rank correlation in statistics. Therefore this type of measure is most adequate if the user is presented with a long ordered list of recommended items.
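
As an illustration of rank correlation, the following Python sketch computes Kendall's tau, one widely used rank correlation coefficient, between a user's true preferences and a recommender's predicted scores. This is a simplified sketch of the tau-a variant that assumes there are no tied scores; the function name and the sample data are hypothetical.

```python
from itertools import combinations


def kendall_tau(true_scores, predicted_scores):
    """Kendall's tau-a over the items of true_scores (assumes no ties).

    Both arguments map item ids to scores. A pair of items is concordant
    when both score sets order it the same way, discordant otherwise.
    """
    items = list(true_scores)
    concordant = discordant = 0
    for a, b in combinations(items, 2):
        t = true_scores[a] - true_scores[b]
        p = predicted_scores[a] - predicted_scores[b]
        if t * p > 0:
            concordant += 1
        elif t * p < 0:
            discordant += 1
    n_pairs = len(items) * (len(items) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical user preferences and recommender predictions.
true_scores = {"a": 5, "b": 4, "c": 3, "d": 1}
predicted_scores = {"a": 4.5, "b": 4.8, "c": 2.0, "d": 2.5}

print(round(kendall_tau(true_scores, predicted_scores), 3))  # 0.333
```

Here four of the six item pairs are ordered consistently and two are swapped, giving (4 - 2) / 6. Rescaling the predicted scores (for example multiplying them all by 10) leaves the result unchanged, because only the relative order of the items enters the computation.
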
A rank prediction metric uses only the relative ordering of preference values, so it is independent of the exact values that are estimated by a recommender. Most rank accuracy metrics, such as Kendall's tau and Spearman's rho, compare two total orderings.

Evaluating recommender systems requires a definition of what constitutes a good recommender system, and how this should be measured. There is mostly consensus on what makes a good recommender system and on the methods to evaluate recommender systems. The more recommendation approaches are used, the more important their evaluation becomes to determine the best performing approaches and their individual strengths and weaknesses. To determine the "best" recommender approach, three main evaluation methods are used to measure recommender system quality: user studies, online evaluations, and offline evaluations.

In user studies, users explicitly rate recommendations generated by different algorithms, and the algorithm with the highest average rating is considered the best algorithm. User studies typically ask their participants to quantify their overall satisfaction with the recommendations. It is important to note that user studies measure user satisfaction at the time of recommendation. They do not measure the accuracy of a recommender system, because users do not know, at the time of the rating, whether a given recommendation really was the most relevant.

In online evaluations, recommendations are shown to real users of the system during their session. Users do not rate recommendations; instead, the recommender system observes how often a user accepts a recommendation. Acceptance is most commonly measured by click-through rate (CTR), i.e. the ratio of clicked recommendations to displayed recommendations.

Offline evaluations use pre-compiled offline datasets from which some information has been removed. Subsequently, the recommender algorithms are analyzed on their ability to recommend the missing information.
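
The offline procedure just described (hide part of the data, then test how well the hidden part is recovered) can be sketched in Python as follows. This is only an illustrative sketch under stated assumptions: ratings are (user, item, rating) tuples, the split is a simple random hold-out, and the "recommender" is a deliberately trivial item-mean baseline. None of the names or data come from the surveyed papers.

```python
import random
from collections import defaultdict


def holdout_split(ratings, test_fraction=0.25, seed=0):
    """Randomly hide a fraction of the ratings; return (train, test)."""
    rng = random.Random(seed)
    shuffled = list(ratings)
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_item_mean(train):
    """Trivial baseline: predict the item's mean training rating,
    falling back to the global mean for items unseen in training."""
    sums, counts = defaultdict(float), defaultdict(int)
    for _user, item, rating in train:
        sums[item] += rating
        counts[item] += 1
    global_mean = sum(r for _, _, r in train) / len(train)
    item_means = {item: sums[item] / counts[item] for item in sums}
    return lambda user, item: item_means.get(item, global_mean)


def mean_absolute_error(predict, test):
    """MAE of the predictor on the hidden (test) ratings."""
    return sum(abs(predict(u, i) - r) for u, i, r in test) / len(test)


# Hypothetical rating data: (user, item, rating).
ratings = [
    ("u1", "i1", 5.0), ("u1", "i2", 3.0), ("u2", "i1", 4.0), ("u2", "i3", 2.0),
    ("u3", "i2", 4.0), ("u3", "i3", 1.0), ("u4", "i1", 5.0), ("u4", "i2", 2.0),
]

train, test = holdout_split(ratings, test_fraction=0.25, seed=42)
predict = fit_item_mean(train)
print("hidden ratings:", len(test))
print("MAE on hidden ratings:", mean_absolute_error(predict, test))
```

The same skeleton applies when comparing several algorithms: each candidate is fitted on the identical training part and scored on the identical hidden part, so that differences in the metric reflect the algorithms rather than the split.
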
There are three types of offline datasets, which are defined as (1) true-offline-datasets, (2) user-offline-datasets, and (3) expert-offline-datasets.

2. Literature Review

The literature on recommender system evaluation offers a large variety of evaluation metrics, which are used to evaluate the performance of recommender systems. Error-based metrics like MAE and RMSE and ranking-based metrics like Precision and Recall are discussed in detail.

2.1 Accuracy Evaluation Metrics of Recommendation Tasks

Asela Gunawardana and Guy Shani [4] have discussed many evaluation metrics, such as MAE, RMSE, Precision and Recall, for comparing recommendation algorithms. The decision on the proper evaluation metric is often critical, as each metric may favor a different algorithm. They review the proper construction of offline experiments for deciding on the most appropriate algorithm. They have also discussed three important tasks of recommender systems, classified a set of appropriate well known evaluation metrics for each task, and demonstrated how using an improper evaluation metric can lead to the selection of an improper algorithm for the task of interest. Finally, they have discussed other important considerations when designing offline experiments.

2.2 Evaluating Recommendation Systems

Guy Shani and Asela Gunawardana [6] have discussed how to compare recommenders based on a set of properties that are relevant for the application. They focus on comparative studies, where a few algorithms are compared using some evaluation metric, rather than on absolute benchmarking of algorithms.
They have also described experimental settings appropriate for making choices between algorithms and reviewed three types of experiments: an offline setting, where recommendation approaches are compared without user interaction; user studies, where a small group of subjects experiment with the system and report on the experience; and large scale online experiments, where real user populations interact with the system.

2.3 Comparative Recommender System Evaluation

Alan Said and Alejandro Bellogín [2] have compared common recommendation algorithms as implemented in three popular recommendation frameworks: Apache Mahout (AM) [21], LensKit (LK) [22] and MyMediaLite (MML) [23]. To provide a fair comparison, they have complete control of the evaluation dimensions being benchmarked: dataset, data splitting, evaluation strategies, and metrics. They have also included results using the internal evaluation mechanisms of these frameworks. Their analysis points to large differences in recommendation accuracy across frameworks and strategies, i.e. the same baselines may perform orders of magnitude better or worse across frameworks. Their results show the necessity of clear guidelines when reporting evaluation of recommender systems to ensure reproducibility and comparison of results.

Once the training and test splits are available, they produced recommendations using three common state-of-the-art methods from the recommender systems literature: user-based collaborative filtering (CF), item-based CF and matrix factorization. The predictions of these algorithms are computed using the following formulas.

Item-based recommenders look at each item rated by the target user, and find items similar to that item. The rating prediction computed by item-based strategies is generally estimated as follows:

$$\tilde{r}(u, i) = C \sum_{j \in S_i} \mathrm{sim}(i, j)\, r(u, j)$$

where $C$ is a normalization factor and $S_i$ is the set of items similar to $i$. $S_i$ is generally replaced by $I_u$, the set of all items rated by user $u$, since unrated items are assumed to be rated with a zero.

The user-based CF algorithm can be found in the literature under two different formulations:

$$\tilde{r}(u, i) = \bar{r}(u) + C \sum_{v \in N_k(u)} \mathrm{sim}(u, v)\,\bigl(r(v, i) - \bar{r}(v)\bigr)$$

and

$$\tilde{r}(u, i) = C \sum_{v \in N_k(u)} \mathrm{sim}(u, v)\, r(v, i)$$

where $N_k(u)$ is a neighbourhood of the $k$ most similar users to $u$ and $\bar{r}(u)$ is the user's average rating. Note that both variations are referred to using the same name, making it difficult to know a priori which version is used.

The user similarity $\mathrm{sim}(u, v)$ is often computed using the Pearson correlation coefficient or the cosine similarity between the user preference vectors. They have included a shrunk version of these similarities:

$$\mathrm{sim}_s(a, b) = \frac{n_{a,b}}{n_{a,b} + \lambda_s}\, \mathrm{sim}(a, b)$$

where $n_{a,b}$ is the number of users who rated both items, and $\lambda_s$ is the shrinking parameter.

Matrix factorization recommenders use dimensionality reduction in order to uncover latent factors between users and items, e.g. by Singular Value Decomposition (SVD) and probabilistic Latent Semantic Analysis. Generally, these systems minimize the regularized squared error on the set of known ratings to learn the factor vectors $p_u$ and $q_i$:

$$\min_{q^*, p^*} \sum_{(u, i)} \bigl(r(u, i) - q_i^T p_u\bigr)^2 + \lambda \bigl(\lVert q_i \rVert^2 + \lVert p_u \rVert^2\bigr)$$

where the sum runs over the known ratings and $\lambda$ is the regularization parameter.

2.4 Precision-Oriented Evaluation of Recommender Systems

There is considerable methodological divergence in the way precision-oriented metrics are being applied in the Recommender Systems field, and as a consequence, the results reported in different studies are difficult to put in context and compare. Alejandro Bellogín et al. [3] aim to identify the methodological design alternatives involved, and their effect on the resulting measurements, with a view to assessing their suitability, advantages, and potential shortcomings. They have compared five experimental methodologies, broadly covering the variants reported in the literature. In experiments with three state-of-the-art recommenders, four of the evaluation methodologies are consistent with each other and differ from error metrics in terms of the comparative recommenders' performance measurements. The other methodology considerably overestimates performance, and suffers from a strong bias towards known relevant items.

Commonly, the accuracy of recommendations has been evaluated by measuring the error between predicted and known ratings, using metrics such as the Mean Absolute Error (MAE) and the Root Mean Squared Error (RMSE). Precision-oriented metrics, in contrast, measure the number of relevant and non-relevant retrieved items. Alejandro Bellogín et al. [3] have presented a general methodological framework for evaluating recommendation lists, covering different evaluation approaches. The considered approaches essentially differ from each other in the amount of unknown relevance that is added to the test set. They have used Precision, Recall, and Normalized Discounted Cumulative Gain (NDCG) as representative precision-oriented metrics.

2.5 Evaluating Performance of Recommender Systems

François Fouss and Marco Saerens [7] observe that evaluation has often focused specifically on the "accuracy" of recommendation algorithms. Good recommendation (in terms of accuracy) has, however, to be coupled with other considerations. They suggest measures aimed at evaluating aspects of recommendation algorithms other than accuracy.
Other considerations include (1) coverage, which measures the percentage of a data set that a recommender system is able to provide recommendations for, (2) confidence metrics, which can help users make more effective decisions, (3) computing time, which measures how quickly an algorithm can produce good recommendations, (4) novelty/serendipity, which measures whether a recommendation is original, and (5) robustness, which measures the ability of the algorithm to make good predictions in the presence of noisy or sparse data. Six collaborative recommendation methods are investigated.

2.6 Evaluating Recommender Systems

Recommender systems are considered an answer to the information overload in a web environment. Such systems recommend items (movies, music, books, news, web pages, etc.) that the user should be interested in. Collaborative filtering recommender systems have had huge success in commercial applications. The sales in these applications follow a power law distribution. However, with the increase in the number of recommendation techniques and algorithms in the literature, there is no indication that the datasets used for the evaluation follow a real world distribution. Zied Zaier et al. [5] have introduced the long tail theory and its impact on recommender systems. They also provide a comprehensive review of the different datasets used to evaluate collaborative filtering recommender system techniques and algorithms. Finally, they investigate which of these datasets present a distribution that follows this power law and which distribution would be the most relevant.

3. Comparative Study

Traditional metrics like precision and recall have some limitations, such as: (1) Search results don't have to be very good. (2) Recall? Not important (as long as you get at least some good hits). (3) Precision? Not important (as long as at least some of the hits on the first page you return are good).

A major problem with the frequently used metrics precision, recall and F1-measure is that they suffer from severe biases. The outcome of these measures depends not only on the accuracy of the recommender (or classifier) but also very much on the ratio of relevant items. To illustrate this, imagine an ideal recommender and its inverse, and apply them to generate top-k lists of four items for varying ratios of relevant items. To solve this problem, three new metrics have been introduced that avoid these biases by integrating the inverse precision and inverse recall respectively. These three new metrics are Informedness, Markedness and Matthews Correlation.

Markedness combines precision and inverse precision into a single measure and expresses how marked the classifications of a recommender are in comparison to chance:

$$\text{markedness} = \text{precision} + \text{inversePrecision} - 1$$

Informedness combines recall and inverse recall into a single measure and expresses how informed the classifications of a recommender are in comparison to chance:

$$\text{informedness} = \text{recall} + \text{inverseRecall} - 1$$

Both markedness and informedness return values in the range [-1, 1].

The Matthews Correlation combines the informedness and markedness measures into a single metric by calculating their geometric mean:

$$\text{correlation} = \pm\sqrt{\text{informedness} \cdot \text{markedness}}$$

Table 1: Comparison of Existing and Newly Proposed Metrics

| Category | Metrics | Feature | Author |
|---|---|---|---|
| Existing Evaluation Metrics | Precision, Recall, F1-measure | They suffer from severe biases. | Asela Gunawardana et al. [4] |
| Newly Proposed Evaluation Metrics | Informedness, Markedness, Matthews Correlation | Avoid these biases by integrating the inverse precision and inverse recall. | Gunnar Schröder et al. [8] |

4. Conclusion

This paper surveys the evaluation of Recommender Systems. It discusses in detail how recommendation algorithms can be evaluated in order to select the best algorithm for a specific task from a set of candidates. Many evaluation metrics have been used for comparing recommendation algorithms. Error-based metrics like MAE and RMSE and ranking-based metrics like Precision and Recall are also discussed in this paper. The performance of recommender systems is evaluated using these evaluation metrics.

Acknowledgement

We would like to thank the publishers, researchers and teachers for their guidance. We would also like to thank the college authority for providing the required infrastructure and support. Last but not least, we would like to extend heartfelt gratitude to friends and family members for their support.

References

[1] Adomavicius, Gediminas, and Alexander Tuzhilin. "Toward the next generation of recommender systems: A survey of the state-of-the-art and possible extensions." Knowledge and Data Engineering, IEEE Transactions on 17.6 (2005): 734-749.
[2] Said, Alan, and Alejandro Bellogín. "Comparative recommender system evaluation: benchmarking recommendation frameworks." Proceedings of the 8th ACM Conference on Recommender systems. ACM, 2014.
[3] Bellogin, Alejandro, Pablo Castells, and Ivan Cantador. "Precision-oriented evaluation of recommender systems: an algorithmic comparison." Proceedings of the fifth ACM conference on Recommender systems. ACM, 2011.
[4] Gunawardana, Asela, and Guy Shani. "A survey of accuracy evaluation metrics of recommendation tasks." The Journal of Machine Learning Research 10 (2009): 2935-2962.
[5] Zaier, Zied, Robert Godin, and Luc Faucher. "Evaluating recommender systems." Automated solutions for Cross Media Content and Multi-channel Distribution, 2008. AXMEDIS'08. International Conference on. IEEE, 2008.
[6] Shani, Guy, and Asela Gunawardana. "Evaluating recommendation systems." Recommender systems handbook. Springer US, 2011. 257-297.
[7] Fouss, François, and Marco Saerens. "Evaluating performance of recommender systems: An experimental comparison." Web Intelligence and Intelligent Agent Technology, 2008. WI-IAT'08. IEEE/WIC/ACM International Conference on. Vol. 1. IEEE, 2008.
[8] Schröder, Gunnar, Maik Thiele, and Wolfgang Lehner. "Setting Goals and Choosing Metrics for Recommender System Evaluations." UCERSTI2 Workshop at the 5th ACM Conference on Recommender Systems, Chicago, USA. Vol. 23. 2011.
[9] Cremonesi, Paolo, et al. "Comparative evaluation of recommender system quality." CHI'11 Extended Abstracts on Human Factors in Computing Systems. ACM, 2011.
[10] Ge, Mouzhi, Carla Delgado-Battenfeld, and Dietmar Jannach. "Beyond accuracy: evaluating recommender systems by coverage and serendipity." Proceedings of the fourth ACM conference on Recommender systems. ACM, 2010.
[11] del Olmo, Félix Hernández, and Elena Gaudioso. "Evaluation of recommender systems: A new approach." Expert Systems with Applications 35.3 (2008): 790-804.
[12] Kluver, Daniel, and Joseph A. Konstan. "Evaluating recommender behavior for new users." Proceedings of the 8th ACM Conference on Recommender systems. ACM, 2014.
[13] Shi, Lei. "Trading-off among accuracy, similarity, diversity, and long-tail: a graph-based recommendation approach." Proceedings of the 7th ACM conference on Recommender systems. ACM, 2013. 57-64.
[14] Zanker, Markus, Matthias Fuchs, Wolfram Hopken, Mario Tuta, and Nina Muller. "Evaluating recommender systems in tourism - a case study from Austria." Information and communication technologies in tourism 2008 (2008): 24-34.
[15] Beel, Joeran, et al. "A comparative analysis of offline and online evaluations and discussion of research paper recommender system evaluation." Proceedings of the International Workshop on Reproducibility and Replication in Recommender Systems Evaluation. ACM, 2013.
[16] Beel, Joeran, et al. "Research paper recommender system evaluation: A quantitative literature survey." Proceedings of the International Workshop on Reproducibility and Replication in Recommender Systems Evaluation. ACM, 2013.
[17] McDonald, David W. "Evaluating expertise recommendations." Proceedings of the 2001 International ACM SIGGROUP Conference on Supporting Group Work. ACM, 2001.
[18] Ruffo, Giancarlo, and Rossano Schifanella. "Evaluating peer-to-peer recommender systems that exploit spontaneous affinities." Proceedings of the 2007 ACM symposium on Applied computing. ACM, 2007.
[19] Doerfel, Stephan, and Robert Jaschke. "An analysis of tag-recommender evaluation procedures." Proceedings of the 7th ACM conference on Recommender systems. ACM, 2013. 343-346.
[20] Woerndl, Wolfgang, and Georg Groh. "Utilizing physical and social context to improve recommender systems." Proceedings of the 2007 IEEE/WIC/ACM International Conferences on Web Intelligence and Intelligent Agent Technology-Workshops. IEEE Computer Society, 2007. 123-128.
[21] https://mahout.apache.org/general/downloads.html
[22] http://lenskit.org/download/
[23] http://mymedialite.net/download/index.html