Document 14127000

advertisement

The Class Imbalane Problem: A Systemati

Study

Nathalie Japkowiz and Sha ju Stephen

Shool of Information Tehnology and Engineering

University of Ottawa

150 Louis Pasteur, P.O. Box 450 Stn. A

Ottawa, Ontario, Canada, B3H 1W5

In mahine learning problems, dierenes in prior lass probabilities|or

lass imbalanes|have been reported to hinder the performane of some standard

lassiers, suh as deision trees. This paper presents a systemati study aimed at

answering three dierent questions. First, we attempt to understand what the lass

imbalane problem is by establishing a relationship between onept omplexity, size

of the training set and lass imbalane level. Seond, we disuss several basi resampling or ost-modifying methods previously proposed to deal with lass imbalanes

and ompare their eetiveness. Finally, we investigate the assumption that the lass

imbalane problem does not only aet deision tree systems but also aets other

lassiation systems suh as Neural Networks and Support Vetor Mahines.

Abstrat

onept learning, lass imbalanes, re-sampling, mislassiation osts,

C5.0, Multi-Layer Pereptrons, Support Vetor Mahines

Keywords:

Introdution

As the eld of mahine learning makes a rapid transition from the status of \aademi

disipline" to that of \applied siene", a myriad of new issues, not previously onsidered

by the mahine learning ommunity, is now oming into light. One suh issue is the lass

imbalane problem. The lass imbalane problem orresponds to the problem enountered by

indutive learning systems on domains for whih one lass is represented by a large number

of examples while the other is represented by only a few.1

The lass imbalane problem is of ruial importane sine it is enountered by a large

number of domains of great environmental, vital or ommerial importane, and was shown,

in ertain ases, to ause a signiant bottlenek in the performane attainable by standard

learning methods whih assume a balaned lass distribution. For example, the problem

ours and hinders lassiation in appliations as diverse as the detetion of oil spills in

satellite radar images (Kubat et al., 98), the detetion of fraudulent telephone alls (Fawett

and Provost, 97), in-ight heliopter gearbox fault monitoring (Japkowiz et al., 95), information retrieval and ltering (Lewis and Catlett, 94) and diagnoses of rare medial onditions

suh as thyroid diseases (Murphy and Aha, 94).

To this point, there have been a number of attempts at dealing with the lass imbalane

problem (Pazzani et al., 94; Japkowiz et al., 95; Ling and Li, 98; Kubat and Matwin, 97;

Fawett and Provost, 97; Kubat et al., 98; Domingos, 99; Chawla et al., 01; Elkan, 01);

However, these attempts were mostly onduted in isolation. In partiular, there has not

been, to date, muh systemati strive to link spei types of imbalanes to the degree

of inadequay of standard lassiers nor are there been many omparisons of the various

methods proposed to remedy the problem. Furthermore, no omparison of the performane

of dierent types of lassiers on imbalaned data sets has yet been performed.2

1 In

this paper, we only onsider the ase of onept-learning. However, the disussion also applies to

multi-lass problems.

2 Two studies attempting to systematize researh on the lass imbalane problem are worth mentioning,

nonetheless: One, urrently in progress at AT&T Lab, links dierent degrees of imbalanes to the performane of C4.5, a deision Tree learning system on a large number of real-world data sets. However, it does

not study the eet of onept omplexity nor training set size in the ontext of their relationship with lass

imbalanes, nor does it look at ways to remedy the lass imbalane problem or the eet of lass imbalanes

on lassiers other than C4.5. The seond study is that by [Lawrene et al., 1998℄, whih does not study the

eet of lass imbalanes on lassiers' performane but whih ompares a number of spei approahes

proposed to deal with lass imbalanes in the ontext of Neural Networks and on a few real-world data sets.

In their study, no lassier other than Neural Networks were onsidered and no systemati study onduted.

2

The purpose of this paper is to address these three onerns in an attempt to unify the

researh onduted on this problem. In a rst part, the paper onentrates on explaining

what the lass imbalane problem is by establishing a relationship between onept omplexity, size of the training set and lass imbalane level. In doing so, we also identify the lass

imbalane situations that are most damaging for a standard lassier that expets balaned

lass distributions. The seond part of the paper turns to the question of how to deal with

the lass imbalane problem. In this part we look at ve dierent methods previously proposed to deal with this problem and, all assumed to be more or less equivalent to eah other.

We attempt to establish to what extent these methods are, indeed, equivalent and to what

extent they dier. The rst two parts of our study were onduted using the C5.0 deision

tree indution system. In the third part, we set out to nd out whether or not the problems

enountered by C5.0 when trained on imbalaned data sets are spei to C5.0. In partiular, we attempt to nd out whether or not the same pattern of hindrane is enountered by

Neural Networks and Support Vetor Mahines and whether similar remedies an apply.

The remainder of the paper is divided into six setions. Setion 2 is an overview of

the paper explaining why the questions we set out to answer are important and how they

will advane our understanding of the lass imbalane problem. Setion 3 desribes the

part of the study fousing on understanding the nature of the lass imbalane problem and

nding out what types of lass imbalane problems reate greater diÆulties for a standard

lassier. Setion 4 desribes the part of the study designed to ompare the ve main types

of approahes previously attempted to deal with the lass imbalane problem. Setion 5

addresses the question of what eet lass imbalanes have on lassiers other than C5.0.

Setions 6 and 7 onlude the paper.

3

Overview of the Paper

As mentioned in the previous setion, the study presented in this paper investigates the

following three series of questions:

Question 1:

What is the nature of the lass imbalane problem? i.e., in what domains do

lass imbalanes most hinder the auray performane of a standard lassier suh as

C5.0?

Question 2:

How do the dierent approahes proposed for dealing with the lass imbalane

problem ompare?

Question 3:

Does the lass imbalane problem hinder the auray performane of lassi-

ers other than C5.0?

These questions are important sine their answers may put to rest urrently assumed but

unproven fats, dispel other unproven beliefs as well as suggest fruitful diretions for future

researh. In partiular, they may help researhers fous their inquiry onto the partiular

type of solution found most promising, given the partiular harateristis identied in their

appliation domain.

Question 1 raises the issue of when lass imbalanes are damaging. While the studies

previously mentioned identied spei domains for whih an imbalane was shown to hurt

the performane of ertain standard lassiers, they did not disuss the questions of whether

imbalanes are always damaging and to what extent dierent types of imbalanes aet

lassiation performanes. This paper takes a global stane and answers these questions

in the ontext of the C5.0 tree indution system on a series of artiial domains spanning a

4

large ombination of harateristis.3

Question 2 onsiders ve related approahes previously proposed by independent researhers for takling the lass imbalane problem4:

1. Upsizing the small lass at random.

2. Upsizing the small lass at \foused" random.

3. Downsizing the large lass at random.

4. Downsizing the large lass at \foused" random.

5. Altering the relative osts of mislassifying the small and the large lasses.

In more detail, Methods 1 and 2 onsist of re-sampling patterns of the small lass (either

ompletely randomly or randomly but within parts of the input spae lose to the boundaries

with the other lass) until there are as many data from the small lass as from the large

one.5 Methods 3 and 4 onsists of eliminating data from the large lass (either ompletely

randomly or, randomly but within parts of the input spae far away from the boundaries

with the large lass) until there are as many data in both lasses. Finally, method 5 onsists

3 The

paper, however, onentrates on domains that present a \between-lass imbalane" in that the

imbalane aets eah subluster of the small lass to the same extent. Beause of lak of spae, the

interesting issue of \within-lass imbalanes"|whih are speial ases of the problem of small disjunts

(Holte, 89)|has been omitted here. This very important question is dealt with elsewhere (Japkowiz, 01).

4 In this study, we fous on disrimination-based approahes to the problem whih base their deisions on

both the positive and negative data. The study of reognition-based approahes whih base their deision

on one of the two lasses but not both has been attempted in (Japkowiz, 00) but did not seem to do as well

as disrimination-based methods (this might be linked, however, to the fat that the reognition threshold

was not hosen very arefully. Nonetheless, we leave it to future work to determine truly whether or not

that is the ase).

5 (Estabrooks, 00) and the AT&T study previously mentioned in Footnote 2 show that, in fat, the

optimal amount of re-sampling is not neessarily that whih yields the same number of data in eah lass.

The optimal amount seems to depend upon the input domain and does not seem easy to estimate a priori.

In order to simplify our study, here, we deided to re-sample until the two lasses are of the same size. This

deision will not alter our results, however, sine we are interested in the relative performane of the dierent

remedial approahes we onsider.

5

of reduing the relative mislassiation ost of the large lass (or, equivalently, inreasing

that of the small one) to make it orrespond to the size of the small lass.

These methods were previously proposed by (Ling and Li, 98; Kubat and Matwin, 97;

Domingos, 99; Chawla et al., 00; and Elkan, 01) but were not systematially ompared

before. Here, we ompare the ve methods, one again, to the data sets used in the previous

part of the paper. This was done to see whether or not the ve approahes for dealing with

lass imbalanes respond to dierent domain harateristis in the same way.

Question 3, nally, asks whether the observations made in answering the previous questions for C5.0 also hold for other lassiers. In partiular, we study the eet of lass

imbalanes on Multi-Layer Pereptrons (MLPs), whih ould be thought of being apable

of more exible learning than C5.0, and thus, be less sensitive to lass imbalanes. We then

repeat this study with Support Vetor Mahines (SVMs) whih ould be believed, not to

be aeted by this problem given that they base their lassiation on a small number of

support vetors and, thus, may not be sensitive to the number of data representing eah

lass. We look at the performane of MLPs and SVMs on a subset of the series of domains

used in the previous part of the paper so as to see whether the three approahes are aeted

by dierent domain harateristis in the same ways.

Question 1: What is the nature of the Class Imbalane

Problem?

In order to answer Question 1, a series of artiial onept-learning domains was generated

that varies along three dierent dimensions: the degree of onept omplexity, the size of

the training set, and the level of imbalane between the two lasses. The standard lassier

6

system tested on this domain in this setion was the C5.0 deision tree indution system

(Quinlan, 93). This lassier has previously been shown to suer from the lass imbalane

problem (e.g., (Kubat et al., 98)), but not in a ompletely systemati fashion. The study

in this setion aims at answering the question of what dierent faes a lass imbalane an

take and whih of these faes hinders C5.0 most.

This part of the paper rst disusses the domain generation proess followed by a report

of the results obtained by C5.0 on the various domains.

Domain Generation

For the experiments of this setion, 125 domains were reated with various ombinations

of onept omplexity, training set size, and degree of imbalane. The generation method

used was inspired by Shaer who designed a similar framework for testing the eet of

overtting avoidane in sparse data sets (Shaer, 93). From Shaer's study, it was lear

that the omplexity of the onept at hand was an important part of the data overtting

problem and, given the relationship between the problem of overtting the data and dealing

with lass imbalanes (see (Kubat et al., 98)), it seems reasonable to assume that, here

again, onept omplexity is an important piee of the puzzle. Similarly, the training set

size should also be a fator in a lassier's ability to deal with imbalaned domains given the

relationship between the data overtting problem and the size of the training set. Finally,

the degree of imbalane is the obvious other parameter expeted to inuene a lassier's

ability to lassify imbalaned domains.

The 125 generated domains of our study were generated in the following way: eah of

the domain is one-dimensional with inputs in the [0, 1℄ range assoiated with one of the two

7

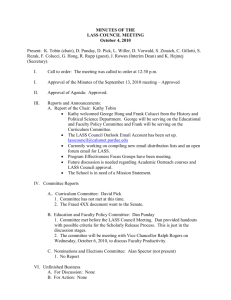

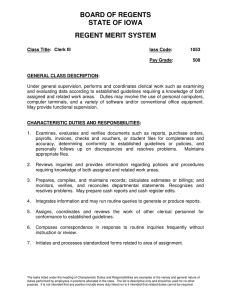

complexity (c) = 3, + = class 1, - = class 0

+

0

-

+

.125 .25 .375

-

+

.5

-

.625

+

.75

-

.875

1

Figure 1: A Bakbone Model of Complexity 3

lasses (1 or 0). The input range is divided into a number of regular intervals (i.e., intervals

of the same size), eah assoiated with a dierent lass value. Contiguous intervals have

opposite lass values and the degree of onept omplexity orresponds to the number of

alternating intervals present in the domain. Atual training sets are generated from these

bakbone models by sampling points at random (using a uniform distribution), from eah of

the intervals. The number of points sampled from eah interval depends on the size of the

domain as well as on its degree of imbalane. An example of a bakbone model is shown in

Figure 1.

Five dierent omplexity levels were onsidered ( = 1::5) where eah level, , orresponds

to a bakbone model omposed of 2 regular intervals. For example, the domains generated

at omplexity level = 1 are suh that every point whose input is in range [0, .5) is assoiated

with a lass value of 1, while every point whose input is in range (.5, 1℄ is assoiated with

a lass value of 0; At omplexity level = 2, points in intervals [0, .25) and (.5, .75) are

assoiated with lass value 1 while those in intervals (.25, .5) and (.75, 1℄ are assoiated with

lass value 0; et., regardless of the size of the training set and its degree of imbalane.6

6 In

this paper, omplexity is varied along a single very simple dimension. Other more sophistiated

models ould be used in order to obtain ner-grained results. In (Estabrooks, 00), for example, a k-DNF

model using several dimensions was used to generate a few artiial domains presenting lass imbalanes.

The study was less systemati than the one in this paper, but it yielded results orroborating those of this

paper.

8

Five training set sizes were onsidered (s = 1::5) where eah size, s, orresponds to a

training set of size round((5000=32) 2s). Sine this training set size inludes all the regular

intervals in the domain, eah regular interval is, in fat, represented by round(((5000=32) 2s )=2) training points (before the imbalane fator is onsidered). For example, at a size

level of s = 1 and at a omplexity level of = 1 and before any imbalane is taken into

onsideration, intervals [0, .5) and (.5, 1℄ are eah represented by 157 examples; If the size

is the same, but the omplexity level is = 2, then eah of intervals [0, .25), (.25, .5), (.5,

.75) and (.75, 1℄ ontains 78 training examples; et.

Finally, ve levels of lass imbalane were also onsidered (i = 1::5) where eah level,

i, orresponds to the situation where eah sub-interval of lass 1 is represented by all the

data it is normally entitled to (given and s), but eah sub-interval of lass 0 ontains only

1=(32=2i)th (rounded) of all its normally entitled data. This means that eah of the subintervals of lass 0 are represented by round((((5000=32) 2s)=2)=(32=2i)) training examples.

For example, for = 1, s = 1, and i = 2, interval [0, .5) is represented by 157 examples and

(.5, 1℄ is represented by 79; If = 2, s = 1 and i = 3, then [0, .25) and (.5, .75) are eah

represented by 78 examples while (.25, .5) and (.75, 1℄ are eah represented by 20; et.

The number of testing points representing eah sub-interval was kept xed (at 50). This

means that all domains of omplexity level = 1 are tested on 50 positive and 50 negative

examples; all domains of omplexity level = 2 are tested on 100 positive and 100 negative

examples; et.

9

Results for Question 1

The results for C5.0 are displayed in Figures 2, 3, 4 and 5 whih plots the error C5.0 obtained

for eah ombination of onept omplexity, training set size, and imbalane level, on the

entire testing set. For eah experiment, we reported four types of results: 1) the orreted

results in whih no matter what degree of lass imbalane is present in the training set, the

ontribution of the false positive error rate is the same as that of the false negative one in the

overall report.7 2) the unorreted results in whih the reported error rate reets the same

imbalane as the one present in the training set.8 3) the false positive error rate; and 4) the

false negative error rate. The orreted and unorreted results are provided so as to take

into onsideration two out of any possible number of situations: one in whih, despite the

presene of an imbalane, the ost of mislassifying the data of one lass is the same as that

of lassifying those of the other lass (the orreted version); the other situation is the one

where the relative ost of mislassifying the two lasses orrespond to the lass imbalane.9

Eah plot in eah of these gures represents the plot obtained at a dierent training set

size. The leftmost plot orresponds to the smallest size (s = 1) and progresses until the

rightmost plot whih orresponds to the largest (s = 5). Within eah of these plots, eah

luster of ve bars represent the onept omplexity level. The leftmost luster orresponds

7 For

this set of results, we simply report the error rate obtained on the testing set orresponding to the

experiment at hand.

8 For this set of results, we modify the ratio of false positive to false negative error obtained on the original

testing set to make it orrespond to the ratio of positive to negative examples in the training set.

9 A more omplete set of results ould have involved omparisons at other relative osts as well. However,

given our large number of experiments, this would have been unmanageable. We thus deided to fous

on two meaningful and important ases only. Similarly, and for the same reasons, we deided not to vary

C5.0's deision threshold aross the ROC spae (Swets et al., 2000). Sine we are seeking to establish the

relative performane of several lassiation approahes we believe that all the results obtained using the

same deision threshold are representative of what would have happened along the ROC urves. We leave

it to future work, however, to verify this assumption.

10

25

25

25

25

25

20

20

20

20

20

15

15

15

15

15

10

10

10

10

10

5

5

5

5

5

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

1

(b) Size=2

Figure 2:

2

3

4

5

0

1

() Size=3

2

3

4

5

0

(d) Size=4

18

18

18

18

16

16

16

16

14

14

14

14

14

12

12

12

12

12

10

10

10

10

10

8

8

8

8

8

6

6

6

6

6

4

4

4

4

4

2

2

2

2

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

1

(b) Size=2

Figure 3:

2

3

4

5

1

() Size=3

2

3

4

5

0

25

20

20

20

20

20

15

15

15

15

15

10

10

10

10

10

5

5

5

5

5

4

5

0

(a) Size=1

Figure 4:

2

3

4

5

C5.0 and the Class Imbalane Problem|UnCorreted

25

3

5

(e) Size=5

25

2

1

(d) Size=4

25

1

4

2

0

25

0

3

C5.0 and the Class Imbalane Problem|Correted

16

1

2

(e) Size=5

18

0

1

1

2

3

4

5

0

1

(b) Size=2

2

3

4

5

0

1

() Size=3

2

3

4

5

0

1

(d) Size=4

2

3

4

5

(e) Size=5

C5.0 and the Class Imbalane Problem| False Positive Error Rate

1.4

1.2

0.8

0.45

0.4

0.7

0.4

0.35

0.35

0.3

0.35

0.6

0.3

1

0.25

0.3

0.5

0.25

0.8

0.2

0.25

0.4

0.2

0.2

0.6

0.15

0.3

0.15

0.15

0.4

0.1

0.2

0.1

0.1

0.2

0

0.1

1

2

3

4

(a) Size=1

5

0

1

2

3

4

(b) Size=2

5

0

0.05

0.05

0.05

1

2

3

4

() Size=3

5

0

1

2

3

4

(d) Size=4

5

0

1

2

3

4

5

(e) Size=5

Figure 5: C5.0 and the Class Imbalane Problem| False Negative Error Rate:

Very Close to 0

11

to the simplest onept ( = 1) and progresses until the rightmost one whih orresponds to

the most omplex ( = 5). Within eah luster, nally, eah bar orresponds to a partiular

imbalane level. The leftmost bar orresponds to the most imbalaned level (i = 1) and

progresses until the rightmost bar whih orresponds to the most balaned level (i = 5, or

no imbalane). The height of eah bar represents the average perent error rate obtained by

C5.0 (over ve runs on dierent domains generated from the same bakbone model) on the

omplexity, lass size and imbalane level this bar represents. To make the omparisons easy,

horizontal bars were drawn at every 5% marks. If a graph does not display any horizontal

bars, it is beause all the bars represent an average perent error below 5%, and we onsider

the error negligeable in suh ases.

Our results reveal several points of interest: rst, no matter what the size of the training

set is, linearly separable domains (domains of omplexity level = 1) do not appear sensitive

to any amount of imbalane. As a matter of fat, as the degree of onept omplexity

inreases, so does the system's sensitivity to imbalanes. Indeed, we an learly see both in

Figure 2 (the orreted results) and Figure 3 (the unorreted results) that as the degree of

omplexity inreases, high error rates are aused by lower and lower degrees of imbalanes.

Although the error rates reported in the orreted ases are higher than those reported in

the unorreted ases, the eet of onept omplexity on lass imbalanes is learly visible

in both situations.

A look at Figures 4 and 5 explains the dierene between Figures 2 and 3 sine it reveals

that most of the error represented in these graphs atually ours on the negative testing

set (i.e., most of the errors are false positive errors). Indeed, none of the average perents

of false negative errors over all degrees of onept omplexity and levels of imbalane ever

12

exeed 5%. This is not surprising sine we had expeted the lassier to overt the majority

lass, but the extent to whih it does so might be a bit surprising.

As ould be expeted, imbalane rates are also a fator in the performane of C5.0 and,

perhaps more surprisingly, so is the training set size. Indeed, as the size of the training set

inreases, the degree of imbalane yielding a large error rate dereases. This suggests that in

very large domains, the lass imbalane problem may not be a hindrane to a lassiation

system. Speially, the issue of relative ardinality of the two lasses|whih is often

assumed to be the problem underlying domains with lass imbalaned|may in fat be easily

overridden by the use of a large enough data set (if, of ourse, suh a data set is available

and its size does not prevent the lassier from learning the domain in an aeptable time

frame).

All in all, our study suggests that the imbalane problem is a relative problem depending

on both the omplexity of the onept represented by the data in whih the imbalane ours

and the overall size of the training set, in addition to the degree of lass imbalane present

in the data. In other words, a huge lass imbalane will not hinder lassiation of a domain

whose onept is very easy to learn nor will we see a problem if the training set is very

large. Conversely, a small lass imbalane an greatly harm a very small data set or one

representing a very omplex onept.

Question 2: A Comparison of Various Strategies

Having identied the domains for whih a lass imbalane does impair the auray of a

regular lassier suh as C5.0, this setion now proposes to ompare the main methodologies

that have been proposed to deal with this problem. First, the various shemes used for this

13

omparison are desribed, followed by a omparative report on their performane. In all the

experiments of this setion, one again, C5.0 is used as our standard lassier.

Shemes for Dealing with Class Imbalanes

Over-Sampling

Two oversampling methods were onsidered in this ategory. The rst

one, random oversampling, onsists of oversampling the small lass at random until it ontains as many examples as the other lass. The seond method, foused oversampling, onsists

of oversampling the small lass only with data ourring lose to the boundaries between

the onept and its negation. A fator of = :25 was hosen to represent loseness to the

boundaries.10

Under-Sampling

Two under-sampling methods, losely related to the over-sampling meth-

ods were onsidered in this ategory. The rst one, random undersampling, onsists of eliminating, at random, elements of the over-sized lass until it mathes the size of the other

lass. The seond one, foused undersampling, onsists of eliminating only elements further

away (where, again, = :25 represents loseness to the boundaries)

Cost-Modifying

The ost-modifying method used in this study onsists of modifying

the relative ost assoiated to mislassifying the positive and the negative lass so that it

ompensates for the imbalane ratio of the two lasses. For example, if the data presents

a 1:10 lass imbalane in favour of the negative lass, the ost of mislassifying a positive

example will be set to 9 times that of mislassifying a negative one.

10 This

fator means that for interval [a, b℄, data onsidered lose to the boundary are those in [a, a+

.25 (b-a)℄ and [a+.75 (b-a), b℄. If no data were found in these intervals (after 500 random trials

were attempted), then the data were sampled from the full interval [a, b℄ as in the random oversampling

methodology.

14

25

25

6

5

3

4.5

5

20

2.5

20

4

3.5

4

15

2

15

3

3

10

10

5

5

2.5

1.5

2

2

1

1.5

1

1

0.5

0.5

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

1

(b) Size=2

Figure 6:

18

4

16

3.5

14

12

4

5

0

1

2

3

4

5

0

(d) Size=4

1

2

3

4

5

(e) Size=5

Oversampling: Error Rate, Correted

16

12

3

() Size=3

18

14

2

3.5

2

1.8

3

1.6

3

2.5

1.4

2.5

10

10

8

8

6

6

4

4

1.2

2

2

1

1.5

0.8

1.5

0.6

1

1

2

0

0.4

2

1

2

3

4

5

0

(a) Size=1

0.5

0.5

1

2

3

4

(b) Size=2

Figure 7:

0.2

0

5

1

2

3

4

5

0

() Size=3

1

2

3

4

5

0

(d) Size=4

1

2

3

4

5

(e) Size=5

Oversampling: Error Rate, Unorreted

Results for Question 2

Like in the previous setion, four series of results are reported in the ontext of eah sheme:

the orreted error, the unorreted error, the false positive error and the false negative error.

The format of the results is the same as that used in the last setion. The results for random

oversampling are displayed in Figures 6 to 9; those for foused oversampling, in Figures 1013; those for random undersampling in Figures 14-17; those for foused undersampling in

Figures 18-21; and those for ost-modifying, in Figures 22-25.

25

25

20

20

15

15

6

4.5

3

4

5

2.5

3.5

4

3

2

2.5

3

1.5

2

10

10

2

5

1.5

1

1

5

1

0.5

0.5

0

1

2

3

4

(a) Size=1

5

0

1

2

3

4

(b) Size=2

Figure 8:

5

0

1

2

3

4

() Size=3

5

0

1

2

3

4

5

(d) Size=4

Oversampling: False Positive Error Rate

15

0

1

2

3

4

(e) Size=5

5

2

1

1.8

0.9

0.7

0.4

1.6

0.8

1.4

0.7

0.5

0.45

0.35

0.6

0.4

0.3

1.2

0.6

1

0.5

0.5

0.35

0.25

0.3

0.4

0.2

0.25

0.3

0.8

0.4

0.6

0.3

0.4

0.2

0.2

0.1

0.2

0.15

0.15

0.2

0.1

0.1

0.1

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

0

5

(b) Size=2

Figure 9:

18

0.05

1

2

3

4

0

5

() Size=3

0.05

1

2

3

4

0

5

(d) Size=4

1

2

3

4

5

(e) Size=5

Oversampling: False Negative Error Rate

25

6

3

5

2.5

4

2

3

1.5

2

1

1

0.5

1.6

16

1.4

20

14

1.2

12

1

15

10

0.8

8

10

0.6

6

0.4

4

5

0.2

2

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

(b) Size=2

Figure 10:

1

2

3

4

5

0

() Size=3

1

2

3

4

5

0

(d) Size=4

1

2

3

4

5

(e) Size=5

Foused Oversampling: Error Rates, Correted

The results indiate a number of interesting points. First, all the methods proposed to

deal with the lass imbalane problem present an improvement over C5.0 used without any

type of re-sampling nor ost-modifying tehnique both in the orreted and the unorreted

versions of the results. Nonetheless, not all methods help to the same extent. In partiular, of

all the methods suggested, undersampling is by far the least eetive. This result is atually

at odds with previously reported results (e.g., (Domingos, 99)), but we explain this disparity

by the fat that in the appliations onsidered by (Domingos, 99), the minority lass is the

lass of interest while the majority lass represents everything other than these examples

18

25

6

3

5

2.5

4

2

3

1.5

2

1

1

0.5

1.6

16

1.4

20

14

1.2

12

1

15

10

0.8

8

10

0.6

6

0.4

4

5

0.2

2

0

1

2

3

4

5

0

(a) Size=1

Figure 11:

1

2

3

4

(b) Size=2

5

0

1

2

3

4

() Size=3

5

0

1

2

3

4

(d) Size=4

5

0

1

2

4

(e) Size=5

Foused Oversampling: Error Rates, UnCorreted

16

3

5

25

25

20

8

4.5

7

4

20

2.5

2

3.5

6

3

15

5

15

1.5

2.5

4

2

10

10

5

5

1

3

1.5

2

1

1

0

1

2

3

4

0

5

(a) Size=1

1

2

3

4

5

0.5

0

1

(b) Size=2

Figure 12:

2.5

0.5

2

3

4

5

0

1

() Size=3

2

3

4

5

0

(d) Size=4

1

2

3

4

5

(e) Size=5

Foused Oversampling: Error Rates, False Positives

12

0.8

0.5

0.7

0.45

0.7

0.6

10

2

0.4

0.6

0.5

0.35

8

0.5

1.5

0.3

0.4

6

0.4

0.25

0.3

1

0.2

0.3

4

0.15

0.2

0.2

0.5

0.1

2

0.1

0.1

0

1

2

3

4

0

5

(a) Size=1

1

2

3

4

5

0

(b) Size=2

Figure 13:

0.05

1

2

3

4

0

5

() Size=3

1

2

3

4

5

0

(d) Size=4

25

25

25

20

20

20

20

20

15

15

15

15

15

10

10

10

10

10

5

5

5

5

5

0

0

0

0

3

4

5

(a) Size=1

1

2

3

4

5

(b) Size=2

Figure 14:

1

2

3

4

5

() Size=3

1

2

3

4

5

0

(d) Size=4

18

18

18

18

16

16

16

16

14

14

14

14

14

12

12

12

12

12

10

10

10

10

10

8

8

8

8

8

6

6

6

6

6

4

4

4

4

4

2

2

2

2

2

3

4

(a) Size=1

5

5

0

1

2

3

4

(b) Size=2

Figure 15:

1

2

3

4

5

Undersampling: Correted Error Rate

16

1

4

(e) Size=5

18

0

3

Foused Oversampling: Error Rates, False Negatives

25

2

2

(e) Size=5

25

1

1

5

0

1

2

3

4

() Size=3

5

0

2

1

2

3

4

5

(d) Size=4

Undersampling: Unorreted Error Rate

17

0

1

2

3

4

(e) Size=5

5

25

25

25

25

25

20

20

20

20

20

15

15

15

15

15

10

10

10

10

10

5

5

5

5

5

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

(b) Size=2

Figure 16:

14

1

2

3

4

5

0

() Size=3

1

2

3

4

5

0

(d) Size=4

1

2

3

4

5

(e) Size=5

Undersampling: False Positive Error Rate

6

2

3

0.7

1.8

12

5

0.6

2.5

1.6

10

0.5

1.4

4

2

1.2

8

0.4

3

1

1.5

6

0.3

0.8

2

1

0.6

4

0.2

0.4

1

2

0.5

0.1

0.2

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

(b) Size=2

Figure 17:

1

2

3

4

5

0

() Size=3

1

2

3

4

5

0

(d) Size=4

25

25

25

20

20

20

20

20

15

15

15

15

15

10

10

10

10

10

5

5

5

5

5

0

0

0

0

3

4

5

(a) Size=1

1

2

3

4

5

(b) Size=2

Figure 18:

1

2

3

4

5

() Size=3

1

2

3

4

5

0

(d) Size=4

18

18

18

18

16

16

16

16

14

14

14

14

14

12

12

12

12

12

10

10

10

10

10

8

8

8

8

8

6

6

6

6

6

4

4

4

4

4

2

2

2

2

2

3

4

5

0

(a) Size=1

Figure 19:

1

5

1

2

3

4

5

Foused Undersampling: Error Rate, Correted

16

1

4

(e) Size=5

18

0

3

Undersampling: False Negative Error Rate

25

2

2

(e) Size=5

25

1

1

2

3

4

(b) Size=2

5

0

1

2

3

4

() Size=3

5

0

2

1

2

3

4

(d) Size=4

5

0

1

2

4

(e) Size=5

Foused Undersampling: Error Rate, Unorreted

18

3

5

25

25

25

25

25

20

20

20

20

20

15

15

15

15

15

10

10

10

10

10

5

5

5

5

5

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

(b) Size=2

Figure 20:

14

1

2

3

4

5

0

() Size=3

1

2

3

4

5

0

1

(d) Size=4

2

3

4

5

(e) Size=5

Foused Undersampling: False Positive Error Rate

1.8

1.8

2

0.5

1.6

1.6

1.8

0.45

1.4

1.4

1.2

1.2

12

10

8

6

1.6

0.4

1.4

0.35

1.2

0.3

1

0.25

1

1

0.8

0.8

0.6

0.6

0.4

0.4

0.4

0.1

0.2

0.2

0.2

0.05

0

0

0

4

0.8

0.2

0.6

0.15

2

0

1

2

3

4

5

(a) Size=1

1

2

3

4

5

(b) Size=2

Figure 21:

25

1

2

3

4

5

() Size=3

1

2

3

4

0

5

(d) Size=4

1

2

3

4

5

(e) Size=5

Foused Undersampling: False Negative Error Rate

30

25

4.5

4.5

4

4

3.5

3.5

25

20

20

20

15

3

3

2.5

2.5

15

15

10

2

2

1.5

1.5

10

10

5

5

1

1

0.5

0.5

5

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

(b) Size=2

Figure 22:

40

40

35

35

1

2

3

4

5

0

() Size=3

1

2

3

4

0

5

(d) Size=4

1

2

3

4

5

(e) Size=5

Cost Modifying: Correted Error Rate

35

3

9

8

30

2.5

7

30

30

25

2

25

25

20

20

15

15

6

20

5

1.5

4

15

1

3

10

10

10

2

5

0

5

5

1

2

3

4

(a) Size=1

5

0

0.5

1

1

2

3

4

(b) Size=2

Figure 23:

5

0

1

2

3

4

() Size=3

5

0

1

2

3

4

5

(d) Size=4

Cost Modifying: Unorreted Error Rate

19

0

1

2

3

4

(e) Size=5

5

25

25

25

4.5

2.5

4

20

20

20

2

3.5

3

15

15

15

1.5

2.5

2

10

10

10

5

5

5

1

1.5

1

0.5

0.5

0

1

2

3

4

5

0

(a) Size=1

1

2

3

4

5

0

(b) Size=2

Figure 24:

25

1

2

3

4

5

0

1

() Size=3

2

3

4

5

0

(d) Size=4

1

2

3

4

5

(e) Size=5

Cost Modifying: False Positive Error Rate

30

25

0.35

4.5

4

0.3

25

20

20

3.5

0.25

20

3

15

15

0.2

2.5

15

2

0.15

10

10

10

1.5

0.1

5

1

5

5

0.05

0.5

0

1

2

3

4

5

(a) Size=1

0

1

2

3

4

(b) Size=2

Figure 25:

5

0

1

2

3

4

() Size=3

5

0

1

2

3

4

5

0

(d) Size=4

1

2

3

4

5

(e) Size=5

Cost Modifying: False Negative Error Rate

of interest. It follows that in domains suh as (Domingos, 99)'s the majority lass inludes

a lot of data irrelevant to the lassiation task at hand that are worth eliminating by

undersampling tehniques. In our data sets, on the other hand, the roles of the positive and

the negative lass are perfetly symmetrial and no examples are irrelevant. Undersampling

is, thus, not a very useful sheme in these domains. Foused undersampling does not present

any advantages over random undersampling on our data sets either and neither methods are

reommended in those ases where the two lasses play symmetrial roles and do not ontain

irrelevant data.

The situation, in the ase of oversampling, is quite dierent. Indeed, oversampling is

shown to help quite dramatially at all omplexity and training set size level. Just to

illustrate this fat, onsider, for example, the situation at size 2 and degree of omplexity 4:

while in this ase, any degree of imbalane (other than the ase where no imbalane is present)

auses C5.0 diÆulties (see gures 2(b) and 3(b)), none but the highest degree of imbalane

20

do so when the data is oversampled at random (see gures 6(b) and 7(b)). Contrarily to

the ase of undersampling, the foused approah does make a dierene|albeit, small|in

the ase of oversampling. Indeed, at sizes 1 and 2, foused oversampling deals with the

highest level of omplexity better than random oversampling (ompare the results at degree

of diÆulty 5 in gures 6(a, b) and 7(a, b) on the one hand and gures 10(a, b) and 11(a,

b), on the other hand). Interestingly, the improvement in overall error does not seem to

aet the distribution of the error. Indeed, as Figures 8, 9, 12 and 13 will attest, while

the false positive rate has dereased, the false negative one has not signiantly inreased

despite the fat that the size of the positive training set has inreased dramatially. This is

quite an important result sine it ontradits the expetation that oversampling would have

shifted the error distribution and, thus, not muh helped in the ase where it is essential

to preserve a low false negative error rate while learning the false positive error rate. In

summary, oversampling and foused oversampling seem quite eetive ways of dealing with

the problem, at least in situations suh as those represented in our training set.

The last method, ost-modifying, is more eetive than both random oversampling and

foused oversampling in all but a single observed ase, that of onept omplexity 5 and Size 3

(ompare the results for onept omplexity 5 in gures 6(), 7(), 10() and 11() on the one

hand to those of gures 22() and 23() on the other). In this ase both random and foused

oversampling are more aurate than ost-modifying. The generally better results obtained

with the ost-modifying method over those obtained by oversampling are in agreement with

(Lawrene et al., 98) who suggest that modifying the relative ost of mislassifying eah lass

allows to ahieve the same goals as oversampling without inreasing the training set size, a

step that an harm the performane of a lassier. Nonetheless, although we did not show

21

it here, we assume that in those ases where the majority lass ontains irrelevant examples,

undersampling methods may be more eetive than ost modifying ones.

Question 3: Are other lassiers also sensitive to Class

Imbalanes in the Data?

Setions 1 and 2 studied the question of how lass imbalanes aet lassiation and how

they an be ountered all in the ontext of C5.0, a deision tree indution system. In

this setion, we are onerned about whether lassiation systems using other learning

paradigms are also aeted by the lass imbalane problem and to what extent. In partiular,

we onsider two other paradigms whih, a priori, may seem less prone to hindranes in the

fae of lass imbalanes than deision trees: Multi-Layer Pereptrons (MLPs) and Support

Vetor Mahines (SVMs).

MLPs an be believed to be less prone to the lass imbalane problem beause of their

exibility. Indeed, they may be thought to be able to ompute a less global partition of

the spae than deision tree learning systems sine they get modied by eah data point

sequentially and repeatedly and thus follow a top-down as well as a bottom-up searh of the

hypothesis spae simultaneously. Even more onviningly than MLPs, SVMs an be believed

to be less prone to the lass imbalane problem than C5.0 beause boundaries between lasses

are alulated with respet to only a few support vetors, the data points loated lose to

the other lass. The size of the data set representing eah lass may, thus, be believed not

to matter given suh an approah to lassiation.

The point of this setion is to assess whether indeed MLPs and SVMs are less prone to the

lass imbalane problem and if so, to what extent. Again, we used domains belonging to the

22

same family as the ones used in the previous setion to make this assessment. Nonetheless,

beause MLP and SVM training is muh less time-eÆient than C5.0 training and beause

SVM training was not even possible for large domains on our mahine (beause of a lak of

memory), we did not ondut as extensive a set of experiments as we did in the previous

setions. In partiular, beause of memory restritions, we restrited our study of the eets

of lass imbalanes to domains of size 1 for SVMs (for MLPs, we atually onduted our

study on all sizes for the imbalane study sine we did not have memory problems) and,

beause of low training eÆieny, we only looked at the eet of random oversampling and

undersampling for size 1 on both lassiers.11

MLPs and the Class Imbalane Problem

Beause of the nature of MLPs, more experiments needed to be ran than in the ase of

C5.0. Indeed, beause the performane of MLPs depends upon the number of hidden units

it uses, we experimented with 2, 4, 8 and 16 hidden units and reported only the results

obtained with the optimal network apaity. Other default values were kept xed (i.e., all

the networks were trained by the Levenberg-Marquardt optimization method, the learning

rate was set at 0.01; the networks were all trained for a maximum of 300 epohs or until the

performane gradient desended below 10

10

; and the threshold for disrimination between

the two lasses was set at 0.5). This means that the results are reported a-posteriori (after

heking all the possible network apaities, the best results are reported).

The results are presented in Figures 26, 27, 28 and 29 for onept omplexities =1..5,

11 Unlike

in Question 2 for C5.0, our intent here is not to ompare all possible tehniques for dealing with

the lass imbalane problem with MLPs and SVMs. Instead, we are only hoping to shed some light on

whether these two systems do suer from lass imbalanes and get an idea of whether some simple remedial

methods an be onsidered for dealing with the problem.

23

40

40

40

45

40

35

35

35

40

35

30

30

30

25

25

25

20

20

20

15

15

15

35

30

30

25

25

20

20

15

15

10

10

10

10

10

5

0

5

1

2

3

4

0

5

(a) Size=1

1

2

3

4

0

5

(b) Size=2

Figure 26:

12

10

10

8

5

5

1

2

3

4

5

0

() Size=3

1

2

3

4

0

5

(d) Size=4

1

2

3

4

5

(e) Size=5

MLPs and the Class Imbalane Problem|Correted

12

14

14

14

12

12

12

10

10

10

8

8

8

6

6

6

4

4

4

2

2

8

6

6

4

4

2

0

5

2

1

2

3

4

0

5

(a) Size=1

1

2

3

4

0

5

(b) Size=2

Figure 27:

1

2

3

4

5

0

() Size=3

2

1

2

3

4

0

5

(d) Size=4

1

2

3

4

5

(e) Size=5

MLPs and the Class Imbalane Problem|Unorreted

training set sizes s=1..5, and imbalane levels i=1..5. The format used to report these results

is the same as the one used in the previous two setions.

There are several important dierenes between the results obtained with C5.0 and those

obtained with MLPs. In partiular, in all the MLP graphs a large amount of variane an

be notied in the results despite the fat that all results were averaged over ve dierent

trials. The onlusions derived from these graphs thus should be thought of reeting general

trends rather than spei results. Furthermore, a areful analysis of the graphs reveals that

MLPs do not seem to suer from the lass imbalane problem in the same way as C5.0.

40

40

45

35

35

40

30

30

25

25

20

20

15

15

10

10

5

5

35

30

25

20

15

10

0

1

2

3

4

5

(a) Imbalaned

Figure 28:

0

5

1

2

3

4

5

(b) Oversampling

0

1

2

3

4

5

() Undersampling

Lessening the Class Imbalane Problem in MLP Networks|Correted

24

25

35

45

40

30

20

35

25

30

15

20

25

20

15

10

15

10

10

5

5

5

0

1

2

3

4

5

(a) Imbalaned

0

1

2

3

4

5

(b) Oversampling

0

1

2

3

4

5

() Undersampling

Figure 29:

Lessening the Class Imbalane Problem in MLP Networks|

UnCorreted

Looking, for example, at the graphs for size 1 for C5.0 and MLP (see gures 2(a) and 3(a)

on the one hand and gures 26(a) and 27(a) on the other hand), we see that C5.0 displays

extreme behaviors: it either does a perfet (or lose to perfet) job or it mislassies 25% of

the testing set wrongly (see gure 2(a)). For MLP, this is not the ase and mislassiation

rates span an entire range. As a result, MLP seems less aeted by the lass imbalane

problem than C5.0. For example, for size=1, and onept omplexity 4, C5.0 ran with

imbalane levels 4, 3, 2, and 1 (see gure 2(a)) mislassify 25% of the testing set whereas

MLP (see gure 26(a)) mislassies the full 25% of the testing set for only imbalane levels

2 and 1|the highest degrees of imbalane (some mislassiation also ours at imbalane

levels 3 and 4, but not as drasti as for levels 2 and 1). Note that the diÆulty displayed by

MLPs at onept omplexity 5 for all sizes is probably aused by the fat that, one again

for eÆieny reasons, we did not try networks of apaity greater than 16 hidden units. We,

thus, ignore these results in our disussion.

Another important dierene that an be seen by looking at the graphs for size 5 of

both C5.0 (gures 2(e) and 3(e)) and MLP (gure 26(e) and 27(e)) is that while the overall

size of the training set makes a big dierene in the ase of C5.0, it doesn't make any

dierene for MLP: exept for the highest imbalane levels ombined with the highest degrees

25

of omplexity, C5.0 does not display any notieable error at training set size 5|the highest.

MLPS's on the other hand do. This may be explained by the fat that it is more diÆult

for MLP networks to proess large quantities of data than it is for C5.0.

Beause MLP generally suers from the lass imbalane problem, we asked whether, like

for C5.0, this problem an be lessened by simple tehniques. For the reason of eÆieny

noted earlier and for reasons of oniseness of report, we restrited our experiments to the

ases of random oversampling and random undersampling and to the smallest size (size 1)

ase. The results of these experiments are shown in Figures 28 and 29 whih display the

results obtained with no re-sampling at all (a repeat of gures 26(a) and 27(a)), random

oversampling and random undersampling. Only the orreted and unorreted results are

reported.

The results in these gures show that both oversampling and undersampling have a notieable eet for MLPs, though one again, oversampling seems more eetive. The dierene in eetiveness between undersampling and oversampling, however, is less pronouned

in the ase of MLPs than it was in the ase of C5.0. As a matter of fat, undersampling is

muh less eetive than oversampling for MLP in the most imbalaned ases, but it has omparable eetiveness in all the other ones. This suggests that like for C5.0, simple methods

for ounterating the eet of lass imbalanes should be onsidered when using MLPs.

SVMs and the Class Imbalane Problem

Like for MLPs, more experiments needed to be ran with SVMs than in the ase of C5.0.

Atually, even more experiments were ran with SVMs than with MLPs. We ran SVMs with

a Gaussian Kernel but sine the optimal variane of this kernel is unknown, we tried 10

26

300

300

250

250

200

200

150

150

100

100

50

50

500

450

400

350

300

250

200

150

100

50

0

1

2

3

4

0

5

(a) Imbalaned

1

2

3

4

0

5

(b) Oversampling

1

2

3

4

5

() Undersampling

Figure 30: The Class Imbalane Problem in SVMs|Correted.

40

35

35

40

35

30

30

30

25

25

25

20

20

20

15

15

15

10

10

10

5

5

0

1

2

3

4

5

(a) Imbalaned

0

5

1

2

3

4

5

(b) Oversampling

0

1

2

3

4

5

() Undersampling

Figure 31: The Class Imbalane Problem in SVMs|Unorreted.

dierent possible variane values for eah experiment. We experimented with varianes 0.1,

0.2, et. up to 1. We did not experiment with modiations to the soft margin threshold

(note that suh experiments would be equivalent to the ost-modiation experiments of

C5.0). Like for MLPs, the results are reported a-posteriori (after heking the results with

all the possible varianes, we report only the best results obtained).

As mentioned before, beause of problems of memory apaity, the results are reported

for a training set size of 1 and it was not possible to report results similar to those reported

for MLP in Figures 26 and 27. Instead, we report results similar to those of Figures 28 and 29

for MLPs in Figures 30 and 31 for SVMs. In partiular, these gures show results obtained

by SVMs with no resampling at all, random oversampling and random undersampling for

size 1.

The results displayed in Figures 30(a) and 31(a) show that there is a big dierene

between C5.0 and MLPs on the one hand and SVMs on the other. Indeed, while for both

27

C5.0 and MLPs, the leftmost olumn in a luster of olumns|those olumns representing

the highest degree of imbalanes|were higher than the others (gures 2(a) and 26(a)), in the

ase of SVMs (gure 30(a)) the 5 olumns in the lusters display a at value or the leftmost

olumns have lower values than the rightmost ones (see the ase of onept omplexity 4 in

partiular). The unorreted results in Figure 31(a) reet the fat that SVMs are ompletely

insensitive to lass imbalanes and make, on average, as many errors on the positive and

the negative testing set, and are shown to suer if the relative ost of mislassifying the two

lasses is altered in favour of one or the other lass.

This is quite interesting sine it suggests that SVMs are absolutely not sensitive to the

lass imbalane problem (this, by the way, is similar to the property of the deision tree

splitting riterion introdued by (Drummond and Holte, 00)). As a matter of fat, Figures 30

and 31 (b) and () show that oversampling the data at random does not help in any way

and undersampling it at random even hurts the SVM's performane.

All in all, this suggests that when onfronted to a lass imbalane situation, it might

be wise to onsider using SVMs sine they are robust to suh problems. Of ourse, this

should be done only if SVMs fare well on the partiular problem at hand as ompared to

other lassiers. In our domains, for example, up to onept omplexity 3 (inluded), SVMs

(gure 30(a)) are ompetitive with MLPs (gure 28(a)) and only slightly less ompetitive

with oversampled C5.0 (gure 6(a)). At omplexity 4, oversampled MLPs (gure 28(b)) and

C5.0 (gure 6(a)) are more aurate than SVMs (gure 30(a)).

28

Conlusion

The purpose of this paper was to explain the nature of the lass imbalane problem, ompare

various simple strategies previously proposed to deal with the problem and assess the eet

of lass imbalanes on dierent types of lassiers.

Our experiments allowed us to onlude that the lass imbalane problem is a relative

problem that depends on 1) the degree of lass imbalane; 2) the omplexity of the onept

represented by the data; 3) the overall size of the training set; and 4) the lassier involved.

More speially, we found that the higher the degree of lass imbalane the higher the

omplexity of the onept and the smaller the overall size of the training set, the greater the

eet of lass imbalanes in lassiers sensitive to the problem. The three types of lassiers

we tested were not sensitive to the lass imbalane problem in the same way: C5.0 was the

most sensitive of the three, MLPs ame next and displayed a dierent pattern of sensitivity

(a grayer-sale type ompared to C5.0's whih was more ategorial); and SVMs ame last

sine they were shown not to be at all sensitive to this problem.

Finally, for lassiers sensitive to the lass imbalane problem, it was shown that simple

re-sampling methods ould help a great deal whereas they do not help, and in ertain ases,

even hurt the lassier insensitive to lass imbalanes. An extensive and areful study of

the lassier most aeted by lass imbalanes, C5.0, reveals that while random re-sampling

is an eetive way to deal with the problem, random oversampling is a lot more useful

than random undersampling. More \intelligent" oversampling helps even further, but more

\intelligent" undersampling does not. The ost-modifying method seems more appropriate

than the over-sampling and even foused over-sampling method exept in one ase of very

29

high omplexity and medium-range training set size.

Future Work

The work in this paper presents a systemati study of lass imbalane problems on a large

family of domains. Nonetheless, this family does not over all the known harateristis

that a domain may take. For example, we did not study the eet of irrelevant data in the

majority lass. We assume that suh a harateristi should be important sine it it may

make undersampling more eetive than oversampling or even ost-modifying on domains

presenting a large variane in the distribution of the large lass. Other harateristis should

also be studied sine they may reveal other strengths and weaknesses of the remedial methods

surveyed in this study.

In addition several other methods for dealing with lass imbalane problems should be

surveyed. Two approahes in partiular are 1) over-sampling by reation of new syntheti

data points not present in the original data set but presenting similarities to the existing data

points and 2) learning from a single lass rather than from two lasses, trying to reognize

examples of the lass of interest rather than disriminate between examples of both lasses.

Finally, it would be interesting to ombine, in an \intelligent" manner, the various methods previously proposed to deal with the lass imbalane problem. Preliminary work on this

subjet was previously done by (Chawla et al., 01) and (Estabrooks and Japkowiz, 01), but

muh more remains to be done in this area.

Bibliography

Chawla, N., Bowyer, K., Hall, L., and Kegelmeyer, P. SMOTE: Syntheti Minority Oversampling TEhnique International Conferene on Knowledge Based Computer Systems, 2000

30

Domingos, P. Metaost: A General Method for Making Classiers Cost-sensitive. Proeedings of the Fifth ACM SIGKDD International Conferene on Knowledge Disovery and

Data Mining, pp. 155-164

Drummond, Chris and Holte, Robert Exploiting the Cost (In)sensitivity of Deision Tree

Splitting Criteria, Proeedings of the Seventeenth International Conferene on Mahine

Learning, pp. 239-249, 2000.

Elkan, Charles The Foundations of Cost-Sensitive Learning Proeedings of the Seventeenth

International Joint Conferene on Artiial Intelligene, 2001

Estabrook, A. A Combination Sheme for Indutive Learning from Imbalaned Data Sets

MCS Thesis, Faulty of Computer Siene, Dalhousie University, 2000.

Estabrook, A. and Japkowiz, N. A Mixture-of-Experts Framework for Conept-Learning

from Imbalaned Data Sets, Proeedings of the 2001 Intelligent Data Analysis Conferene.,

2001

Tom E. Fawett and Foster Provost Adaptive Fraud Detetion Data Mining and Knowledge

Disovery, 3(1):291{316, 1997.

Holte, R. C. and Aker L. E. and Porter, B. W. Conept Learning and the Problem of

Small Disjunts Proeedings of the Eleventh Joint International Conferene on Artiial

Intelligene, pp. 813-818, 1989

Nathalie Japkowiz, Catherine Myers and Mark Gluk A Novelty Detetion Approah to

Classiation Proeedings of the Fourteenth Joint Conferene on Artiial Intelligene, 518{

523, 1995.

Nathalie Japkowiz Learning from Imbalaned Data Sets: A Comparison of Various Solutions Proeedings of the AAAI'2000 Workshop on Learning from Imbalaned Data Sets,

2000.

Japkowiz, N. Conept-Learning in the Presene of Between-Class and Within-Class Imbalanes Advanes in Artiial Intelligene: Proeedings of the 14th Conferene of the Canadian

Soiety for Computational Studies of Intelligene, pp. 67-77, 2001.

Lawrene, S., Burns, I., Bak, A.D., Tsoi, A.C., Giles, C.L., Neural Network Classiation and Unequal Prior Class Probabilities G. Orr, R.-R. Muller, and R. Caruana, editors,

Triks of the Trade, Leture Notes in Computer Siene State-of-the-Art Surveys, pp. 299314. Springer Verlag, 1998.

Miroslav Kubat and Stan Matwin Addressing the Curse of Imbalaned Data Sets: OneSided Sampling Proeedings of the Fourteenth International Conferene on Mahine Learning, 179{186, 1997.

31

Miroslav Kubat, Robert Holte and Stan Matwin Mahine Learning for the Detetion of

Oil Spills in Satellite Radar Images Mahine Learning, 30:195{215, 1998.

Murphy, P.M., and Aha, D.W. UCI Repository of Mahine Learning Databases. University of California at Irvine, Department of Information and Computer Siene, 1994.

Lewis, D. and Catlett, J. Heterogeneous Unertainty Sampling for Supervised Learning

Proeedings of the Eleventh International Conferene of Mahine Learning, pp. 148-156,

1994.

Charles X. Ling and Chenghui Li Data Mining for Diret Marketing: Problems and Solutions International Conferene on Knowledge Disovery & Data Mining, 1998.

Pazzani, M., Merz, C., Murphy, P., Ali, K., Hume, T. a nd Brunk, C. Reduing Mislassiation Costs Proeedings of the Eleventh International Conferene on Mahine Learning,

217{225, 1994.

David E. Rumelhart, Geo E. Hinton and R. J. Williams Learning Internal Representations by Error Propagation Parallel Distributed Proessing, David E. Rumelhart and J. L.

MClelland (Eds), MIT Press, Cambridge, MA, 318{364, 1986.

Cullen Shaer Overtting Avoidane as Bias Mahine Learning, 10:153{178, 1993.

Swets, J., Dawes, R., and Monahan, J. Better Deisions through Siene Sienti Amerian,

Otober 2000: 82-97.

32