Provably Efficient GPU Algorithms Nodari Sitchinava and Volker Weichert

advertisement

Provably Efficient GPU Algorithms

Nodari Sitchinava1 and Volker Weichert2

1

arXiv:1306.5076v1 [cs.DS] 21 Jun 2013

2

Karlsruhe Institute of Technology, Karlsruhe, Germany

Goethe University Frankfurt am Main, Frankfurt, Germany

Abstract. In this paper we present an abstract model for algorithm design on GPUs by extending the parallel external memory (PEM) model

with computations in internal memory (commonly known as shared memory in GPU literature) defined in the presence of memory banks and bank

conflicts. We also present a framework for designing bank conflict free

algorithms on GPUs. Using our framework we develop the first shared

memory sorting algorithm that incurs no bank conflicts. Our sorting algorithm can be used as a subroutine for comparison-based GPU sorting

algorithms to replace current use of sorting networks in shared memory.

We show experimentally that such substitution improves the runtime of

the mergesort implementation of the THRUST library.

1

Introduction

In the past 10 years GPUs – the massively parallel processors developed for

graphics rendering – have been adapted for general purpose computation and

new languages like C for CUDA and OpenCL have been developed for programming them. With hundreds of cores on the latest hardware, GPUs have become

a standard computational platform in high-performance computing (HPC) and

have been successfully used to analyze data in natural sciences, such as biology,

chemistry, physics and astronomy. However, general purpose computation on

GPUs is still in the early stages and there is very little understanding of what

kind of algorithms translate into efficient GPU implementations.

In this paper we present a theoretical model for GPUs, which simplifies algorithm design, while capturing most important features of the architecture.

Our model is not completely new – some features of our model have appeared

in literature in one way or another. Our contribution is that we identify the

most important aspects of the GPU architecture, consolidate the algorithmic

techniques and design recommendations that appear in literature, and provide

clear complexity metrics for theoretical analysis of GPU algorithms. Our model

is an extension of existing models of computation, allowing reuse of algorithmic

techniques from existing literature applicable to GPUs. The algorithms designed

in our model come close to the performance of finely tuned state of the art GPU

implementations, and with some additional fine tuning and optimizations outperforms existing implementations. At the same time, our algorithms are simple,

which is a big advantage in code maintainability.

Our model decouples data from threads, which is a more similar to the way

programs are designed on manycore CPUs. The model also simplifies GPU algorithm design by removing the requirement for coalesced accesses to global

memory from the algorithm design process, letting the programmer focus on

defining how to process the data in more familiar way.

Finally, we present a framework that clarifies how to design algorithms to

process data in shared without bank conflicts. It is well known that bank conflicts — multiple threads trying to simultaneously access different locations on

the same memory bank of shared memory — cause a significant slowdown in

GPU implementations and complicate theoretical analysis of GPU algorithms.

Some researchers have presented techniques to avoid bank conflicts for a simple

sequential scan of an array [5]. However, up to now it was not clear how to avoid

bank conflicts for more complex data access patterns. Using our framework it

becomes very easy to identify a bank conflict free algorithm to sort items in

shared memory. Our sorting algorithm can be used as a black box to replace

current implementations of sorting networks to sort items in the base case of

the comparison-based algorithms, which leads to predictable and better overall

runtimes.

2

An algorithmic model for GPUs

The model consists of p multiprocessors and global memory of conceptually

unlimited size shared among all processors. Each multiprocessor consists of w

scalar processors each running a single thread. In addition each multiprocessor

pi contains a cache Ci of size M , which is accessible only by threads of pi .

Initially, the input resides in global memory. To process data, pi must contain

it in its cache Ci . The transfer between the global memory and caches is performed by each multiprocessor using so-called input-output (I/O) operations. In

each I/O operation, each pi can transfer a single block of B elements between the

global memory and its cache Ci , independently of and concurrently with other

multiprocessors. Thus, in each step, up to p distinct blocks can be transferred

between the global memory and the p caches. In case of concurrent writes to

the same element in global memory, an arbitrary multiprocessor succeeds, while

others fail without notification. Concurrent writes to the same block but distinct

addresses within the block succeed in a single I/O.

The multiprocessors communicate with each other only by writing to and

reading from the global memory. To avoid race conditions to global memory, the

execution between different multiprocessors can be explicitly synchronized with

barrier synchronizations. Each synchronization makes sure that all multiprocessors finish their computations and I/Os before proceeding any further.

Each multiprocessor pi consists of w scalar processors, each running a single

thread and executing synchronously. At each time step, all threads execute the

same instruction, but can apply the instruction to any item in the cache, i.e., pi

is a SIMD multiprocessor. An execution of an instruction is broken into three

phases. In the first phase each thread reads data from the appropriate address. In

2

the second phase each thread executes the instruction on the data read in the first

phase. In the third phase each thread writes out the result. Each cache consists

of b = w memory banks. If in either phase more than one thread attempts

to access the same memory bank, a bank conflict occurs and only one of the

threads succeeds and finishes the execution. The other threads stall and replay

the instruction in the next time step. Since execution is performed synchronously

across all threads, the threads do not proceed to the next instruction until all

of them finish executing the current one. Thus, bank conflicts are serialized and

can result in up to w time steps to execute a single SIMD instruction.3

Complexity metrics and runtime. The complexity of an algorithm in

our model consists of three metrics. The first one is the parallel I/O complexity

– the number of parallel blocks transferred between the caches and the global

memory. The second one is parallel time complexity – the number of units of

time it takes to execute the instructions by each multiprocessor. Memory bank

conflicts in caches make it difficult to analyze the second metric. Therefore, in

Section 3 we will describe how to develop algorithms without any bank conflicts.

Finally, since barrier synchronizations take non-trivial amount of time, the third

complexity metric is the number of such synchronizations.

Let λ be the latency of accessing a single block of B elements in global

memory and σ be the upper bound on the time it takes to perform a barrier

synchronization. We call the execution between barrier synchronizations a round.

Let tk (n) and qk (n) be, respectively, the parallel time complexity and parallel

I/O complexity of an algorithm A for round k and R(n) be the total number of

rounds. Then the total runtime of A can be upper bounded as follows:

R(n)

T (n) =

X

R(n)

(tk (n) + λqk (n) + σ) =

k=1

X

k=1

R(n)

tk (n) + λ

X

qk (n) + σR(n)

(1)

k=1

Designing efficient GPU algorithms. An efficient algorithm will miniPR(n)

PR(n)

mize the three functions: R(n), k=1 qk (n) and k=1 tk (n). A familiar reader

will notice that our model describes the access to global memory exactly as the

parallel external memory model of Arge et al. [1]. Thus, we can use the algorithmic techniques developed in the PEM model to optimize the parallel I/O

complexity q(n) and number of rounds R(n) in our GPU model. In section 3 we

describe how to design algorithms without bank conflicts. Then, function tk (n)

for such algorithms can be analyzed similarly to the PRAM algorithms.

Observe, that in some cases minimizing both tk (n) and qk (n) functions will be

conflicting goals: optimal parallel I/O complexity for the problem of list ranking

results in increased time complexity [4]. Therefore, to obtain the best running

time, the values of λ and σ need to be considered.4

3

4

The special case of multiple threads accessing the same word in shared memory can

be executed on modern GPUs in a single time step. However, for simplicity of the

model we ignore this case and it can be considered as a fine-grained optimization.

In our experiments on NVIDIA GTX580, σ is 1-2 orders of magnitude larger than

λ, while λ is 2-3 orders of magnitude larger than access by a thread to data within

cache.

3

2.1

Relation between the model and GPU hardware

We only mention the aspects of GPU hardware that are essential for our model.

For more details of GPU hardware we refer the reader to [6]. Since NVIDIA’s

CUDA is the most commonly applied GPU programming framework, we use it

as a reference to design our model. However, we believe that the concepts can

be applied to any GPU that supports programming beyond custom shaders.

SMs, CTAs and warps. A typical graphics hardware consists of off-chip

graphics memory known as global memory and P streaming multiprocessors

(SMs), each consisting of a number of scalar processors and a faster memory

known as shared memory which is shared among the scalar processors within

the SM, but is inaccessible by other multiprocessors.

A CUDA program (kernel) is executed as a number of threads that are

grouped into Cooperative Thread Arrays (CTAs) or thread blocks. The hardware

performs automatic load balancing when assigning the CTAs to SMs, however,

threads of a single CTA are never split among multiple SMs. An SM in turn is

able to accommodate multiple CTAs, which have to share the register file and

shared memory. A CTA is executed in warps, a hardware unit of w threads that

can be executed at the same time (currently w = 32).

Each multiprocessor in our model represents a CTA running a single warp.

The cache in our model represents a fraction of shared memory available to the

CTA. Note, to keep the model simple, we do not model multiple warps on a

CTA. Instead, s CTAs each running r warps on a hardware with P SMs can

be approximated in our model by p = sr multiprocessors, each containing a

private cache of size M = P M̂ /sr, where M̂ is the size of shared memory on

each SM. Algorithms designed and analyzed in this setting will run only faster if

implemented with multiple multiprocessors grouped as multiple warps per CTA.

Since the shared memory and register file resources are shared among all

the warps, it is beneficial to keep p as low as possible. However, too few CTAs

and warps could translate into underutilized bandwidth between global memory

and SMs. In our experiments (Figure 4) we observed that p = 8P is enough to

reach the maximum throughput between global memory and P = 16 SMs of the

GTX580 graphics card.

Coalesced access to global memory. The global memory has a much

higher latency than the shared memory: shared memory latency is 2 to 14 clock

cycles, depending on the literature (compare [14] and [17]), while global memory

latency is between 400 and 800 clock cycles [14]), but by choosing access patterns

that transfer w consecutive elements at the same time the reads can be bundled

into one memory operation, potentially decreasing the overall latency by a factor

of as much as w = 32 [14]. This is called coalesced memory access. The blockwise access of the I/O operations in our model represent these coalesced memory

accesses. That is, any access to global memory on GPUs can load B contiguous

elements in the same time as a single element and can be performed in parallel

by the multiple streaming multiprocessors. In our experiments we observed that

this is true in practice and the value of B for current GPUs can be estimated

anywhere between w and 4w, depending on the number of warps (each containing

4

Memory Bank 0

A0

A8

A16 A24 A32 A40 A48 A56

Memory Bank 1

A1

A9

A17 A25 A33 A41 A49 A57

Memory Bank 2

A2

A10 A18 A26 A34 A42 A50 A58

Memory Bank 3

A3

A11 A19 A27 A35 A43 A51 A59

Memory Bank 4

A4

A12 A20 A28 A36 A44 A52 A60

Memory Bank 5

A5

A13 A21 A29 A37 A45 A53 A61

Memory Bank 6

A6

A14 A22 A30 A38 A46 A54 A62

Memory Bank 7

A7

A15 A23 A31 A39 A47 A55 A63

Fig. 1: The view of shared memory as a b × ⌈M/b⌉ matrix.

w threads) that each CTA consists of. We observed that as long as we access full

blocks with enough warps, whether in contiguous locations or not (Figures 4),

the full SM to global memory bandwidth is reached, thus, justifying our model.

Memory banks of shared memory. The memory bank layout of caches in

our model mimic the organization of shared memory of SMs. We observed that

memory bank conflicts incur significant penalties on overall running time and

match our predictions of serialization of instructions which cause bank conflicts.

Registers. The fastest memory on GPUs is the register file. Accessing a

register incurs no latency and is thus preferable for many situations. However,

modeling registers adds extra complications. First, registers are private to each

thread and communication of register data among threads of a warp must be performed via shared memory. Second, access pattern to registers must be known at

compile time, otherwise the data is moved to local memory, which is a part of the

global memory put aside for each thread’s. Although local memory is cached in

shared memory, bank conflicts and limited cache size make it difficult to analyze

algorithms that use registers if memory accesses are data-dependent. To keep the

model simple, we do not consider registers. Instead we recommend that explicit

use of registers be considered as a final optimization during implementation,

rather than during algorithm design.

Thread synchronization. Synchronization between all threads of a CTA

can be achieved by an internal function ( syncthreads) and occurs no overhead, but inter-CTA synchronization is costly. While efforts have been made to

achieve synchronization within the program [7], the most common strategy is

to end the running kernel and start another. The time to do this is an order of

magnitude larger than the global memory access latency. The barrier synchronizations of our model represent these types of inter-CTA synchronization. Since

each multiprocessor models a CTA running a single warp, the model does not

consider inter-warp synchronizations within a single CTA.

3

Designing bank conflict free algorithms in shared

memory

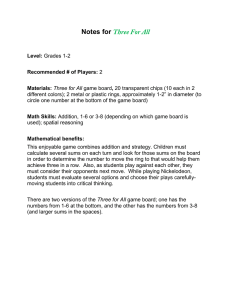

We model the cache of each multiprocessor of size M as a two dimensional matrix

M with b rows and ⌈M/b⌉ columns similar to the view in [5]. Each row of the

5

matrix corresponds to a separate memory bank. When a block of B items is

loaded from global memory to cache, the items of the block are stored in B/b

adjacent columns in the column-major order in the matrix. Thus, an array of

n elements loaded into contiguous space in the cache will be placed into n/b

adjacent columns in the column-major order (see Figure 1).

Observe, that under such view, one can obtain a simple bank conflict free

computation by processing each row of the matrix by a different thread. Note

that if M = b2 , transposition of the matrix will place each column in a separate

row and we can process the original columns with separate threads, again incurring no bank conflicts. Thus, we can design bank conflict free algorithms if we

process matrices by rows or columns.

This approach can easily be generalized for caches of size M = cb2 for small

integer constant c, by transforming the matrix from column-major to row-major

order and vice versa. Since cache space is limited, we must perform the transformation in-place. Although there is no simple in-place algorithm for transforming

general rectangular matrices from row-major to column-major order, c is a sufficiently small integer for current sizes of shared memories on GPUs.

The transformation of a matrix of size M = cb2 from one order into another

can be performed by splitting the rectangular matrix into c square submatrices,

transposing each submatrix and remembering to access the correct submatrices

by index arithmetic. Bank conflict free transposition of a square b × b matrix can

be accomplished trivially in t(b2 ) = b2 /w = b parallel time on a multiprocessor

with w = b threads. The algorithm is given in Appendix B for completeness.

In Section 5 we use this view of shared matrix to implement ShearSort [16] to

obtain the first bank conflict free shared memory GPU sorting implementation.

4

Prefix sums and colored prefix sums

Based on our model, we design a simple three round algorithm for prefix sums,

similar to [12]: a +-reduction round, a spine round and a prefix sums round. Each

multiprocessor pi is assigned a tile: ⌈n/p⌉ contiguous elements of the input. To

process the tile, pi repeatedly loads disjoint pages of size M into its cache.

In the reduction round, pi simply reduces all tile elements using the prefix

sums operation. The result of the +-reduction, which is a single value per tile, is

written out in contiguous space in global memory. The spine round computes the

exclusive prefix sums of the +-reduction round’s results. Since the input data is

very small for this round, we use only a single multiprocessor for this round. In

the prefix sums round, pi scans the tile once more, computing prefix sums using

the inter-tile offsets from the spine round. Within the multiprocessor, we assign

each scalar processor a contiguous part of the page to work on and calculate

intra-page offsets before actually working on the prefix sums.

We expand our prefix sums algorithm to the problem of colored prefix sums.

Given an array of n elements and a set of d colors {c1 , c2 , . . . , cd }, with a color ci

associated with each element, colored prefix sums asks to compute d independent

prefix sums among the elements of the same color.

6

250

100

colored prefix sums

200

kernel 3 runtime

kernel 3 bank conflicts

improved kernel 3 runtime

95

175

100

90

150

85

125

80

100

75

75

70

50

65

25

bank conflicts [106]

150

runtime [ms]

runtime [ms]

200

50

0

60

0

2

4

6

8

10

12

14

16

0

0

colors

2

4

6

8

10

12

14

16

colors

Fig. 2: The runtime of the colored prefix sums algorithm rises with increasing

number of colors. The correlation between the number of bank conflicts and

runtime can be seen in the example of the prefix sums kernel.

The algorithm is basically the same as for prefix sum, apart from having

intermediate values for each color and performing extra operations to identify the

color of each element. The d intermediate values per multiprocessor for the spine

round form a d × p-matrix that is still processed using only one multiprocessor.

Prefix sums operations in shared memory can be performed by one thread per

color without significant slowdown, as they only have to work on w elements.

We would expect the runtime to depend on the input size n, the number of

processors p and the warp size w, but not d since all calculations depending on

the number of colors are performed in parallel. However, the runtime increases

with the number of colors (see Figure 2). The slowdown correlates nicely with the

number of shared memory bank conflicts incurred when calculating intra-page

offsets in the prefix sums round.

To improve our algorithm, we implement a way to calculate the intra-page

offsets without any bank conflicts. We assign each thread one memory bank

for storage of intermediate values. The resulting w × d-matrix lists the color

values in columns. To avoid having multiple threads working on the same banks

we transpose the matrix and then calculate the prefix sums with d threads in

parallel. After retransposing the matrix, we can propagate the offsets.

We observe that the runtime of the algorithm is now constant for different

numbers of colors (see Figure 2). We limit the number of colors to 16 because of

the limited amount of shared memory available for each multiprocessor.

Both algorithms execute one scan of the data in the reduction round, as well

as w computation steps in shared memory. Therefore, the computation time

ps

cps

cps

M

M

tps

1 (M ) = t1 (M ) = O( w ) and parallel I/O is q1 (M ) = q1 (M ) = O( B ). The

data transfer and the computation of the spine round are much smaller: tps

2 (p) =

O( wp · log w) and q2ps (p) = O( Bp ) for simple prefix sums and tcps

2 (d · p) = O(w)

and q2cps (d · p) = O( d·p

B ) for colored prefix sums. These values are too small to

have significant impact on the runtime. The prefix sums round finally causes

two more scans of the data in global memory, as well as two scans in shared

7

M

memory and an extra w for the intra-page offsets, so tps

3 (M ) = O( w + w) and

ps

cps

M

q3 (M ) = q3 (M ) = O( B ). For colored prefix sums we have to perform two

M

additional transpositions that can be done in time O(w), so tcps

3 (M ) = O( w +w)

2

as well. Considering that M = w , B = 4w, and d · p ≪ n, the total runtime is

X

n

tcps

T cps (n) = tcps

2 (d · p) +

k (M )

Mp

k=1,3

X

n

n

n

+ λ q2cps (d · p) +

qkcps (M ) + 3σ = O

+λ

+σ

Mp

wp

wp

k=1,3

5

Sorting

There exist a number of implementations of various sorting algorithms on GPUs.

However, all implementations of comparison-based sorting algorithms have one

thing in common: when the size of the array to be sorted is very small (e.g.

the base case of recursive algorithms), all implementations resort to loading the

array into shared memory and implementing some version of a sorting network.

Sorting networks are ideal for GPUs because they are data oblivious (resulting

in no divergent execution branches) and they are very simple (resulting in very

small constant factors). However, sorting networks still cause bank conflicts. To

the best of our knowledge, there is no implementation of a comparison-based

sorting algorithm that achieves no bank conflicts when sorting small arrays.

Using our view of shared memories (caches) as two dimensional matrices from

Section 3, it becomes very easy to identify a bank conflict free algorithm.

We implement ShearSort [16]. It works on data arranged in an n × m matrix

leaving it sorted in ascending order along the column-major order. It performs

log m rounds of first sorting columns in alternating order and then rows in ascending order. After Θ(log m) rounds matrix is sorted.

On a square matrix of size M = w2 , ShearSort takes O(t̂(w) log w) parallel

time using w threads, where t̂(w) is the time it takes to sort w elements using a

single thread. We can still benefit from the simplicity and data-oblivious nature

of sorting networks by implementing sequential sorting of rows and columns

with a sorting network. Thus, if the row/column sorting is implemented using

Batcher’s sorting network [2], then t̂(w) = O(w log2 w) and the total time it takes

to sort a square matrix is O(w log3 w). Notice, that although this approach incurs

O(log w) extra steps over a simple implementation of a sorting network in shared

memory, this time complexity is guaranteed in the worst case and in practice the

savings due to lack of bank conflicts are larger than the extra O(log w) factor.

We modify the well-known mergesort implementation of the thrust library [3]

to use our ShearSort implementation as base case instead of its own base sorting.

The reason for choosing a mergesort version is the predictable size of the base

case, which enables us to use w × w matrices without the need for padding.

8

CTA0

CTA1

···

CTAP

Setup 1

w00

w10

···

wP 0 w01

w11

···

wP 1

· · · w0W w1W

· · · wP W

Setup 2

Fig. 3: Setup 1 and 2 of the bandwidth experiments with P CTAs and W warps

per CTA (wxy is warp y of CTA x).

It would be interesting to see how the use of ShearSort would perform in

practice on sorting algorithms that do not guarantee fixed sized base case, e.g.

sample sort [10]. We suspect that extra padding would reduce the advantage

of not having any bank conflicts, but the overall running time would still be

improved. We leave this as an open problem for future work.

We cannot analyze the running time of the whole mergesort because the

merging steps still incur bank conflicts. It would be interesting to see if our

framework can help develop a bank conflict free merging, e.g., by using techniques of the external memory algorithms [13] or of Green et al. [8]. Such an

algorithm would be a first fully bank conflict free sorting algorithm on GPUs.

6

Experimental results

We conduct our experiments on a standard PC with a 2.4 GHz Intel Core2 Quad

CPU, 8 GB of RAM and an NVIDIA Geforce GTX580 GPU. Our algorithms

are implemented using the CUDA runtime API and compiled with the CUDA

toolkit version 5.0, gcc version 4.5 and the -O 3 optimization flag.

The runtimes reported do not include the time needed to transfer data from

the host computer to the GPU or back, as both prefix sums and sorting are

primitives often used as a subroutine by more complex algorithms.

6.1

Global memory bandwidth

While the theoretical maximum DRAM bandwidth for our GPU is 192.4 GB/s 5

it is not initially clear how to reach that bandwidth. The CUDA SDK’s sample program to measure the bandwidth using the API’s cudaMemcpy command

reported a bandwidth of 158.9 GB/s for our graphics card.

Merrill describes a scheme [12] that uses NVIDIA’s internal vector classes to

bundle elements. Following this scheme we achieved a bandwidth of 168.2 GB/s.

To verify that the PEM model can serve as a good basis, we created two sets

of experiments. In the first setup, each CTA is assigned one contiguous tile of

the input data and proceeds to read this tile using all its warps. The second

setup breaks with the tile paradigm and treats each warp as a virtual CTA. The

virtual CTAs read one block at a time such that all the ith warp of every CTA

reads a block before any of the (i + 1)th warp of a CTA reads any (see Figure 3).

5

http://www.nvidia.de/object/product-geforce-gtx-580-de.html

9

180

170

170

160

160

150

150

140

140

130

130

throughput [GB/s]

throughput [GB/s]

180

120

110

100

90

80

70

60

120

110

100

90

80

70

60

50

50

40

40

1 block/SM

2 blocks/SM

4 blocks/SM

8 blocks/SM

16 blocks/SM

30

20

10

0

5

10

15

20

25

1 block/SM

2 blocks/SM

4 blocks/SM

8 blocks/SM

16 blocks/SM

30

20

10

30

0

5

10

15

warps/block

20

25

30

warps/block

Fig. 4: Achievable throughput for transfer between global and shared memory in

setups 1 (left) and 2 (right).

0.1

14

thrust scan

b40c scan

our prefix sums

thrust scan

b40c scan

our prefix sums

12

throughput [109 elements/s]

runtime [s]

0.01

0.001

0.0001

10

8

6

4

2

1e-05

216

217

218

219

220

221

222

223

224

225

226

227

228

229

input size

0

216

217

218

219

220

221

222

223

224

225

226

227

228

229

input size

Fig. 5: Runtimes and throughput of prefix sums algorithms.

Our experiments show that eight CTAs per SM and one warp per CTA are

sufficient to reach near peak bandwidth in both setups (see Figure 4). That is

indicative of block accesses of width w elements being feasible to use as unit in

the model. We use this configuration in our kernels.

6.2

Prefix sums

While our kernels are optimized to a point, it sometimes seems excessive to go

even further. For example the summation of w = 32 integers in shared memory

can be performed in parallel in time O(log w). Simply having one thread read

all w values and adding them up in a register is not significantly slower, if at all.

This is done in the reduction kernel of our simple prefix sums implementation.

The spine phase is run on just one CTA because inter-CTA communication is

impossible. The previous kernel’s output is only p = 128 values (8 CTAs·16 SMs)

which are copied into shared memory. The w threads then cooperatively calculate

the prefix sums by four scans of w consecutive values, where each scan starts

with the final value of the previous scan. The scans follow the simple algorithm

10

1

1

thrust mergesort

thrust mergesort (base case 1024)

shearsort

0.8

throughput [109 elements/s]

0.1

runtime [s]

thrust mergesort

thrust mergesort (base case 1024)

shearsort

0.9

0.01

0.001

0.0001

0.7

0.6

0.5

0.4

0.3

0.2

0.1

1e-05

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

input size

0

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

input size

Fig. 6: Runtimes and throughput of thrust mergesort base case in original size

and 1024, as well as ShearSort. The basecase runtimes have been measured with

the NVIDIA Visual Profiler.

by Kogge et al. [9]. Since w consecutive values in shared memory always reside

on different memory banks there are no bank conflicts to worry about.

We compared our prefix sums algorithm against the well-known implementations of the thrust library [3] and the back40computing (b40c) library [11].

Our algorithm outperforms both library implementations on many input sizes,

reaching relative speedups of 13 over thrust and 10 over b40c on smaller inputs. On larger inputs, our implementation is still faster than thrust by a factor

of 1.3, while the highly optimized b40c surpasses our algorithm by a margin of

3%. Figure 5 summarizes the results.

6.3

Sorting

Thrust mergesort [15] is implemented as sorting a small base case and then

iteratively merging the sorted subsequences into larger ones. We replace the base

case by our own ShearSort and adjust the iteration to start at 1024 elements.

Since rows consist of only w = 32 elements and because accesses to registers

are not data dependent, i.e., can be determined at compile time, as an additional

optimization we sort the elements of each row in register space.

For better comparability we modify the thrust library to use a base case size

of 1024 elements (thrust1024 ). While this change causes a slowdown between 1.5

and 4 for the initial sorting, the reduced number of merging phases result in a

faster overall runtime for input sizes smaller than 218 .

On the GTX580, ShearSort fully uses the hardware resources for input sizes

larger than 217 elements. Since smaller inputs simply result in multiprocessors

being idle, it is no surprise that the original thrustorig and thrust1024 are faster

than ShearSort here. For inputs larger than 217 elements, our ShearSort implementation achieves speedups of up to 1.7 over thrustorig and 2.2 over thrust1024 .

11

7

Conclusion

We presented a theoretical model for designing and analyzing GPU algorithms.

Using the model, we designed algorithms which are simple to describe and analyze, yet their implementations are competitive with (and sometimes superior

to) those of the best known GPU implementations. Because we eliminate the

sources of unpredictable runtimes, e.g., bank conflicts, the performance of the

algorithms can be predicted in our model. This provides a basis for algorithm

designers to reason about the performance of their algorithms, thus bridging the

gap between GPU programming and theoretical computer science.

Acknowledgments. We would like to thank Vitaly Osipov for helpful discussions and sharing his insights on GPUs.

References

1. Arge, L., Goodrich, M.T., Nelson, M.J., Sitchinava, N.: Fundamental parallel algorithms for private-cache chip multiprocessors. In: SPAA. pp. 197–206 (2008)

2. Batcher, K.E.: Sorting networks and their applications. In: Proceedings of the

AFIPS Spring Joint Computer Conference. pp. 307–314. ACM (1968)

3. Bell, N., Hoberock, J.: Thrust: A productivity-oriented library for cuda. GPU

Computing Gems: Jade Edition pp. 359–372 (2011)

4. Chiang, Y.J., Goodrich, M.T., Grove, E.F., Tamassia, R., Vengroff, D.E., Vitter,

J.S.: External-memory graph algorithms. In: SODA’95. pp. 139–149 (1995)

5. Dotsenko, Y., Govindaraju, N.K., Sloan, P.P., Boyd, C., Manfedelli, J.: Fast scan

algorithms on graphics processors. In: ICS (2008)

6. Farber, R.: CUDA application design and development. Morgan Kaufmann (2011)

7. Feng, W., Xiao, S.: To GPU synchronize or not GPU synchronize? In: ISCAS’10.

pp. 3801–3804 (2010)

8. Green, O., McColl, R., Bader, D.A.: GPU merge path: a GPU merging algorithm.

In: ICS’12. pp. 331–340 (2012)

9. Kogge, P.M., Stone, H.S.: A parallel algorithm for the efficient solution of a general

class of recurrence equations. IEEE Transactions on Computers C-22(8), 786 –793

(August 1973)

10. Leischner, N., Osipov, V., Sanders, P.: GPU sample sort. In: IPDPS (2010)

11. Merrill, D.: back40computing: Fast and efficient software primitives for GPU computing, http://code.google.com/p/back40computing/, svn checkout 4/21/2013

12. Merrill, D., Grimshaw, A.: Parallel scan for stream architectures. Tech. Rep.

CS2009-14, Department of Computer Science, University of Virginia (2009)

13. Meyer, U., Sanders, P., Sibeyn, J.: Algorithms for Memory Hierarchies: Advanced

Lectures. Springer-Verlag (2003)

14. NVIDIA:

NVIDIA

CUDA

Programming

Guide

5.0

(2012),

http://docs.nvidia.com/cuda/pdf/CUDA_C_Programming_Guide.pdf,

last

viewed on 2/11/2013

15. Satish, N., Harris, M., Garland, M.: Designing efficient sorting algorithms for manycore gpus. In: IPDPS’09. pp. 1–10. IEEE (2009)

16. Sen, S., Scherson, I.D., Shamir, A.: Shear Sort: A true two-dimensional sorting

techniques for VLSI networks. In: ICPP. pp. 903–908 (1986)

12

17. Wong, H., Papadopoulou, M.M., Sadooghi-Alvandi, M., Moshovos, A.: Demystifying GPU microarchitecture through microbenchmarking. In: ISPASS’10. pp.

235–246. IEEE (2010)

13

A

Additional bandwidth

180

180

170

170

160

160

150

150

140

140

130

130

throughput [GB/s]

throughput [GB/s]

We ran experiments in both setups (see Figure 3) both for transfers between

global memory and shared memory and global memory and registers. Another

set of experiments included computation, namely incrementing the data. The

number of increments performed per element was controlled by a parameter.

Apart from the obvious tuning parameters, number of CTAs and number

of warps per CTA, Merrill’s data transfer method [12] proposes two additional

parameters: the vector length for element bundling (this has a direct impact on

the size of the read or write operation performed by CUDA), the number of

accesses per round (can be exploited to overlap computation and data transfer).

120

110

100

90

80

70

60

120

110

100

90

80

70

60

50

50

40

40

1 block/SM

2 blocks/SM

4 blocks/SM

8 blocks/SM

16 blocks/SM

30

20

10

0

5

10

15

20

25

1 block/SM

2 blocks/SM

4 blocks/SM

8 blocks/SM

16 blocks/SM

30

20

10

30

0

5

10

warps/block

15

20

25

30

warps/block

180

180

170

170

160

160

150

150

140

140

130

130

throughput [GB/s]

throughput [GB/s]

Fig. 7: Achievable throughput for transfer from global memory to registers in

both setups.

120

110

100

90

80

70

60

120

110

100

90

80

70

60

50

50

40

40

1 block/SM

2 blocks/SM

4 blocks/SM

8 blocks/SM

16 blocks/SM

30

20

10

0

5

10

15

20

25

1 block/SM

2 blocks/SM

4 blocks/SM

8 blocks/SM

16 blocks/SM

30

20

10

30

0

warps/block

5

10

15

20

25

30

warps/block

Fig. 8: Achievable throughput for transfer from global to shared memory in both

setups.

14

180

180

1 warp/block

2 warps/block

4 warps/block

6 warps/block

8 warps/block

10 warps/block

12 warps/block

170

160

150

140

160

150

140

130

throughput [GB/s]

130

throughput [GB/s]

1 warp/block

2 warps/block

4 warps/block

6 warps/block

8 warps/block

10 warps/block

12 warps/block

170

120

110

100

90

80

70

120

110

100

90

80

70

60

60

50

50

40

40

30

30

20

20

10

10

0

5

10

15

20

25

30

0

5

10

warps/block

15

20

25

30

warps/block

Fig. 9: Achievable throughput for data transfer with increments using 4 CTAs

per SM. Transfer from global memory to registers (left and from global to shared

memory (right).

180

160

150

1 warp/block

2 warps/block

3 warps/block

4 warps/block

5 warps/block

6 warps/block

170

160

150

140

140

130

130

throughput [GB/s]

throughput [GB/s]

180

1 warp/block

2 warps/block

3 warps/block

4 warps/block

5 warps/block

6 warps/block

170

120

110

100

90

80

70

120

110

100

90

80

70

60

60

50

50

40

40

30

30

20

20

10

10

0

5

10

15

20

25

30

0

warps/block

5

10

15

20

25

30

warps/block

Fig. 10: Achievable throughput for data transfer with increments using 8 CTAs

per SM. Transfer from global memory to registers (left and from global to shared

memory (right).

B

Primitives

Data transfer between global and shared memory Based on Merrill’s observations

[12] we use the following scheme to transfer data between global memory and

shared memory. The data is cast into a vector type to increase the load type

size to 128 bits. The requests are split in to multiple rounds (the while-loop

in Algorithm 1) with several vectors being requested to allow the optimizer to

reorganize the requests to achieve optimal throughput (the while-loop in 1 should

be unrolled).

Transposing a matrix in shared memory There is no algorithm known to us that

transposes an arbitrary matrix in-place with O(1) extra memory. Fortunately,

our algorithms operate on either square matrices or m × n-matrices, where m =

2 · n. That allows us to limit our transposition to square matrices, splitting

15

Algorithm 1 Data transfer between global and shared memory

nvec ← pagesize/width vec

elements[count ele ]

count ← threadIdx .x

while count < nvec do

for i ∈ [0, count ele ) do

elements[i] ← in[count + blockDim.x · i]

end for

syncthreads()

for i ∈ [0, count ele ) do

out[count + blockDim.x · i] ← elements[i]

end for

end while

the long matrix into two square matrices and using in-program addressing to

concatenate the pieces.

Algorithm 2 Bank-conflict free transposition of a square matrix

element 1 , element 1 , row

col ← threadIdx .x

for i ∈ [1, count columns ) do

row ← col + i

if row < count rows then

element 1 = matrix [col ][row ]

element 2 = matrix [row ][col ]

matrix [row ][col ] = element 1

matrix [col ][row ] = element 2

end if

syncthreads ()

end for

This function executes one sweep of the matrix below the main diagonal,

using one thread per column. Since no two threads are reading element 1 from

the same row at the same time, and the row where element 2 comes from is

exclusively used by one thread, no bank conflicts can occur.

16