Hardware Assisted Resource Sharing Platform for Personal Cloud

advertisement

Hardware Assisted Resource Sharing Platform for

Personal Cloud

Wei Wang, Ya Zhang, Xuezhao Liu, Meilin Zhang, Xiaoyan Dang, Zhuo Wang

Intel Labs China

Intel Corporation

Beijing, PR China

{vince.wang, ya.zhang, xuezhao.liu, meilin.zhang, xiaoyan.dang, kevin.wang}@intel.com

Abstract—More and more novel usage models require the

capability of resource sharing among different platforms. To

achieve a satisfactory efficiency, we introduce a specific resource

sharing technology under which IO peripherals can be shared

among different platforms. In particular, in a personal working

environment that is built up by a number of devices, IO

peripherals at each device can be applied to support application

running at another device. This IO sharing is our so-called

composable IO because it is equivalent to compose IOs from

different devices for an application. We design composable IO

module and achieve pro-migration PCIe devices access, namely a

migrated application running at the targeted host can still access

the PCIe peripherals at the source host. This is supplementary to

traditional VM migration under which application can only use

resources from the device where the application runs.

Experimental results show that through composable IO,

applications with composable IO can achieve high efficiency

compared with native IO.

Keywords- IO sharing; Live migration; Virtualization

I.

INTRODUCTION

home, he can jointly use smart phone/MID and laptop/desktop

through different network connections to form a personal

Cloud. Under proper resource management scheme, resources

in such a Cloud can be grouped or shared in the most efficient

way to serve this person for his best user experience.

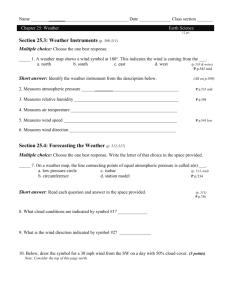

Our vision for future person-centralized working

environment where a personal handheld device, e.g., MID, is

probably the center of a person’s IT environment, is shown in

Figure 1. There are public Clouds that are managed by big data

center providers. A Cloud user can access such Clouds through

Internet, and enjoy different services that may take advantage

of the super computing power and intensive information/data

that data centers provide. There are also personal Clouds that

are built up by a personal handheld device and its surrounding

computing or consumer electronic (CE) devices. The interconnection for the components in a personal Cloud may be, for

example through near field communication (NFC) technology,

under which the underlying networks can be direct cable

connection or wireless networks (e.g., WLAN, WPAN,

Bluetooth, etc.).

The primary goal for Cloud computing is to provide

services that require resource aggregation. Through good

resource management/assignment scheme Cloud resources for

computation, storage, and IO/network can be efficiently

grouped or packaged to accomplish jobs that can’t be handled

by individual devices (e.g., server, client, mobile device).

Cloud may also help to accomplish tasks with a much better

QoS in terms of, e.g., job execution time at a much lower cost

in terms of, e.g., hardware investment and server management

cost.

Most Cloud research focuses on data center, e.g., EC2 [3],

where thousands of computing devices are pooled together for

Cloud service provision. The Cloud usage, however, may also

be applied to personal environment, because today an

individual may have multiple computing or communication

devices. For example, a person may have a cellular phone or a

Mobile Internet Device (MID) that he always carries with him.

He probably also has a laptop or a desktop that has a stronger

CPU/GPU set, a larger MEM/disk, a friendly input interface,

and a larger display. This stronger device may probably be left

somewhere (e.g.,office or home) due to inconvenience of

portability. Once the owner carries a handheld and approaches

to the stronger devices, e.g., when he is set at the office or at

Figure 1. A Person-Centralized Cloud

Efficient management for resource aggregation, clustering,

and sharing is required for both data center Cloud and personal

Cloud. Virtualization has been so far the best technology that

may serve this goal. Based on virtualization, computing and

storage resources can be shared or allocated by generating and

allocating virtual machines (VM) that run part of or entire

applications. In particular, when an application has been

partitioned and run in a number of VMs and each of the VMs

runs at different physical machines, we can say that computing

resources at these machines are aggregated to serve that

application. With the live VM migration capability [1] [2], the

pool of resources can be adjusted by migrating VMs to

different physical machines. In other words, through

virtualization resources at different devices can be aggregated

for single user in a flexible way without any extra hardware

requirements.

keyboard/mouse, and can still access LPIA’s peripherals as the

same manner before migration.

Sharing or grouping CPU computing power by managing

VMs for a large work, as we described in the previous section,

has been widely studied in typical virtualization technologies

including VMware VSphere as well as open source

technologies such as Xen [4] and KVM [5]. VM allocation and

migration are applied for, e.g., load balancing and hot spot

elimination. However, how to efficiently aggregate and share

IO and its peripherals, which is another important resource

sharing issue in Cloud, has not been thoroughly investigated so

far.

The paper is organized as follows. In section 3 we discuss

the resource sharing platform and introduce the composable IO.

In section 3 we present detailed design for composable IO logic.

In section 4 we show major performance measurements.

Finally in section 5 we conclude and list future works.

The methodology of sharing CPU through migration

implies IO sharing, because an application migrated to a new

host can use local IO resources at the target host machine.

However, a resource sharing case that a simple migration

cannot handle is that the required computing resource and IO

resource are located at different physical machines. This may

happen, for example, when an application has to utilize a strong

CPU on a laptop while at the mean time, it relies on handheld

IO functions such as network access.

In this work we address the above IO peripheral sharing

problem by enabling a process running on a physical machine

to access IO peripherals of any other physical machines. In

particular, we consider the highly dynamic nature of personal

Cloud where Cloud components and topology may change

frequently. We cover the case of IO sharing in the context of

VM migration, under which the physical IO that has been

accessed by an application will be maintained during and after

this application has been migrated to different physical

machines.

This remote IO access, or IO composition, is required when

the target host for migration does not have the same IO

environment as the original host, or the equivalent IO part has

been occupied by some other applications. Such a promigration IO access also implies that a user can use aggregated

IO peripherals from different devices, e.g., it can use IO from

both original and target host. Although in this work we use

personal Cloud as our primary investigated scenario, the work

can be extended to data center as well.

As a first step, we design a dedicated hardware to redirect

the mobile device’s I/O peripherals. This dedicated hardware

provides the I/O data path cross LPIA (low power Intel

architecture, e.g. MID and handheld) and HPIA (high

performance Intel architecture, e.g. desktop and laptop), the

LPIA’s application can seamlessly migrate to HPIA to use its

more powerful computation resource and more convenient

In summary, our major contributions are as follows:

– We design the first hardware solution for seamless promigration PCIe IO peripheral access. Such a composable IO

logic provides a much more convenient way for applications to

share Cloud resources especially IO peripherals.

–We prove that the concept works, and can greatly improve

user experience. The I/O device pass-through can be enabled to

provide near native I/O performance to upper layer VM.

II.

COMPOSABLE IO FOR RESOURCE SHARING

A. State of Arts for Resource Sharing

In a personal Cloud the resources may be shared or

aggregated in different ways based on how applications require.

There is a need for computation resource sharing. More than

one device may collaborate and do the computing work

together through, e.g., parallel computing that has been

extensively studied for Grid Computing [7]. Another scenario

for computation resource sharing is that a weak device utilizes

resources from a stronger device. This has been shown in

previous works [8][9]. Graphic applications running at a small

device can be rendered at a device with a stronger GPU and a

larger display, through capturing graphic commands at small

device and sending them to the larger one.

Resource within a Cloud can be shared through either direct

physical resource allocation or virtual resource allocation.

Physical sharing is straightforward. Cloud members are

connected together through networks and assign their disk or

memory an uniform address for access, as what has been done

in [10]. The challenges are that existing network bandwidth

between any two Cloud members is much smaller than the

intradevice IO bandwidth, and the network protocol stack

results in extra latency for data or message move among

devices. This dramatically degrades the application

performance, in particular for real-time services. Even if the

new generation CPU chip architecture and networks may

mitigate such a problem by making the communication

between devices equally fast as within a device, due to the

dynamic nature of personal Cloud caused by mobility of the

centralized user, the Cloud topology and membership may

continuously change. Since any change will cause a reconfiguration for pooling the overall resources in a Cloud and

such a configuration not only takes time but also has to stop all

the active applications, hard resource allocation may not work

well in personal Cloud environment.

To address the above problem, virtualization [4][5] can be

applied and the migration based on virtualization can be used

for resource sharing or re-allocation by migrating applications

among different devices. An application can be carried in a VM

and migrated to a different physical machine that best supports

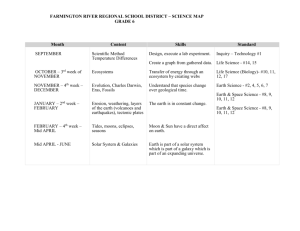

MID Guest OS

PC Guest OS

VMM (PC)

PC

PCIE Root Complex

Native

Performance

624.01MBps

Guest OS

Performance

29.06MBps

VMM

Overhead

95.3%

Emulation

NIC

ParaNIC

624.01MBps 126.42MBps

79.7%

virtualization

Pass-through NIC 624.01MBps 605.29MBps

3%

Compared with emulation and para-virtualization, the passthrough method can significantly reduce the VMM overhead

however, it cannot be used in VM live migration unless there is

a physical link. To better accomplish the IO sharing among

personal Cloud, we propose the Composable IO logic (CIOL)

to redirect MID (LPIA)’s peripherals to PC (HPIA) platform.

Compared to the software redirection [12], which needs VMM

to intercept and redirect I/O operations, the hardware solution

can realize the higher performance I/O virtualization method:

device direct access.

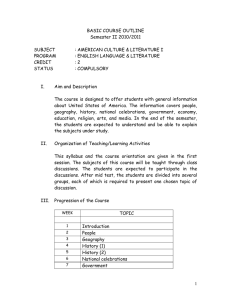

Figure 3 shows the proposed Composable IO logic which

has following components:

To LPIA

On Chip

Fabric

DMA Remapping Unit

MMIO and PIO Remapping Unit

Interrupt Remapping Unit

HPIA Interface

B. Composable IO

Traditional migration enables a certain level of IO sharing

between the origination and destination of a migration. For

example, a migrated process can still access the disk image at

its original host. Technology for pro-migration access for other

IO peripherals such as network adapter and sensors, which are

probably more pertinent to applications, has not been provided.

In personal Cloud, pro-migration access is highly desired

because of large difference in computing capability and broad

variety of IO peripherals among personal devices.

TABLE I. VMM OVERHEAD IN IOV

LPIA Interface

it, probably because that physical machine has the required

computing, network, or storage resources. Virtualization causes

performance and management overhead. However, the

flexibility it brings to resource management makes it by far the

most promising technique for Cloud resource management.

More importantly, VM can be migrated while keeping

applications alive [11]. This advantage makes the resource

sharing through VM allocation/migration even more appealing

in particular for applications that cannot be interrupted.

To HPIA’s

general

Interface

Configuration Space Remapping Unit

IO Redirection Layer

Composable I/O Logic

On Chip Fabric

MID

Device 1(MID)

Device 2(MID)

Device N(MID)

Figure 2. Resource Sharing Platform

The required IO peripheral remote access capability in a

personal Cloud is illustrated in Figure 2. An application

running on laptop (PC) can access the IO peripherals on MID.

In this scenario, the application needs the joint utilization of

computing resource on the laptop and IO peripherals on the

MID. In other words, the overall computing and IO resources

are aggregated and assigned to an application that requires.

Note that the application can also use IO peripherals at the

laptop. As in this case all IO peripherals at both devices are

composed to serve the application, we call this IO sharing

platform composable IO. In the rest of the text, we use promigration IO access and composable IO interchangeably.

Composable IO enables a much richer resource sharing

usage, which helps to achieve easy CPU and IO sharing in a

virtualized Cloud environment. Through composable IO an

application should be able to switch among IO peripherals at

different devices without interrupting applications.

III.

HARDWARE ASSISTED COMPOSABLE IO

There are three kinds of IO virtualization technology, and

the most efficient one is the pass-though method. The other two

methods, emulation and para-virtualization obviously reduce

the IO performance according to our experimental result. With

this component, we can enable pass-through for IO devices.

Our experiments in Table I show the overhead in IOV. The

tested NIC is Intel Pro 1000 Gigabit Ethernet adapter.

Figure 3. HW Assisted Composable IO components

LPIA Interface: Composable I/O Logic acts as a

fabric agent on LPIA platform and the LPIA Interface is

the interface to on chip fabric.

HPIA Interface: Composable I/O Logic acts as a device

on HPIA platform. The interface can be PICe, Light Peak

optical cable or other high speed general purpose I/O interface.

DMA Remapping Unit: In order to support DMA

address translation, a DMA Remapping Unit is needed to pass

the data package to the HPIA memory space in a proper order

and format.

MMIO and PIO Remapping Unit: In order to make the

MID Guest OS, which is running on the HPIA after migration,

accesses the MID devices as the same way, all the IO

transactions between HPIA and MID should be handled by the

MMIO and PIO Remapping Unit.

Interrupt Remapping Unit: If the interrupt comes from

the MID’s device, this unit will forward this interrupt message

to the HPIA, and then trigger one virtual IRQ to Guest OS.

Configuration Space Remapping Unit: This unit is used

to handle the PCI/PCIe configuration message.

With all the functions above, CIOL is required to address

the following issues in IO redirecting:

A. MMIO/PIO Translation

For memory mapped IO, in order to distinguish different

MID’s devices, we need apply a memory space from the HPIA,

larger or equal to the sum of all the MID’s device memory

space. Figure 4 shows the address translation.

MID Memory Space

iii. The CIOL receives the request and then translates it to

Configuration Request to MID device.

PC Memory Space

Device 1 Bar

Byte

0 Offset

00h

31

BAR

Device 1 End

ii. Hypervisor monitors the request and translates it to a

request to CIOL’s register bank;

04h

08h

Device 2 Bar

Device 1 End

Device 2 Start

CIOL

Device 2 End

Device 2 End

Device N Bar

Device N Start

Device N End

Device N End

Figure 4. Memory Address Translation

When it is connected to HPIA, the CIOL applies a memory

space on HPIA platform. The amount of the memory space is

equal to the sum of the device’s memory size. The MMIO/PIO

transaction is trapped by CIOL, and send to the corresponding

device or memory space. The amount of the memory space can

be calculated as:

M IOSF _ PC M MID _ device1 M MID _ device 2

M MID _ deviceN

As shown in Figure 4, CIOL do the address translation

work. The M IOSF _ PC is splited into N segments. Each one

corresponds to an MID device. For PIO, it is similar to MMIO

According to the analysis above, the CIOL should have at

least 3 BARs, one (BAR_CIOL) for its own registers supported

memory transport, one (BAR_MMIO) for MMIO and one

(BAR_PIO) for PIO.

B. Configuration Space Access

There are 3 types of PCIe request: memory, IO and

configuration. The configuration message is generated by the

Root Complex and should be delivered to the device properly.

CIOL is placed between the PC and MID’s devices. To

correctly transfer the configuration messages to the MID’s

devices, we need the software and hardware co-work.

As show in Figure 5, CIOL maintains a register bank which

reflects the configuration space of each device in MID.

31

0

Register Bank on CIOL

Device 1 Configuration

Space

31

31

Device 2 Configuration

Space

0

0

CIOL

31

C. Interrupt Translation

CIOL supports both the MSI and legacy interrupts.

0

1) MSI

Message Signaled Interrupts (MSI) is a feature that enables

a device function to request service by writing a systemspecified data value to a system-specified address (using a PCI

DWORD memory write transaction).

CIOL need do the MSI remapping to support MSI

interrupts redirection. When CIOL is connected to the PC, the

PC software will read the Message Control registers and

provide software control over MSI.

The steps of MSI remapping are shown in Figure 6.

Device 1 interrupt

CIOL Interrupt Vector 1

Device 1

Memory address 1(MID)

Memory address 1(PC)

Device 2

Memory address 2(MID)

Device N interrupt

CIOL Interrupt Vector N

Device N

Memory address N(MID)

Memory address N(PC)

Device 2 interrupt

On the starting process, MID indicates the number of

interrupt vectors that the CIOL needs. When it is connected to

PC, CIOL will tell the PC to allocate corresponding resources.

When a MSI indicates arrives, the CIOL replaces the MID’s

memory address to the PC’s memory address and sends it to

the PC.

2) Legacy Interrupts

The legacy interrupts is supported by using virtual wires.

CIOL converts Assert/DeAssert messages to the corresponding

PCIE MSI messages and maps different device’s virtual wire to

PCIE INTx messages.

D. CIOL working flow

1) Configuration

When CIOL is connected to PC, the MID and PC configure

the CIOL one by one. Configuration from PC starts after

MID’s configuration as the MID should configure some

registers to do the memory mapping.

The MID configures the following CIOL registers:

MID_SM_ready: Indicate the MID is ready to send

memory data to PC;

MID_Device_No: Indicate how many devices on the

MID, 32 as the max;

MID_D(n)_Bar: Indicate Device n’s Bar, n depends

on the MID_Device_No;

MID_D(n)_Size: Indicate Device n’s Memory’s space,

n depends on the MID_Device_No;

Figure 5. CIOL Register Bank

i. Guest OS generates a Configuration Request;

Memory address 2(PC)

Figure 6. MSI remapping

Device N Configuration

Space

The configuration message translation has the following

steps:

CIOL Interrupt Vector 2

Composable IO

Logic

When MID finishes its configuration, CIOL will become

available to PC and PC will start its configuration. It allocates

corresponding memory space to the CIOL and set the

PC_MR_ready to 1.

PC_MR_ready: Indicate the PC is ready to receive

memory data;

As the MID finds both the MID_SM_ready and

PC_MR_ready are asserted, the memory transfer starts.

2) Memroy Transfer

Memory transfer between two platforms is accomplished

by DMA. MID DMA the data to CIOL, and then CIOL

repackages the data to PC. PC uses BAR_ CIOL to configure

following registers:

DMA_ready: Indicate PC is ready to receive DMA

data;

DMA_address: Indicate the DMA base address;

DMA_length: Indicate the DMA’s length;

3) IO Redirction

After the migration, the MID’s Guest OS is running on

PC’s VMM. It uses the same way to access the peripherals as

in the original platform. The accessing steps are explained as

following steps:

i. Guest OS initiates device accessing request, the

device’s address is MID’s memory space;

ii. The request is trapped by PC’s VMM. It transmits the

request to the CIOL;

iii. CIOL receives the request and decodes the address. It

repackages the request to the on chip bus and replaces

the original address by the on chip address;

The memory mapping method is shown in Figure 4.

IV.

VERIFICATION AND EXPERIMENTAL RESULT

In the testing environment, there are two devices connected

through cable network. One of the devices is MID and the

other is laptop. For MID, it has Intel Menlow platform, with an

Atom CPU Z530 (1.33 GHz), a 512K L2 Cache, and a 533

MHz FSB. Memory size is 2G. In MID host OS runs Linux

Kernel 2.6.27, while Guest OS is Windows XP SP3. For laptop,

it is HP DC 7700 CPU, with a Core 2 Duo E6400 2.13GHz

processor, a 2MB L2 Cache, and a 1066MHz FSB. Memory

size is 4G. In laptop host OS is Linux Kernel 2.6.27 and Guest

OS is also Windows XP SP3.

To validate our idea, we realized the device pass through

support on MID platform, and implemented the passed through

device state migration during the VM migration step. The

device pass through is based on memory 1:1 mapping

mechanism as the current LPIA is short of VT-d support.

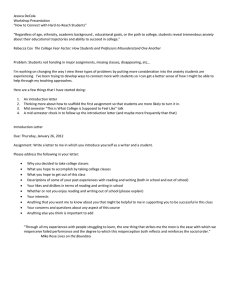

A. Verification Hardware

Figure 10 is the verification platform. This platform

contains 2 PCIex1 ports. One simulates the MID interface and

the other one as the PC interface. The proposed CIOL is

verified in the FPGA.

Figure 7. Verification Platform

To do seamless migration verification, a modified PCIe

switch with a MUX logic is synthesized in order to obtain the

goal of seamless migration. The modified PCIe switch own all

function of a standard PCIe switch. The architecture is shown

in Figure 8.

CPU

CPU

MCH

MCH

ICH

ICH

Modified

PCIe Switch

Address

Translation

Reset

Filter

Mux

Peripheral

Device

Figure 8. Device Seamless Migration Verification

B. Device Pass-through

In 1:1 mapping, the mapping between GPA (Guest Physical

Address) and HVA/HPA (Host Virtual/Physical Address) are

fixed, established at VM initialization stage and kept

unchanged during runtime.

We changed the host OS kernel to reserve low end physical

memory and added a new option to make the VMM using the

reserved memory as guest OS memory, by this mechanism the

1:1 mapping is enabled and then GPA becomes equal to HPA.

So the GPA to HPA address translation is not needed for DMA

operations and device pass through become possible at VT-d

less platform.

For device pass-through, the MMIO or PIO is still needed

to be trapped and emulated by VMM. In our experiment, the

MMIO&PIO operations are intercepted by KVM and routed to

physical device’s PCI memory/IO space. The device interrupt

is served by host OS’ ISR (Interrupt Service Routine) and

injected to guest OS. The DMA operations are bypassed by

KVM.

C. Device State Migration

Hot removing the passed through device before migration

and then hot adding it after migration can make the Guest OS

continue using it, but the device driver inside guest OS needs to

be reloaded and the status is lost. Take NIC for instance, all

connections will be reset due to the migration. There are

several proposed solutions to remain the status and connection

during migration: enabling a virtual NIC and bonding the real

NIC and virtual NIC, letting the virtual NIC take over the I/O

traffic when the real device is hot removed; another solution is

by leveraging “shadow drivers” to capture the state of a passthrough I/O driver in a virtual machine before migration and

start a new driver after migration. However, those solutions

need guest OS modification which needs much additional

effort.

Our proposed CIOL can address the problem mentioned

above. To demonstrate CIOL works well in this scenario, we

realized a new method to solve the problem mentioned above,

which needs no modification of guest OS and is transparent to

up layer applications.

Figure 12 shows the device state migration. The guest OS

has a pass-through device hosted on MID. To pass through a

device to guest OS, the VMM/Hypervisor emulates the

device’s MMIO and PIO and routes guest OS’ MMIO&PIO

operations to host OS/VMM’s physical PCI memory/IO spaces.

The device interrupt is served by host OS/VMM’s ISR and

injected to guest OS while DMA operations are passed through

by VT-d’s address translation or 1:1 mapping mechanism.

After the guest OS is migrated to PC/LAPTOP equipped with

the same device from our verification board, it cannot access

the passed through device since the PCI memory space and

IRQ resources are different at target platform. We reestablish

the mapping between guest OS’ resource and host OS/VMM’s

resource to make the guest OS access the device by the same

manner before migration.

Figure 9. Device state migration

The passed through device information migration and

restoration ability is added to the VM migration process. When

the memory state migration is done, VMM migrates every

passed through device’s information together with the memory

state if presents. The transferred device information includes

the device’s ID (including vendor ID, device ID etc.), the guest

OS’ PCI memory/IO space allocated to that device(including

the base address, size and type), and the guest OS’ IRQ number

for that device.

At the end of VM migration process in acceptance part,

VMM checks if there are passed through device information

embedded, if found, it executes the below steps:

Step 1: Looks for the physical device based on the device

ID extracted from passed through device information from

MID, gets the PCI spaces resources allocated to the device

at host OS/VMM, allocates an IRQ number and registers an

ISR for that device.

Step 2: Establishes the mapping between guest OS’ PCI

spaces and host OS/VMM’s PCI space resource. The guest

OS’ PCI space information is also extracted from passed

through device information from MID. The MMIO&PIO

operations derived from guest OS’ driver will be served by

the MMIO&PIO emulator and routed to the real device’s

PCI spaces.

Step 3: Establishes the mapping between guest OS’ ISR and

host OS/VMM’s ISR, the guest OS’ IRQ number is

extracted from passed through device information from

MID. The device’s interrupt is served by host OS/VMM’s

ISR and injected to guest OS’ original ISR.

Step 4: Enables the device. The PCI/PCIe device on the

target platform must be enabled before using (resuming the

guest OS), or the device will not give response to up layer

software. It is done by writing a value (“0x7” is a common

value) to the command register.

The sequence of step2 and step3 can be exchanged as it

does not affect the result. After executing these 4 steps, the

guest OS can be resumed executing and the device driver can

access its device by the same manner just as before migration.

Because the device’s status and TCP/IP connection are kept in

guest OS, the applications can seamless continue executing

after migration.

D. Experimental Result

We have implemented the prototype of this idea

successfully. In the prototype, a guest Windows is migrated

between MID (Menlow platform) and PC/LAPTOP platforms

with the MUX logic described above. We test both Intel Pro

1000 Ethernet card and Intel 4965 AGN wireless NIC. The up

layer driver only reads NIC’s hard MAC at driver initialization

period and keep it at memory after that, after being migrated

and run on a new NIC, the up layer driver still treats its MAC

as the kept MAC so the TCP/IP traffic can continue seamlessly.

For wireless NIC, there is firmware running on NIC which is

not migrated to target. However, the guest OS’ wireless driver

can treat it as a firmware error and reload firmware to the new

NIC, and the association information and beacon template are

stilled kept unchanged at driver, so the association and network

connection can still be remained and kept unchanged after

being migrated to target.

V.

CONCLUSIONS AND FUTURE WORKS

In this work we design and evaluate composable logic for

personal Cloud applications. IO access is kept alive after live

migration. Such a pro-migration IO sharing helps Cloud to

more flexibly arrange and allocate resources, in particular when

an application may require computing resources and IO

peripherals at different physical machines. Experimental results

validate our design.

At former stage of our work [12], we have implemented the

pure-software solutions which the USB devices or block

devices’ data is redirected to PC/LAPTOP. At that kind of

method, the I/O operations is needed to be intercepted and

redirected by a software module, so the I/O operations cannot

be bypassed between KVM and guest OS, and hence device

pass-through cannot be used for I/O device virtualization.

There are mainly two problems for that kind of pure software

solution:

The I/O virtualization efficiency is very low (4.7% for

Ethernet NIC emulation and 20.3% for Ethernet NIC

para-virtualization)

Much additional software efforts are needed to

realize/maintain the I/O device emulator or paravirtualization driver and the intercept/redirect module.

To enhance the efficiency, we raise our proposal to resolve

following problem: device pass-through plus hardware based

CIOL. The device pass-through can get near native I/O

performance and the additional software efforts to realize the

emulator and para-virtualization driver is also being removed.

The hardware based CIOL provides the data path to make the

applications can still access its original device after being

migrated.

REFERENCES

[1]

T. Wood, P. Shenoy, A. Venkataramani, and M. Yousif, ”Black-box and

Gray-box Strategies for Virtual Machine Migration”, In Proc. 4th

Symposium on Networked Systems Design and Implementation (NSDI),

2007.

[2]

2. C. P. Sapuntzakis, R. Chandra, B. Pfaff, J. Chow, M. S. Lam, and M.

Rosenblum, ”Optimizing the Migration of Virtual Computers”, In Proc.

5th Symposium on Operating Systems Design and Implementation

(OSDI), 2002.

[3] EC2: Amazon Elastic Compute Cloud, http ://aws.amazon.com/ec2/.

[4] P. Barham, B. Dragovic, K. Fraser, S. Hand, T. Harris, R. N. Alex Ho, I.

Pratt, and A.Warfield, ”Xen and the Art of Virtualization”, in

Proceedings of ACM Symposium on Operating Systems Principles

(SOSP 2003), 2003.

[5] KVM Forum, http ://www.linux-kvm.org/page/KVMF orum.

[6] Takahiro Hirofuchi, Eiji Kawai, Kazutoshi Fujikawa, and Hideki

Sunahara, ”USB/IP - a Peripheral Bus Extension for Device Sharing

over IP Network”, in Proc. of 2005 USENIX Annual Technical

Conference.

[7] K. Krauter1, R. Buyya, and M. Maheswaran, ” A taxonomy and survey

of grid resource management systems for distributed computing”,

SOFTWARE RACTICE AND EXPERIENCE, 32:135164, 2002.

[8] X. Wu and G. Pei, ”Collaborative Graphic Rendering for Improving

Visual Experience”, in Proc.of Collabratecomm, 2008.

[9] Yang, S. J., Nieh, J., Selsky, M., and Tiwari, N, ”The Performance of

Remote Display Mechanisms for Thin-Client Computing”, in

Proceedings of the General Track of the Annual Conference on USENIX

Annual Technical Conference, 2002.

[10] Amnon Barak* and Avner Braverman, ”Memory ushering in a scalable

computing cluster”, Microprocessors and Microsystems, Volume 22,

Issues 3-4, 28 August 1998, Pages 175-182.

[11] Christopher Clark, Keir Fraser, Steven Hand, Jacob Gorm Hanseny, Eric

July, Christian Limpach, Ian Pratt, Andrew Warfield, ”Live Migration of

Virtual Machines”, in NSDI 2005.

[12] X. Wu, W. Wang, B. Lin, M. Kai, ” Composable IO: A Novel Resource

Sharing Platform in Personal Clouds”, in Proc.of CoudCom, 2009.