The Sensory Order The Road to Servomechanisms: The Influence of

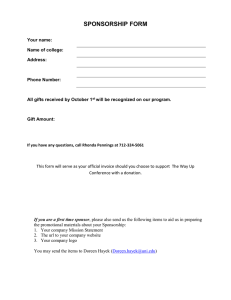

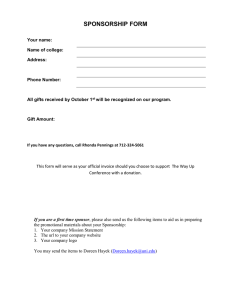
