Lecture 14: Regression specification, part I
BUEC 333, Professor David Jacks


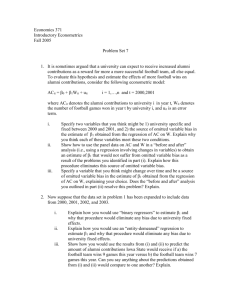

Violating the classical assumptions

All the way back in Lecture 11, we set out the six assumptions of the CLRM. We also noted that when these six assumptions are satisfied, the least squares estimator is BLUE. Given the prominence of the OLS estimator in empirical applications, we would like to know whether or not the classical assumptions are satisfied.

In the remaining weeks of the term, we will consider in more detail:
1.) what happens to the OLS estimator when the classical assumptions are violated;
2.) how to test for these violations; and
3.) what to do about it if we detect a violation.

Violating Assumption 1

Assumption 1 of the CLRM:
1.) the model has the correct functional form:
a.) the regression function is linear in the coefficients, E(Yi|Xi) = β0 + β1Xi;
b.) we have included all the correct X's; and
c.) we have applied the correct transformation of Y and the X's.

We have already noted that Assumption 2 is a pretty weak assumption, as OLS will automatically correct for a violation of it. We also rely on theory and intuition to tell us that the regression is linear in the coefficients; if it is not, we can estimate a non-linear regression model. So we begin by considering the case where our regression model is incorrectly specified and specification errors are a problem.

Specification

Every time we estimate a regression model, we make some very important choices (unfortunately, sometimes without even thinking about it):
1.) What independent variables belong in the model?
2.) What functional form should the regression function take (e.g., logarithms, quadratic, …)?

The fact that we have a choice is both good and bad. On the good side, it represents flexibility (often needed in the "real world" of data analysis). On the bad side, it raises the prospect of searching over specifications until we get the results that prove our point.
Again, we look to theory and intuition to guide us, but more often than not, justifying our decisions is an exercise in persuasion. The particular model that we decide to estimate (and interpret) is the culmination of these choices: we call it a specification. A regression specification consists of the model …

Specification error

It is convenient to think of there being a right answer to each of the questions from before. That is, a correct specification does indeed exist. Sometimes, we refer to this as the data generating process (DGP), the true model that "generates" the data we actually observe.

One way to think about regression analysis, then, is that we want to learn about the DGP given the data at hand (i.e., our sample). A regression model that differs from the DGP is an incorrect specification, leading us to say that the regression model is mis-specified. Again, an incorrect specification arises …

Choosing the independent variables

We will begin by talking about the choice of which independent variables to include in the model and the types of errors we can make:
1.) We can exclude (leave out) one or more important independent variables that should be in the model.
2.) We can include irrelevant independent variables that should not be in the model.
3.) We can choose a specification that confirms what we hoped to find without relying on theory or intuition to specify the model.

In what follows, we discuss:
a.) the consequences of each of these kinds of specification error and …

Omitted variables

Suppose the true DGP is:
Yi = β0 + β1X1i + β2X2i + εi
But instead, we estimate the regression model:
Yi = β0 + β1X1i + εi*
Think of this in terms of a regression where Y is earnings, X1 is education, and X2 is "work ethic".

The question now becomes: what are the consequences of omitting the variable X2 from our model? Does it mess up our estimates of β1?
It definitely messes up our interpretation of β1: with X2 in the model, β1 measures the marginal effect of X1 on Y holding X2 constant…

Omitted variable bias

It is pretty easy to see why leaving X2 out of the model biases our estimate of β1: the error term in the mis-specified model is now εi* = β2X2i + εi. So, if X1 and X2 are correlated (and β2 ≠ 0), then Assumption 3 of the CLRM is violated: X1 is correlated with εi*. This implies that if X2 changes, so do εi*, X1, and Y.

That is, β1 measures the effect of X1 and (some of) the effect of X2 on Y; consequently, our estimate of β1 is biased.

Return to the earnings example from before. Suppose the true β1 > 0, so that more educated workers earn more. Also suppose β2 > 0, so that workers with a stronger work ethic earn more. Finally, suppose that Cov(X1,X2) > 0, so that workers with a stronger work ethic acquire more education on average. When we leave work ethic out of the model, β1 measures the effect of education and work ethic on earnings. If we were very lucky, then Cov(X1,X2) = 0 …

Is the bias positive or negative?

We know that if Cov(X1,X2) ≠ 0 and we omit X2 (with β2 ≠ 0) from the model, our estimate of β1 is biased: $E[\hat{\beta}_1] \neq \beta_1$.

But is the bias positive or negative? That is, can we predict whether $E[\hat{\beta}_1] > \beta_1$ or $E[\hat{\beta}_1] < \beta_1$? In fact, we have shown in Lecture 11 that

$$\text{Bias} = E[\hat{\beta}_1] - \beta_1 = \beta_2 \, \frac{\text{Cov}(X_1, X_2)}{\text{Var}(X_1)}$$

Therefore:
1.) missing regressors with zero coefficients (β2 = 0) do not cause bias;
2.) uncorrelated ("non-co-varying") missing regressors (Cov(X1,X2) = 0) do not cause bias.

Consider our previous example, where β2 > 0 and Cov(X1,X2) > 0:

$$\text{Bias} = E[\hat{\beta}_1] - \beta_1 = \beta_2 \, \frac{\text{Cov}(X_1, X_2)}{\text{Var}(X_1)} > 0$$

Hence, we overestimate the effect of education on earnings; that is, we measure the effect of both having more education and having a stronger work ethic.
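The bias formula can be checked with a small Monte Carlo experiment. The sketch below is a minimal illustration that uses hypothetical parameter values not taken from the lecture: β0 = 1, β1 = 2, β2 = 1.5, and X1 = 0.5·X2 + u, with X2 and u independent standard normal draws. These values are chosen only so that β2 > 0 and Cov(X1, X2) > 0, as in the earnings example. Regressing Y on X1 alone should then give estimates centred on β1 + β2·Cov(X1,X2)/Var(X1) = 2 + 1.5 × 0.5/1.25 = 2.6.

```python
import random

# Hypothetical DGP (illustrative values, not from the lecture):
#   Y = BETA0 + BETA1*X1 + BETA2*X2 + eps,  with  X1 = GAMMA*X2 + u
BETA0, BETA1, BETA2 = 1.0, 2.0, 1.5
GAMMA = 0.5    # makes Cov(X1, X2) = GAMMA * Var(X2) > 0
VAR_X2 = 1.0   # X2 ~ N(0, 1)
VAR_U = 1.0    # u  ~ N(0, 1), independent of X2


def ols_slope(x, y):
    """Slope from a simple regression of y on x: sample Cov(x, y) / Var(x)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def short_regression_slope(n, rng):
    """Draw one sample from the true DGP, then estimate beta1 with X2 omitted."""
    x1, y = [], []
    for _ in range(n):
        x2 = rng.gauss(0.0, VAR_X2 ** 0.5)                # omitted variable
        x1_i = GAMMA * x2 + rng.gauss(0.0, VAR_U ** 0.5)  # included variable
        eps = rng.gauss(0.0, 1.0)                         # true error term
        x1.append(x1_i)
        y.append(BETA0 + BETA1 * x1_i + BETA2 * x2 + eps)
    return ols_slope(x1, y)


def run_simulation(reps=1000, n=200, seed=333):
    """Return (average short-regression estimate, bias predicted by the formula)."""
    rng = random.Random(seed)
    estimates = [short_regression_slope(n, rng) for _ in range(reps)]
    mean_estimate = sum(estimates) / reps
    cov_x1_x2 = GAMMA * VAR_X2
    var_x1 = GAMMA ** 2 * VAR_X2 + VAR_U
    predicted_bias = BETA2 * cov_x1_x2 / var_x1  # beta2 * Cov(X1,X2) / Var(X1)
    return mean_estimate, predicted_bias


if __name__ == "__main__":
    mean_est, bias = run_simulation()
    print(f"true beta1            : {BETA1:.3f}")
    print(f"average estimate      : {mean_est:.3f}")
    print(f"simulated bias        : {mean_est - BETA1:.3f}")
    print(f"bias from the formula : {bias:.3f}")
```

Setting BETA2 = 0 or GAMMA = 0 makes both the predicted and the simulated bias vanish. These two cases correspond to the zero-coefficient and uncorrelated missing-regressor cases listed above.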
How much bias?

The amount of bias introduced by omitting a variable equals the impact of the omitted variable on the dependent variable times a function of the correlation between the omitted and the included variables. In particular,

$$\text{Bias} = \beta_{\text{omitted}} \cdot f(r_{\text{included, omitted}})$$

From the earnings regression with work ethic, $\beta_{\text{omitted}} > 0$ and $r_{\text{included, omitted}} > 0$ …

Detecting and correcting omitted variable bias

So, how do we know if we have omitted an important variable? Before we specify, we must think hard about what should be in the model: what theory and intuition tell us are important predictors of Y. Before we estimate, always predict the signs of the regression coefficients; if we generate a "wrong" …

Next, how do we correct for omitted variable bias? The "easy" way out is just to add the omitted variable to the model, but presumably we would have done this in the first place if it were possible. We can also include a "proxy" for the omitted variable instead, where the proxy is something highly correlated with the omitted variable.

Including irrelevant variables

What about the opposite problem of including an independent variable in our regression that does not belong there? Suppose the true DGP is Yi = β0 + β1X1i + εi, but the model we estimate is Yi = β0 + β1X1i + β2X2i + εi*.

If X2 really is irrelevant, then β2 = 0 and
1.) our estimates of β0 and β1 will be unbiased;
2.) our estimate of β2 will be unbiased, as we expect it to be zero.
In practice, this requires that Cov(X2,Y) = 0, as β2 = Cov(X2,Y)/Var(X2). Oftentimes, it is possible for X2 and Y to co-vary …

Recall the gravity model from Lecture 13:

| Source | SS | df | MS |
|---|---|---|---|
| Model | 1123.39127 | 2 | 561.695635 |
| Residual | 533.896273 | 146 | 3.65682379 |
| Total | 1657.28754 | 148 | 11.1978888 |

Number of obs = 149, F(2, 146) = 153.60, Prob > F = 0.0000, R-squared = 0.6778, Adj R-squared = 0.6734, Root MSE = 1.9123

| lntrade | Coef. | Std. Err. | t | P>\|t\| | 95% CI lower | 95% CI upper |
|---|---|---|---|---|---|---|
| lngdpprod | 1.469513 | .1002652 | 14.66 | 0.000 | 1.271355 | 1.667672 |
| lndist | -1.713246 | .1351385 | -12.68 | 0.000 | -1.980326 | -1.446165 |
| _cons | 18.09403 | 2.530274 | 7.15 | 0.000 | 13.09334 | 23.09473 |

Now add a variable, i, indicating whether a country's name starts with "I":

| Source | SS | df | MS |
|---|---|---|---|
| Model | 1200.85538 | 3 | 400.285125 |
| Residual | 456.432168 | 145 | 3.14780805 |
| Total | 1657.28754 | 148 | 11.1978888 |

Number of obs = 149, F(3, 145) = 127.16, Prob > F = 0.0000, R-squared = 0.7246, Adj R-squared = 0.7189, Root MSE = 1.7742

| lntrade | Coef. | Std. Err. | t | P>\|t\| | 95% CI lower | 95% CI upper |
|---|---|---|---|---|---|---|
| lngdpprod | 1.533656 | .0939199 | 16.33 | 0.000 | 1.348027 | 1.719285 |
| lndist | -1.65529 | .125924 | -13.15 | 0.000 | -1.904174 | -1.406406 |
| i | -1.748512 | .3524703 | -4.96 | 0.000 | -2.445156 | -1.051869 |
| _cons | 16.39695 | 2.372372 | 6.91 | 0.000 | 11.70806 | 21.08585 |

Even for a truly irrelevant independent variable with zero covariance, we still pay the cost of losing a degree of freedom. Thus, we should expect the adjusted R² to fall, as

$$\bar{R}^2 = 1 - \frac{\sum_i e_i^2 / (n-k-1)}{\sum_i (Y_i - \bar{Y})^2 / (n-1)},$$

and we should expect less precise estimates in general (larger standard errors, smaller t statistics) for the other coefficients, as

$$\widehat{\text{Var}}(\hat{\beta}_1) = \frac{\sum_i e_i^2 / (n-k-1)}{\sum_i (X_i - \bar{X})^2}.$$

Irrelevant versus omitted variables

We want to avoid both omitting relevant variables and including irrelevant ones, but which is the greater sin? Omitting relevant variables induces omitted variable bias but has no clear effect on variance. Including irrelevant variables is less of a problem: OLS is still unbiased, but it is no longer best.

At the end of the day, it is up to you as the econometrician to decide which independent variables to include in the model. Naturally, there is a temptation to choose the model that fits "best" or that tells you what you (or your boss or your client) want to hear. Resist this temptation, and instead let theory and intuition be your guide! This brings us to data mining as a (potentially) bad practice.
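The gravity-model tables show why the model that fits "best" can be a poor guide. The short sketch below recomputes R² and the adjusted R² formula from the sums of squares reported in the two tables, with n = 149 and k = 2 or k = 3 slope coefficients. All numbers are copied from the tables; the dictionary layout and function names are just illustrative choices. It reproduces the reported figures (0.6778 and 0.6734 without i; 0.7246 and 0.7189 with i). So adding i raises both measures, even though whether a country's name starts with "I" presumably plays no economic role in a gravity model.

```python
# Sums of squares copied from the two Stata tables above (n = 149 in both).
N = 149
TSS = 1657.28754  # Total SS, identical in both regressions

MODELS = {
    "lngdpprod, lndist":    {"model_ss": 1123.39127, "rss": 533.896273, "k": 2},
    "lngdpprod, lndist, i": {"model_ss": 1200.85538, "rss": 456.432168, "k": 3},
}


def r_squared(model_ss, tss):
    """R^2 = explained (Model) SS / total SS."""
    return model_ss / tss


def adjusted_r_squared(rss, tss, n, k):
    """Adjusted R^2 = 1 - [RSS / (n - k - 1)] / [TSS / (n - 1)], k = number of slopes."""
    return 1.0 - (rss / (n - k - 1)) / (tss / (n - 1))


results = {}
for name, m in MODELS.items():
    r2 = r_squared(m["model_ss"], TSS)
    adj = adjusted_r_squared(m["rss"], TSS, N, m["k"])
    results[name] = (r2, adj)
    print(f"{name:22s}  R2 = {r2:.4f}   adjusted R2 = {adj:.4f}")
```

Adjusted R² rises when an added variable's t statistic exceeds 1 in absolute value. Here i has t = -4.96, so the in-sample fit improves even for a variable the slides treat as irrelevant. This is one concrete way in which X2 and Y can co-vary in a given sample.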
Data mining

Data mining (in econometrics) consists of estimating lots of "candidate" specifications and choosing the one whose results we like the most. The problem is that we will discard the specifications:
1.) where the coefficients have the "wrong" sign (not wrong theoretically, but "wrong" in the sense that we do not like the result) and/or …

So we end up with a regression model where the coefficients we care about have big t statistics and the "right" signs. But how confident can we really be of our results if we threw away lots of "candidate" regressions? Did we really learn anything about the DGP?

Data mining can also be used to explore a data set to uncover empirical regularities that can inform economic theory: an inductive rather than a deductive approach. There is nothing wrong with this if it is used appropriately: a hypothesis developed using data mining techniques must be tested …

The RESET test

A common test for specification error is Ramsey's Regression Specification Error Test (RESET). It works as follows:
1.) Estimate the regression you care about (the one you want to test); suppose it has k independent variables and call it Model 1.
2.) Obtain the fitted values, Y-hat, from Model 1.
3.) Regress Y on the k independent variables and on M powers of Y-hat; call this Model 2:

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 \hat{Y}_i^2 + \beta_3 \hat{Y}_i^3 + \cdots + \beta_M \hat{Y}_i^M + \varepsilon_i$$

4.) Compare the results of the two regressions using an F-test, where

$$F = \frac{(RSS_1 - RSS_2)/M}{RSS_2/(n-k-M-1)} \sim F_{M,\, n-k-M-1}$$

5.) If the test statistic is larger than your critical value, then reject the null hypothesis that the regression model is correctly specified.

What exactly is the intuition here? If the model is correctly specified, then all those functions of Y-hat (and thus of the independent variables) should not help in explaining Y.

The test statistic is large if RSS1 >> RSS2; that is, the part of the variation in Y that we cannot explain is much larger in Model 1 than in Model 2.
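The RESET steps can be sketched in plain Python. This is a minimal illustration, not a library implementation. The helper names `solve`, `ols`, `reset_f`, and `make_data` are hypothetical, and the data are simulated. In this sketch, m counts the added power terms, so m = 2 adds Ŷ² and Ŷ³; that count matches the M numerator degrees of freedom in the F-test. The fitted values are standardized before taking powers. Because Ŷ is itself a linear combination of the constant and the X's, this leaves RSS2, and hence F, unchanged while keeping the arithmetic better conditioned. The critical value still has to be looked up in an F table.

```python
import random


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def ols(columns, y):
    """OLS with an intercept via the normal equations; returns (fitted values, RSS)."""
    design = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(design[0])
    xtx = [[sum(row[j] * row[l] for row in design) for l in range(p)] for j in range(p)]
    xty = [sum(row[j] * yi for row, yi in zip(design, y)) for j in range(p)]
    coefs = solve(xtx, xty)
    fitted = [sum(c * v for c, v in zip(coefs, row)) for row in design]
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    return fitted, rss


def reset_f(columns, y, m=2):
    """RESET F statistic: add m powers of Y-hat (squared, cubed, ...) to Model 1."""
    n, k = len(y), len(columns)
    fitted, rss1 = ols(columns, y)                      # Model 1
    mean = sum(fitted) / n
    sd = (sum((f - mean) ** 2 for f in fitted) / n) ** 0.5
    z = [(f - mean) / sd for f in fitted]               # standardized Y-hat
    powers = [[zi ** p for zi in z] for p in range(2, m + 2)]
    _, rss2 = ols(columns + powers, y)                  # Model 2
    df2 = n - k - m - 1
    f_stat = ((rss1 - rss2) / m) / (rss2 / df2)
    return f_stat, (m, df2)


def make_data(n, quadratic, rng):
    """Hypothetical data: Y is linear in X, or has an extra 1.5 * X^2 term."""
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    y = [1.0 + 2.0 * xi + (1.5 * xi ** 2 if quadratic else 0.0) + rng.gauss(0.0, 1.0)
         for xi in x]
    return x, y


if __name__ == "__main__":
    rng = random.Random(14)
    for label, quadratic in [("linear DGP", False), ("quadratic DGP", True)]:
        x, y = make_data(200, quadratic, rng)
        f_stat, df = reset_f([x], y, m=2)  # Model 1 regresses Y on X only
        print(f"{label:14s} F = {f_stat:8.2f}  with df = {df}")
```

With data from the linear DGP, Model 1 is correctly specified, and the statistic should usually fall below the 5% critical value. With data from the quadratic DGP, RSS1 should be much larger than RSS2, so the F statistic comes out large.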
A large test statistic means that all those (non-linear) functions of Y-hat (and thus of the independent variables) help explain a lot more of the variation in Y. Problem: if you reject the null of correct specification …

Conclusion

One of the econometrician's primary concerns is ensuring that Assumption 1 of the CLRM is satisfied. Violations occur when we:
1.) exclude an important independent variable;
2.) include an irrelevant independent variable