Lecture 11: The classical linear regression model BUEC 333 Professor David Jacks

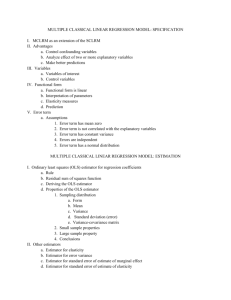

Review of regression analysis

The first half of this course dealt with the intuition and statistical foundations underlying regression analysis. This week, we will explore the key assumptions embedded in the classical (normal) linear regression model, or C(N)LRM. The second half of this course will deal with the …

But first, we need to review what we have seen before with respect to what constitutes regression. At the heart of it, there is the regression function, a conditional expectation expressed as E(Y|X). Generally, our goal is to make predictions regarding the value of Y or to statistically summarize the economic relationship between Y and X.

The simplest example of a regression model is the case where the regression function f is a line:

E[Y|X] = β0 + β1X

This says that the conditional mean of Y is a linear function of the independent variable X. We call β0 and β1 the regression coefficients.

The regression function is never an exact representation of the relationship between dependent and independent variables. There is always some variation in Y that cannot be explained by the model; we think of this as error. To soak up all that variation in Y that cannot be explained by X, we include a stochastic error term, ε.

Our simple linear regression model now has a deterministic (non-random) component and a stochastic (random) component:

Y = β0 + β1X + ε

As β0 + β1X is the conditional mean of Y given X, Y = E(Y|X) + ε implies ε = Y − E(Y|X).
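The decomposition Y = E(Y|X) + ε can be checked on simulated data. The sketch below is only an illustration: the coefficient values, the four X values, and the normal error distribution are assumptions made for the example, not part of the lecture.

```python
import random
import statistics

# Hypothetical "true" coefficients, chosen only for illustration.
BETA0, BETA1 = 2.0, 0.5

rng = random.Random(0)          # fixed seed so the run is reproducible
x_values = [1, 2, 3, 4]         # a few discrete X values (assumed)

data = []
for _ in range(20000):
    x = rng.choice(x_values)
    eps = rng.gauss(0.0, 1.0)   # stochastic component with mean zero
    y = BETA0 + BETA1 * x + eps # deterministic part plus error
    data.append((x, y))

# Sample analog of the conditional mean E(Y|X = x) at each x.
cond_mean = {
    x: statistics.fmean(y for xi, y in data if xi == x) for x in x_values
}

# The error is what is left after subtracting E(Y|X) from Y.
errors = [y - (BETA0 + BETA1 * x) for x, y in data]
mean_error = statistics.fmean(errors)

for x in x_values:
    print(x, round(cond_mean[x], 2), BETA0 + BETA1 * x)
print("mean error:", round(mean_error, 3))
```

In a run like this, the average of Y at each X should sit close to the line β0 + β1X, and the average error should be close to zero.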
This model comprises that which is known, unknown, and assumed.

The goal is to end up with a set of estimated coefficients (sample statistics that we compute from our data):

$$\hat{\beta}_0, \hat{\beta}_1, \hat{\beta}_2, \ldots, \hat{\beta}_k$$

One sample would produce one set of estimated coefficients, another sample a different set. Thus, they are random variables (RVs) with their own sampling distribution (a well-defined mean and variance, which tell us how likely it is that we end up with a certain estimate). Example: class average on midterm vs. samples.

From population regression to sample analog:

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \cdots + \beta_k X_{ki} + \varepsilon_i$$

$$Y_i = \hat{\beta}_0 + \hat{\beta}_1 X_{1i} + \hat{\beta}_2 X_{2i} + \cdots + \hat{\beta}_k X_{ki} + e_i$$

$$Y_i = \hat{Y}_i + e_i$$

Ordinary least squares (OLS) is a means of minimizing our “prediction mistakes”: OLS minimizes the sum of squared residuals,

$$\sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} (Y_i - \hat{Y}_i)^2$$

How to determine this minimum? In the simple regression case, differentiate with respect to $\hat{\beta}_0$ and $\hat{\beta}_1$, set to zero, and solve. The solutions to this minimization problem are:

$$\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}$$

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (X_i - \bar{X})^2} = \frac{\frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2}$$

OLS as the best estimator

Our question for the week is: for which model does OLS give us the best estimator? But what do we mean by best? That is, how do we evaluate an estimator? First, take a look at the sampling distribution, and ask whether the estimator is:

1.) centered around the “true value”?

An estimator is unbiased if it is centered at the true value, or $E(\hat{\beta}_k) = \beta_k$. This is our minimal requirement for an estimator. Among unbiased estimators, the one with the smallest variance is called efficient (or best). Efficiency means using our data/information optimally.

The classical assumptions

If the assumptions of the classical model hold, then the OLS estimator is BLUE. BLUE = Best Linear Unbiased Estimator. But what is the purpose of demonstrating this?

1.) Provides the statistical argument for OLS.

The six classical assumptions:

1.) The regression model is correctly specified: a.)
has the correct functional form and b.) has an additive error term.
2.) The error term has zero population mean, or E(εi) = 0.
3.) All independent variables are uncorrelated with the error term, or Cov(Xi,εi) = 0, for each independent variable Xi.
4.) No independent variable is a perfect linear function of any other independent variable.
5.) Errors are uncorrelated across observations, or Cov(εi,εj) = 0 for any two observations i ≠ j.
6.) The error term has a constant variance, or Var(εi) = σ² for every i.

Assumption 1: specification

Remember that we wrote the regression model as Yi = E(Yi|Xi) + εi.

Assumption 1 says: a.) The regression has the correct functional form for E[Yi|Xi]. This entails that: a.) E[Yi|Xi] is linear in parameters, b.) we have included all the correct X’s, and …

Assumption 1 also says: b.) The regression model has an additive error term, as in Yi = E(Yi|Xi) + εi. This is not very restrictive: we can always write the model this way if we define εi = Yi − E[Yi|Xi].

But if we fail on any one of these counts (so that Assumption 1 is violated), OLS will give us the wrong answer.

Assumption 2: Zero population mean

Also known as having a zero mean error term. This is a pretty weak assumption, as all it says is that there is no expected error in the regression function. Of course, if we expected a particular error value, then (part of) the error term would be predictable, and we could just add that to the regression model.

Example: suppose that E(εi) = 5. In this case,

E(Yi|Xi) = E(β0 + β1Xi + εi) = β0 + β1Xi + E(εi)
E(Yi|Xi) = β0 + β1Xi + 5

We could just define a new intercept β0* = β0 + 5 and a new error term εi* = εi − 5. Then we have Yi = β0* + β1Xi + εi*.

If Assumption 2 is violated, OLS will be …

Assumption 3: No correlation with the error term

We need Assumption 3 to be satisfied for all independent variables Xi; that is, there is zero covariance between the Xi and the error term. When Assumption 3 is satisfied, we say Xi is exogenous. When Assumption 3 is violated, we say Xi is endogenous. Why are applied economists so worried about endogeneity?

Remember we cannot observe εi; it is one of the unknowns in our population regression function. Furthermore, the error term should be something that happens separately from everything else in the model. It is, in a sense, a shock. If Cov(Xi,εi) ≠ 0 and Xi is in our model, then OLS attributes variation in Yi to Xi that is due to εi.

Our estimator should not “explain” this variation in Y using X because it is due to error, not X. But if Cov(Xi,εi) ≠ 0, then when ε moves around, so does X. Thus, we see X and Y moving together, and the least squares estimator therefore “explains” some of this variation in Y using X.

Consequently, OLS gives us the wrong answer; we get a biased estimate of the coefficient on X because it measures the combined effect of X and ε on Y. This assumption is violated most frequently when a researcher omits an important independent variable from an equation. We know from Assumption 2 that the influence of omitted variables will show up in the error term; …

But if not, then we have omitted variable bias. Example: suppose income depends on standard variables like age, education, and IQ, but we do not have the data on IQ. We might also think that education and IQ are correlated: smarter people tend to get more education(?).

How do we ever know if Assumption 3 is satisfied? There are some statistical tests available to tell us whether our independent variables are exogenous, but they are not very convincing. Sometimes we can rely on economic theory.
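The omitted-variable example above (income, education, IQ) can be illustrated with a small simulation. This is a sketch under stated assumptions: the coefficients `B_EDUC` and `B_IQ`, the 0.5 link between IQ and education, and the normal errors are all hypothetical, and age is left out to keep the example short.

```python
import random

def ols_slope(x, y):
    """Slope from a simple regression of y on x (with an intercept)."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    sxx = sum((xi - xbar) ** 2 for xi in x)
    return sxy / sxx

rng = random.Random(1)
n = 20000
B_EDUC, B_IQ = 1.0, 2.0   # hypothetical true effects on income

# Case 1: education is correlated with the omitted variable (IQ).
iq = [rng.gauss(0, 1) for _ in range(n)]
educ = [0.5 * q + rng.gauss(0, 1) for q in iq]
income = [B_EDUC * e + B_IQ * q + rng.gauss(0, 1) for e, q in zip(educ, iq)]
short_slope = ols_slope(educ, income)        # IQ sits in the error term

# Case 2: same model, but education is unrelated to IQ.
iq2 = [rng.gauss(0, 1) for _ in range(n)]
educ2 = [rng.gauss(0, 1) for _ in range(n)]
income2 = [B_EDUC * e + B_IQ * q + rng.gauss(0, 1) for e, q in zip(educ2, iq2)]
indep_slope = ols_slope(educ2, income2)

print("IQ omitted, correlated with education:", round(short_slope, 2))
print("IQ omitted, unrelated to education:   ", round(indep_slope, 2))
```

In the first case the error term contains IQ, which moves with education, so the estimated coefficient on education drifts away from `B_EDUC`; in the second case the omission costs precision but the estimate stays centered near `B_EDUC`.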
More often than not, we have to rely on …

Unbiasedness

If Assumptions 1 through 3 are satisfied, then the OLS estimator is unbiased. Suppose we have the simple linear regression

$$Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$$

$$\hat{\beta}_1 = \frac{\sum_i (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_i (X_i - \bar{X})^2} = \frac{\sum_i (X_i - \bar{X}) Y_i}{\sum_i (X_i - \bar{X})^2} = \frac{\sum_i (X_i - \bar{X})(\beta_0 + \beta_1 X_i + \varepsilon_i)}{\sum_i (X_i - \bar{X})^2}$$

$$\hat{\beta}_1 = \frac{\beta_0 \sum_i (X_i - \bar{X}) + \beta_1 \sum_i (X_i - \bar{X}) X_i + \sum_i (X_i - \bar{X}) \varepsilon_i}{\sum_i (X_i - \bar{X})^2}$$

Since $\sum_i (X_i - \bar{X}) = 0$ and $\sum_i (X_i - \bar{X}) X_i = \sum_i (X_i - \bar{X})^2$,

$$\hat{\beta}_1 = \beta_1 + \frac{\sum_i (X_i - \bar{X}) \varepsilon_i}{\sum_i (X_i - \bar{X})^2}$$

Under Assumptions 2 and 3, the last term has zero expectation, so

$$E(\hat{\beta}_1) = \beta_1$$

Assumption 4: No perfect collinearity

This is more of a technical assumption: perfect collinearity makes OLS coefficients incalculable. With perfect (multi-)collinearity, one (or more) independent variables is a perfect linear function of other variables. Suppose in our hockey data, we have POINTS, GOALS, and ASSISTS as independent variables in a regression model where …

Perfect collinearity is a problem because for OLS there is no unique variation in X to explain Y. If we regress SALARY on POINTS, GOALS, and ASSISTS, then whenever we see SALARY vary with GOALS, it also varies with POINTS by exactly the same amount. Thus, we do not know if the corresponding variation in SALARY should be attributed to GOALS …

Perfect collinearity between two independent variables implies that:
1.) they are really the same variable,
2.) one is a multiple of the other, and/or
3.) a constant has been added to a variable.

In any case, they are providing the same information about population parameter values. The solution is simple …

Assumption 5: No serial correlation

Observations of the error terms should not be correlated with each other, as they should be independent draws, or Cov(εi,εj) = 0. If not, we say the errors are serially correlated. If they are serially correlated, this tells us that if ε1 > 0, then ε2 is more likely to be positive also.

Suppose there is a big negative shock (ε < 0) to GDP this year (e.g., oil prices rise or there is another financial crisis).
This likely triggers a recession, and we can expect to see another negative shock in the path of GDP. In the case of serial correlation, OLS is still unbiased, but there are more efficient estimators.

Assumption 6: No heteroskedasticity

Error terms should possess a constant variance, as they should be independent, identical draws. If true, we say the errors are homoskedastic. If false, we say the errors are heteroskedastic. (Hetero-skedasis = different-dispersion.) In general, heteroskedasticity arises when Var(ε) depends …

Suppose we regress income (Y) on education (X). Although people with more education have higher income on average, they also (as a group) have more variability in their earnings. That is, some people with PhDs get good jobs and earn a great deal, but some are “over-qualified” and have a hard time finding a job.

In contrast, almost everyone who drops out of high school earns very little: with low levels of education, both the average and the variance of earnings are low. The spread of the residuals from our regression of earnings on education will likely increase as education increases. As before, OLS is still unbiased, but there are more efficient estimators.

The Gauss-Markov Theorem (GMT)

When Assumptions 1 through 6 are satisfied, the least squares estimator, $\hat{\beta}_j$, has the smallest variance of all linear unbiased estimators of βj. This is an important theorem: it allows us to say that least squares is BLUE (remember, Best Linear Unbiased Estimator). What do we mean by a linear estimator?
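One concrete reading of "linear", sketched here for the single-regressor case: an estimator is linear if it can be written as a weighted sum of the Yi, with weights that depend only on the X's. The check below uses simulated data; the sample size, the true coefficients, and the names `w` and `v` for the slope and intercept weights are assumptions made for the illustration.

```python
import random

rng = random.Random(2)
n = 50
x = [rng.uniform(0, 10) for _ in range(n)]
y = [1.0 + 2.0 * xi + rng.gauss(0, 1) for xi in x]   # hypothetical model

xbar, ybar = sum(x) / n, sum(y) / n
sxx = sum((xi - xbar) ** 2 for xi in x)

# Usual OLS formulas for the simple regression.
slope_formula = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
intercept_formula = ybar - slope_formula * xbar

# The same estimates written as weighted sums of the Y's.
# The weights are built from the X's alone; Y never enters them.
w = [(xi - xbar) / sxx for xi in x]          # slope weights
v = [1.0 / n - xbar * wi for wi in w]        # intercept weights
slope_weighted = sum(wi * yi for wi, yi in zip(w, y))
intercept_weighted = sum(vi * yi for vi, yi in zip(v, y))

print(slope_formula, slope_weighted)
print(intercept_formula, intercept_weighted)
```

The two routes should agree up to floating-point rounding. Note also that the slope weights sum to zero and the intercept weights sum to one.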
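From Lecture 8, recall that the OLS estimator is a weighted average of the Y’s, which is what makes it a linear estimator in the sense of the Gauss-Markov Theorem (GMT):

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (X_i - \bar{X})^2} = \sum_{i=1}^{n} w_i Y_i, \qquad w_i = \frac{X_i - \bar{X}}{\sum_{j=1}^{n} (X_j - \bar{X})^2}$$

$$\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X} = \sum_{i=1}^{n} \left( \frac{1}{n} - \bar{X} \, \frac{X_i - \bar{X}}{\sum_{j=1}^{n} (X_j - \bar{X})^2} \right) Y_i$$

The w’s just place more emphasis on observations of Y whose $X_i$ lies farther from $\bar{X}$.

Conclusion

The bottom line: we have established the conditions under which OLS is BLUE. Assumptions 1 through 3: OLS is unbiased. Given unbiasedness, what can we say about the sampling variance of estimates using OLS? That is where Assumptions 4 through 6 come in: OLS has the smallest sampling variance possible among linear unbiased estimators and, consequently, the highest reliability possible.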
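The BLUE claim can be illustrated by repeated sampling. The sketch below is not a proof: it assumes fixed X values, hypothetical coefficients, and independent normal errors with constant variance (so the classical assumptions hold by construction). It compares OLS with one other linear unbiased estimator, a hypothetical "endpoint" estimator that uses only the first and last observations.

```python
import random
import statistics

BETA0, BETA1 = 1.0, 2.0                    # hypothetical true coefficients
X = [float(i) for i in range(1, 11)]       # X held fixed across samples
XBAR = sum(X) / len(X)
SXX = sum((xi - XBAR) ** 2 for xi in X)

def ols_slope(y):
    """OLS slope for the fixed X values above."""
    ybar = sum(y) / len(y)
    return sum((xi - XBAR) * (yi - ybar) for xi, yi in zip(X, y)) / SXX

def endpoint_slope(y):
    """Another linear unbiased estimator: slope through the two end points."""
    return (y[-1] - y[0]) / (X[-1] - X[0])

rng = random.Random(3)
ols_draws, end_draws = [], []
for _ in range(5000):
    # Each pass is a new sample: same X's, fresh errors.
    y = [BETA0 + BETA1 * xi + rng.gauss(0, 1) for xi in X]
    ols_draws.append(ols_slope(y))
    end_draws.append(endpoint_slope(y))

ols_mean, end_mean = statistics.fmean(ols_draws), statistics.fmean(end_draws)
ols_var, end_var = statistics.pvariance(ols_draws), statistics.pvariance(end_draws)

print("mean of estimates:", round(ols_mean, 3), round(end_mean, 3))
print("variance of estimates:", round(ols_var, 4), round(end_var, 4))
```

Both sampling distributions should be centered near the true slope (unbiasedness), while the OLS estimates should be noticeably less spread out (efficiency), which is the pattern the Gauss-Markov Theorem predicts.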