Lecture 2: Review of statistics – one random variable BUEC 333

advertisement

Lecture 2:

Review of statistics – one random variable

BUEC 333

Professor David Jacks

1

Many of the things we will be interested in this

class are random variables (RVs); that is,

variables whose outcome is subject to chance.

We do not know what value a RV will take until

we observe it.

Examples: outcome of coin toss;

value of the S&P/TSX in the future;

your starting salary after graduation.

Random variables

2

The actual value taken by a RV is an outcome.

We will use capital letters to denote a RV, and

lower case letters to denote particular outcomes.

Examples: Rolling two dice;

call the sum of the values rolled X;

a particular outcome might be x = 7.

Random variables

3

RVs are said to be discrete if they can only take

on a finite (that is, countable) set of values.

Examples:

1.) Outcome of a coin toss: {Heads, Tails}

2.) Outcome of rolling a die:

{1, 2, 3, 4, 5, 6}

3.) Handedness of next person you meet:

{Left, Right}

Discrete versus continuous RVs

4

RVs are said to be continuous if they can take on

a continuum (that is, uncountable infinite) set of

values.

Examples:

1.) Value of the S&P/TSX one year from

today: any positive number is

possible.

2.) Your starting salary after graduation:

any positive(?) number is possible.

Discrete versus continuous RVs

5

Associated with every possible outcome of a RV

is a probability which tells us how likely a

particular outcome is.

Pr(X = x) denotes the probability that the random

variable X takes the value x.

Pr(X = x) as the proportion of times that x occurs

in the ―long run‖ (in many repeated trials);

Probability

6

1.) Probabilities of individual outcomes lie

between 0 and 1.

a.) If Pr(X = x) = 0, then outcome X = x

never occurs.

b.) If Pr(X = x) = 1, then outcome X = x

always occurs.

2.) The sum of the probabilities of all possible

individual outcomes always equals 1.

Properties of probability

7

Every RV has a probability distribution.

A probability distribution describes the set of all

possible outcomes of a RV, and the probabilities

associated with each possible outcome.

This is summarized by a probability distribution

function (or pdf).

Probability distributions

8

Example 1: tossing a fair coin

Pr (X = Heads) = Pr (X = Tails) = 1/2

Example 2: Rolling a die

Pr (X = 1) = Pr (X = 2) = …= Pr (X = 6)= 1/6

Example 3: # of times a laptop crashes before MT

Pr (X = 0) = 0.80, Pr (X = 1) = 0.10

Pr (X = 2) = 0.06, Pr (X = 3) = 0.03

Probability distributions

9

An alternate way to describe a probability

distribution is the cumulative distribution

function (or cdf).

It gives the probability that a RV takes a value less

than or equal to a given value, Pr(X ≤ x).

Example: number of times a laptop crashes before

a midterm; the cdf measures the probability that a

Cumulative probability distribution

10

1.0

1.0

0.8

0.8

0.6

0.6

0.4

0.4

0.2

0.2

0.0

0.0

0

1

2

3

4

0

Cumulative probability distribution

1

2

3

4

11

Because continuous RVs can take an infinite

number of values, the pdf and cdf cannot

enumerate the probabilities of each of them.

Instead we describe the pdf and cdf using

functions.

Usual notation for the pdf is f(x).

Usual notation for the cdf is F(x) = Pr(X ≤ x).

The case of continuous RVs

12

Because pdfs and cdfs of continuous RVs can also

be complicated functions, we will just use pictures

to represent them.

We plot outcomes, x, on the horizontal axis, and

probabilities, f(x) or F(x), on the vertical axis.

The cdf is an increasing function that ranges from

zero to one.

Graphing pdfs and cdfs of continuous RVs

13

Remember: the area under the pdf gives the

probability that X lies in a particular interval.

Therefore, the total area under the pdf must be

equal to one; e.g., normally distributed test scores

1.00

0.20

cdf

0.80

pdf

0.15

0.60

0.10

0.40

0.05

0.20

0.00

0.00

0.0

25.0

50.0

75.0

100.0

0.0

25.0

50.0

Graphing pdfs and cdfs of continuous RVs

75.0

100.0

14

The pdf and cdf effectively tell us ―everything‖ we

might want to know about a RV.

But sometimes we only want to describe particular

features of a probability distribution.

One feature of interest in this course is the

expected value or mean of a RV.

Another useful measure of the dispersion

Describing RVs

15

Think of the expected value of a RV as its long run

average over many repeated trials.

More intuitively, can be thought of as the

―middle‖ of a probability distribution or a ―good

guess‖ of the value of a RV.

More precisely, it is a probability-weighted

average of all possible outcomes of X.

Expected values

16

Example: laptop crashes before a midterm

f(0) = 0.80, f(1) = 0.10, f(2) = 0.06 ,

f(3) = 0.03, f(4) = 0.01

E(X) = 0 * (0.80) + 1 * (0.10) + 2 * (0.06)

+ 3 * (0.03) + 4 * (0.01)

Expected values

17

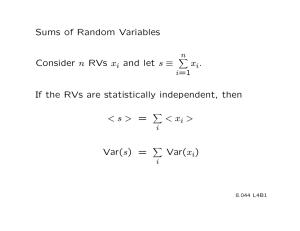

The general case for a discrete RV when X can

take k values x1, x2,…, xk with associated

probabilities p1, p2,…, pk:

k

E ( X ) pi xi

i 1

Reminder about sigma notation: sigma represents

Even more exciting facts about E(X)

18

We can think of E(X) as a mathematical operation

just like (+, -, *, or /).

Conveniently, it is also a linear operator which

means we can pass it through addition and

subtraction operators.

That is, if a and b are constants and X is a RV, then

E(a + bX) = a + E(bX) = a + bE(X)

Example where a = 5, b = 10, and

Even more exciting facts about E(X)

19

Variance measures dispersion—how ―spread out‖

a probability distribution is.

A large (small) variance means a RV is likely to

take a wide (narrow) range of values.

Formally, if X takes one of k possible values x1,

x2,…, xk with associated probabilities p1, p2,…, pk:

2

Var ( X ) E ( X x )

Variance

20

Because Var(X) is measured in the square of the

scale of X, we often prefer the standard deviation:

X Var ( X )

which is measured on the same scale as X.

Example: variance of laptop crashes

Var(X) = (0 – 0.35)2 * (0.80) + (1 – 0.35)2 * (0.10)

+ (2 – 0.35)2 * (0.06) + (3 – 0.35)2* (0.03)

Variance and standard deviation

21

In some sense, a RV’s probability distribution,

expected value, and variance are abstract

concepts.

More precisely, they are population parameters,

characteristics of the whole set of possible

observations of a RV.

An important aside and a preview of things to come

22

As econometricians, our goal is to estimate these

parameters (with varying degrees of precision).

We do that by computing statistics from a sample

of data drawn from the population.

The usefulness of econometrics comes from

learning about

An important aside and a preview of things to come

23

![Problem sheet 1 (a) E[aX + b] = aE[X] + b](http://s2.studylib.net/store/data/012919538_1-498bfd427f243c5abfa36cc64f89d9e7-300x300.png)