Given name:____________________ Family name:___________________


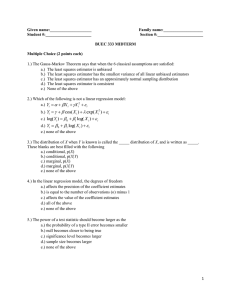

Student #:______________________ Section #:______________________

BUEC 333 MIDTERM

Multiple Choice (2 points each)

1) If the covariance between two random variables X and Y is zero, then:
a) X and Y are not necessarily independent
b) knowing the value of X provides no information about the value of Y
c) E(X) = E(Y) = 0
d) a and b are true
e) none of the above

2) A negative covariance between X and Y means that whenever we obtain a value of X that is greater than the mean of X:
a) we will have a greater-than-50% chance of obtaining a corresponding value of Y which is greater than the mean of Y
b) we will have a less-than-50% chance of obtaining a corresponding value of Y which is smaller than the mean of Y
c) we will obtain a corresponding value of Y which is greater than the mean of Y
d) we will obtain a corresponding value of Y which is smaller than the mean of Y
e) none of the above

3) In the regression specification $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$, which of the following is a justification for including $\varepsilon_i$?
a) it accounts for potential non-linearity in the functional form
b) it captures the influence of some of the omitted explanatory variables
c) it incorporates measurement error in the betas
d) it reflects deterministic variation in outcomes
e) all of the above

4) In the Capital Asset Pricing Model (CAPM):
a) β measures the sensitivity of the expected return of a portfolio to systematic risk
b) β measures the sensitivity of the expected return of a portfolio to specific risk
c) β is greater than one
d) α is less than zero
e) $R^2$ is meaningless

5) Which of the following is an assumption of the CLRM?
a) the model is correctly specified
b) the independent variables are exogenous
c) the errors have mean zero
d) the errors have constant variance
e) all of the above

6) Suppose that in the simple linear regression model $Y_i = \beta_0 + \beta_1 X_i + \beta_2 Z_i + \varepsilon_i$ on 100 observations, you calculate that $R^2 = 0.5$, the sample covariance of X and Y is 10, and the sample variance of X is 15. Then the least squares estimator of $\beta_1$ is:
a) not calculable using the information given
b) 1/3
c) 1/3
d) 2/3
e) none of the above

7) The law of large numbers says that:
a) the sample mean is a biased estimator of the population mean in small samples
b) the sampling distribution of the sample mean approaches a normal distribution as the sample size approaches infinity
c) the behaviour of large populations is well approximated by the average
d) the sample mean is a consistent estimator of the population mean in large samples
e) none of the above

8) Suppose you have the following information about the pdf of a random variable X, which takes one of 4 possible values:

| Value of X | pdf |
|---|---|
| 1 | 0.1 |
| 2 | 0.2 |
| 3 | 0.3 |
| 4 | |

Which of the following is/are true?
a) Pr(X = 4) = 0.4
b) E(X) = 2.5
c) Pr(X = 4) = 0.2
d) all of the above
e) none of the above

9) For a model to be correctly specified under the CLRM, it must:
a) be non-linear in the coefficients
b) include all irrelevant independent variables and their associated transformations
c) have a multiplicative error term
d) all of the above
e) none of the above

10) Which of the following is not a linear regression model?
a) $Y_i = \alpha + \beta X_i + \gamma X_i^2 + \varepsilon_i$
b) $Y_i = \beta_0 + \beta_1 X_i^{\beta_2} + \varepsilon_i$
c) $\log(Y_i) = \beta_0 + \beta_1 \log(X_i) + \varepsilon_i$
d) $Y_i = \beta_0 + \beta_1 \log(X_i) + \varepsilon_i$
e) none of the above

11) Suppose you have a random sample of 100 observations from a normal distribution with mean 10 and variance 5. The sample mean ($\bar{x}$) is 9 and the sample variance is 6.
The sampling distribution of $\bar{x}$ has:
a) mean 9 and variance 0.06
b) mean 9 and variance 0.05
c) mean 10 and variance 0.06
d) mean 10 and variance 0.05
e) none of the above

12) To be useful for hypothesis testing, a test statistic must:
a) be computable using population data
b) have a known sampling distribution when the alternative hypothesis is true
c) have a known sampling distribution when the null hypothesis is false
d) a and b only
e) none of the above

13) If two random variables X and Y are independent:
a) their joint distribution equals the product of their marginal distributions
b) the conditional distribution of X given Y equals the conditional distribution of X
c) their covariance is zero
d) a and c
e) a, b, and c

14) The OLS estimator of the sampling variance of the slope coefficient in the regression model with one independent variable:
a) will be smaller when there is less variation in $e_i$
b) will be smaller when there are fewer observations
c) will be smaller when there is less variation in X
d) will be smaller when there are more independent variables
e) none of the above

15) If q is an unbiased estimator of Q, then:
a) Q is the mean of the sampling distribution of q
b) q is the mean of the sampling distribution of Q
c) Var[q] = Var[Q] / n, where n is the sample size
d) q = Q
e) a and c

Short Answer #1 (10 points – show your work!)

Consider the simple univariate regression model, $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$. Prove that the sample regression line passes through the sample mean of both X and Y, that is, through the point $(\bar{X}, \bar{Y})$.

Page intentionally left blank. Use this space for rough work or the continuation of an answer.

Short Answer #2 (20 points – show your work!)

For a homework assignment on sampling, you are asked to program a computer to do the following:
i) Randomly draw 25 values from a standard normal distribution.
ii) Multiply each of these values by 5 and add 10.
iii) Take the average of these 25 values and call it A1.
iv) Repeat this procedure to obtain 500 such averages and call them A1 through A500.

a) What is your best guess as to the value of A1? Explain your answer.
b) What is the value of the variance associated with A1? Explain your answer.
c) If you were to compute the average of the 500 A values, what should it be approximately equal to?
d) If you were to compute the sampling variance of these 500 A values, what should it be approximately equal to?

Short Answer #3 (20 points – show your work!)

Suppose we have a random sample with 10 observations. These 10 observations ($X_1, X_2, \ldots, X_{10}$) are drawn from a population distribution with mean $\mu$ and variance $\sigma^2$. We know that

$$\text{x-bar} = \bar{X} = \frac{X_1 + X_2 + \cdots + X_{10}}{10} = \sum_{i=1}^{10} \frac{X_i}{10}, \qquad E(\bar{X}) = \mu, \qquad \mathrm{Var}(\bar{X}) = \frac{\sigma^2}{10}$$

$$\text{x-tilde} = \tilde{X} = \frac{X_1 + X_3 + X_5 + X_7 + X_9}{4}$$

a) Is x-bar a random variable? Explain your answer.
b) Is x-bar an unbiased estimator of the population mean? Explain your answer.
c) Is x-tilde an unbiased estimator of the population mean? Explain your answer.
d) Which one of the two estimators is more efficient? Explain your answer.

Short Answer #4 (20 points – show your work!)

Consider the following estimated regression equation that describes the relationship between a student’s weight and their height:

$$\widehat{\text{Weight}} = 100 + 6.0 \times \text{Height}$$

a) The sum of squared residuals is equal to 15,000. There are 1,002 observations in the data set. The covariance between height and weight is equal to 36. The variance of weight is equal to 60. What is your best guess about the value of the variance of the error term in the population regression model?
b) Using the same information as in a), compute the value of $R^2$ and briefly explain what the calculated value of $R^2$ means.
c) Suppose an additional variable is included: the last digit of a student’s ID number. We think this second variable should not determine variation in a student’s weight. The new estimated regression equation is

$$\widehat{\text{Weight}} = 100 + 6.04 \times \text{Height} + 0.02 \times \text{ID}$$

How is it possible that the $R^2$ of the second regression is higher than that of the first?
d) If a student’s ID number is indeed not related to a student’s weight, should the estimated coefficient be equal to zero? That is, do our results suggest we have done something wrong with this regression?

Useful Formulas:

$$E(X) = \sum_{i=1}^{k} p_i x_i \qquad \mathrm{Var}(X) = E\left[(X - \mu_X)^2\right] = \sum_{i=1}^{k} (x_i - \mu_X)^2 p_i$$

$$\Pr(X = x) = \sum_{i=1}^{k} \Pr(X = x, Y = y_i) \qquad \Pr(Y = y \mid X = x) = \frac{\Pr(X = x, Y = y)}{\Pr(X = x)}$$

$$E(Y) = \sum_{i=1}^{m} E(Y \mid X = x_i)\,\Pr(X = x_i) \qquad E(Y \mid X = x) = \sum_{i=1}^{k} y_i \Pr(Y = y_i \mid X = x)$$

$$\mathrm{Var}(Y \mid X = x) = \sum_{i=1}^{k} \left[y_i - E(Y \mid X = x)\right]^2 \Pr(Y = y_i \mid X = x)$$

$$E(a + bX + cY) = a + bE(X) + cE(Y) \qquad \mathrm{Var}(a + bY) = b^2\,\mathrm{Var}(Y)$$

$$\mathrm{Cov}(X, Y) = \sum_{i=1}^{k} \sum_{j=1}^{m} (x_j - \mu_X)(y_i - \mu_Y)\Pr(X = x_j, Y = y_i)$$

$$\mathrm{Var}(aX + bY) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y) + 2ab\,\mathrm{Cov}(X, Y) \qquad \mathrm{Corr}(X, Y) = \rho_{XY} = \frac{\mathrm{Cov}(X, Y)}{\sqrt{\mathrm{Var}(X)\,\mathrm{Var}(Y)}}$$

$$\mathrm{Cov}(a + bX + cV, Y) = b\,\mathrm{Cov}(X, Y) + c\,\mathrm{Cov}(V, Y)$$

$$E(Y^2) = \mathrm{Var}(Y) + \left[E(Y)\right]^2 \qquad E(XY) = \mathrm{Cov}(X, Y) + E(X)\,E(Y)$$

$$Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \qquad t = \frac{\bar{X} - \mu}{s/\sqrt{n}} \qquad \bar{X} \sim N\!\left(\mu, \frac{\sigma^2}{n}\right)$$

$$\bar{X} = \frac{1}{n}\sum_{i=1}^{n} x_i \qquad s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2 \qquad s_{XY} = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y}) \qquad r_{XY} = \frac{s_{XY}}{s_X s_Y}$$

For the linear regression model $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$:

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (X_i - \bar{X})^2} \qquad \hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}$$

$$\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_{1i} + \hat{\beta}_2 X_{2i} + \cdots + \hat{\beta}_k X_{ki}$$

$$R^2 = \frac{ESS}{TSS} = \frac{TSS - RSS}{TSS} = 1 - \frac{RSS}{TSS} = 1 - \frac{\sum_i e_i^2}{\sum_i (Y_i - \bar{Y})^2} \qquad \bar{R}^2 = 1 - \frac{\sum_i e_i^2 / (n - k - 1)}{\sum_i (Y_i - \bar{Y})^2 / (n - 1)}$$

$$s^2 = \frac{\sum_i e_i^2}{n - k - 1}, \text{ where } E\left[s^2\right] = \sigma^2 \qquad \widehat{\mathrm{Var}}\left[\hat{\beta}_1\right] = \frac{\sum_i e_i^2 / (n - k - 1)}{\sum_i (X_i - \bar{X})^2}$$

$$Z = \frac{\hat{\beta}_j - \beta_H}{\sqrt{\mathrm{Var}[\hat{\beta}_j]}} \sim N(0, 1) \qquad t = \frac{\hat{\beta}_1 - \beta_H}{\mathrm{s.e.}(\hat{\beta}_1)} \sim t_{n-k-1}$$

$$\Pr\left[\hat{\beta}_j - t^*_{\alpha/2} \times \mathrm{s.e.}(\hat{\beta}_j) \le \beta_j \le \hat{\beta}_j + t^*_{\alpha/2} \times \mathrm{s.e.}(\hat{\beta}_j)\right] = 1 - \alpha$$

$$d = \frac{\sum_{t=2}^{T} (e_t - e_{t-1})^2}{\sum_{t=1}^{T} e_t^2} \approx 2(1 - \rho) \qquad F = \frac{ESS / k}{RSS / (n - k - 1)} = \frac{ESS\,(n - k - 1)}{RSS \cdot k}$$
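The simple-regression formulas on this sheet can be checked numerically. The Python sketch below is only an illustration. It makes several assumptions: a small made-up data set (the `x` and `y` values are hypothetical, not from the exam), a model with one regressor (k = 1), and the hypothetical helper names `ols_simple` and `fit_summary`. It applies the sheet's expressions for $\hat{\beta}_1$, $\hat{\beta}_0$, $R^2$, $\bar{R}^2$, $s^2$, $\widehat{\mathrm{Var}}[\hat{\beta}_1]$ and F directly. It also evaluates the fitted line at $\bar{X}$, which illustrates (but does not prove) the property asked about in Short Answer #1.

```python
from statistics import mean


def ols_simple(x, y):
    """Return (b0, b1) for Y_i = b0 + b1*X_i + e_i using the formula-sheet expressions."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need paired data with at least 3 observations")
    x_bar, y_bar = mean(x), mean(y)
    # Numerator: sum of cross-deviations; denominator: sum of squared X deviations.
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def fit_summary(x, y):
    """Goodness-of-fit quantities from the formula sheet, assuming k = 1 regressor."""
    n, k = len(x), 1
    b0, b1 = ols_simple(x, y)
    x_bar, y_bar = mean(x), mean(y)
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    rss = sum(e ** 2 for e in residuals)          # sum of e_i^2
    tss = sum((yi - y_bar) ** 2 for yi in y)      # sum of (Y_i - Ybar)^2
    ess = tss - rss                               # valid for OLS with an intercept
    s2 = rss / (n - k - 1)                        # estimator of sigma^2 with E[s^2] = sigma^2
    return {
        "b0": b0,
        "b1": b1,
        "r2": 1 - rss / tss,
        "r2_adj": 1 - (rss / (n - k - 1)) / (tss / (n - 1)),
        "s2": s2,
        "var_b1": s2 / sum((xi - x_bar) ** 2 for xi in x),
        "F": (ess / k) / (rss / (n - k - 1)),
        "line_at_x_bar": b0 + b1 * x_bar,         # fitted value at the mean of X
        "y_bar": y_bar,
    }


# Hypothetical illustrative data (not from the exam).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
summary = fit_summary(x, y)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

With a single regressor, the printed F statistic equals the square of the t statistic for testing $\beta_1 = 0$. Extending the sketch to several regressors would need matrix algebra, which it does not attempt.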
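The sampling procedure described in Short Answer #2 can be sketched in Python as follows. This is an illustration under stated assumptions, not a model answer. It uses the standard library's `random.Random.gauss` for the standard normal draws and a hypothetical seed of 333 for reproducibility. The helper names `draw_average` and `simulate` are also hypothetical. You can compare the printed mean and sample variance of A1 through A500 with what the formula-sheet result $\bar{X} \sim N(\mu, \sigma^2/n)$ predicts for the transformed draws.

```python
import random
from statistics import mean, variance


def draw_average(rng, n=25, scale=5.0, shift=10.0):
    """Steps i-iii: draw n standard normal values, map each z to scale*z + shift, and average."""
    return mean(scale * rng.gauss(0.0, 1.0) + shift for _ in range(n))


def simulate(reps=500, seed=333):
    """Step iv: repeat the procedure reps times and return the list A1 ... A_reps."""
    rng = random.Random(seed)  # hypothetical seed, fixed only so results are reproducible
    return [draw_average(rng) for _ in range(reps)]


averages = simulate()
print(f"A1 = {averages[0]:.3f}")
print(f"average of the {len(averages)} A values: {mean(averages):.3f}")
print(f"sample variance of the A values: {variance(averages):.3f}")
```

Changing `seed` or `reps` changes the printed values slightly. With more repetitions, the average and variance of the A values settle closer to their theoretical counterparts.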