Modeling Changing Perspectives — Reconceptualizing Sensorimotor Experiences: Papers from the 2014 AAAI Fall Symposium

From Visuo-Motor to Language

Deepali Semwal, Sunakshi Gupta, Amitabha Mukerjee

Department of Computer Science and Engineering

Indian Institute of Technology, Kanpur

Abstract

We propose a learning agent that first learns concepts in

an integrated, cross-modal manner, and then uses these

as the semantics model to map language. We consider

an abstract model for the action of throwing, modeling the entire trajectory. From a large set of throws, we

take the trajectory images and the throwing parameters. These are mapped jointly onto a low-dimensional

non-linear manifold. Such models improve with practice, and can be used as the starting point for real-life

tasks such as aiming darts or recognizing throws by others.

How can such models be used in learning language?

We consider a set of videos involving throwing and

rolling actions. These actions are analyzed into a set of

contrastive semantic classes based on agent, action, and

the thrown object (trajector). We obtain crowdsourced

commentaries for these videos (raw text) from a number of adults. The learner attempts to associate labels

using contrastive probabilities for the semantic class.

Only a handful of high-confidence words are found, but

the agent starts off with this partial knowledge. These

are used to learn incrementally larger syntactic patterns, initially for the trajector, and eventually for full

agent-trajector-action sentences. We demonstrate how

this may work for two completely different languages

- English and Hindi, and also show how rudiments of

agreement, synonymy and polysemy are detected.

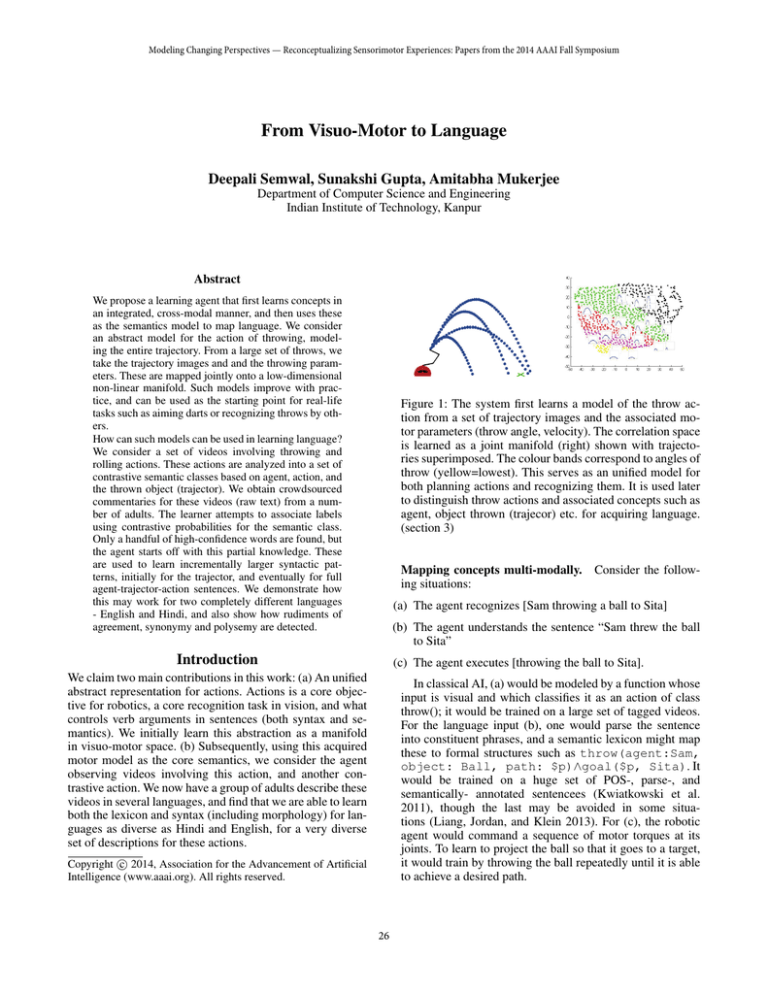

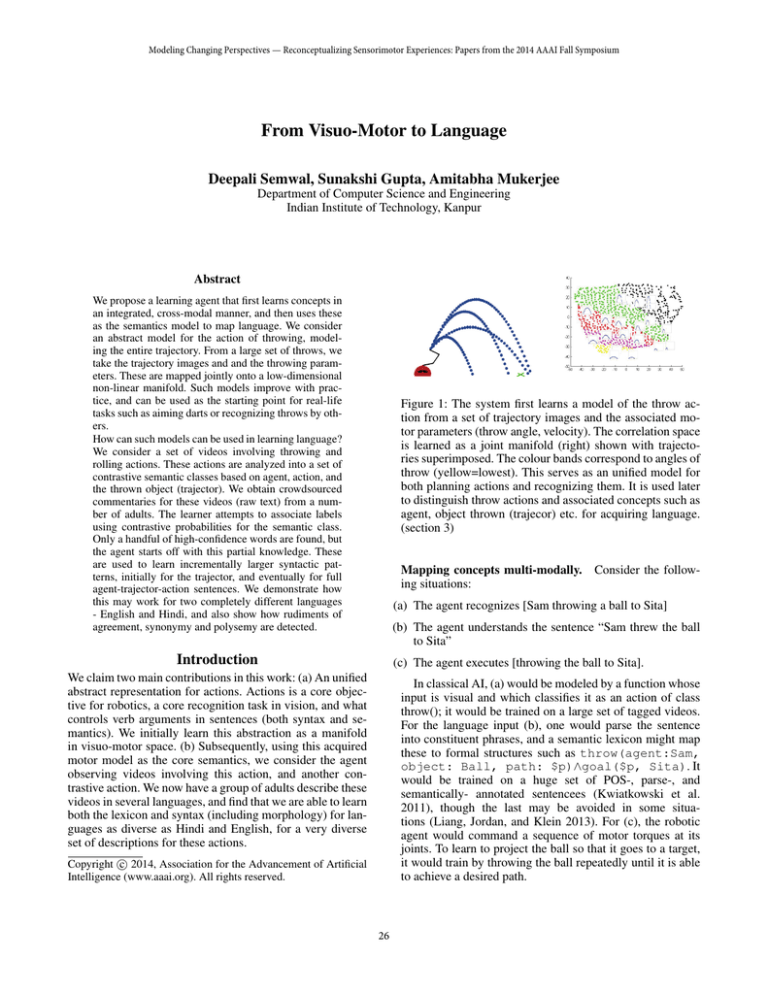

Figure 1: The system first learns a model of the throw action from a set of trajectory images and the associated motor parameters (throw angle, velocity). The correlation space

is learned as a joint manifold (right) shown with trajectories superimposed. The colour bands correspond to angles of

throw (yellow=lowest). This serves as a unified model for

both planning actions and recognizing them. It is used later

to distinguish throw actions and associated concepts such as

agent, object thrown (trajector) etc. for acquiring language.

(section 3)

Mapping concepts multi-modally. Consider the following situations:

(a) The agent recognizes [Sam throwing a ball to Sita]

(b) The agent understands the sentence “Sam threw the ball

to Sita”

(c) The agent executes [throwing the ball to Sita].

Introduction

We claim two main contributions in this work: (a) A unified

abstract representation for actions. Action is a core objective for robotics, a core recognition task in vision, and what

controls verb arguments in sentences (both syntax and semantics). We initially learn this abstraction as a manifold

in visuo-motor space. (b) Subsequently, using this acquired

motor model as the core semantics, we consider the agent

observing videos involving this action, and another contrastive action. We now have a group of adults describe these

videos in several languages, and find that we are able to learn

both the lexicon and syntax (including morphology) for languages as diverse as Hindi and English, for a very diverse

set of descriptions for these actions.

In classical AI, (a) would be modeled by a function whose

input is visual and which classifies it as an action of class

throw(); it would be trained on a large set of tagged videos.

For the language input (b), one would parse the sentence

into constituent phrases, and a semantic lexicon might map

these to formal structures such as throw(agent:Sam,

object: Ball, path: $p)∧goal($p, Sita). It

would be trained on a huge set of POS-, parse-, and

semantically-annotated sentences (Kwiatkowski et al.

2011), though the last may be avoided in some situations (Liang, Jordan, and Klein 2013). For (c), the robotic

agent would command a sequence of motor torques at its

joints. To learn to project the ball so that it goes to a target,

it would train by throwing the ball repeatedly until it is able

to achieve a desired path.

Copyright © 2014, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.


This process makes it hard to correlate knowledge between these modalities - e.g. looking at another person

throwing short, it is hard to tell her to throw it higher, say.

It is also inefficient in terms of requiring thrice the circuitry compared to a unified system. Indeed, primate brains

seem to be operating in a more integrated manner. Motor

behaviour invokes visual simulation to enable rapid detection of anomalies, while visual recognition invokes motor

responses to check if they match. Linguistic meaning activates this wide range of modalities (Binder and Desai 2011).

Such a cross-modal model also permits affordance and intentionality judgments, which is a core capability for learning language (Rizzolatti and Arbib 1998).

Figure 2: Variations in the manifold according to a) angle of

projection, and b) range. (low values in yellow). For constant

velocity, the range is high towards the left, corresponding to

the “bends” in the coloured bands. This is also the region

around the 45◦ angle of throw (see fig. 1b).
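The location of the maximum range near 45° can be checked from elementary kinematics. The short derivation below assumes a drag-free throw that is released and lands at the same height, with $g$ the gravitational acceleration; these idealizations are assumptions made here for illustration.

```latex
\[
x(t) = v\cos\theta\; t, \qquad y(t) = v\sin\theta\; t - \tfrac{1}{2} g t^{2}
\]
\[
y(T) = 0 \;\Rightarrow\; T = \frac{2 v \sin\theta}{g}, \qquad
R(\theta) = x(T) = \frac{v^{2}\sin 2\theta}{g}
\]
\[
\frac{dR}{d\theta} = \frac{2 v^{2}\cos 2\theta}{g} = 0
\;\Rightarrow\; \theta = 45^{\circ}
\]
```

For a fixed $v$, throws at $\theta$ and $90^{\circ} - \theta$ share the same range, which is consistent with bands of constant range bending around the 45° region.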

Unified action models as manifolds. The first part of this

work (section 2) is inspired by the observation that each time

the robot throws the ball (for motor learning), it also generates a visual trajectory. The motor parameters - torque history, time of release, etc. - are correlated with the visual feedback - trajectory, velocities, etc. In fact, both map to the same

low-dimensional non-linear manifold which can be discovered jointly. This is the core idea in the first part of this work.

We note that this approach is more abstract than models that actually attempt to learn throwing actions, e.g. (da

Silva, Konidaris, and Barto 2012; Neumann et al. 2014). Our

model may be thought of as a high-level summary of the

entire space of throws - given any desired trajectory, it can

come up with the motor parameters; given a motor parameter, it can “imagine” the throw. The model can be applied

to either visual recognition or to motor tasks. It can also be

used to assess other throws, to catch a ball, say, or to guess

the intention of a person throwing something.

ent objects (Steels and Loetzsch 2012). The semantic models learned here are completely unsupervised, whereas other

models with similar motivation often use hand-coded intermediate structures to model the semantics (Roy 2005).

Another distinguishing feature is that language learning is incremental and does not come to a closure at any point. At every

stage, the learner has only a “best guess” as to how a phrase or

sentence may be used. Thus, another name for this approach

may be dynamic NLP. Also, after an initial bootstrapping

phase driven by this multi-modal corpus, learning can continue to be informed by text alone, a process well-known

from the rapid vocabulary growth after the first phase of language acquisition in children (Bloom 2000).

Visuo-motor Pattern Discovery

Learning a few visuo-motor tasks is among our agent’s

very first achievements. Let us consider the act of throwing

a ball. Our learner knows the motor parameters of the throw

as it is being thrown - here we focus not on the sequence of

motor torques, but just the angle and velocity at the point of

release.

Each trajectory gives us an image (fig. 1). We are given a dataset

of images $X = \{x_1, \ldots, x_n\}$, drawn from a space $I$ of all possible trajectory images. Each image has 100×100 pixels, i.e.

$I \subset \mathbb{R}^{10^4}$. Though the set of possible images is enormous, if

we assign pixels randomly, the probability that the resulting

image will be a [throw] trajectory is practically zero. Thus,

the subspace of [throw] images I is a vanishingly small part

of all possible images.
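To make the image space concrete, the following is a minimal sketch of how one (θ, v) pair could be turned into a 100×100 trajectory image. The drag-free physics, the value of g, the 12 m image extent and all function names are illustrative assumptions, not details given in the text.

```python
import math

G = 9.81        # gravitational acceleration in m/s^2 (assumed)
SIZE = 100      # image is SIZE x SIZE pixels, as in the text
EXTENT = 12.0   # metres covered by the image in x and y (hypothetical)

def trajectory_image(theta_deg, v, steps=400):
    """Rasterize a drag-free throw into a SIZE x SIZE binary image."""
    theta = math.radians(theta_deg)
    flight_time = 2.0 * v * math.sin(theta) / G
    image = [[0] * SIZE for _ in range(SIZE)]
    for k in range(steps + 1):
        t = flight_time * k / steps
        x = v * math.cos(theta) * t
        y = v * math.sin(theta) * t - 0.5 * G * t * t
        col = int(x / EXTENT * (SIZE - 1))
        # Row 0 is the top of the image; clamp tiny negative heights to 0.
        row = SIZE - 1 - int(max(y, 0.0) / EXTENT * (SIZE - 1))
        if 0 <= row < SIZE and 0 <= col < SIZE:
            image[row][col] = 1
    return image

def as_vector(image):
    """Flatten the image into a point of R^(SIZE*SIZE)."""
    return [pixel for row in image for pixel in row]

vector = as_vector(trajectory_image(45.0, 10.0))
```

Each throw then corresponds to one point with 10,000 coordinates, although only the two parameters θ and v were varied to produce it.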

Next, we ask what types of changes we can

make to an image while remaining within this subspace. In

fact, since each throw varies only on the parameters (θ, v),

there are only two ways in which we can modify the images while remaining locally within the subspace I of throw

trajectory images. This is the dimensionality of the local

tangent space at any point, and by stitching up these tangent spaces we can model the entire subspace as a nonlinear manifold of the same intrinsic dimensionality. The

structure of this image manifold exactly mimics the structure of the motor parameters (the motor manifold). They

can be mapped to a single joint manifold (Davenport et al.

2010), which can be discovered using standard non-linear

Bootstrapping a lexicon and syntax. For the language

task (section 3), we consider a system which already has

a rudimentary model for [throw], being exposed to a set of

narratives while observing different instances of throwing.

The narratives are crowdsourced from speakers for a set of

synthetic videos which have different actions (throw or roll),

agents (“dome” or “daisy” - male/female), thrown object or

trajector (ball or square), colour of trajector (red or blue) and

path (on target, or short, or long). We work with transcribed

text, and not with direct speech, so we are assuming that our

agent is able to isolate words in the input.

An important aspect of working with crowdsourced data

is that descriptions of the same action vary widely. For the

very beginning learner, we need a more coherent input, and

we first identify a small coherent subset (called the family

lect) on which initial learning is done. This is then extended

to the remaining narratives (the multi-lect).

The approach learns fragments of the linguistic discourse

incrementally. Starting with a few high-confidence words

(fig. 4), it uses these to learn larger phrases, interleaving the

partial syntax with the semantic knowledge to build larger

syntactic patterns.

This partial-analysis based approach is substantially

different from other attempts at grounded modeling in

NLP (Madden, Hoen, and Dominey 2010), (Kwiatkowski

et al. 2011), (Nayak and Mukerjee 2012). It is also different from attempts that learn only nouns and their refer-


Table 1: Sum Absolute Error (Velocity) falls as N increases

Figure 3: Throwing darts. a) Two trajectories that hit the

board near the middle. b) A band for “good throws” in the

2D manifold of projectile images, with a tighter curve for

the “best” throws. This model can now be used to make suggestions such as “throw it higher”.

Figure 4: High confidence lexeme discovery : Contrastive

scores and dominance ratio for top lexemes in. Highlighted

squares show high-confidence lexemes (ratio more than

twice)

dimensionality reduction algorithms such as ISOMAP. In

fig. 2, we show the resulting manifold obtained using a Hausdorff distance metric (Huttenlocher, Klanderman, and Rucklidge 1993): $h(A, B) = \max_{a \in A} \min_{b \in B} \|a - b\|$, symmetrized.
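As an illustration of the distance used between trajectory images, here is a minimal pure-Python sketch of the directed and symmetrized Hausdorff distance between two point sets. Taking the maximum of the two directed distances is one common symmetrization and is an assumption here, as are the function names and the `pixel_points` helper.

```python
import math

def directed_hausdorff(A, B):
    """h(A, B): the largest distance from a point of A to its nearest point in B."""
    return max(min(math.dist(a, b) for b in B) for a in A)

def hausdorff(A, B):
    """Symmetrized distance: the larger of h(A, B) and h(B, A) (assumed choice)."""
    return max(directed_hausdorff(A, B), directed_hausdorff(B, A))

def pixel_points(image):
    """Coordinates (row, col) of the lit pixels of a binary image."""
    return [(r, c) for r, row in enumerate(image) for c, p in enumerate(row) if p]

A = [(0.0, 0.0), (1.0, 0.0)]
B = [(0.0, 0.0), (1.0, 0.0), (1.0, 3.0)]
d = hausdorff(A, B)   # every point of A lies in B, but (1, 3) is 3 away from A
```

A matrix of such pairwise distances is the kind of input that a neighbourhood-graph method such as ISOMAP works from.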

The same idea can be used to find correlations in any system involving a motion or a change of initial conditions, using algorithm 1:

Barto 2012).

As in those studies, our model also ignores lateral deviations, and we consider successful trajectories as those

that intersect the dartboard near its center (fig. 3a). In order

to throw the dart, the system focuses on a “good zone” in

the latent space (central band in fig. 3b), and within it prefers

throws that are more nearly orthogonal to the board at contact (short curved axis). Of course, actual task performance

will improve with experience (more data points in the vicinity of the goal). Such an abstract model only provides a starting point.
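The selection of a “good zone” can be sketched in parameter space as follows. This is a simplified stand-in that filters (θ, v) pairs directly rather than points on the learned manifold; the drag-free physics, a vertical board, the board distance, centre height and tolerance are all hypothetical values chosen for illustration.

```python
import math

G = 9.81  # m/s^2 (assumed)

def height_at(theta_deg, v, distance):
    """Height of a drag-free throw when it reaches the board plane."""
    th = math.radians(theta_deg)
    t = distance / (v * math.cos(th))
    return v * math.sin(th) * t - 0.5 * G * t * t

def impact_angle(theta_deg, v, distance):
    """Angle of the velocity to the horizontal at the board, in degrees.
    Near 0 means nearly orthogonal to a vertical board."""
    th = math.radians(theta_deg)
    t = distance / (v * math.cos(th))
    return math.degrees(math.atan2(v * math.sin(th) - G * t, v * math.cos(th)))

def good_zone(throws, distance, centre, tol):
    """Throws that cross the board plane within tol of the centre height."""
    return [(th, v) for th, v in throws
            if abs(height_at(th, v, distance) - centre) <= tol]

grid = [(th, v) for th in range(5, 86, 5) for v in (6.0, 8.0, 10.0, 12.0)]
zone = good_zone(grid, distance=5.0, centre=1.7, tol=0.3)
# Within the zone, prefer the flattest arrival at the board.
best = min(zone, key=lambda p: abs(impact_angle(p[0], p[1], 5.0)))
```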

Bootstrapping Language

In order to learn language, we create a set of 15 situations

involving the actions [throw] and [roll] (one of the agents is

shown in fig. 1a). The videos of these actions are then put

up on our website. The commentaries were contributed largely by students on the campus network.

We obtain 18 Hindi and 11 English transcribed narratives.

The compiled commentaries vary widely in lexical and

constructional choices - e.g. one subject may say "daisy

throws a blue square", whereas another describes the same

video as "now daisy threw the blue box which fell right

on the mark". Differences in linguistic focus cause wide

changes in lexical units and constructions. As described earlier, we first select a coherent subset of narratives - those that

have a more consistent vocabulary, and identify these as the

family lect.

The abstract motor model already learned for throw is

used as a classifier manifold - if the action falls in this

class (is modelable in terms of a local neighbourhood), it is

marked as a throw. The other class, roll, is not implemented

specifically, but taken to be the negation. In this case, the

two classes can be separated quite easily. In addition to the

This is a generic algorithm for discovering patterns in

visuo-motor activity. Initially, its estimates of how to achieve

a throw are very poor, but they improve with experience. We

model this process in table 1 - as the number of inputs N increases, the error in predicting a throw decreases - at N=100,

the error is nearly twice that at N=1000.
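The effect of practice on prediction error can be illustrated with a much simpler stand-in for the manifold model: a nearest-neighbour lookup from a visual summary of the throw back to its motor parameters. The sketch below assumes drag-free throws, uses (range, peak height) as the visual feature, and samples practice throws on a regular grid; none of these choices come from the text, and the numbers it produces are not those of Table 1.

```python
import math
import random

G = 9.81  # m/s^2 (assumed)

def features(theta_deg, v):
    """Visual summary of a drag-free throw: (range, peak height)."""
    th = math.radians(theta_deg)
    return (v * v * math.sin(2 * th) / G, (v * math.sin(th)) ** 2 / (2 * G))

def practice(n_side):
    """n_side x n_side practice throws on a regular (angle, velocity) grid."""
    throws = []
    for i in range(n_side):
        for j in range(n_side):
            theta = 20.0 + 50.0 * i / (n_side - 1)
            v = 5.0 + 5.0 * j / (n_side - 1)
            throws.append((features(theta, v), (theta, v)))
    return throws

def predict_params(memory, observed):
    """Motor parameters of the stored throw that looks most similar."""
    return min(memory, key=lambda item: math.dist(item[0], observed))[1]

def sum_abs_velocity_error(memory, queries):
    return sum(abs(predict_params(memory, features(th, v))[1] - v)
               for th, v in queries)

rng = random.Random(0)
queries = [(rng.uniform(20.0, 70.0), rng.uniform(5.0, 10.0)) for _ in range(200)]
err_small = sum_abs_velocity_error(practice(10), queries)  # N = 100 throws
err_large = sum_abs_velocity_error(practice(32), queries)  # N = 1024 throws
```

With denser practice data the nearest stored throw lies closer to each query, so the summed velocity error for the larger N should come out smaller.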

Using the model to throw darts

The visuo-motor manifold model developed here is not task-specific, but can be applied to many tasks involving projectile motion: catching a ball, throwing at a basket, darts,

tennis, etc. As an example we can consider darts, a problem often addressed in the psychology and robotics literature (Müller and Sternad 2009; da Silva, Konidaris, and


Table 2: Initial constructions learned from the trajector delimited strings

Table 3: Syntax for trajector - iteration 1, Family-lect

action being recognized, we also assume that the system can identify

the agent who throws, the object thrown, and its attributes

- shape (square vs circle), and colour (red vs blue). These

contrasts - e.g. the two agents, [Dome] with a moustache

(fig. 1, male) and [Daisy] with a ponytail - are crucial to

word learning. [Note: we use square brackets to indicate

a concept, the semantic pole of a linguistic symbol.] This

contrastive approach has been suggested as a possible strategy applied by child learners (Markman 1990). Given two

contrasting concepts c1 , c2 , we compute the empirical joint

probabilities of word (or later, n-gram) ωi and concept c1 , c2 ,

and compute their contrast score:

patterns which can be used to induce broader regularities.

We compare two available unsupervised grammar induction

systems - Fast unsupervised incremental parsing (Seginer

2007) and ADIOS (Solan et al. 2005); results shown here

adopt the latter because of a more explicit handling of discovered lexical classes.
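Restricting attention to strings that start and end with a high-confidence label can be sketched as below. This is only the filtering step that would precede grammar induction; the function name and the `max_len` cap on fragment length are hypothetical.

```python
def delimited_strings(tokens, anchors, max_len=5):
    """Contiguous spans of tokens that both start and end with an anchor word."""
    positions = [i for i, word in enumerate(tokens) if word in anchors]
    spans = []
    for start in positions:
        for end in positions:
            if start < end and end - start + 1 <= max_len:
                spans.append(tokens[start:end + 1])
    return spans

narrative = "daisy threw the red coloured ball".split()
fragments = delimited_strings(narrative, anchors={"red", "ball"})
# fragments == [["red", "coloured", "ball"]]
```

The resulting fragments form a small, tightly bound corpus on which an induction system can look for word-order regularities.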

The initial patterns learned for the trajector in this manner

are shown in Table 2. These are generalized using the

phrase substitution process to yield new lexemes, including

(ball), [ball], in Hindi and “blue” in English

(Table 3). This is done based on the family-lect (Figure

4), and the filtered sub-corpus is used to learn patterns and

equivalence classes.

$$S_{\omega_i, c_1} = \frac{P(\omega_i, c_1)}{P(\omega_i, c_2)}$$
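A minimal sketch of the contrast score on toy data follows. The joint probabilities are estimated here from token counts over (narrative, concept) pairs, and a small `eps` is added to the denominator to avoid division by zero; both choices, the toy sentences and the function names are assumptions made for illustration.

```python
from collections import Counter

def joint_probabilities(observations):
    """Empirical P(word, concept) from (tokens, concept) pairs."""
    counts, total = Counter(), 0
    for tokens, concept in observations:
        for word in tokens:
            counts[(word, concept)] += 1
            total += 1
    return {pair: n / total for pair, n in counts.items()}

def contrast_score(probs, word, c1, c2, eps=1e-6):
    """S = P(word, c1) / P(word, c2), smoothed by eps (assumed)."""
    return probs.get((word, c1), 0.0) / (probs.get((word, c2), 0.0) + eps)

def ranked_labels(probs, c1, c2):
    """All words, sorted by decreasing contrast score for c1 against c2."""
    words = {w for (w, _) in probs}
    return sorted(((w, contrast_score(probs, w, c1, c2)) for w in words),
                  key=lambda pair: pair[1], reverse=True)

observations = [
    ("daisy throws a red ball".split(), "ball"),
    ("daisy throws a red square".split(), "square"),
    ("dome throws a blue ball".split(), "ball"),
    ("dome rolls the blue square".split(), "square"),
]
probs = joint_probabilities(observations)
ranking = ranked_labels(probs, "ball", "square")
```

In this toy corpus “ball” scores far above every other word for [ball], while words that occur equally with both concepts, such as “red”, score close to 1.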

The system now knows patterns for [red square], say, and

it now pays attention to situations where the pattern is almost present, except for a single substitution. It can look

into the other semantic classes as well (e.g. [blue square],

[red ball]). In most of these instances (Fig. 4) we already

have partial evidence for these units from their contrastive

scores. Now if we discover new substitution phrases p1 in

the position of p0, referring to a concept in the same semantic class (e.g. [ball] for [square]), and if p1 is already

partially acceptable for [ball] based on contrastive probability, then p1 becomes an acceptable label for this semantic

concept. This process iterates - new lexemes are used to induce new patterns, and then further new lexemes, until the

patterns stabilize. This is then extended to the entire corpus

beyond the small family lect; results are shown in Table 4.
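One iteration of the substitution step might look like the sketch below, restricted to single-word substitutions in phrases of equal length. The acceptance threshold on the partial contrastive score is hypothetical, as are the function names and the toy scores.

```python
def substitution_candidates(pattern, phrases):
    """Pairs (p0, p1) where a phrase differs from the known pattern
    in exactly one position: p1 appears in the place of p0."""
    found = []
    for phrase in phrases:
        if len(phrase) != len(pattern):
            continue
        diffs = [i for i, (p, q) in enumerate(zip(pattern, phrase)) if p != q]
        if len(diffs) == 1:
            i = diffs[0]
            found.append((pattern[i], phrase[i]))
    return found

def accept(candidates, partial_score, threshold=1.0):
    """Keep substitutions whose new word already has partial contrastive support."""
    return [(p0, p1) for p0, p1 in candidates
            if partial_score.get(p1, 0.0) > threshold]

known = ["red", "square"]
phrases = [["red", "ball"], ["blue", "square"], ["a", "red", "ball"], ["blue", "ball"]]
candidates = substitution_candidates(known, phrases)
new_labels = accept(candidates, partial_score={"ball": 1.8, "blue": 0.4})
# candidates == [("square", "ball"), ("red", "blue")]
# new_labels == [("square", "ball")]
```

Accepted labels would then be added to the lexicon and the pattern search repeated until no new patterns appear.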

The table captures a reasonable diversity of Noun Phrase

patterns describing coloured objects. Note that words like

“red” and “ball” have also become conventionalized in

Hindi. We also observe that the token niley appears in the

4-word pattern niley rang kaa chaukor and is highly confident even from a single occurrence; this reflects the fast

mapping process observed in child language acquisition after the initial grounding phase (Bloom 2000). As the iteration progresses, these patterns are used for further enhancing

the learner's inventory of partial grammar.

We also compare the corpus of narratives here with that

from a larger unannotated corpus (Brown Corpus for English

and the CIIL/IITB Corpus for Hindi). We look for words that

are more frequent in the domain input than in a general situation. This rules out many frequent words like a, and, the for

English, and [is, of] (Hindi). The small set of high-confidence

words - whose contrastive probability is more than

twice the next best match - are highlighted in Fig. 4.
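The comparison against a general corpus can be sketched as a relative-frequency filter. The add-one smoothing, the `min_ratio` cut-off and the toy token lists are assumptions for illustration; the text does not specify how the comparison was computed.

```python
from collections import Counter

def domain_specific(domain_tokens, general_tokens, min_ratio=2.0):
    """Words whose smoothed relative frequency in the domain corpus is at
    least min_ratio times their frequency in the general corpus."""
    d, g = Counter(domain_tokens), Counter(general_tokens)
    vocab = len(set(domain_tokens) | set(general_tokens))
    keep = {}
    for word in d:
        p_dom = (d[word] + 1) / (len(domain_tokens) + vocab)
        p_gen = (g[word] + 1) / (len(general_tokens) + vocab)
        if p_dom / p_gen >= min_ratio:
            keep[word] = p_dom / p_gen
    return keep

domain = "daisy threw the ball and dome threw the square".split()
general = "the cat and the dog sat on the mat and the bird sang".split()
kept = domain_specific(domain, general)
# "the" and "and" are filtered out; the domain words remain.
```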

Interleaving of Word / Syntax learning

Once the system has a few grounded lexemes, we proceed

to discovering syntactic constructions. At the start, we try

to learn the structure of small contiguous elements. One assumption we use here is that concepts that are very tightly

bound (e.g. object and its colour) are also likely to appear

in close proximity in the text (Markman 1990). Another assumption (often called syntactic bootstrapping) is used for

mapping new phrases and creating equivalence classes or

contextual synonyms. This says that given a syntactic pattern, if phrase p1 appears in place of a known phrase p0, and

if this substitution is otherwise improbable (e.g. the phrase is

quite long), then p0, p1 are synonyms (if in the same semantic class) or they are in the same syntactic lexical category.

We find that one type of trajector (e.g. “ball”) and its

colour attribute (e.g. “lAl”, [red]) have been recognized.

So the agent pays more attention to situations where these

words appear. Computationally this is modelled by trying

to find patterns among the strings starting and ending with

(delimited by) one of these high-confidence labels (e.g. “red

coloured ball”). This delimited corpus consists of strings

related to a known trajector-attribute complex. Within these

tight fragments, we show that standard grammar induction procedures are able to discover preliminary word-order

Verb phrases and sentence syntax

Having learned the syntax for a trajector, this part of the input is now known with some confidence, and the learner can

venture out to relate the agent to the action and path. In this

study, we failed to find any high-confidence lexemes related

to path, hence we were not able to bootstrap that aspect. In

the following we restrict ourselves to patterns for the semantic classes [agent], [action], [trajector].

At this stage, the agent notes that many of the words seem


Figure 5: Verb learning. Recomputed contrast scores after

morphological clustering. Note that “threw” now appears as

a high-confidence label.

Table 4: Learned constructions pertaining to trajectors : The

coloured units are the initially grounded lexemes

Table 6: Sentence syntax discovered - iteration 1 - FL

used to obtain a filtered corpus. To simplify the task of discovering verb phrase syntax, the known trajector syntax (table 4) is considered as a unit (denoted as [TRJ]), and the

concept of agent ([daisy] or [dome]) is denoted [AGT]. Results of initial patterns, obtained based on the two known action lexemes, are shown in Table 5. Note that pattern matching with ADIOS now discovers the class {a, the} as equivalence

class E23 (replaced hereon).
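Treating known trajector phrases and agent words as single units can be sketched as a simple rewriting pass over each narrative. The longest-match-first strategy and the function name are assumptions; the phrase lists stand in for the patterns learned in the earlier trajector stage.

```python
def abstract_sentence(tokens, trajector_phrases, agents):
    """Replace known trajector phrases by [TRJ] and agent words by [AGT]."""
    phrases = sorted(trajector_phrases, key=len, reverse=True)  # longest first
    out, i = [], 0
    while i < len(tokens):
        for phrase in phrases:
            if tokens[i:i + len(phrase)] == list(phrase):
                out.append("[TRJ]")
                i += len(phrase)
                break
        else:
            out.append("[AGT]" if tokens[i] in agents else tokens[i])
            i += 1
    return out

sentence = "daisy threw the red ball".split()
pattern = abstract_sentence(
    sentence,
    trajector_phrases=[("red", "ball"), ("the", "red", "ball")],
    agents={"daisy", "dome"},
)
# pattern == ["[AGT]", "threw", "[TRJ]"]
```

The abstracted strings are much shorter and more uniform than the raw narratives, which makes verb-phrase patterns easier to detect.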

Next, we interleave this syntactic discovery with lexical discovery, permitting also bigram substitutions. Thus

“threw” and “rolled” are found to be substitutable by has

thrown, is throwing; and has slid respectively. This gives us

the more general results of Table 6. Again the system iterates

over the corpus till the discovery of new patterns converges

(results in Table 7). An interesting observation is that the Hindi

data finds a phrase in the TRJ position, (nilaa chaukor) [blue square]. This had not been learned in the trajector

iteration, since nilaa was less frequent.

Thus we see that with this approach we are able to acquire

several significant patterns. These patterns apply to only a

single action input, and for a very limited set of other participants. But it would be reasonable to say that the agent

may observe similar structures elsewhere - e.g. in a context involving hitting, say, if we have the sentence “Daisy hit

Dome” then the agent may use the syntax of [AGT] [verb]

[TRJ] to extend to this context and guess that “hit” may be

a verb and “Dome” the object of this action (which it knows

Table 5: Initially acquired sentence constructions

rather similar (e.g. “sarkAyA”, “sarkAyI” (H); or “throwing”, “thrown” (E)). A text-based morphological similarity

analysis reveals several clusters with alterations at the end

of words (Fig. 5). To quantify this aspect, we consider normalised Levenshtein distance and perform a morphological

similarity analysis. Since our input is text, we limit ourselves

to analysis based on the alphabetic patterns as opposed to

phonemic maps. Similar words are clustered using a normalized similarity index. Thus we have twelve type instances of

(-aa) - (-ii), and seven types for (-aa) - (-e). We find that these variants - e.g. “sarkaayaa”, “sarkaayii”

- appear in the same syntactic and semantic context. These

clusters are now used to further strengthen the lexeme and

action association.
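The morphological similarity analysis can be sketched with a normalised Levenshtein distance and a simple greedy grouping. The 0.3 threshold and the single-link grouping rule are assumptions made for this sketch; the text does not give the exact index or clustering procedure.

```python
def levenshtein(a, b):
    """Edit distance with unit-cost insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute or match
        prev = cur
    return prev[-1]

def normalized_distance(a, b):
    """Edit distance divided by the length of the longer word."""
    return levenshtein(a, b) / max(len(a), len(b), 1)

def cluster(words, threshold=0.3):
    """Greedy grouping: a word joins the first cluster containing a close member."""
    clusters = []
    for word in words:
        for members in clusters:
            if any(normalized_distance(word, m) <= threshold for m in members):
                members.append(word)
                break
        else:
            clusters.append([word])
    return clusters

groups = cluster(["sarkAyA", "sarkAyI", "throwing", "thrown", "daisy"])
# groups == [["sarkAyA", "sarkAyI"], ["throwing", "thrown"], ["daisy"]]
```

Because the comparison is purely alphabetic, irregular forms such as “threw” fall outside the “throwing”/“thrown” cluster at this threshold and need the syntactic and semantic context to be linked.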

Again, we use an iterative process, starting with grounded

unigrams, moving a level up to learn simple word-order

patterns, learning alternations and lexical classes through

phrase substitution, and so on to acquire a richer lexicon and

syntax. The learner has the concept of agent and has associated the words “daisy” and “dome”. One action word is

known for both Hindi and English (Fig. 5), and these are


Will the approach scale to other actions? The first task is

to demonstrate scalability, by including actions other than

[throw]. One of our main strengths is that neither the visuomotor nor the linguistic components had any kind of annotation, so the training dataset needed for this should be relatively easy to generate compared to treebanks and semantically annotated data.

Another problem would be to use the motor model to

guess intentions and formulate sentences such as “try to

throw a little higher”. In the present work, the intention can

plausibly be captured, but we are not able to handle the linguistic part yet. This is because the last part of the sentences

in these narratives - those that encode the quality of the

throws (“overshot the mark”, “hit the target exactly”) - exhibit

too much variation to be learned from this small corpus, but

it should be possible to do this with a larger dataset.

A third area would be to extend this work into metaphor,

e.g. sentences such as (d) “Sam threw the searchlight beam

at the roof.”, or (e) "Sam threw her a searching look." These

can be related to the motor model of [throw], as a motion.

These are typically never encountered in a grounded

manner, but occur quite often in language-only input. Linking this

language input to the existing grounded models would open

up new vistas for concept and language discovery.

References

Binder, J. R., and Desai, R. H. 2011. The neurobiology of semantic memory. Trends in Cognitive Sciences 15(11):527–536.

Bloom, P. 2000. How Children Learn the Meaning of Words.

MIT Press.

da Silva, B. C.; Konidaris, G.; and Barto, A. G. 2012. Learning parameterized skills. In Proc. ICML.

Davenport, M. A.; Hegde, C.; Duarte, M. F.; and Baraniuk,

R. G. 2010. High-dimensional data fusion via joint manifold learning. In AAAI Fall 2010 Symposium on Manifold

Learning, Arlington, VA.

Huttenlocher, D. P.; Klanderman, G. A.; and Rucklidge, W. J. 1993. Comparing images using the Hausdorff distance. IEEE PAMI 15(9):850–863.

Kwiatkowski, T.; Zettlemoyer, L.; Goldwater, S.; and Steedman, M. 2011. Lexical generalization in CCG grammar induction for semantic parsing. In Proc. EMNLP, 1512–1523.

Liang, P.; Jordan, M. I.; and Klein, D. 2013. Learning

dependency-based compositional semantics. Computational

Linguistics 39(2):389–446.

Madden, C.; Hoen, M.; and Dominey, P. 2010. A cognitive neuroscience perspective on embodied language for human-robot cooperation. Brain and Language 112(3):180–188.

Markman, E. M. 1990. Constraints children place on word

meanings. Cognitive Science 14(1):57–77.

Müller, H., and Sternad, D. 2009. Motor learning: changes in the structure of variability in a redundant task. In Progress in Motor Control, 439–456.

Nayak, S., and Mukerjee, A. 2012. Grounded language acquisition: A minimal commitment approach. In Proc. COLING 2012, 2059–76.

Table 7: Learned constructions over trajector phrases. The

coloured units are the initially grounded lexemes

from the semantics). Thus, once a few patterns are known,

it becomes easier to learn more and more patterns, which is

the fast mapping stage we have commented upon earlier.

Note that our lexical categories are not necessarily the

same as those of a linguist. But those categories are often

a matter of fierce contention, and most human language

users are blissfully unaware of them; perhaps our system can also do quite well without mastering them. At

the same time, most of the structures (e.g. Adj-N (red ball,

laal chaukor), Art-N (E23 ball), etc.) are indeed discovered.

It also discovers agreement between the unit “chaukor”,

[square, M] and verbs ending in -aa. However, following

the usage-based approach (Tomasello 2009),

we would be inclined to view these constructions as reducing the description length needed to code for the strings arising in this context.

Conclusion

The above analysis provides a proof-of-concept that a system starting with very few priors can, a) combine motor,

visual (and possibly other modalities) into an integrated

model, and b) use this rudimentary concept knowledge to

bootstrap language. We also note that such a system can

then learn further refinements to this concept space using

language alone.

We believe that this novel idea of linking modalities will

open up a number of new areas in AI. We mention three

possible directions.


Neumann, G.; Daniel, C.; Paraschos, A.; Kupcsik, A.; and

Peters, J. 2014. Learning modular policies for robotics.

Frontiers in Computational Neuroscience 8:62.

Rizzolatti, G., and Arbib, M. A. 1998. Language within our grasp. Trends in Neurosciences 21(5):188–194.

Roy, D. 2005. Semiotic schemas: A framework for grounding language in action and perception. Artificial Intelligence

167(1-2):170–205.

Seginer, Y. 2007. Fast unsupervised incremental parsing. In

ACL Ann. Meet, volume 45, 384.

Solan, Z.; Horn, D.; Ruppin, E.; and Edelman, S. 2005. Unsupervised learning of natural languages. PNAS 102(33):11629–34.

Steels, L., and Loetzsch, M. 2012. The grounded naming

game. Experiments in Cultural Language Evolution. Amsterdam: John Benjamins.

Tomasello, M. 2009. Constructing a language: A usage-based theory of language acquisition. Harvard University Press.
