Proceedings of the Ninth Symposium on Abstraction, Reformulation and Approximation

Planning with State Uncertainty via

Contingency Planning and Execution Monitoring

Minlue Wang and Richard Dearden

School of Computer Science, University of Birmingham,

Birmingham B15 2TT, UK

{mxw765,rwd}@cs.bham.ac.uk

In the past, quasi-deterministic problems have been

solved by assuming the world is perfectly known in advance (for the MER Mars rovers (Bresina and Morris 2007)

this is done by attaching goals to doing experiments on targets whether or not the target turns out to be interesting) or

will be known at execution time (Brenner and Nebel 2006;

Bresina et al. 2002; Pryor and Collins 1996). However,

these approaches ignore the noise in the observations, assuming that any observation made is correct. This means

that they can perform arbitrarily poorly in the presence of

sensor noise, and they also have no way of choosing between different ways to make observations. An alternative is to consider these problems as partially observable

Markov decision problems (POMDPs) (Cassandra, Kaelbling, and Littman 1994). POMDPs allow an explicit representation of quasi-deterministic problems. However, in

practice they are very hard to solve—state of the art exact solvers can manage hundreds or thousands of states for

general problems (Poupart 2005). As POMDPs can represent a more general class of problem (allowing stochastic actions as well as observations), it should be possible to exploit the division between state-changing actions

(deterministic) and observation-making actions (stochastic)

to solve quasi-deterministic problems more efficiently (although quasi-deterministic POMDPs are easier to solve

than general POMDPs, finding -optimal policies is still in

PSPACE (Besse and Chaib-draa 2009)).

Abstract

This paper proposes a fast alternative to POMDP planning for

domains with deterministic state-changing actions but probabilistic observation-making actions and partial observability

of the initial state. This class of planning problems, which we

call quasi-deterministic problems, includes important realworld domains such as planning for Mars rovers. The approach we take is to reformulate the quasi-deterministic problem into a completely observable problem and build a contingency plan where branches in the plan occur wherever an observational action is used to determine the value of some state

variable. The plan for the completely observable problem is

constructed assuming that state variables can be determined

exactly at execution time using these observational actions.

Since this is often not the case due to imperfect sensing of

the world, we then use execution monitoring to select additional actions at execution time to determine the value of the

state variable sufficiently accurately. We use a value of information calculation to determine which information gathering

actions to perform and when to stop gathering information

and continue execution of the branching plan. We show empirically that while the plans found are not optimal, they can

be generated much faster, and are of better quality than other

approaches.

1

Introduction

Many robotic decision problems are characterised by worlds

that are not perfectly observable but have deterministic or

almost deterministic actions. An example is a Mars rover:

thanks to low-level control and obstacle avoidance, rovers

can be expected to reach their destinations reliably, and can

collect and communicate data, but they do not know in advance which science targets are interesting and hence will

provide valuable data. Similarly, robots performing tasks

such as security or cognitive assistance are generally able to

navigate reliably, but use unreliable vision algorithms to detect the people and objects with which they are supposed

to interact. Following Besse and Chaib-draa (2009), we

will refer to problems with deterministic actions but stochastic observations as quasi-deterministic problems, which differ from Deterministic-POMDPs (DET-POMDPS) (Bonet

2009) by taking into account of uncertainty from observation model.

The major problem with applying POMDP approaches to

realistic planning problems like the Mars rovers is the sheer

size of the problems. Using point-based approximations and

structured representations similar to those used in classical planning (Poupart 2005), problems with tens of millions

of states can be solved approximately, but even that corresponds to a classical planning problem with only 25 binary

variables, which is a quite small problem by the standards

of classical deterministic planning. The alternative we propose in this paper is to construct a series of classical deterministic planning problems from the quasi-deterministic

problem. By solving each of these deterministic problems

we construct a contingent plan—one that contains branches

to be chosen between at run-time. However, the contingent

plan is not directly executable as the conditions that lead to

each branch may not be known. Therefore we use execution

monitoring to determine which branch to take at run time by

c 2011, Association for the Advancement of Artificial

Copyright Intelligence (www.aaai.org). All rights reserved.

132

s ∈ S, a ∈ A for the reward the agent receives for executing action a in state s.

executing one or more observation-making actions.

To generate the classical planning problem from the original quasi-deterministic problem, we take the approach of

Yoon et al. (2007) and determinise the problem by replacing the probabilistic effects of actions and observations with

their most probable outcome (this is called single outcome

determinisation in Yoon et al.). We then pass this problem

to our classical planner (FF (Hoffmann and Nebel 2001)) to

generate a plan. For each point in this plan where an observation action is executed (also for non-deterministic actions) and therefore a state different from the determinised

one could occur, we use FF to generate another plan which

we insert as a branch. This process continues until the contingent plan is completed.

As the contingent plan is executed, the execution monitoring system maintains a belief distribution over the values

of the state variables in exactly the same way a POMDP

planner would. Whenever a branch point is reached that depends on the value of some variable x (in general, branch

points could be based on functions of many variables; for

ease of exposition we will present the single variable case),

execution monitoring repeatedly uses a value of information

calculation to greedily choose an observation action to try to

compute the value of x. Once no observation action is available that has greater than zero value, execution continues

with the branch with the highest expected value.

In Section 2 we formally present quasi-deterministic planning problems and describe how we generate a classical

planning problem from them. Section 3 then presents the

contingency planning algorithm in detail, while Section 4

presents the execution monitoring approach. We discuss related work in Section 5, present an experimental evaluation

in Section 6, and finish with our conclusions and future directions.

2

Quasi-deterministic planning problems can be defined as

problems in which the actions are of two types: statechanging actions and observation-making actions. We

define state-changing actions as those where ∀s∃s :

P (s, a, s ) = 1, P (s, a, s ) = 0 for s = s and

∀s, a, s ∃o : P (s, a, s , o) = 1, P (s, a, s , o ) = 0 for

o = o. That is, for every state they are performed in there is

exactly one state they transition to, and their observations are

uninformative. In contrast, for observation-making actions

the observation function O is unconstrained and the state

does not change: ∀s, a : P (s, a, s) = 1, P (s, a, s ) = 0 for

s = s1 . This is the point at which the model differs from

the DET-POMDP formulation (Bonet 2009), where the observation function must also be deterministic.

In practice, the problems we are interested in are unlikely

to be specified in a completely flat general POMDP form.

Rather, we expect that just as in classical planning, they

will be specified using state variables, for example in a dynamic Bayesian network as in symbolic Perseus (Poupart

2005), which we will use for comparison purposes. Similarly, we use factored-observable models (Besse and Chaibdraa 2009) to simplify the representation of the observation

space.

In addition to the definition of the POMDP itself, we also

need an initial state and an optimality criterion. For quasideterministic POMDPs where S is represented using state

variables as in classical planning, the state variables can be

divided into completely observable variables So (such as the

location of the rover) and partially observable ones Sp (such

as whether a particular rock is of interest). Since all the statechanging actions are deterministic, the partially observable

variables are exactly those that are not known with certainty

in the initial state. We represent this by an initial belief state

and for all s ∈ Sp write b(s) for the probability that s is true

(for the purposes of exposition, we will assume all variables

are Boolean). For the optimality criterion, so as to be able to

compare performance directly between our approach and a

POMDP solver (symbolic Perseus), we will use total reward.

We illustrate this using the RockSample domain (Smith

and Simmons 2004), in which a robot can visit and collect

samples from a number of rocks at locations in a rectangular

grid. Some of the rocks are “good” indicating that the robot

will get a reward for sampling them. Others are “bad” and

the robot gets a penalty for sampling them. The robot’s statechanging actions are to move north, south, east or west in

the grid, or to sample a rock, and the observational actions

are to check each rock, which returns a noisy observation of

whether the rock is good or not.

Quasi-Deterministic Planning Problems

The POMDP model of planning problems is sufficiently

general to capture all the complexities of the domains we

consider here (although in principle our approach will work

for problems with resources and durative actions as well).

Formally, a POMDP is a tuple S, A, T, Ω, O, R where:

S is the state space of the problem. We assume all states

are discrete.

A is the set of actions available to the agent.

T is the transition function that describes the effects of the

actions. We write P (s, a, s ) where s, s ∈ S, a ∈ A for

the probability that executing action a in state s leaves the

system in state s .

Ω is the set of possible observations that the agent can

make.

O is the observation function that describes what is

observed when an action is performed. We write

P (s, a, s , o) where s, s ∈ S, a ∈ A, o ∈ Ω for the probability that observation o is seen when action a is executed

in state s resulting in state s .

R is the reward function that defines the value to the

agent of particular activities. We write R(s, a) where

Classical Planning Representation

To use a contingency planner to solve this problem, we

translate it into the probabilistic planning domain definition

1

We note that our approach does not require that all statechanging actions have uninformative observations. However, the

efficiency of the approach we describe depends on the number of

observations in the plan as this determines the number of branches.

133

language (PPDDL) (Younes and Littman 2004). PPDDL

is designed for problems that can be represented as completely observable Markov decision problems (known state

but stochastic actions). To use it for a quasi-deterministic

problem we need to represent the effects of the observationmaking actions. Following Wyatt et al. (Wyatt et al. 2010),

we do this by adding a knowledge predicate kval() to indicate the agent’s knowledge about an observation variable.

For instance, in the RockSample problem (Smith and Simmons 2004), we use kval(rover0, rock0, good) to reflect that

rover0 knows rock0 has good scientific value. The knowledge predicate is included in the effects of the observationmaking action and also appears in the goals to ensure that the

agent has to find out the value. Because state-changing actions produce uninformative observations, knowledge predicates will only appear in the effects of observation-making

actions. As an example, the checkRock action from the

RockSample domain might appear as follows:

For example, for a RockSample problem with a single

rock rock0, which is a good rock with probability 0.6, we

determinise the initial state to produce one where the rock

is good, and the goal becomes to sample a rock where

kval(rover0, rock0, good) is true. The action checkRock then

becomes determinised (in PDDL) as:

(:action checkRock

:parameters

(?r -rover ?rock -rocksample

?value -rockvalue)

:preconditions

(not (measured ?r ?rock ?value))

:effect

(and (when (and (rock_value ?rock ?value)

(= ?value good))

(and (measured ?r ?rock good)

(kval ?r ?rock good)))

(when (and (rock_value ?rock ?value)

(= ?value bad))

(and (measured ?r ?rock bad)

(kval ?r ?rock bad)))))

(:action checkRock

:parameters

(?r -rover ?rock -rocksample

?value -rockvalue)

:preconditions

(not (measured ?r ?rock ?value))

:effect

(and (when (and (rock_value ?rock ?value)

(= ?value good))

(probabilistic

0.8 (and (measured ?r ?rock good)

(kval ?r ?rock good)))

0.2 (and (measured ?r ?rock bad)

(kval ?r ?rock bad)))

(when (and (rock_value ?rock ?value)

(= ?value bad))

(probabilistic

0.8 (and (measured ?r ?rock bad)

(kval ?r ?rock bad)))

0.2 (and (measured ?r ?rock good)

(kval ?r ?rock good)))))

3

Since in reality each observation-making action in the

plan could have an outcome other than the one selected in

the determinisation, we then traverse the plan updating the

initial belief state as we go, until an observation-making action is encountered. This then forms a branch point in the

plan. For each possible value of the observed state variable

apart from the one already planned for, we generate a new

initial state using the belief state from the existing plan and

the value of the variable, and then call FF again to generate a new branch which can be attached to the plan at this

point. This process repeats until all observation-making actions have branches for every possible value of the observed

variable. The full algorithm is displayed in Algorithm 1.

Algorithm 1 Generating the contingent plan using FF

plan=FF(initial-state,goal)

while plan contains observation actions without branches

do

Let o be an initial observation making variable v = v1

without a branch in plan

Let s be the belief state after executing all actions preceding o from the initial state

for each value vi , i = 1 of v with non-zero probability

in s do

branch = FF(s ∪ (v = vi ), goal)

Insert branch as a branch at o

end for

end while

Generating Contingency Plans

Given a quasi-deterministic planning problem as described

in the previous section we seek to generate contingent plans

where each branch point in a plan is associated with one possible outcome of an observation action. We use the simple

approach of Warplan-C (Warren 1976) to generate contingent plans. There are two steps in generating contingency

plans for a quasi-deterministic planning problem. First, we

determinise the problem according to single-outcome determinisation (Yoon, Fern, and Givan 2007). For each observation action, only the most likely probabilistic effect is

chosen. Similarly, only the most likely state from the initial belief state is used to define the initial state of the determinised problem. This approach will convert a quasideterministic planning problem into a standard classical deterministic model. We then forward this determinised problem to the classical planner FF (Hoffmann and Nebel 2001).

FF then generates a plan to achieve the goal from the determinised initial state. Since the goal includes knowledge

predicates, the plan by necessity includes some observationmaking actions.

Since the approach in Algorithm 1 enumerates all the possible contingencies that could happen during execution, the

number of branches in the contingent plan is exponential

in the number of observation-making actions in the plan.

This is precisely why it is useful that the state-changing

actions do not generate observations—to keep the number of branches as low as possible. Also, since the determinised problem assumes the observation-making actions

are perfectly accurate, in any branch of the plan at most one

134

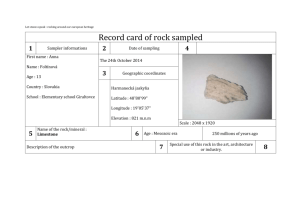

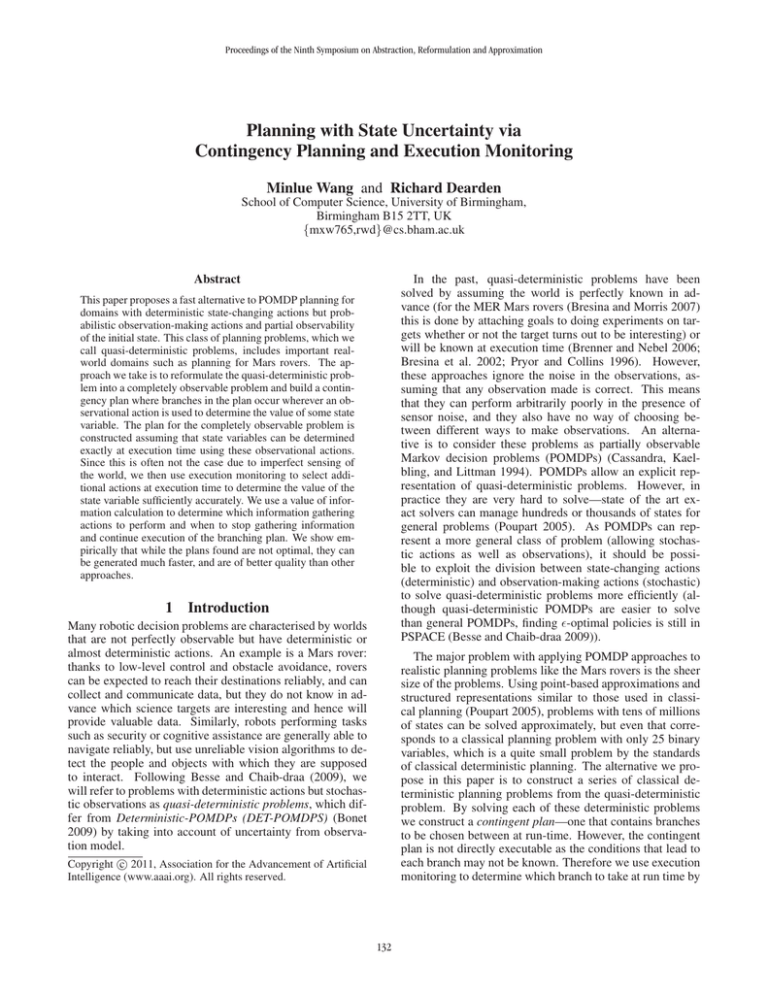

Figure 1: An example of the RockSample(4,2) domain and a contingent plan generated for that problem. The rectangles in the

plan are state-changing (mostly moving) actions and the circles are observation-making actions for the specified rock.

a1 , followed by observation action o1 , which measures state

variable c. If c is true, branch T1 will be executed, and if c is

false, branch T2 will be executed. When execution reaches

o1 , execution monitoring calculates the expected utility of

the current best branch T ∗ based on the belief state b(c) over

the value of c after a1 as follows:

observation-making action will appear for each state variable in Sp . Thus in practice, we expect there to be a relatively small number of branches. In the RockSample domain, for example, there is one branch per rock in the problem. This is illustrated in Figure 1. On the left is an example

problem from the RockSample domain with a 4x4 grid and

two rocks, while the right hand side shows the plan generated by the contingency planner. In this version of the domain, the robot gets a large positive reward if it moves to the

exit with a sample of a good rock, it gets zero reward if it

moves to the exit with no sample, and it gets a large negative

reward if it moves to the exit with a sample of a bad rock.

4

Ub (T ∗ ) = max U (Ti , b)

Ti

(1)

where Ub (T ∗ ) represents the value in belief state b of making no observations and simply executing the best branch.

U (Ti , b) is the expected value of executing branch Ti in belief state b.

Next we examine the value of performing an observationmaking action o (not necessarily the same o1 as planned)

that gives information about c. Performing o will change the

belief state depending on the observation that is returned.

Let B be the set of all possible such belief states, one for

each possible observation returned by o, and let P (b ) be

the probability of getting an observation that produces belief

state b ∈ B. Let cost(o) be the cost of performing action o.

The value of the information gained by performing o, is the

value of the best branch to take in each b , weighted by the

probability of b , less the cost of performing o and the value

of the current best branch:

P (b )Ub (T b ) − Ub (T ∗ ) − cost(o) (2)

VG(o) =

Execution Monitoring

The approach we described in Section 3 for generating

branching plans relies on relaxation of the uncertainty in the

initial states and observation actions. The results of this are

plans that account for every possible state the world might be

in but do not account for the observations needed to discover

that state. That is, they are executable only if we assume

complete observability at execution time (or equivalently,

that the observation actions are perfectly reliable as in DETPOMDPs). If, as is the case in the RockSample domain, the

sensing is not perfectly reliable and therefore the state is not

known with certainty, they may perform arbitrarily badly. To

overcome this problem we propose a novel execution monitoring and plan modification approach to increase the quality of the plan that is actually executed. During execution,

we keep track of the agent’s belief state after each selected

action via a straightforward application of Bayes rule, just

as a POMDP planner would. To select actions to perform

when we reach an observation-making action in the plan,

we utilise a value of information calculation (Howard 1966).

Suppose the plan consists of state-changing action sequence

b ∈B

Where T b is the best branch to take given belief state b :

Ub (T b ) = max U (Ti , b )

Ti

(3)

Both Equation 1 and Equation 3 rely on the ability to compute the utility of executing a branch of the plan, U (T, b).

Building the complete contingent plan allows us to estimate

135

outcome of o, weighted by the probability according to our

current belief state of getting that outcome.

The execution monitoring algorithm is given in Algorithm

2. The equations above are used to select an action to perform in each iteration, and the process repeats until no action with a positive value can be found. At that point, execution selects the best branch and continues by executing

it. We might expect that in some circumstances this greedy

approach to observation-making action selection might be

sub-optimal. However, the action selection problem clearly

satisfies the requirements for sub-modularity (Krause and

Guestrin 2007) which guarantees the greedy approach is

close to optimal.

this value when deciding what observation actions to perform. We do this by a straightforward backup of the expected rewards of each plan branch given our current belief

state. The value of U (T, b) (the utility of branch T in belief

state b is computed as follows:

• if T is an empty branch, then U (T, b) is the reward

achieved by that branch of the plan.

• if T consists of a state-changing action a followed by the

rest of the branch T , then U (T, b) = U (T , b) − cost(a),

that is, we subtract the cost of this action from the utility

of the branch.

• if T consists of an observation-making action o on

some variable d (observation-making actions for each

variable will appear at most once), then U (T, b) =

d b(di )U (Ti , b) − cost(o), that is, we weight the value

of each branch at o by our current belief about d.

This ability to estimate the value of each branch is in contrast to the alternative approach of replanning (e.g. see (Goebelbecker, Gretton, and Dearden 2011), which we discuss in

more detail in Section 5) where the utility of the future plan

is impossible to determine since you cannot be sure what

plan will actually be executed until the replanning has occurred. Even in our case, we cannot compute this value

exactly as we don’t know what additional actions execution monitoring will add to the plan. However, since FF

will choose the minimum cost observational action2 we can

be sure that the cost we estimate for the tree by the procedure described above will be an underestimate, thus ensuring

that execution monitoring will never perform fewer observational actions than are needed to determine which branch to

execute.

For the plan in Figure 1, assuming we get rewards of V + ,

0, and V − for sampling a good rock, taking no sample, and

sampling a bad rock respectively, and costs of Co for observation actions, Cs for sampling actions and Cm for moving

actions, when we reach the observation action for rock R1

in a belief state b, the value of the “good” branch is:

Algorithm 2 Execution monitoring at observation-making

action o

Let c be the variable being observed by o

Let A be the set of actions that provide information about

c

repeat

Let V G(a) be the value gain for a ∈ A according to

Equation 2

Let a∗ = arg maxa V G(a)

if V G(a∗ ) > 0 then

execute a∗ and update the belief state b based on the

observation returned

end if

until V G(a∗ ) ≤ 0

Execute the best branch given the new belief state b according to Equation 3

The restriction that execution monitoring can only choose

among the observation-making actions is important (if we

allow state-changing actions to have non-trivial observations, they may have positive value of information). If execution monitoring was allowed to select actions that changed

the state, the rest of the plan might not be executable from

the changed state. This fact limits the applicability of this

approach in general POMDPs.

One thing worth noting is that the value of information

approach has the ability to choose between multiple observation actions by looking at the value gained by every observation action and picking the one that has the highest value.

After that action is executed, we continue to choose and execute the best observation-making action until there is no

action o with VG(o) > 0.

b(R1 = good)V + + b(R1 = bad)V − − 4Cm − Cs

while the value of the “bad” branch is:

b(R2 = g)V + + b(R2 = b)V − − 3Cm − Cs ,

max

−3Cm

−(2Cm + Co )

Here the top line of the equation is the value of taking the

left branch at R2 , the second line is the value of the right

branch, which is simply the cost of moving to the exit without sampling any rocks, and the bottom line is the penalty

for moving to R2 and observing its value, which applies to

both branches. Note that if the “bad” branch is taken at R1 ,

then in neither case is any reward gained from R1 , so the

belief we have in that rock becomes irrelevant to the plan

value. To compute the value gain for an action o, we compare the value of the best branch given our current belief

state with the value of the best branch given each possible

5

Related Work

Many execution monitoring approaches (Fikes, Hart, and

Nilsson 1972; Giacomo, Reiter, and Soutchanski 1998;

Veloso, Pollack, and Cox 1998) have been developed to detect discrepancies between the actual world state and the

agent’s knowledge of world, and incorporate plan modification or replanning to recover from any such unexpected

state. In most cases the discrepancies that these approaches

are trying to detect result from exogenous events or action

failures. In addition, most of these approaches are only focusing on monitoring the execution of straight-line plans. A

2

This is due to the fact that the determinised versions of the

observation-making actions are identical apart from their costs.

136

6

good survey of these approaches can be found in (Pettersson

2005). However, as none of them are addressing the same

problem of partial observability as we investigate, their approaches are not comparable with ours.

Experimental Evaluation

We tested our approach on the classical POMDP problem

RockSample (Smith and Simmons 2004). As described in

Section 2, there are five state-changing actions in the domain: four moving actions and one sampling action. Each

rock has an observation action which is not perfectly reliable. A reward of 20 will be given if the rover samples a

good rock and goes to the exit and a reward of −40 if a

bad rock is sampled. A large penalty is given if there is

no rock at the position of the rover when sampling or if

the rover moves out of grid except to go to the exit. We

write RockSample(n, k) for a n by n grid with k rocks. The

size of the state space is n2 × 2k . To capture the idea that

observation-making actions are usually faster and cheaper

than state-changing actions, we use a version of RockSample with costs on the actions: The movement actions have

cost two, while all others have cost one. An example of

RockSample(4, 2) is given in Figure 1.

For comparison purposes we wanted to use an optimal

plan as computed by a POMDP solver. The only one we

could find that would solve RockSample problems of interesting size was symbolic Perseus (Poupart 2005), which is a

point-based solver that uses a structured representation. This

algorithm is only approximately optimal, and the quality of

the policies found depends strongly on how the points for the

approximation are selected, however, having run the algorithm with a range of different parameters, we are fairly confident that the policies found are very close to optimal. Unfortunately, incompatibilities between the PDDL and symbolic Perseus domain specification languages meant that we

couldn’t use the standard version of the RockSample problem (Smith and Simmons 2004) directly. To overcome this

we added the exit to the grid and made the goal to go to the

exit with a sample from a good rock, and in addition we removed the ability to observe rocks from a distance, leaving

only a single observe action that works when the rover is in

the same square as the rock. When the robot goes to the exit,

the problem resets.

As well as symbolic Perseus, we also compared with the

same contingent plan without the execution monitoring. For

evaluation we need a plan quality measure which can easily

be computed for both the POMDP and classical planners.

To that end, we compare all the planners in terms of their

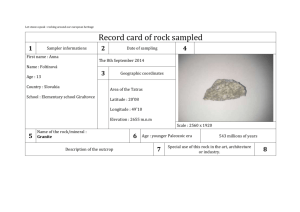

total reward averaged over 200 runs, each of 200 steps. Figure 2 shows the total reward of these three approaches. Any

problems which are larger than size (8,8) cannot be solved

by symbolic Perseus within reasonable time (one hour) and

memory usage. To aid comparison, we show the total reward

for the contingency planning approaches as a fraction of the

expected reward for the optimal policy computed by symbolic Perseus. The error bars are one standard deviation each

side of the mean. The addition of execution monitoring produced a significant improvement over contingency planning

alone, although neither classical planning approach reached

optimal performance for most problems.

The key argument for this work is that the classical

planning approach has a significant speed advantage over

POMDP planning. Figure 3 shows this on the same set of

problems, with time shown on a log scale. Time for generat-

The closest piece of related work to ours from the execution monitoring literature is (Boutilier 2000), which similarly used classical planning plus execution monitoring to

solve problems that could be represented as POMDPs. In

that work the plans are non-branching and the problem is

to decide when to observe the preconditions of actions and

determine if they are true, as opposed to using execution

monitoring to determine which branch to take. In common

with our approach, they use value of information to measure

whether monitoring is worthwhile, but then formulate the

monitoring decision problem as a set of POMDPs, rather

than using value of information directly to select observational actions.

The other most closely related approach is that of (Fritz

2009). They are interested in monitoring plan optimality

and identifying when a discrepancy in the plan is relevant

to the quality of the plan. To show that the current plan is

still optimal, they need to record the criteria that makes the

current plan optimal (i.e. the conditions that make it better

than the next best plan). This allows them to monitor just

those relevant conditions, and in an approach similar to ours,

directly compare the quality of two candidate plans from the

current state.

An alternative to execution monitoring for solving quasideterministic problems efficiently is described in (Goebelbecker, Gretton, and Dearden 2011). There they use a classical planner and a decision-theoretic (DT) planner to solve

these problems, switching between them as they generate a

plan. The approach is similar to ours in that they use FF to

plan in a determinisation of the original problem augmented

with actions to determine the values of state variables, which

they call assumption actions. However, they build linear

plans with FF and switch to the DT planner to improve the

plan whenever an observation action is executed. The DT

planner looks for a plan either to reach the goal, or to disprove one of the assumptions. If this occurs, replanning is

triggered. The advantage of their approach is that it can find

more general plans using the DT planner, so we might expect it to produce slightly better quality plans overall. However, the DT planner is much more computationally expensive than the simple value of information calculation we use.

Classical planners have also been applied in fully observable MDP domains. By far the most successful of these approaches has been FF-replan (Yoon, Fern, and Givan 2007),

from which we have taken the determinisation ideas discussed above. Because these approaches rely on being able

to determine the state after each action, they cannot easily

be applied in POMDPs. Our approach can be thought of as

FF-replan (although we actually build the entire contingent

plan rather than a single branch) where we use the execution monitoring to determine with sufficient probability the

relevant parts of the state.

137

number of actions, and prefers cheaper actions, before deciding that the rock isn’t worth sampling and moving on to

the next. When few untested rocks remain the algorithm is

willing to put a lot more effort into finding out if they are

good since it may otherwise not find any rock to sample.

This illustrates the value of taking into account the branches

in the remainder of the plan when deciding what observations to make.

1

Expected Total Reward/Optimal

0.9

0.8

0.7

7

0.5

Optimal (symbolic Perseus)

FF plus execution monitoring

FF without execution monitoring

0.4

(4,4)

(5,5)

(6,6)

Rocksample problem

(7,7)

(8,8)

Figure 2: Ratio of plan quality to optimal as computed by

Symbolic Perseus.

4

10

3

10

2

Plan generation time

10

Symbolic Perseus

FF with execution monitoring

FF without execution monitoring

1

10

0

10

−1

10

−2

10

(4,4)

(5,5)

(6,6)

Rocksample problem

(7,7)

Conclusion and Future Work

We have presented an approach to solving quasideterministic POMDPs by converting them into a contingency planning problem and using execution monitoring to

repair the plans at run-time. The monitoring approach differs from most other execution monitoring algorithms in that

we are monitoring beliefs about state variables rather than

whether the plan is still executable. The monitoring approach selects actions to make the belief state more certain,

using a value of information-based heuristic. The approach

is orders of magnitude faster than using a POMDP solver,

and our initial experiments suggest that the plans found are

not too far below optimal, and significantly better than without execution monitoring. We are currently working to apply

the approach in a wider set of domains, as well as to compare

it with other approaches, in particular that of (Goebelbecker,

Gretton, and Dearden 2011).

Other future work we are planning includes adding some

of the features of more traditional execution monitoring,

such as consideration of exogenous events and action failures. We would also like to add more of the richness of recent classical planning domains, including durative actions,

uncertainty about action costs and durations, etc. We believe

that many important real-world domains can be represented

as quasi-deterministic problems, and that cheap and fast solutions such as the one we have described here will be key

to solving them on-board autonomous robots.

0.6

(8,8)

Acknowledgements

Figure 3: Plan Generation Time in seconds as a function of

domain size. Note that the y-axis is log scaled.

This research was partly supported by EU FP7 IST Project

CogX FP7-IST-215181.

References

ing policies in Symbolic Perseus grows exponentially, while

generation time for contingent plans is orders of magnitude

less especially when the domain size is large. For instance,

Symbolic Perseus needs about 50 minutes to compute an optimal policy for RockSample(8,8) while FF only needs 0.09s,

and still is within 80% of the optimal policy.

The RockSample domain we have used only has a single

observation action so the actions selected by the execution

monitoring are rather uninteresting, mostly consisting of repeated tries at the action until the same observation is made

twice in a row. However, we have also tested the approach

on a variant where multiple observation actions are available

with different performance in terms of reliability and cost. In

this case we find that the actions selected by execution monitoring vary over the course of the contingency plan. For example, on early rocks, since there are lots of other untested

rocks available, execution monitoring will only use a small

Besse, C., and Chaib-draa, B. 2009. Quasi-deterministic

partially observable Markov decision processes. In Leung,

C.-S.; Lee, M.; and Chan, J. H., eds., ICONIP (1), volume 5863 of Lecture Notes in Computer Science, 237–246.

Springer.

Bonet, B. 2009. Deterministic POMDPs revisited. In Proceedings of the Twenty-Fifth Conference on Uncertainty in

Artificial Intelligence, UAI ’09, 59–66. Arlington, Virginia,

United States: AUAI Press.

Boutilier, C. 2000. Approximately optimal monitoring of

plan preconditions. In In Proceedings of the 16th Conference in Uncertainty in Artificial Intelligence (UAI00, 54–62.

Morgan Kaufmann.

Brenner, M., and Nebel, B. 2006. Continual planning and

acting in dynamic multiagent environments. In Proceedings

138

Wyatt, J. L.; Aydemir, A.; Brenner, M.; Hanheide, M.;

Hawes, N.; Jensfelt, P.; Kristan, M.; Kruijff, G.-J. M.; Lison, P.; Pronobis, A.; Sjöö, K.; Skočaj, D.; Vrečko, A.; Zender, H.; and Zillich, M. 2010. Self-understanding and selfextension: A systems and representational approach. IEEE

Transactions on Autonomous Mental Development 2(4):282

– 303.

Yoon, S. W.; Fern, A.; and Givan, R. 2007. FF-replan: A

baseline for probabilistic planning. In Proceedings of the

Fourteenth International Conference on Automated Planning and Scheduling, 352–.

Younes, H. L. S., and Littman, M. 2004. PPDDL1.0: The

language for the probabilistic part of IPC-4. In Proceedings

of the International Planning Competition.

of the 2006 international symposium on Practical cognitive

agents and robots, PCAR ’06, 15–26. New York, NY, USA:

ACM.

Bresina, J. L., and Morris, P. H. 2007. Mixed-initiative planning in space mission operations. AI Magazine 28(2):75–88.

Bresina, J.; Dearden, R.; Meuleau, N.; Ramkrishnan, S.;

Smith, D.; and Washington, R. 2002. Planning under continuous time and resource uncertainty: A challenge for AI.

In Proc. of UAI-02, 77–84. Morgan Kaufmann.

Cassandra, A. R.; Kaelbling, L. P.; and Littman, M. L. 1994.

Acting optimally in partially observable stochastic domains.

In AAAI’94: Proceedings of the twelfth national conference

on Artificial intelligence (vol. 2), 1023–1028. Menlo Park,

CA, USA: American Association for Artificial Intelligence.

Fikes, R. E.; Hart, P. E.; and Nilsson, N. J. 1972. Learning

and executing generalized robot plans. Artificial Intelligence

3:251–288.

Fritz, C. 2009. Monitoring the generation and execution of

optimal plans. Ph.D. Dissertation, University of Toronto.

Giacomo, G. D.; Reiter, R.; and Soutchanski, M. 1998. Execution monitoring of high-level robot programs. In KR,

453–465.

Goebelbecker, M.; Gretton, C.; and Dearden, R. 2011. A

switching planner for combined task and observation planning. In Proceedings of the 25th Conference on Artificial

Intelligence (AAAI), to appear.

Hoffmann, J., and Nebel, B. 2001. The FF planning system:

Fast plan generation through heuristic search. Journal of

Artificial Intelligence Research 14:263–302.

Howard, R. 1966. Information value theory. Systems Science and Cybernetics, IEEE Transactions on 2(1):22 –26.

Krause, A., and Guestrin, C. 2007. Near-optimal observation selection using submodular functions. In National

Conference on Artificial Intelligence (AAAI), Nectar track.

Pettersson, O. 2005. Execution monitoring in robotics: A

survey. Robotics and Autonomous Systems 53(2):73 – 88.

Poupart, P. 2005. Exploiting Structure to Efficiently Solve

Large Scale Partially Observable Markov Decision Processes. Ph.D. Dissertation, Department of Computer Science, University of Toronto.

Pryor, L., and Collins, G. 1996. Planning for contingencies:

A decision-based approach. Journal of Artificial Intelligence

Research 4:287–339.

Smith, T., and Simmons, R. 2004. Heuristic search value iteration for POMDPs. In Proceedings of the 20th conference

on Uncertainty in artificial intelligence, UAI ’04, 520–527.

Arlington, Virginia, United States: AUAI Press.

Veloso, M. M.; Pollack, M. E.; and Cox, M. T. 1998.

Rationale-based monitoring for planning in dynamic environments. In Proceedings of the Fourth International Conference on Artificial Intelligence Planning Systems, 171–

179. AAAI Press.

Warren, D. H. D. 1976. Generating conditional plans and

programs. In AISB (ECAI)’76, 344–354.

139