Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence

Bayesian Model Averaging Naive Bayes (BMA-NB):

Averaging over an Exponential Number of Feature Models in Linear Time

Ga Wu

Australian National University

Canberra, Australia

wuga214@gmail.com

Scott Sanner

NICTA & Australian National University

Canberra, Australia

ssanner@nicta.com.au

Rodrigo F.S.C. Oliveira

University of Pernambuco

Recife, Brazil

rfsantacruz@gmail.com

to different feature sets, BMA has the advantage over feature

selection that its predictions tend to have lower variance in

comparison to any single model.

While BMA has had some notable successes for averaging over tree-based models such as the context-tree

(CTW) weighting (Willems, Shtarkov, and Tjalkens 1995)

algorithm for sequential prediction, or prunings of decision

trees (Oliver and Dowe 1995), it has not been previously

applied to averaging over all possible feature sets (i.e., the

exponentially sized power set) for classifiers such as NB,

which would correspond to averaging over all nodes of the

feature subset lattice — a problem which defies the simpler

recursions that can be used for BMA on tree structures.

In this paper, we show the somewhat surprising result

that it is indeed possible to exactly evaluate BMA over

the exponentially-sized powerset of NB feature models in

linear-time in the number of features; this yields an algorithm no more expensive to train than a single NB model

with all features, but yet provably converges to the globally

optimal feature subset in the asymptotic limit of data.

This is the first-time we are aware of such an exact lineartime computation result for BMA over the feature subset lattice for any classifier or regressor. While previous work has

proposed algorithms for BMA over the feature subset lattice in generalized linear models such as logistic regression

and linear regression (Raftery 1996; Raftery, Madigan, and

Hoeting 1997; Hoeting et al. 1999), all of these methods either rely on biased approximations or use MCMC methods

to sample the model space with no a priori bound on the

mixing time of such Markov Chains; in contrast our proposal for NB does not approximate and provides an exact

answer in linear-time in the number of features.

We evaluate our novel BMA-NB classifier on a range of

datasets showing that it never underperforms NB (as expected) and sometimes offers performance competitive (or

superior) to classifiers such as SVMs and logistic regression

while taking a fraction of the time to train.

Abstract

Naive Bayes (NB) is well-known to be a simple but effective classifier, especially when combined with feature selection. Unfortunately, feature selection methods

are often greedy and thus cannot guarantee an optimal

feature set is selected. An alternative to feature selection is to use Bayesian model averaging (BMA), which

computes a weighted average over multiple predictors;

when the different predictor models correspond to different feature sets, BMA has the advantage over feature

selection that its predictions tend to have lower variance on average in comparison to any single model.

In this paper, we show for the first time that it is possible to exactly evaluate BMA over the exponentiallysized powerset of NB feature models in linear-time in

the number of features; this yields an algorithm about

as expensive to train as a single NB model with all features, but yet provably converges to the globally optimal

feature subset in the asymptotic limit of data. We evaluate this novel BMA-NB classifier on a range of datasets

showing that it never underperforms NB (as expected)

and sometimes offers performance competitive (or superior) to classifiers such as SVMs and logistic regression while taking a fraction of the time to train.

Introduction

Naive Bayes (NB) is well-known to be a simple but effective

classifier in practice owing to both theoretical justifications

and extensive empirical studies (Langley, Iba, and Thompson 1992; Friedman 1997; Domingos and Pazzani 1997;

Ng and Jordan 2001). At the same time, in domains such

as text classification that tend to have many features, it is

also noted that NB often performs best when combined with

feature selection (Manning, Raghavan, and Schütze 2008;

Fung, Morstatter, and Liu 2011).

Unfortunately, tractable feature selection methods are in

general greedy (Guyon and Elisseeff 2003) and thus cannot

guarantee that an optimal feature set is selected — even for

the training data. An interesting alternative to feature selection is to use Bayesian model averaging (BMA) (Hoeting et

al. 1999), which computes a weighted average over multiple

predictors; when the different predictor models correspond

Preliminaries

We begin by providing a graphical model perspective of

Naive Bayes (NB) classifiers and Bayesian Model Averaging (BMA) that simplify the subsequent presentation and

derivation of our BMA-NB algorithm.

c 2015, Association for the Advancement of Artificial

Copyright Intelligence (www.aaai.org). All rights reserved.

3094

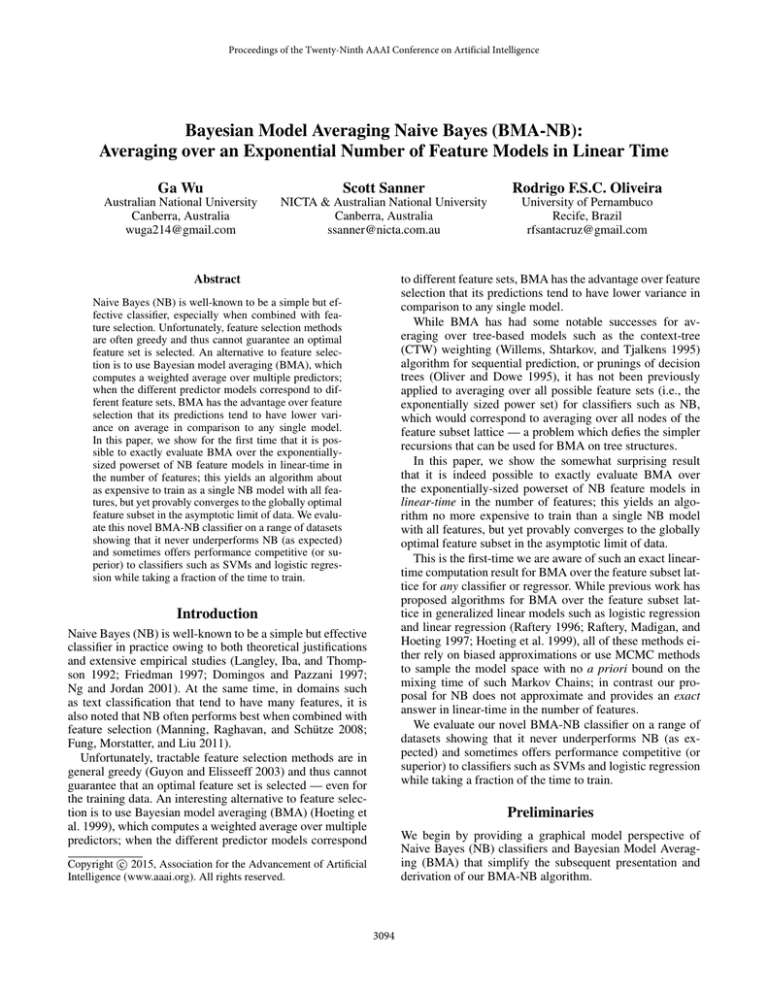

Bayesian Model Averaging (BMA)

yi

xi,k

If we had a class of models m ∈ {1..M }, each of which

specified a different way to generate the feature probabilities P (xi |yi , m), then we can write down a prediction for a

generative1 classification model m as follows:

yi

yi

m

fk

xi

xi,k

k=1..K

k=1..K

i=1..N+1

i=1..N+1

(a)

(b)

P (y, x|m, D) = P (x|m, y, D)P (y|D).

i=1..N+1

While we could select a single model m according to

some predefined criteria, an effective way to combine all

models to produce a lower variance prediction than any single model is to use Bayesian model averaging (Hoeting et

al. 1999) and compute a weighted average over all models:

(c)

Figure 1: Bayesian graphical model representations of (a) Naive

Bayes (NB), (b) Bayesian model averaging (BMA) for generative

models such as NB, and (c) BMA-NB. Shaded nodes are observed.

P (y, x|m, D)

X

=

P (x|m, y, D)P (y|D)P (m|D).

Naive Bayes (NB) Classifiers

Assume we have N i.i.d. data points D = {(yi , xi )} (1 ≤

i ≤ N ) where yi ∈ {1..C} is the discrete class label for datum i and xi = (xi,1 , . . . , xi,K ) is the corresponding vector

of K features for datum i. In a probabilistic setting for classification, given a new feature vector xN +1 (abbreviated x),

our objective is to predict the highest probability class label

yN +1 (abbreviated y) based on our observed data. That is,

for each y, we want to compute

P (y|x, D) ∝ P (y, x, D) =

N

+1

Y

P (yi , xi ),

Here we can view P (m|D) as the weight of model m and

observe that it provides a convex

P combination of model predictions, since by definition: m P (m|D) = 1. BMA has

the convenient property that in the asymptotic limit of data

D, as |D| → ∞, P (m|D) → 1 for the single best model m

(assuming no models are identical) meaning that asymptotically, BMA can be viewed as optimal model selection.

To compute P (m|D), we can apply Bayes rule to obtain

(1)

P (m|D) ∝ P (D|m)P (m) =

where the first proportionality is due to the fact that D and

x are fixed (so the proportionality term is a constant) and

QN +1

the i=1 owes to our i.i.d. assumption for D (we likewise

assume the N +1st data point is also drawn i.i.d.) and the fact

that we can absorb (y, x) = (yN +1 , xN +1 ) into the product

by changing the range of i to include N + 1.

The Naive Bayes independence assumption posits that all

features xi,k (1 ≤ k ≤ K) are conditionally independent

given the class yi . If we additionally assume that P (yi ) and

P (xi,k |yi ) are set to their maximum likelihood (empirical)

estimates given D, we arrive at the following standard result

for NB in (3):

K

Y

i=1

k=1

arg max P (y|x, D) = arg max

y

yN +1

= arg max P (yN +1 )

yN +1

P (yi )

K

Y

N

Y

P (yi , xi |m)

i=1

=

N

Y

P (yi )P (xi |m, yi ). (6)

i=1

Substituting (6) into (5) we arrive at the final simple form,

where we again absorb P (x|m, yN +1 , D)P (y|D) into the

QN +1

renamed N + 1st factor of i=1 :

P (y, x|m, D) ∝

+1

X NY

m

P (yi )P (xi |m, yi ).

(7)

i=1

We pause to make two simple observations on BMA before proceeding to our main result combining BMA and

NB. First, as for NB, we can represent the underlying joint

distribution for (7) as the graphical model in Figure 1(b)

where in this case m is a latent (unobserved) variable that

we marginalize over. Second, unlike the previous result for

NB, we observe that with BMA, we cannot simply absorb

the data likelihood into the proportionality constant since it

depends on the unobserved m and is hence non-constant.

P (xi,k |yi ) (2)

P (xN +1,k |yN +1 )

(5)

m

i=1

N

+1

Y

(4)

(3)

k=1

QN

In (3), we dropped all factors in the i=1 from (2) since

these correspond to data constants in D that do not affect

the arg maxy .

Later when we derive

QN BMA-NB, we’ll see that it is not

possible to drop the i=1 since latent feature selection variables will critically depend on D. To get visual intuition for

this perspective, we pause to provide a graphical model representation of the joint probability for (2) in Figure 1(a) that

we extend later. We indicate all nodes as observed (shaded)

since D and x are given and to evaluate arg maxy , we instantiate y to its possible values in turn.

Main Result: BMA-NB Derivation

Consider a naive combination of the previous sections on

BMA and NB if we consider each model m to correspond to

a different subset of features. Given K features, there are 2K

possible feature subsets in the powerset of features yielding

an exponential number of models m to sum over in (7).

1

A generative model like NB provides a full joint distribution

P (yi , xi ) over class labels and features (Ng and Jordan 2001).

3095

However, if we assume feature selections are independent

of each other (a strong assumption justified in the next section), we can explicitly factorize our representation of m and

exploit this for computational efficiency. Namely, we can

use fk ∈ {0, 1} (1 ≤ k ≤ K) to represent whether feature

xi,k for each datum i is used (1) or not (0).

Considering our model class m to be f = (f1 , . . . , fK ),

we can now combine the naive Bayes factorization of (2)

and BMA from (7) — and their respective graphical models in Figures 1(a,b) — into the BMA-NB model shown

in Figure 1(c) represented by the following joint probability marginalized over the latent model class f and simplified

by exploiting associative and reverse distributive laws:

"K

# N +1

K

X Y

Y

Y

P (y|x, D) =

P (fk )

P (yi ) P (xi,k |fk , yi )

f

k=1

i=1

Feature Model Justification

One may question exactly why implementing P (xi,k |fk , yi )

as in (10) corresponds to a feature selection approach in

Naive Bayes. As already outlined, then fk = 0, this is equivalent to making P (xi,k |fk , yi ) a constant w.r.t. yi and hence

this feature can be ignored when determining the most probable class yi . However there is slightly deeper second justification for this model based on the following feature and

class independence analysis:

QN

QN

i=1 P (xi,k , yi )

i=1 P (xi,k |yi )

=

≥1

(12)

QN

QN

i=1 P (xi,k )P (yi )

i=1 P (xi,k )

On the LHS, we show the ratio of the joint distribution of

feature xi,k and class label yi to the product of their marginal

distributions — a ratio which would equal 1 if the two variables were independent, but which would be greater than 1 if

the variables were dependent — the joint would carry more

information than the product of the marginals. Simply by diQN

viding the LHS through by i=1 (P (yi )/P (yi )) = 1, we

arrive at the equivalent middle term which shows the two

terms used in (10).

From this we can conclude that when feature xi,k and

class label yi are independent (i.e., xi,k carries no information as a predictor of yi ) then P (xi,k |fk , yi ) = P (xi,k ) regardless of fk . Only when P (xi,k |fk , yi ) is predictive would

we expect P (fk = 1|xi,k , yi ) > P (fk = 0|xi,k , yi ) and

hence BMA-NB would tend to include feature k (i.e., models including feature k would have a higher likelihood and

hence a higher model weight), which is precisely the BMA

behavior we desire to weight models with more predictive

features more highly than those with less predictive features.

k=1

" +1

# K

N

+1

Y

XX X NY

Y

...

P (yi )

P (fk )

=

P (xi,k |fk , yi )

f1

f2

fk

i=1

k=1

i=1

"N +1

# K

N

+1

Y

YX

Y

=

P (yi )

P (fk )

P (xi,k |fk , yi )

i=1

k=1 fk

(8)

i=1

Before we proceed further, we need to define specifically what model prior P (fk ) and feature distribution

P (xi,k |fk , yi ) we wish to use for BMA-NB. For P (fk ), we

use the simple unnormalized prior

(

1

if fk = 1

P (fk ) ∝ β

(9)

1 if fk = 0

for some positive constant β.

In a generative model such as NB, what it means for a

feature to be used is quite simple to define:

P (xi,k |yi ) if fk = 1

P (xi,k |fk , yi ) =

(10)

P (xi,k )

if fk = 0

BMA vs. Feature Selection

The analysis in the last subsection suggests that features

which have a higher mutual information (MI) with the class

label will lead to higher weights for models that include

those features. This is encouraging since it is consistent with

the observation that MI is often a good criterion for feature

selection (Manning, Raghavan, and Schütze 2008).

However one may ask: why then bother with BMA-NB

if one could simply use MI as a feature selection criterion

instead? Aside from the tendency of BMA to reduce predictor variance on average compared to the choice of any single model along with the asymptotic convergence of BMA

to the optimal feature subset, there is an additional important reason why BMA might be preferred. Feature selection

requires choosing a threshold on the given criterion (e.g.,

MI), which is another classifier hyperparameter that must be

properly tuned on the training data via cross-validation for

optimal performance. Since this expensive threshold tuning

substantially slows down the training time for naive Bayes

with feature selection, it (somewhat surprisingly) turns out

to be much faster to simply average over all exponential

models in linear time using BMA-NB.

Then whenever fk = 0, P (xi,k |fk , yi ) effectively becomes

a constant that can be ignored in the arg maxy of (2) of NB.

Putting the pieces together and explicitly summing over

fk ∈ {0, 1}, we can continue our derivation from (8):

P (y|x, D) ∝

(11)

"N +1

# K "N +1

#

N +1

Y

Y Y

1 Y

P (xi,k |yi )

P (yi )

P (xi,k ) +

β i=1

i=1

i=1

k=1

And this brings us to a final form for BMA-NB that allows P (y|x, D) to be efficiently computed. Note that here

we have averaged over an exponential number of models f

in linear time in the number of features K. This result critically exploits the fact that NB models do not need to be

retrained for different feature subsets due to the feature conditional independence assumption of NB. As standard for

BMA, we note that in the limit of data D, P (fk |D) → 1 for

the optimal feature selection choice fk = 1 or fk = 0 thus

leading to a linear-time aymptotically optimal feature selection algorithm for NB. Before we proceed to an empirical

evaluation, we pause to discuss a number of design choices

and implementation details that arise in BMA-NB.

Hyperparameter Tuning

Our key hyperparameter to tune in BMA-NB is the constant

β used in the feature inclusion prior (9) for fk . The purpose

3096

of this constant is simply to weight the a priori preference

for models with the feature and without it. Clearly a β = 1

places no prior preference on either model, while β > 1

prefers the simpler model in the absence of any data and

corresponds to a type of Occam’s razor assumption — prefer

the simplest model unless the evidence (Bayesian posterior

for fk ) suggests otherwise.

In experimentation we found that the optimal value of β is

highly sensitive to the amount of data N since the likelihood

QN +1

terms i=1 of (11) scale proportional to N . A stable way

to tune the hyperparameter β across a range of data is to

instead tune Γ defined as

β = ΓN +1

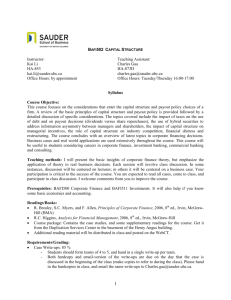

Table 1: UCI datasets for experimentation.

Problem

anneal

autos

vote

sick

crx

mushroom

heart-statlog

segment

labor

vowel

audiology

iris

zoo

lymph

soybean

balance-scale

glass

hepatitis

haberman

(13)

where N is size of training data and we evaluated Γ ∈

{0.5, 0.6, 0.7, 0.8, 0.9, 1, 1.1, 1.2, 1.3, 1.4, 1.5} since, empirically, wider ranges did not lead to better performance.

Conditional Probability Estimation

As usual for NB and other generative classifiers, it is critical to smooth probability estimates for discrete variables to

avoid 0 probabilities (or otherwise low probabilities) for low

frequency features. As often done for NB, we use Dirichlet

prior smoothing for discrete-valued features taking M possible feature values:

V

#D{xk y} + α

P (xk |y) =

(14)

#D{y} + αM

When the Dirichlet prior α = 1, then this approach is called

Laplace smoothing (Mitchell 2010). Note that while α is

also a potential hyperparameter in this work, we keep α = 1

as performance for BMA-NB and NB did not differ significantly for other values of α.

For continuous features xk , we instead model the condi2

tional distribution P (xk |y) = N (µk,y , σk,y

) as a Gaussian

where for each continuous feature k and class label y we

2

estimate the empirical mean µk,y and variance σk,y

for the

corresponding subset of feature data {xi,k |yi = y}i for class

label y.

K

38

26

16

30

16

22

13

19

16

14

69

5

18

19

35

4

10

19

4

|D|

798

205

435

3772

690

8124

270

2310

57

990

226

150

101

148

683

625

214

155

306

C

6

7

2

2

2

2

2

7

2

11

23

3

7

4

19

3

7

2

2

K/|D|

4.76%

12.68%

3.68%

0.80%

2.32%

0.27%

4.81%

0.82%

28.07%

1.41%

30.53%

3.33%

17.82%

12.84%

5.12%

0.64%

4.67%

12.25%

1.31%

Missing?

Yes

Yes

Yes

Yes

No

Yes

No

No

No

No

Yes

No

No

No

Yes

No

No

No

No

Comparison Methodology

In our experimentation, we compare classifiers using a

nested random resampling cross-validation (CV) model to

ensure all classifier hyperparameters were properly tuned via

CV on the training data prior to evaluation on the test data.

All algorithms — NB, BMA, SVM, and Logistic Regression

(LR) — require hyperparameter tuning during nested crossvaliation as outlined below along with other training details

for each algorithm.

The implementation of SVM and LR are from Liblinear

API package (Fan et al. 2008). Both toolkits optimize the

regularized error function

l

X

1

ξ (w; xi ; yi )

min wT w + C

w 2

i=1

(15)

where SVM uses hinge loss for ξ and LR uses log loss for

ξ. Both use a regularization parameter denoted λ, typically

tuned in reciprocal form C = λ1 . C is regarded as penalty

factor or cost factor.2 With large C, the classifiers are more

sensitive to the empirical loss ξ and tend to overfit for high

C, while small C simply prevents learning altogether.

Another parameter of Liblinear package is the constant

bias term B. The following equation corresponds to separating hyperplane for general linear classifiers like SVM and

LR

K

X

y (x, w) =

wk φk (x) + B (w0 )

(16)

Experiments

We evaluated NB, BMA-NB (shortened to just BMA here),

SVM, and Logistic Regression (LR) on a variety of realworld datasets from the UCI machine learning repository (Asuncion and Newman 2007). Characteristics of the

datasets we evaluated are outlined in Table 1.

We compare to the NB classifier with the full feature set

to determine whether BMA always provides better performance than NB as we might expect (since it should place

high weight on the full feature set used by NB if that feature

set offers best performance). We choose both SVM and LR

as discriminative linear classifiers known for their typically

stronger performance but slower training time compared to

NB. While we would not expect generatively trained BMA

to beat disriminatively trained SVM and LR, we are interested to see (a) where BMA falls in the range between SVM

and LR, (b) whether it is significantly faster to train than

SVM and LR, and (c) if there are cases where BMA is competitive with SVM and LR.

k=1

where φk is base function, w is parameter vector and B is

the bias.3

2

We use C to be consistent with the Liblinear software package

we use, but this is not to be confused with the number of classes C;

the intended usage should be clear from context.

3

Note it is not a good idea to regularize B; it is often better to

tune it via cross-validation.

3097

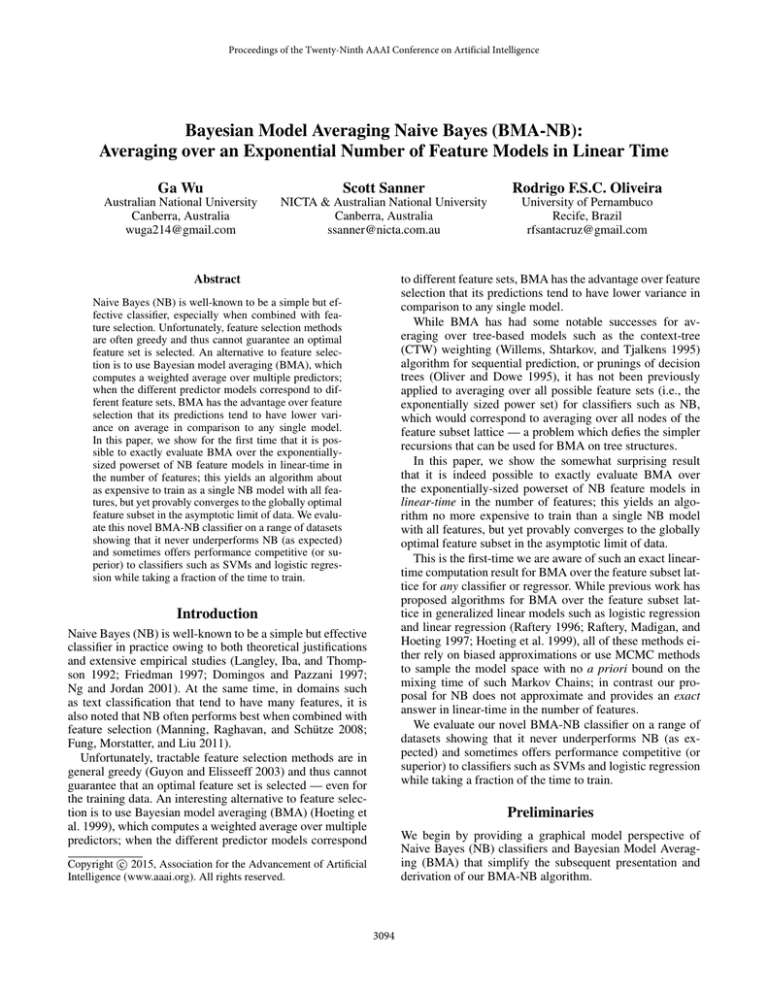

Table 2: (left) Error ±95% confidence interval for each classifier with the best performance average performance shown in bold

— lower is better; (right) training time (ms). Datasets that show a 0.00 error rate appear to be linearly separable.

Problems

anneal

autos

vote

sick

crx

mushroom

heart-statlog

segment

labor

vowel

audiology

iris

zoo

lymph

soybean

balance-scale

glass

hepatitis

haberman

BMA

7.87±2.00

30.00±7.13

4.65±2.25

6.37±0.89

23.19±3.58

1.35±0.29

7.41±3.55

23.81±1.97

0.00±0.00

33.33±3.34

22.73±6.21

6.67±4.54

0.00±f0.00

7.14±4.72

11.76±2.75

9.68±2.63

52.38±7.61

26.67±7.91

33.33±6.00

NB

7.87±2.00

40.00±7.62

11.63±3.42

7.69±0.97

23.19±3.58

3.82±0.47

7.41±3.55

23.81±1.97

0.00±0.00

42.42±3.50

27.27±6.60

13.33±6.18

0.00±0.00

7.14±4.72

11.76±2.75

9.68±2.63

52.38±7.61

26.67±7.91

33.33±6.00

LR

6.74±1.86

25.00±6.74

4.65±2.25

2.65±0.58

18.84±3.32

2.96±0.42

18.52±5.27

10.82±1.44

0.00±0.00

44.44±3.52

9.09±4.26

6.67±4.54

0.00±0.00

14.29±6.41

10.29±2.59

16.13±3.28

33.33±7.18

33.00±8.77

36.67±6.14

SVM

17.98±2.86

55.00±7.74

4.65±2.25

4.51±0.75

21.74±3.50

4.06±0.49

11.11±4.26

14.72±1.64

0.00±0.00

47.47±3.54

13.64±5.09

6.67±4.54

0.00±0.00

14.29±6.41

11.76±2.75

12.90±2.99

47.62±7.61

46.67±8.93

33.33±6.00

Since parameter tuning of SVM and LR is very time consuming, we limited our hyperparameter search to a combination of 36 joint values of B and C as shown below:

C ∈ {0.01, 0.1, 1, 10, 20, 100}

(17)

B ∈ {0.01, 0.1, 1, 10, 20, 100}

(18)

We implement the Naive Bayes classifier ourselves to

guarantee that NB is exactly the special case of BMA where

weight 1 is placed on the model with f = 1 and weight 0 on

other models. As described previously, while β is tuned for

BMA, α = 1 yielded best results for both NB and BMA and

setting α = 1 for both classifiers allows us to evaluate the

performance of BMA’s model averaging vs. the same full

model that NB uses.

Problems

anneal

autos

vote

sick

crx

mushroom

heart-statlog

segment

labor

vowel

audiology

iris

zoo

lymph

soybean

balance-scale

glass

hepatitis

haberman

BMA

526

81

10

202

36

248

18

435

3

105

81

4

6

7

99

15

14

7

4

NB

147

40

6

79

9

98

5

120

3

35

14

2

3

3

18

5

5

3

2

LR

618

183

22

434

61

1072

22

2606

6

557

172

10

31

22

559

40

50

16

11

SVM

2070

1278

33

2475

540

5785

213

6637

27

5266

260

102

222

175

818

542

621

120

157

To continue with our experimentation, we now perform

some specialized experiments for text classification with the

UCI newsgroups dataset to experiment with BMA performance relative to other classifiers as the amount of data

and number of features changes. We choose text classification for this analysis since both data and features are abundant in such tasks and they are also a special case where

NB is known to perform relatively well, especially with

feature selection (Manning, Raghavan, and Schütze 2008;

Fung, Morstatter, and Liu 2011).

We focus on a binary classification problem which is abstracted from the newsgroup dataset. To construct D for our

first task, we chose 1000 data entries selected from two categories of newsgroups — it is an exactly balanced dataset.

The K = 1000 features are selected from 20,000 words with

high frequency of occurrence after removing English stopwords. Results for this text classification analysis are shown

in Figure 2. We average this plot over a large number of

runs to obtain relatively smoothed average performance results, but omit dense confidence intervals to keep the plots

readable.

In Figure 2(left), we observe that after around 150 features selected, BMA, SVM and LR show significantly better

performance than NB. Furthermore, the accuracy of BMA,

SVM and Logistic Regression also asymptote earlier with

BMA showing competitive performance with SVM and LR

for this text classification task with a large number of (correlated) word features.

To show whether training dataset size can significantly

impact the accuracy of news classification task, we refer

to Figure 2(right), which is similar to the setup for Figure 2(left) except with |D| = 4000 and K = 500. These

results show the discriminative classifiers SVM and LR performing better than BMA, but overall asymptoting more

quickly than NB indicating an ability of BMA to place heav-

Experimental Results

Now we proceed to compare each of the classifiers on the

UCI datasets as shown in Table 2.

Here we observe a few general trends:

• BMA never underperforms NB as expected. LR seems to

offer the best performance, edging out SVM since we generally found that LR was more robust during hyperparameter tuning in comparison to SVM.

• In the range of performance between NB and LR/SVM,

BMA twice outperforms LR (when NB performance is

significantly worse) and outperforms SVM even more often! When BMA does worse than LR and SVM, it often

comes close to the best performance in many cases.

• BMA is up to 4 times slower than NB, but it’s significant performance improvement over BN seems worth this

tradeoff.

• BMA is always faster than LR and SVM — up to 5 times

faster than LR in some cases and up to 50 times faster than

the SVM for glass!

3098

Figure 2: (left) Accuracy vs. number of features, (right) accuracy vs. number of training data.

Figure 3: Effect of tuning hyperparameter Γ for BMA on the UCI datasets.

Table 2 where we see that BMA performance matches NB

performance (which uses all features) for the problems in

Figure 3(right).

ier weight on the most useful features when data is limited

and many features are noisy.

Finally we show results for hyperparameter tuning of

BMA across the UCI datasets in Figure 3. We break the results into two sets — Figure 3(left) where there seems to be

an optimal choice for β and Figure 3(right) where the optimal choice seems to be a small β < 1 which places a strong

prior on using all features.

If we examine the dataset characteristics in Table 1, we

see all of the problems in Figure 3(right) have small numbers

of features and large amounts of data; hence it seems that in

these settings all features are useful and thus the hyperparameter tuning is largest for a prior which selects all features.

This is further corroborated by the performance results in

Conversely, we might infer that in Figure 3(left), not all

features in these problems are useful, thus a peak value for

prior β > 1 is best which indicates a preference to exclude

features unless the data likelihood overrides this prior. For

many of these problems in Figure 3(left) we similarly note

that the performance of BMA was better than NB indicating

that BMA may have placed low weight on models with certain (noisy) features, whereas NB was constrained to use all

features and hence performed worse.

3099

Fan, R.-E.; Chang, K.-W.; Hsieh, C.-J.; Wang, X.-R.; and

Lin, C.-J. 2008. Liblinear - a library for large linear classification. The Weka classifier works with version 1.33 of

LIBLINEAR.

Friedman, J. H. 1997. On bias, variance, 0/1 - loss, and

the curse-of-dimensionality. Data Mining and Knowledge

Discovery 1:5577.

Fung, P. C. G.; Morstatter, F.; and Liu, H. 2011. Feature selection strategy in text classification. In Advances in Knowledge Discovery and Data Mining. Springer. 26–37.

Guyon, I., and Elisseeff, A. 2003. An introduction to variable and feature selection. J. Mach. Learn. Res. 3:1157–

1182.

Hoeting, J. A.; Madigan, D.; Raftery, A. E.; and Volinsky,

C. T. 1999. Bayesian model averaging: A tutorial. Statistical

Science 14(4):382–417.

Lafferty, J. D.; McCallum, A.; and Pereira, F. C. N. 2001.

Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the

Eighteenth International Conference on Machine Learning

(ICML-01), ICML ’01, 282–289.

Langley, P.; Iba, W.; and Thompson, K. 1992. An analysis

of bayesian classifiers. In AAAI-92.

Manning, C. D.; Raghavan, P.; and Schütze, H. 2008. Introduction to Information Retrieval. New York, NY, USA:

Cambridge University Press.

Mitchell, T. M. 2010. Generative and discriminative classifier: Naive bayes and logistic regression.

Ng, A. Y., and Jordan, M. I. 2001. On discriminative vs.

generative classifiers: A comparison of logistic regression

and naive bayes. In NIPS, 841–848.

Oliver, J. J., and Dowe, D. L. 1995. On pruning and averaging decision trees. In ICML-95, 430–437. 340 Pine Street,

6th Floor San Francisco, California 94104 U.S.A.: Morgan

Kaufmann.

Raftery, A., and Dean, N. 2006. Variable selection for

model-based clustering. Journal of the American Statistical

Assocation 101:168–178.

Raftery, A.; Karny, M.; and Ettler, P. 2010. Online prediction under model uncertainty via dynamic model averaging:

Application to a cold rolling mill. Technometrics 52:52–66.

Raftery, A. E.; Madigan, D.; and Hoeting, J. A. 1997.

Bayesian model averaging for linear regression models.

Journal of the American Statistical Association

92(437):179–191.

Raftery, A. E. 1996. Approximate Bayes Factors and Accounting for Model Uncertainty in Generalized Linear Models. Biometrika 83(2):251–266.

Willems, F. M. J.; Shtarkov, Y. M.; and Tjalkens, T. J. 1995.

The context-tree weighting method: basic properties. IEEE

Transactions on Information Theory 41(3):653–664.

Conclusion

In this work we presented a novel derivation of BMA for

NB that averaged over the exponential powerset of feature

models in linear time in the number of features. This tends

to yield a lower variance NB predictor (a result of averaging multiple estimators) that converges to the selection of

the best feature subset model in the asymptotic limit of data.

This is the first such result we are aware of for exact BMA

over the powerset of features that does not require approximations or sampling.

Empirically, we observed strong performance from BMANB in comparison to NB, SVMs, and logistic regression

(LR). These results suggest that (1) in return for a fairly constant 4 times slow-down (no matter what dataset size or feature set size), BMA-NB never underperforms NB; (2) sometimes it performs as well as or better than LR or SVM; (3)

it trains faster than LR and SVM and in the best case is up

to 50 times faster than SVM training. These results suggest

that BMA-NB may be a superior general replacement for

NB, and in some cases BMA-NB may even be a reasonable

replacement for LR and SVM, especially when efficient online training is required.

Future work should examine whether the derivational approach used in this paper can extend linear-time BMA over

all feature subsets to other problems. It may be difficult to

do this for the class of discriminative probabilistic classifiers such as logistic regression since each feature subset

leads to a different set of optimal parameters (unlike NB

where the learned parameters for each feature are independent thus preventing the need to retrain NB for each model).

However, BMA has also been applied beyond classification to other problems such as unsupervised learning (Dean

and Raftery 2010; Raftery and Dean 2006) and sequential

models (Raftery, Karny, and Ettler 2010), where techniques

developed in this work may apply. For example, hidden

Markov models (HMMs) are an important generative sequential model with observation independence (as in NB)

that may admit extensions like that developed in BMA-NB;

it would be interesting to explore how such HMM extensions

compare to state-of-the-art linear chain conditional random

fields (CRFs) (Lafferty, McCallum, and Pereira 2001).

Acknowledgements

NICTA is funded by the Australian Government as represented by the Department of Broadband, Communications

and the Digital Economy and the Australian Research Council through the ICT Centre of Excellence program. Rodrigo

F.S.C. Oliveira was sponsored by CNPq - Brazil.

References

Asuncion, A., and Newman, D. J. 2007. UCI machine learning repository.

Dean, N., and Raftery, A. 2010. Latent class analysis variable selection. Annals of the Institute of Statistical Mathematics 62:11–35.

Domingos, P., and Pazzani, M. 1997. On the optimality of

the simple Bayesian classifier under zero-one loss. Machine

Learning 29:103130.

3100